A feature point localization method based on mixed reality

A feature point positioning and mixed reality technology, applied in the fields of image recognition and medical image processing.

Examples

Embodiment 1

[0030] A method for extracting facial image feature point data comprises:

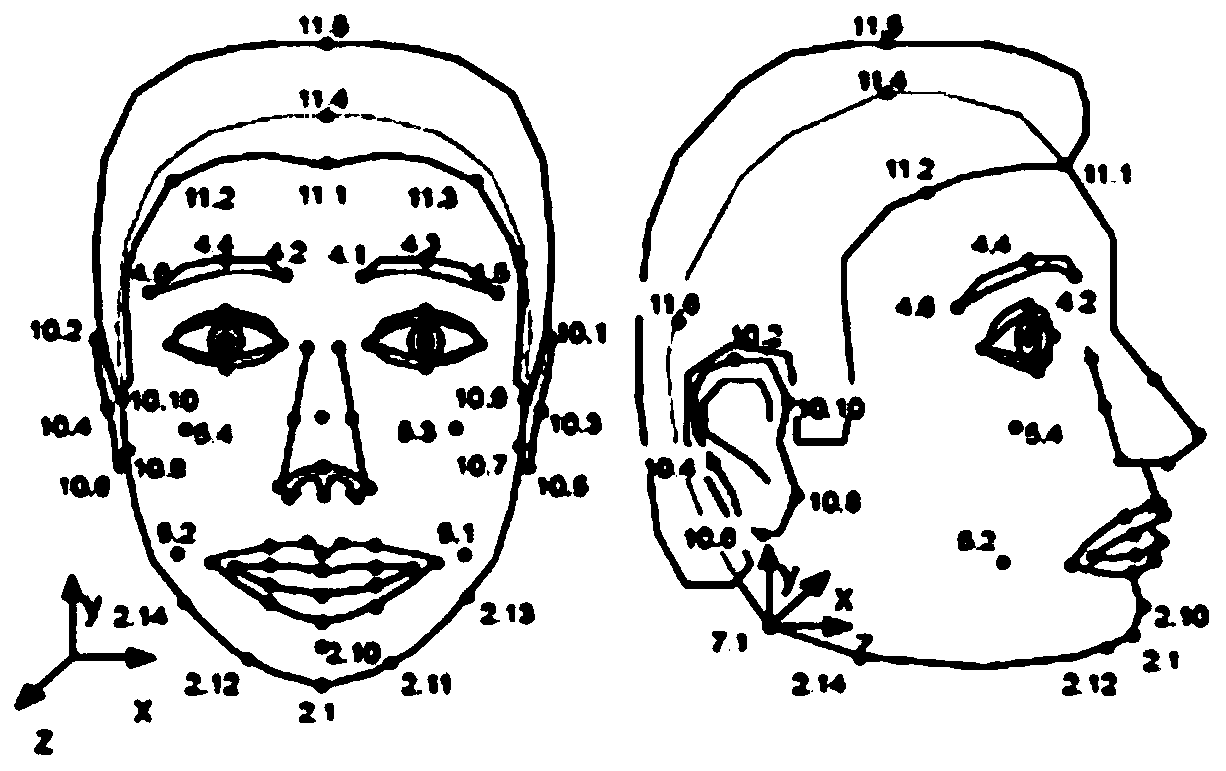

[0031] Step 1: Locate the face using 90 feature points, distributed as follows: 18 points mark the mouth, 14 mark the jaw, 12 mark the eyes, 6 mark the eyebrows, 4 mark the cheeks and jowls, 10 mark the nose, 4 mark the nape of the neck, 10 mark the ears, and 12 mark the hair, as shown in Figure 1.
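As a quick consistency check, the nine region counts in Step 1 add up to the stated 90 points. The Python sketch below assigns each region a contiguous block of point indices in the order Step 1 lists them; that ordering and the region keys are assumptions for illustration, since the patent does not specify how the points are indexed.

```python
# Hypothetical layout: each region gets a contiguous index range, in the
# order Step 1 lists the regions. The patent does not fix an indexing scheme.
FACE_REGIONS = [
    ("mouth", 18),
    ("jaw", 14),
    ("eyes", 12),
    ("eyebrows", 6),
    ("cheeks", 4),
    ("nose", 10),
    ("nape", 4),
    ("ears", 10),
    ("hair", 12),
]


def region_index_ranges(regions):
    """Map each region name to a half-open range of feature-point indices."""
    ranges = {}
    start = 0
    for name, count in regions:
        ranges[name] = range(start, start + count)
        start += count
    return ranges


ranges = region_index_ranges(FACE_REGIONS)
total = sum(count for _, count in FACE_REGIONS)
print(total)  # 90
print(ranges["nose"])  # range(54, 64)
```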

[0032] Step 2: Establish a Cartesian coordinate system with an arbitrarily chosen origin, record the coordinates of each feature point, and construct point cloud data. The point cloud data is a coordinate array with 90 rows and 3 columns: each row represents one feature point, and the three columns hold its x, y, and z coordinates, respectively.
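The 90-row, 3-column layout of Step 2 can be sketched in plain Python as a list of rows. The function `build_point_cloud` and its `origin` parameter are hypothetical names; expressing coordinates relative to a caller-chosen origin is one reading of "any origin", and the sample coordinates are placeholders rather than measured facial data.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]

NUM_FEATURE_POINTS = 90  # fixed by Step 1


def build_point_cloud(
    points: Sequence[Point3], origin: Point3 = (0.0, 0.0, 0.0)
) -> List[List[float]]:
    """Return a 90-row, 3-column array: one row per feature point,
    columns holding x, y, z measured relative to the chosen origin."""
    if len(points) != NUM_FEATURE_POINTS:
        raise ValueError(f"expected {NUM_FEATURE_POINTS} points, got {len(points)}")
    ox, oy, oz = origin
    # Choosing a different origin is a pure translation of every row.
    return [[x - ox, y - oy, z - oz] for (x, y, z) in points]


# Placeholder coordinates for illustration only (not real facial data).
sample = [(float(i), 2.0 * i, 0.5) for i in range(NUM_FEATURE_POINTS)]
cloud = build_point_cloud(sample, origin=(1.0, 1.0, 0.0))
print(len(cloud), len(cloud[0]))  # 90 3
print(cloud[10])  # [9.0, 19.0, 0.5]
```

Because the origin only shifts every row by the same vector, distances between feature points are unaffected by where the origin is placed.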

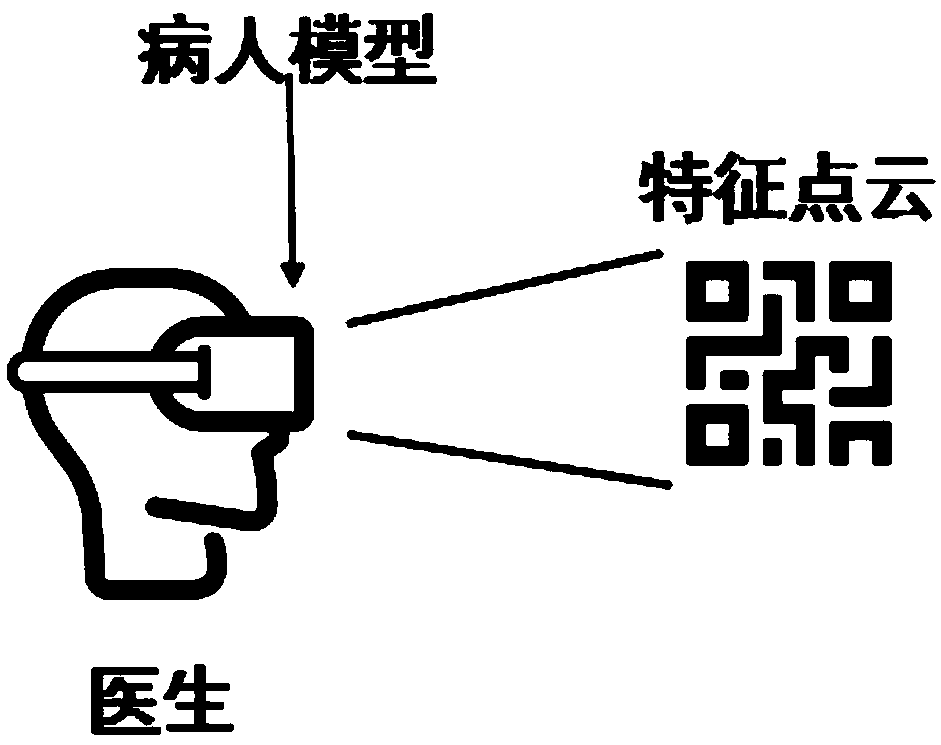

[0033] Step 3: Convert the point cloud data into a QR code for storage, so that mixed reality devices can conveniently scan and identify it.
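The patent does not say how the point cloud is encoded before it is turned into a QR code. One possible approach, sketched here under that assumption, is to serialize the rows into a compact text payload and check it against QR capacity: the largest standard QR code (version 40, error-correction level L) holds at most 2,953 bytes in byte mode. The format (`x,y,z` rows separated by `;`), the two-decimal precision, and the function names are hypothetical. Rendering the payload as an image would require a QR library, for example the third-party Python package `qrcode` via `qrcode.make(payload)`, which is omitted here.

```python
from typing import List

# Byte-mode capacity of a version 40 QR code at error-correction level L,
# the largest standard symbol.
QR_V40_L_BYTE_CAPACITY = 2953


def encode_point_cloud(cloud: List[List[float]], decimals: int = 2) -> str:
    """Serialize rows as 'x,y,z', separated by ';' (hypothetical format)."""
    return ";".join(",".join(f"{v:.{decimals}f}" for v in row) for row in cloud)


def decode_point_cloud(payload: str) -> List[List[float]]:
    """Inverse of encode_point_cloud, as a scanning device might apply it."""
    return [[float(v) for v in row.split(",")] for row in payload.split(";")]


def fits_in_qr(payload: str) -> bool:
    """True if the UTF-8 payload fits in a single version 40-L QR code."""
    return len(payload.encode("utf-8")) <= QR_V40_L_BYTE_CAPACITY


# Placeholder 90 x 3 point cloud; values are exact at two decimals.
cloud = [[i * 0.5, -i * 0.25, 10.0] for i in range(90)]
payload = encode_point_cloud(cloud)
print(fits_in_qr(payload))  # True
print(decode_point_cloud(payload) == cloud)  # True
```

The sample payload stays well under the 2,953-byte limit, so a single QR code suffices; coordinates with more digits or higher precision would need the capacity check to be repeated.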
