A pedestrian re-identification method based on transfer learning and feature fusion

A pedestrian re-identification technique based on transfer learning and feature fusion, applied in the field of computer vision research. It addresses the complexity of manually designed features and poor network learning results, and aims for good generalization and portability, good recognition performance, and reduced training time.

Embodiment 1

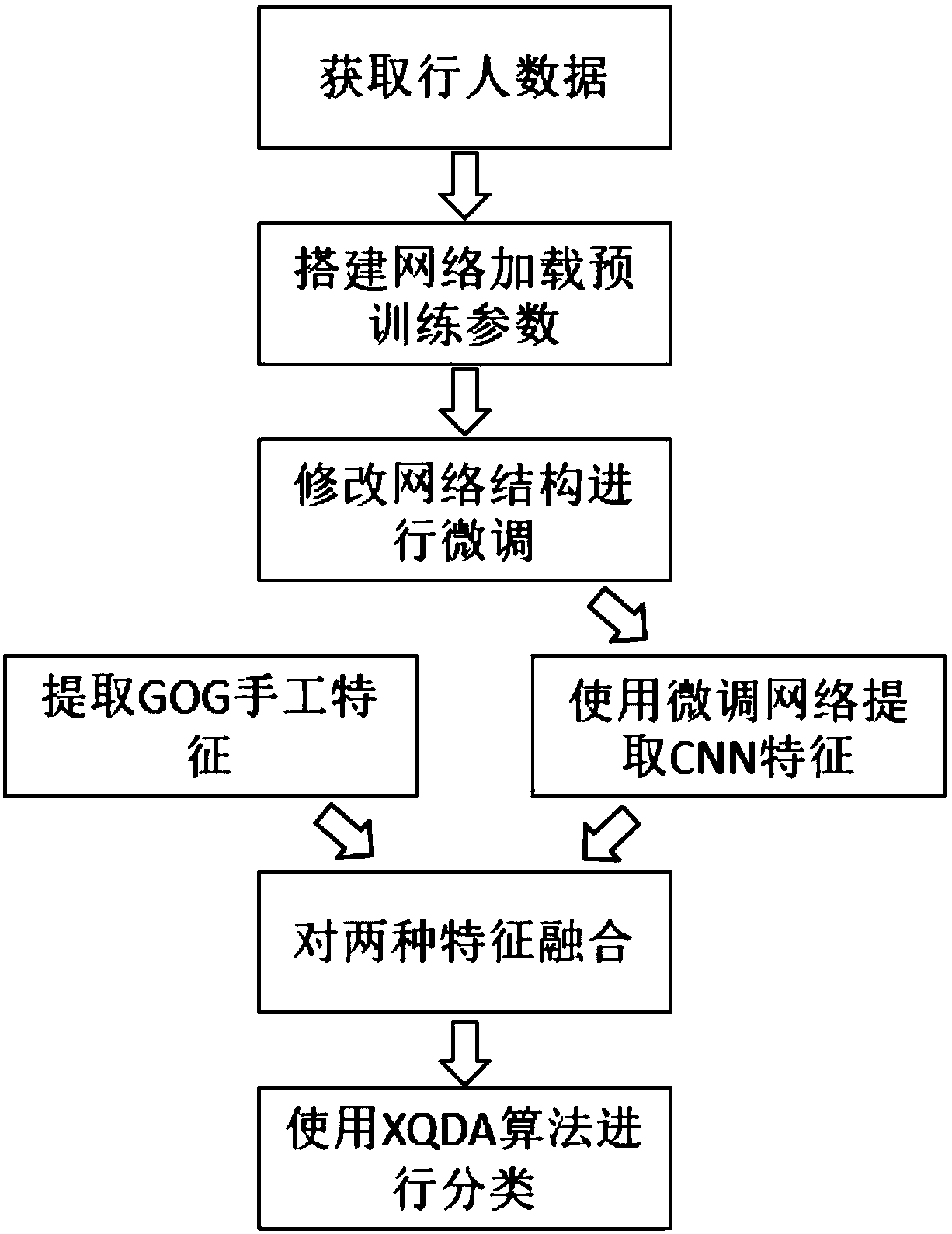

[0047] A pedestrian re-identification method based on transfer learning and feature fusion, characterized in that it comprises the following steps:

[0048] Step 1: Obtain pedestrian data from public datasets;

[0049] The present invention uses four public datasets: VIPeR, CUHK01, GRID, and MARS. These pedestrian datasets are captured by cameras from different angles in real-world conditions; the pedestrian regions are then cropped, either manually or automatically, to obtain pedestrian images. Each pedestrian identity includes multiple images. During the experiments, the data used for verification is divided into two parts: a training set and a test set. MARS is used to fine-tune the pre-trained neural network, and VIPeR, CUHK01, and GRID are used to verify the effect of the present invention.
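The division into a training set and a test set can be illustrated with a minimal sketch. The source does not state how the split is made, so the identity-level split, the 50% ratio, the `split_by_identity` name, and the `(image_path, person_id)` sample layout below are all assumptions chosen for illustration.

```python
import random


def split_by_identity(samples, train_fraction=0.5, seed=0):
    """Split (image_path, person_id) pairs so no identity appears in both sets.

    Hypothetical helper: the split ratio and the identity-level grouping
    are assumptions, not details taken from the source.
    """
    # Collect the distinct pedestrian identities in a reproducible order.
    person_ids = sorted({person_id for _, person_id in samples})
    rng = random.Random(seed)
    rng.shuffle(person_ids)

    # Assign the first share of shuffled identities to the training set.
    n_train = int(len(person_ids) * train_fraction)
    train_ids = set(person_ids[:n_train])

    train = [s for s in samples if s[1] in train_ids]
    test = [s for s in samples if s[1] not in train_ids]
    return train, test


# Toy data: four identities with two images each (file names are made up).
samples = [(f"person{p}_cam{c}.png", p) for p in range(4) for c in range(2)]
train_set, test_set = split_by_identity(samples)
```

Splitting by identity rather than by image keeps every picture of a given pedestrian on one side of the split, so the test set contains only people the model has not seen during training.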

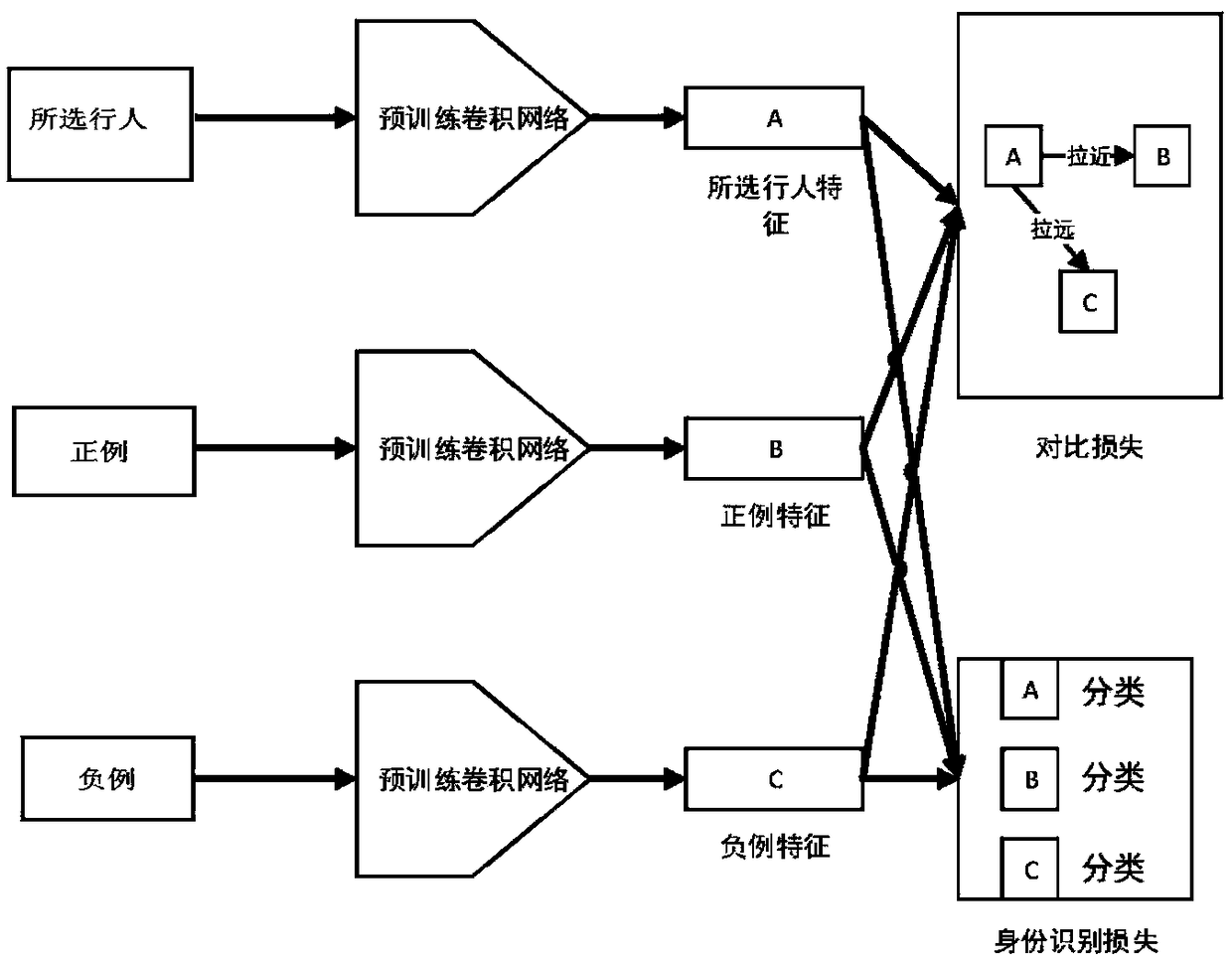

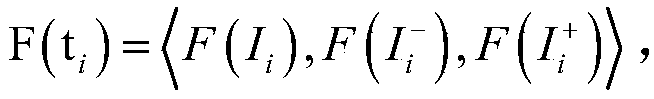

[0050] Step 2: Build a ResNet neural network. ResNet is a deep convolutional neural network with a residual mechanism, consisting of more than ...
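The residual mechanism mentioned above can be sketched in a few lines. This is a simplified illustration, not the network described in the patent: it uses small fully connected layers on plain Python lists instead of convolutions, and the names `linear`, `relu`, and `residual_block` are hypothetical.

```python
def relu(vector):
    """Element-wise rectified linear unit."""
    return [max(0.0, value) for value in vector]


def linear(weights, vector):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def residual_block(x, w1, w2):
    """Compute relu(x + F(x)), where F is two linear layers with a ReLU between.

    The shortcut adds the input x back to the transformed signal F(x),
    so the layers only need to learn a correction to the identity mapping.
    """
    hidden = relu(linear(w1, x))
    fx = linear(w2, hidden)
    return relu([a + b for a, b in zip(x, fx)])


# With all-zero weights F(x) is zero, so the shortcut passes x through.
zeros = [[0.0, 0.0], [0.0, 0.0]]
output = residual_block([1.0, 2.0], zeros, zeros)
```

With zero weights and a non-negative input, the block returns its input unchanged, which shows why stacking many such blocks does not by itself degrade the signal.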
