A method for recognizing human behavior in a video

A video-based recognition technology, applied in character and pattern recognition, image data processing, instruments, and related fields. It addresses the lack of semantic information in the descriptive ability of deep representation methods.


Examples

Embodiment 1

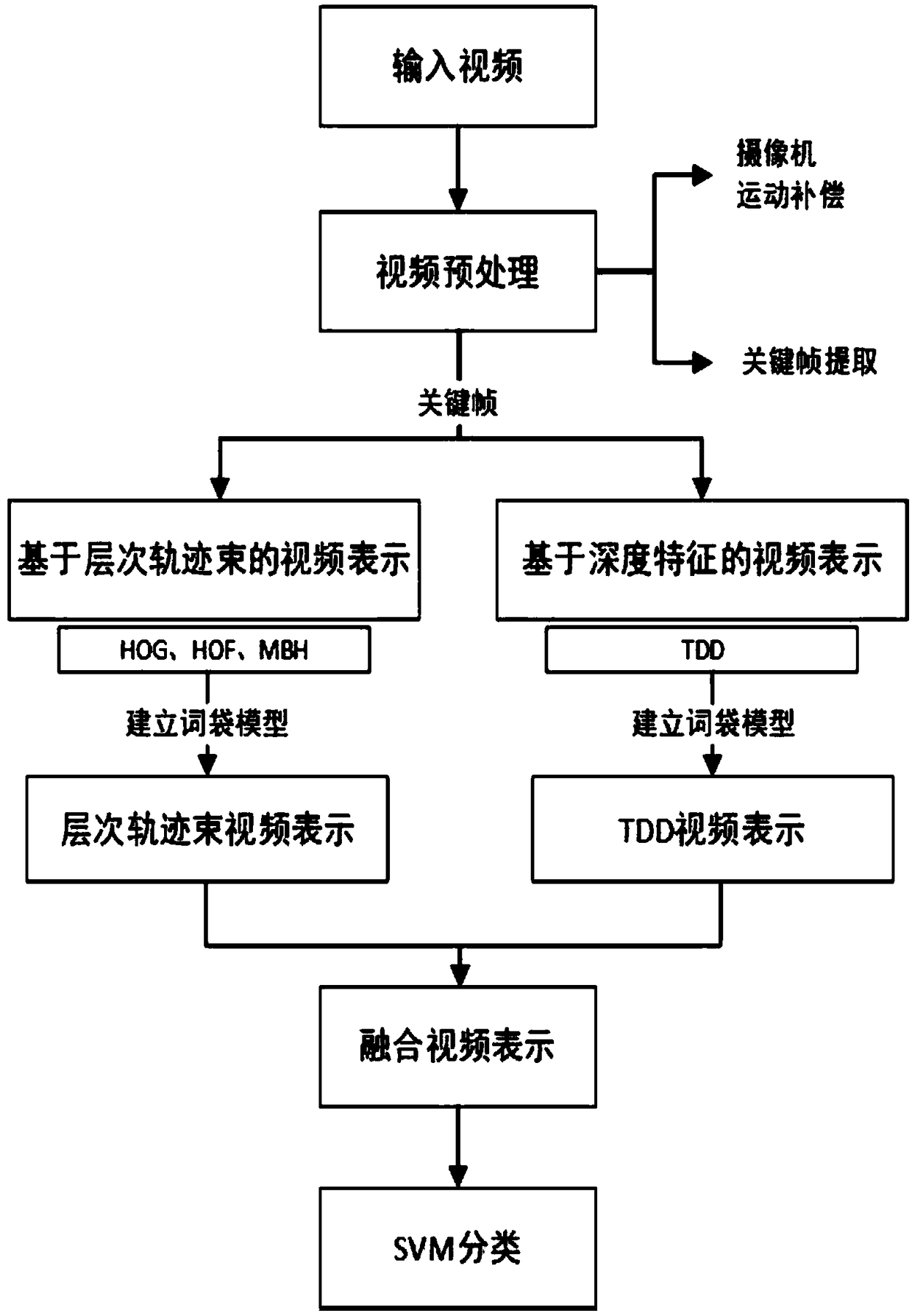

[0068] As shown in Figure 1, a method for recognizing human behavior in a video specifically includes the following steps:

[0069] Step S1: Preprocess the video to obtain the improved dense trajectories of all frames of the entire video;

[0070] Step S2: Extract key frames from the video based on temporal saliency and spatial saliency;

[0071] Step S3: Filter the dense trajectories obtained in step S1 by key frame, retaining the dense trajectories of key frames and removing the dense trajectories of non-key frames;

[0072] Step S4: On the basis of the improved dense trajectories, build a video representation based on hierarchical trajectory bundles;

[0073] Step S5: Extract the deep learning features of the key frames, and build a video representation of the video based on these deep features;

[0074] Step S6: Fuse the video representation based on hierarchical trajectory bundles with the video representation based on deep features;

[0075] Step S7: S...
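Steps S3 and S6 above can be illustrated with a minimal Python sketch. It is not the patented implementation. The `Trajectory` container, the rule that each trajectory is attributed to a single frame index, and the fusion by L2-normalizing and concatenating the two representations are all assumptions made for illustration, since the text here does not specify them.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Set


@dataclass
class Trajectory:
    # Hypothetical container: the frame this trajectory is attributed to
    # (an assumption) and its descriptor vector.
    frame_index: int
    descriptor: List[float]


def filter_by_key_frames(
    trajectories: Sequence[Trajectory], key_frames: Set[int]
) -> List[Trajectory]:
    """Step S3 (sketch): keep trajectories of key frames, drop the rest."""
    return [t for t in trajectories if t.frame_index in key_frames]


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fuse_representations(
    bundle_repr: Sequence[float], deep_repr: Sequence[float]
) -> List[float]:
    """Step S6 (sketch): assumed fusion by normalizing each representation
    and concatenating them into one vector."""
    return l2_normalize(bundle_repr) + l2_normalize(deep_repr)
```

For example, `filter_by_key_frames(trajectories, {0, 5})` keeps only the trajectories attributed to frames 0 and 5, and `fuse_representations(a, b)` returns a vector of length `len(a) + len(b)`. Normalizing before concatenation is one common way to keep either representation from dominating by scale; other fusion schemes would fit the step description equally well.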

Embodiment 2

[0124] The experimental data of this embodiment are as follows:
