An illumination estimation method based on a monocular camera

A technology for monocular cameras and illumination estimation, applied in the fields of computer graphics and artificial intelligence. It addresses the problems that light source information cannot be estimated and that existing approaches are limited or inapplicable when only a monocular camera is available. It avoids insufficient precision and improves effectiveness.

Embodiment

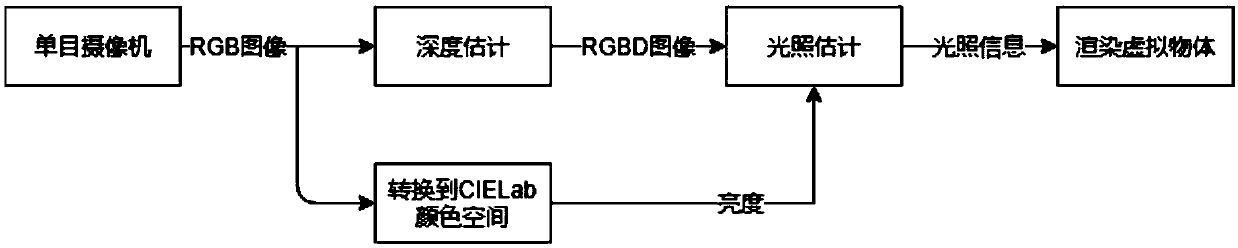

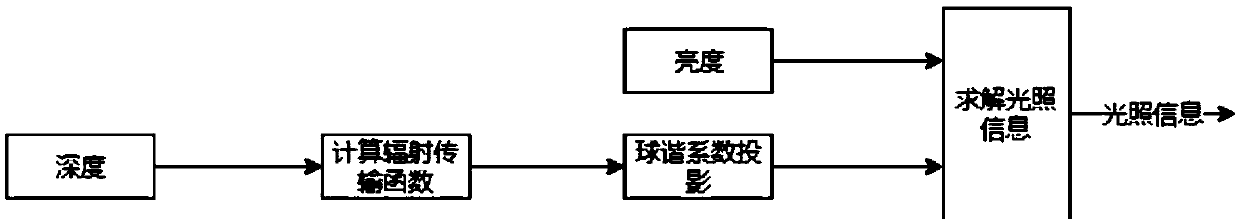

[0020] In this embodiment, the overall flow of the illumination estimation method based on the monocular camera is shown in Figure 1 and includes the following steps:

[0021] In the first step, the monocular camera captures an RGB image, which serves as the input for depth estimation;
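Before a captured frame can be fed to a convolutional neural network, it usually has to be converted into a numeric array in the layout the network expects. The patent does not specify this preprocessing; the sketch below assumes an 8-bit H x W x 3 RGB frame and a network that takes a batch of channel-first float inputs scaled to [0, 1] (a common convention, not a requirement stated in the source). The function name `preprocess_rgb` is hypothetical.

```python
import numpy as np


def preprocess_rgb(frame_hwc_uint8):
    """Convert an H x W x 3 uint8 RGB frame into a 1 x 3 x H x W float32 batch.

    Assumption: the depth network expects channel-first input with pixel
    values scaled to [0, 1]; other networks may need mean/std normalization.
    """
    frame = np.asarray(frame_hwc_uint8)
    if frame.ndim != 3 or frame.shape[2] != 3:
        raise ValueError("expected an H x W x 3 RGB image")
    if frame.dtype != np.uint8:
        raise TypeError("expected 8-bit pixel values")
    # Move channels first (H, W, C) -> (C, H, W) and scale 0..255 to 0..1.
    chw = frame.astype(np.float32).transpose(2, 0, 1) / 255.0
    # Add a leading batch dimension of size 1.
    return chw[np.newaxis, ...]
```

For example, a 2 x 3 frame becomes an array of shape `(1, 3, 2, 3)`, and a pure red pixel maps to `1.0` in channel 0.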

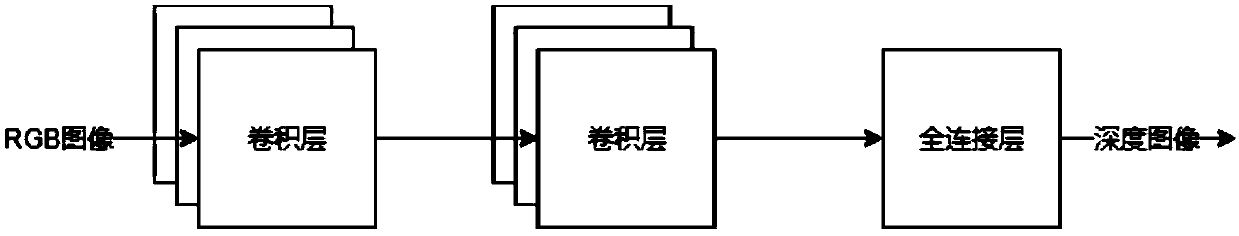

[0022] In the second step, a convolutional neural network for monocular depth estimation is constructed and trained on a public data set. The RGB image is then input to the trained network for depth estimation, which yields depth prediction values and outputs a depth prediction map;
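The patent does not state which loss is used to train the network. Depth annotations in public driving data sets are often sparse, so one reasonable choice is to compare prediction and ground truth only at annotated pixels. The sketch below assumes a plain L1 loss and assumes that a ground-truth value of 0 marks a pixel with no annotation; the function name `masked_l1_depth_loss` is hypothetical.

```python
import numpy as np


def masked_l1_depth_loss(pred_depth, gt_depth):
    """Mean absolute error between predicted and ground-truth depth.

    Assumption: gt_depth is sparse and a value of 0 marks a pixel with no
    annotation, so such pixels are excluded from the average.
    """
    pred = np.asarray(pred_depth, dtype=np.float64)
    gt = np.asarray(gt_depth, dtype=np.float64)
    if pred.shape != gt.shape:
        raise ValueError("prediction and ground truth must have the same shape")
    valid = gt > 0  # boolean mask of annotated pixels
    if not valid.any():
        return 0.0  # no supervision available in this sample
    return float(np.abs(pred[valid] - gt[valid]).mean())
```

With a prediction of `[[1, 2], [3, 4]]` and sparse ground truth `[[0, 2.5], [3, 0]]`, only the two annotated pixels contribute, giving errors of 0.5 and 0.0 and a loss of 0.25. In an actual training loop the same masking would be applied to the framework's tensors so that gradients flow only through annotated pixels.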

[0023] In the depth estimation process of this step, a convolutional neural network is first constructed to predict the depth information in the monocular RGB image. The training data come from the KITTI public data set, a collection of RGB images with depth annotations. After training is completed, an RGB image is input, and an RGBD image...
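Two small data-handling steps surround this process. KITTI depth annotations are distributed as 16-bit PNG images in which depth in meters equals the stored value divided by 256, and a stored value of 0 means no measurement. Combining an RGB image with a per-pixel depth map gives a four-channel RGBD array. The sketch below assumes the PNG has already been loaded as a `uint16` array and that RGBD is represented by stacking depth as a fourth channel; the patent's exact RGBD format is not given, and the names `decode_kitti_depth` and `make_rgbd` are hypothetical.

```python
import numpy as np


def decode_kitti_depth(png_values):
    """Convert raw KITTI 16-bit depth PNG values to meters plus a validity mask."""
    raw = np.asarray(png_values)
    if raw.dtype != np.uint16:
        raise TypeError("expected uint16 values read from a 16-bit PNG")
    depth = raw.astype(np.float32) / 256.0  # stored value / 256 = depth in meters
    valid = raw > 0  # 0 means the pixel has no ground-truth measurement
    return depth, valid


def make_rgbd(rgb, depth):
    """Stack an H x W x 3 RGB image and an H x W depth map into H x W x 4.

    Assumption: RGBD is represented as depth appended as a fourth channel.
    """
    rgb = np.asarray(rgb, dtype=np.float32)
    depth = np.asarray(depth, dtype=np.float32)
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an H x W x 3 RGB image")
    if depth.shape != rgb.shape[:2]:
        raise ValueError("depth map must match the image height and width")
    return np.concatenate([rgb, depth[..., np.newaxis]], axis=2)
```

For example, raw values `[[0, 256], [512, 1280]]` decode to depths of 0, 1, 2 and 5 meters, with only the first pixel marked invalid; stacking that map onto a 2 x 2 RGB image yields a `(2, 2, 4)` array whose last channel holds the depth.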
