Real-time subtitle translation and system implementation method for live broadcast scenes

A technology relating to subtitles and their system implementation, applied in the field of deep learning. It can solve problems such as the limited expressive ability of convolution layers, the inability to see a sufficiently long speech context, and high latency, and it achieves an excellent user experience, solves the real-time problem, and improves efficiency.
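One of the problems named above is that convolution layers cannot see a long enough speech context. How much context a stack of convolutions sees is set by its receptive field. The sketch below is an illustration, not part of the patent. It computes that receptive field with the standard recurrence r ← r + (k − 1)·d·j, j ← j·s, assuming 1-D convolutions along the time axis with kernel sizes k, strides s and dilations d. The helper name `receptive_field` is hypothetical.

```python
def receptive_field(kernel_sizes, strides=None, dilations=None):
    """Number of consecutive input frames that influence one output frame of a
    stack of 1-D convolutions applied along the time axis (hypothetical helper)."""
    n = len(kernel_sizes)
    strides = strides or [1] * n
    dilations = dilations or [1] * n
    rf = 1    # one output frame depends on at least one input frame
    jump = 1  # distance, in input frames, between neighboring positions of the current layer
    for k, s, d in zip(kernel_sizes, strides, dilations):
        rf += (k - 1) * d * jump  # each layer widens the window by (k - 1) dilated taps
        jump *= s                 # striding spreads later taps further apart in the input
    return rf


if __name__ == "__main__":
    print(receptive_field([3] * 10))                        # 21
    print(receptive_field([3, 3, 3], dilations=[1, 2, 4]))  # 15
```

With an assumed 10 ms frame hop, ten stride-1 layers of kernel size 3 see only 21 frames (about 0.2 s of speech). Striding or dilation grows the window much faster than adding plain layers.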

Embodiment Construction

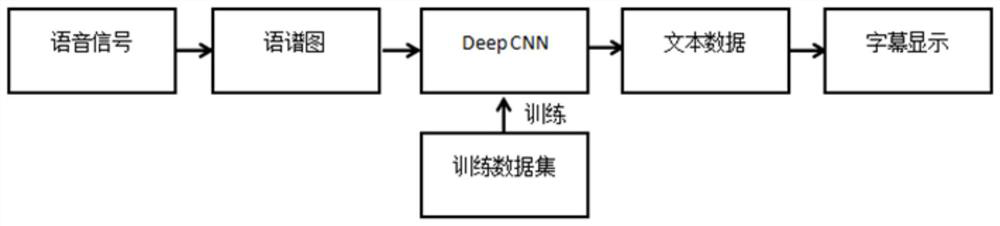

[0033] As shown in Figure 1, the present invention discloses a real-time subtitle translation and system implementation method for live broadcast scenes, characterized in that it comprises the following steps:

[0034] S1. Use the training data set to train the deep convolutional neural network.

[0035] Specifically, S1 includes the following steps:

[0036] S11. Train the deep convolutional neural network on the training data set to obtain a trained deep convolutional neural network;

[0037] S12. Optimize all parameters by gradient descent so as to reduce the cost function;

[0038] S13. Train with gradient descent, updating all weights in every layer of the network.
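S12 and S13 describe gradient descent that lowers a cost function by updating every weight, θ ← θ − η·∂J/∂θ. The patent does not give the cost function or the network shape. The following is therefore only a minimal sketch, assuming a single 1-D convolution layer (kernel plus bias) and a mean-squared-error cost. A real speech recognizer would stack many layers and compute gradients by backpropagation, and it often uses a sequence loss such as CTC. All function names, the learning rate and the toy data are hypothetical.

```python
import random


def conv1d(x, w, b):
    """Valid 1-D convolution (cross-correlation, as in CNN layers) of x with kernel w, plus bias b."""
    k = len(w)
    return [sum(w[j] * x[t + j] for j in range(k)) + b for t in range(len(x) - k + 1)]


def cost(x, y, w, b):
    """Assumed cost function: mean squared error between the layer output and target y."""
    out = conv1d(x, w, b)
    return sum((o - t) ** 2 for o, t in zip(out, y)) / len(out)


def gradient_step(x, y, w, b, lr):
    """One gradient-descent update of every weight: theta <- theta - lr * dJ/dtheta."""
    out = conv1d(x, w, b)
    n = len(out)
    err = [2.0 * (o - t) / n for o, t in zip(out, y)]  # dJ/d(out[t])
    grad_w = [sum(err[t] * x[t + j] for t in range(n)) for j in range(len(w))]
    grad_b = sum(err)
    return [wj - lr * gj for wj, gj in zip(w, grad_w)], b - lr * grad_b


def train(x, y, w, b, lr=0.05, steps=2000):
    """Repeat the update a fixed number of times (no early stopping in this sketch)."""
    for _ in range(steps):
        w, b = gradient_step(x, y, w, b, lr)
    return w, b


if __name__ == "__main__":
    rng = random.Random(0)
    x = [rng.uniform(-1.0, 1.0) for _ in range(40)]  # toy input sequence
    y = conv1d(x, [0.5, -1.0, 0.25], 0.1)            # toy targets from a known kernel
    w0, b0 = [0.0, 0.0, 0.0], 0.0
    w, b = train(x, y, w0, b0)
    print("cost before:", cost(x, y, w0, b0), "after:", cost(x, y, w, b))
```

Because the toy targets come from a known kernel, the trained weights should approach that kernel. This makes it easy to check that every weight, including the bias, is actually being updated.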

[0039] The training data set includes spectrograms of various speech signals and text data corresponding to the speech signals.
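Each training sample therefore pairs a spectrogram with its text. The sketch below shows one way to hold such a pair and to turn the text into integer labels a network can be trained against. It assumes a character-level encoding with label 0 left free (for example, for a CTC blank symbol). `TrainingExample`, `build_vocab` and `encode` are hypothetical names, not taken from the patent.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TrainingExample:
    spectrogram: List[List[float]]  # [time frame][frequency bin] magnitudes of one utterance
    transcript: str                 # text corresponding to that speech signal


def build_vocab(examples: List[TrainingExample]) -> Dict[str, int]:
    """Assign each character seen in the transcripts an integer label.
    Label 0 is left unused (assumption: reserved, e.g. for a CTC blank)."""
    chars = sorted({c for ex in examples for c in ex.transcript})
    return {c: i + 1 for i, c in enumerate(chars)}


def encode(text: str, vocab: Dict[str, int]) -> List[int]:
    """Turn a transcript into the label sequence the network is trained to output."""
    return [vocab[c] for c in text]


if __name__ == "__main__":
    examples = [
        TrainingExample([[0.0] * 4 for _ in range(3)], "hi"),
        TrainingExample([[0.0] * 4 for _ in range(5)], "ok"),
    ]
    vocab = build_vocab(examples)
    print(vocab)                # {'h': 1, 'i': 2, 'k': 3, 'o': 4}
    print(encode("ok", vocab))  # [4, 3]
```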

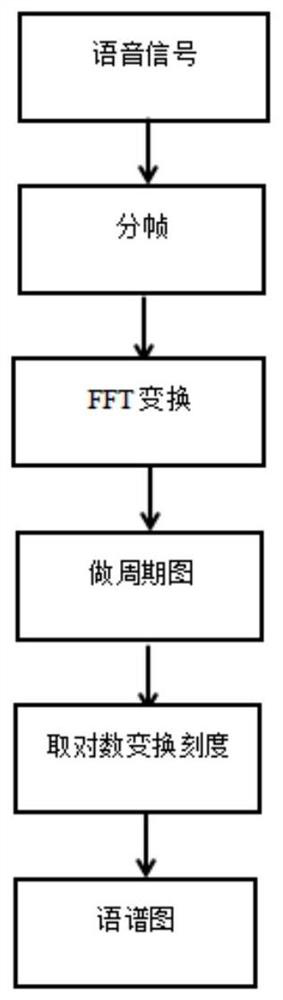

[0040] S2. Perform a Fourier transform on each frame of the input speech, taking time and frequency as the two dimensions of ...
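S2 applies a Fourier transform to each frame of input speech and treats time and frequency as two dimensions. The following is a minimal sketch of that framing-and-transform step. It relies on assumptions not stated in the patent: 64-sample frames with a 32-sample hop, a periodic Hann window, and magnitude spectra. It also uses a direct O(N²) DFT written out for readability, where a real system would use an FFT such as `numpy.fft.rfft`. The function names are hypothetical.

```python
import cmath
import math


def frame_signal(signal, frame_len, hop):
    """Split a 1-D signal into overlapping frames of frame_len samples, hop samples apart."""
    return [signal[s:s + frame_len] for s in range(0, len(signal) - frame_len + 1, hop)]


def dft_magnitude(frame):
    """Magnitude of the discrete Fourier transform for bins 0..N/2 (non-negative frequencies)."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]


def spectrogram(signal, frame_len=64, hop=32):
    """Time-frequency matrix: one row per frame (time), one column per frequency bin."""
    # Assumption: periodic Hann window to reduce spectral leakage at frame edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * t / frame_len) for t in range(frame_len)]
    return [dft_magnitude([s * w for s, w in zip(frame, window)])
            for frame in frame_signal(signal, frame_len, hop)]


if __name__ == "__main__":
    sr = 8000                                                            # assumed sample rate (Hz)
    tone = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(800)]  # 0.1 s, 1 kHz
    spec = spectrogram(tone)
    print(len(spec), "frames x", len(spec[0]), "bins")         # 24 frames x 33 bins
    print(max(range(len(spec[0])), key=spec[0].__getitem__))  # 8
```

At 8 kHz with 64-sample frames the bins are 8000 / 64 = 125 Hz apart, so the 1 kHz tone peaks in bin 8 of every frame. The resulting matrix has time along one axis and frequency along the other.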
