A screen-space-based projection mapping method for video in a 3D scene

Involves 3D scenes and screen-space techniques; applied in the field of texture mapping and projection.

Embodiment Construction

[0035] The present invention will be described in detail below with reference to the embodiments and the accompanying drawings, but the present invention is not limited thereto.

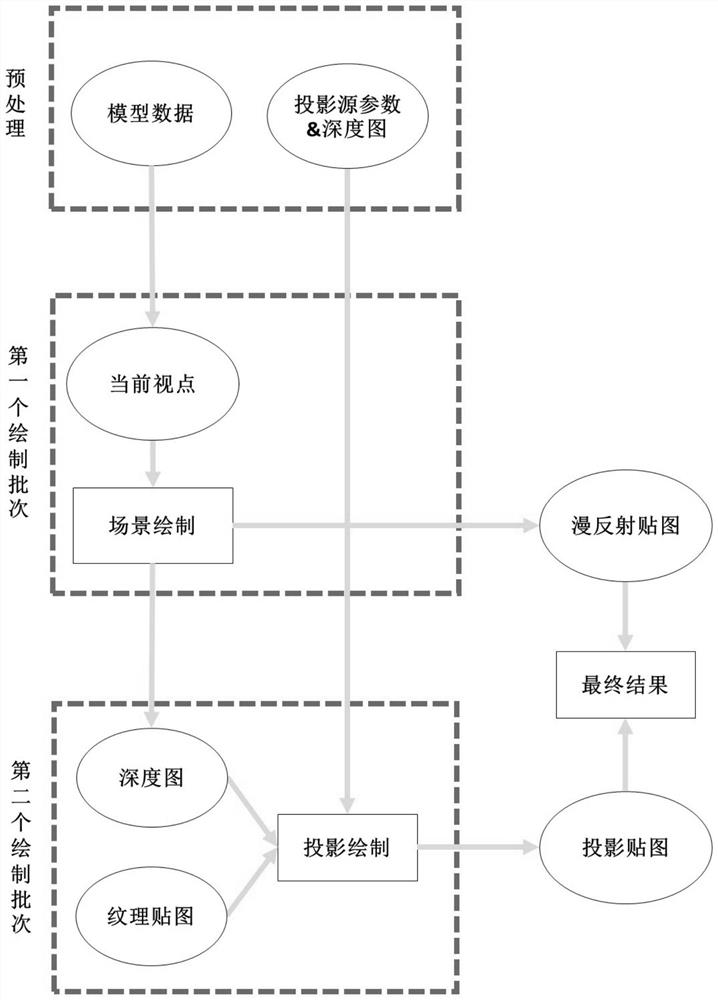

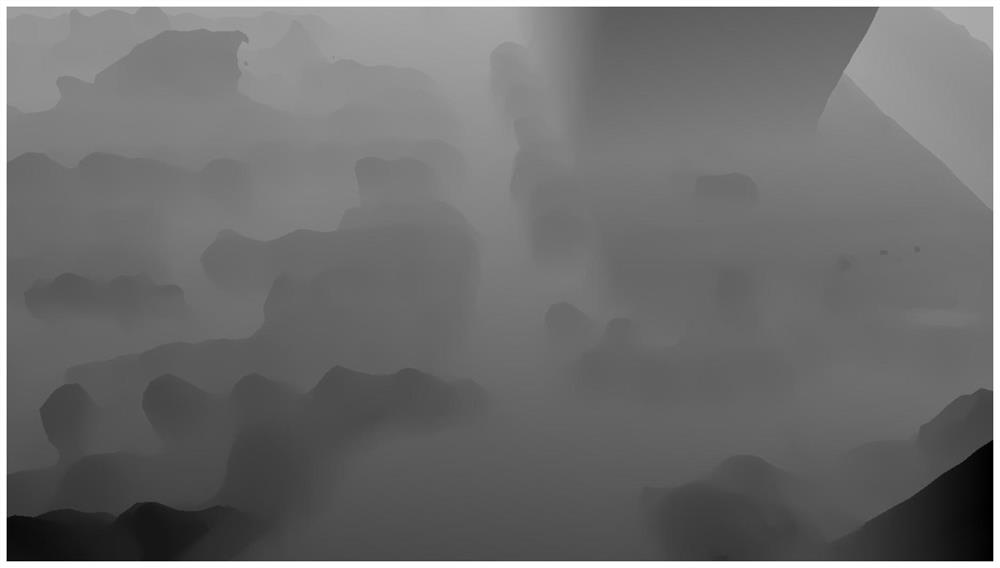

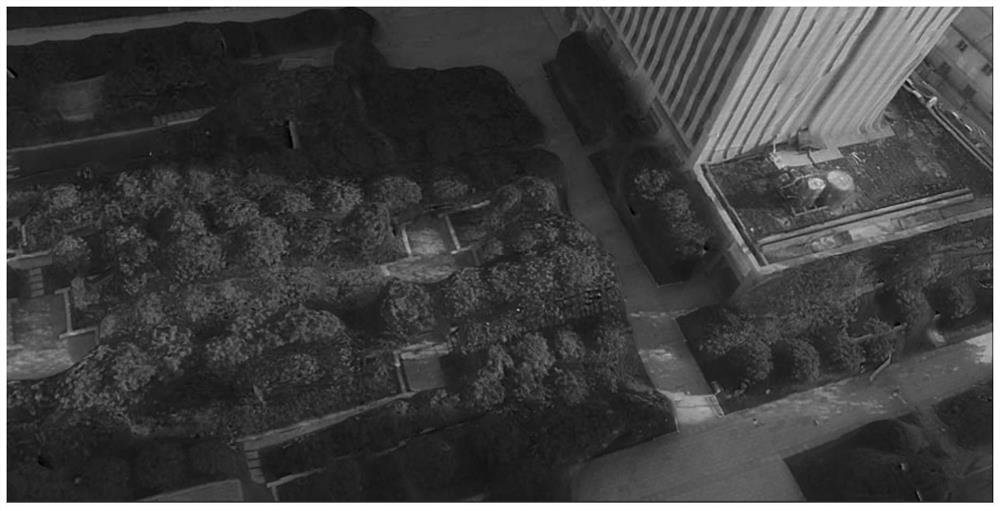

[0036] As shown in Figure 1, the screen-space texture projection mapping algorithm of this embodiment comprises three steps: preprocessing each projection source, drawing the 3D scene from the current viewpoint, and drawing the texture from the projection source.
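The text does not spell out how the projected texture is looked up per pixel. One common screen-space approach, sketched here purely as an assumption, is: for each pixel drawn from the current viewpoint, take its world-space position (assumed to be stored in a per-pixel position buffer during that pass), transform it by the projection source's MVP matrix, perform the perspective divide, and map normalized device coordinates (NDC) to texture coordinates in the current video frame. All function names below (`transform`, `projector_uv`, `sample_nearest`, `shade`) are hypothetical, and occlusion testing against the projection source's own depth is deliberately omitted.

```python
from typing import List, Optional, Sequence, Tuple

Mat4 = Sequence[Sequence[float]]
Vec3 = Tuple[float, float, float]


def transform(m: Mat4, p: Vec3) -> Tuple[float, float, float, float]:
    """Multiply a 4x4 matrix (column-vector convention) by the point (x, y, z, 1)."""
    v = (p[0], p[1], p[2], 1.0)
    x, y, z, w = (sum(m[r][c] * v[c] for c in range(4)) for r in range(4))
    return x, y, z, w


def projector_uv(mvp: Mat4, p: Vec3) -> Optional[Tuple[float, float]]:
    """Texture coordinates of world point p as seen by the projection source, or None."""
    cx, cy, cz, cw = transform(mvp, p)
    if cw <= 0.0:
        return None  # point lies behind the projection source
    nx, ny, nz = cx / cw, cy / cw, cz / cw  # perspective divide -> NDC
    if max(abs(nx), abs(ny), abs(nz)) > 1.0:
        return None  # point lies outside the projection frustum
    return 0.5 * nx + 0.5, 0.5 * ny + 0.5  # NDC [-1, 1] -> UV [0, 1]


def sample_nearest(tex: Sequence[Sequence[object]], u: float, v: float) -> object:
    """Nearest-neighbour lookup; assumes row 0 of tex corresponds to v = 0."""
    h, w = len(tex), len(tex[0])
    return tex[min(int(v * h), h - 1)][min(int(u * w), w - 1)]


def shade(positions, base_colors, mvp: Mat4, frame) -> List[List[object]]:
    """Per pixel: keep the scene colour, or replace it with the projected frame texel."""
    out = []
    for pos_row, col_row in zip(positions, base_colors):
        row = []
        for p, base in zip(pos_row, col_row):
            uv = projector_uv(mvp, p)
            row.append(base if uv is None else sample_nearest(frame, *uv))
        out.append(row)
    return out
```

With an identity MVP, a pixel whose world position is the origin samples the centre of the frame, while a pixel at x = 2 falls outside the frustum and keeps its scene colour. In a real renderer this loop would typically run as a fragment shader over a full-screen pass rather than in Python.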

[0037] (1) Preprocessing

[0038] For each projection source in the scene, parameter information such as its position and orientation in the 3D scene is obtained first. From the acquired intrinsic and extrinsic parameters of the projection source, its model matrix M, view matrix V and projection matrix P are calculated, and the MVP matrix 7T of the projection can be obtained b...
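The text does not give the formulas for M, V and P. Assuming OpenGL conventions (column vectors, right-handed coordinates, the projection source looking down its local -Z axis) and a pinhole intrinsic model whose vertical field of view is derived from a focal length in pixels, the matrices and their product P·V·M can be sketched as below. All function names are hypothetical; `look_at` and `perspective` follow the classic gluLookAt/gluPerspective formulas, not anything stated in the patent.

```python
import math
from typing import List

Mat4 = List[List[float]]


def mat_mul(a: Mat4, b: Mat4) -> Mat4:
    """4x4 matrix product a * b."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def _sub(a, b): return [a[i] - b[i] for i in range(3)]
def _dot(a, b): return sum(a[i] * b[i] for i in range(3))
def _cross(a, b): return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]
def _normalize(a):
    n = math.sqrt(_dot(a, a))
    return [c / n for c in a]


def look_at(eye, target, up) -> Mat4:
    """View matrix V from the extrinsics (position eye, aim point target).

    up must not be parallel to the viewing direction, or the cross product is zero.
    """
    f = _normalize(_sub(target, eye))  # forward
    s = _normalize(_cross(f, up))      # right
    u = _cross(s, f)                   # orthogonalised up
    return [
        [s[0], s[1], s[2], -_dot(s, eye)],
        [u[0], u[1], u[2], -_dot(u, eye)],
        [-f[0], -f[1], -f[2], _dot(f, eye)],
        [0.0, 0.0, 0.0, 1.0],
    ]


def perspective(fovy_rad: float, aspect: float, near: float, far: float) -> Mat4:
    """Projection matrix P mapping the view frustum to clip space (NDC z in [-1, 1])."""
    t = 1.0 / math.tan(fovy_rad / 2.0)
    return [
        [t / aspect, 0.0, 0.0, 0.0],
        [0.0, t, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def fovy_from_focal(fy_pixels: float, image_height: float) -> float:
    """Vertical field of view (radians) of a pinhole camera with focal length fy in pixels."""
    return 2.0 * math.atan(image_height / (2.0 * fy_pixels))


def projector_mvp(model: Mat4, eye, target, up, fovy_rad, aspect, near, far) -> Mat4:
    """MVP = P * V * M for one projection source."""
    view = look_at(eye, target, up)
    proj = perspective(fovy_rad, aspect, near, far)
    return mat_mul(proj, mat_mul(view, model))
```

The resulting matrix maps a homogeneous world-space point into the projection source's clip space; after the perspective divide, points on the near plane land at NDC z = -1, points on the far plane at z = +1, and anything outside [-1, 1] on any axis is outside the source's frustum.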
