Detecting objects in video data

A technique for detecting objects in video data, applied in the field of video processing, that addresses the problem of frequent changes in the position and orientation of the capture device.

Examples

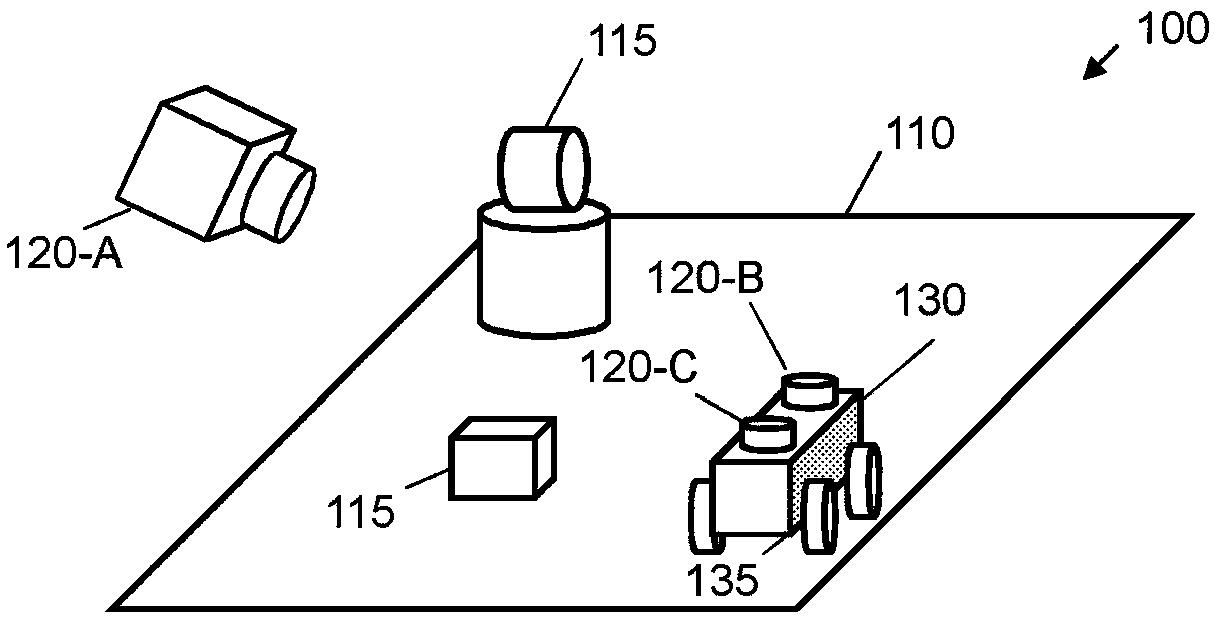

example 100

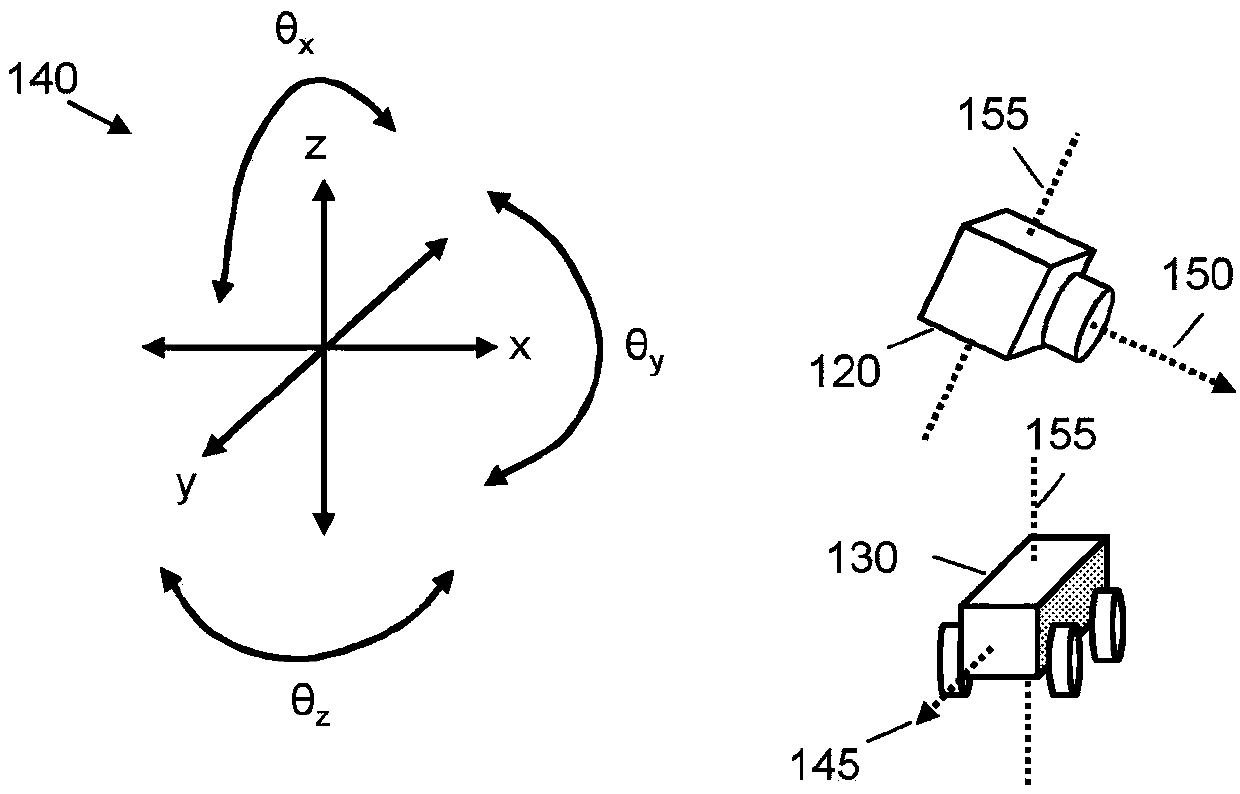

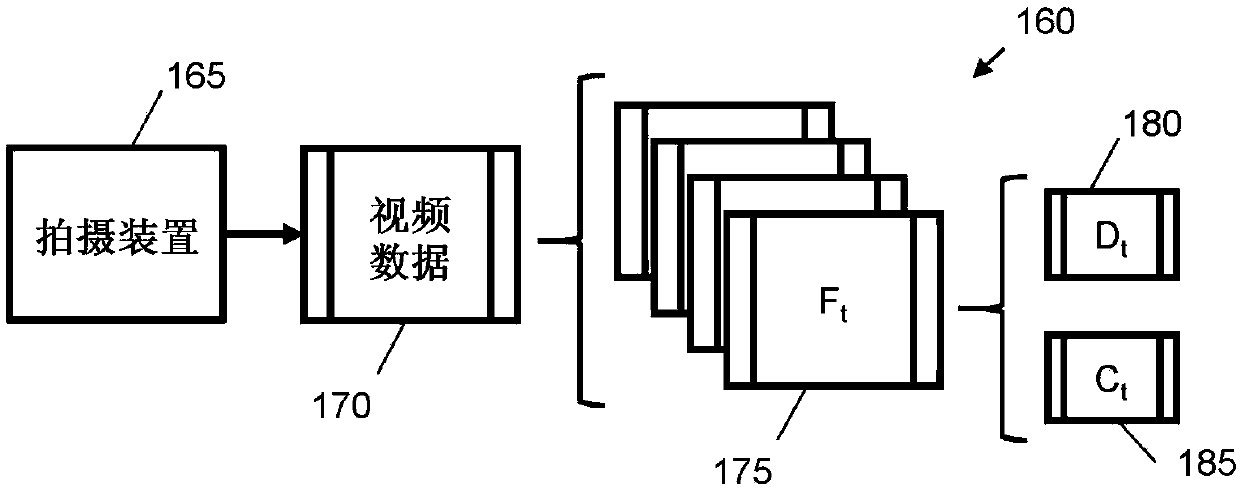

[0052] Example 100 also illustrates various exemplary capture devices 120 that may be used to capture video data associated with a 3D space. A capture device 120 may comprise a camera arranged to record data resulting from observing the 3D space 110, in either digital or analog form. In some cases, the capture device 120 is movable, for example, it may be arranged to capture different frames corresponding to different observed portions of the 3D space 110. The capture device 120 may be movable relative to a static mount, for example, it may include actuators to change the position and/or orientation of the camera with respect to the 3D space 110. In another case, the capture device 120 may be a handheld device operated and moved by a human user.
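By way of illustration only, the effect of changing the position and/or orientation of a capture device can be sketched as follows. This is a minimal sketch and not the method of the description: the names `CapturePose`, `world_to_device` and `actuate` are hypothetical, and orientation is assumed to be reduced to a single yaw angle about a vertical z axis, whereas a real device would generally have a full 3D orientation.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class CapturePose:
    """Hypothetical pose of a capture device: position plus yaw about z."""
    position: Vec3
    yaw: float  # radians, counter-clockwise about the world z axis

    def world_to_device(self, point: Vec3) -> Vec3:
        """Express a world-frame point in the device's own frame."""
        dx = point[0] - self.position[0]
        dy = point[1] - self.position[1]
        dz = point[2] - self.position[2]
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        # Apply the inverse (transpose) of the yaw rotation to the offset.
        return (c * dx + s * dy, -s * dx + c * dy, dz)


def actuate(pose: CapturePose, translation: Vec3, delta_yaw: float) -> CapturePose:
    """Return a new pose after moving and/or turning the device (assumed model)."""
    x, y, z = pose.position
    tx, ty, tz = translation
    return CapturePose((x + tx, y + ty, z + tz), pose.yaw + delta_yaw)


# A fixed point in the 3D space is observed from two different poses.
landmark: Vec3 = (2.0, 0.0, 0.0)
before = CapturePose(position=(1.0, 0.0, 0.0), yaw=0.0)
after = actuate(before, translation=(0.0, 0.0, 0.0), delta_yaw=math.pi / 2)

seen_before = before.world_to_device(landmark)
seen_after = after.world_to_device(landmark)
```

The same point in the 3D space yields different device-frame coordinates for each pose, which is why different frames correspond to different observed portions of the space when the device moves.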

[0053] In Figure 1A, a plurality of capture devices 120 are also shown coupled to a robotic device 130 arranged to move within the 3D space 110. Robotic devices 135 may include autonomous aerial and/or land mobile devices. In this example 100, roboti...
