A hardware-oriented acceleration method for sparse convolutional neural network inference

This convolutional neural network hardware implementation technique, applied in the fields of electronic information and deep learning, addresses problems such as load imbalance, internal buffer misalignment, and inefficient accelerator architectures, with the effect of reducing logic complexity and improving overall efficiency.

Embodiment Construction

[0029] The technical solutions and beneficial effects of the present invention will be described in detail below in conjunction with the accompanying drawings.

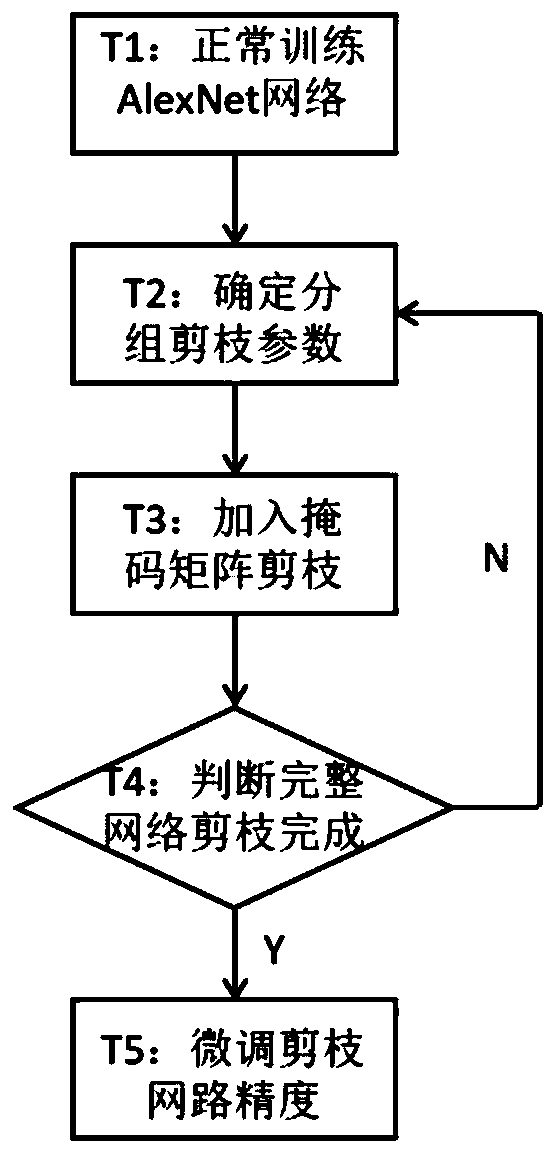

[0030] The present invention provides a hardware-oriented acceleration method for sparse convolutional neural network inference, comprising a method for determining group pruning parameters for a sparse hardware acceleration architecture, a group pruning training method for a sparse hardware acceleration architecture, and a deployment method for forward inference of sparse convolutional neural networks.

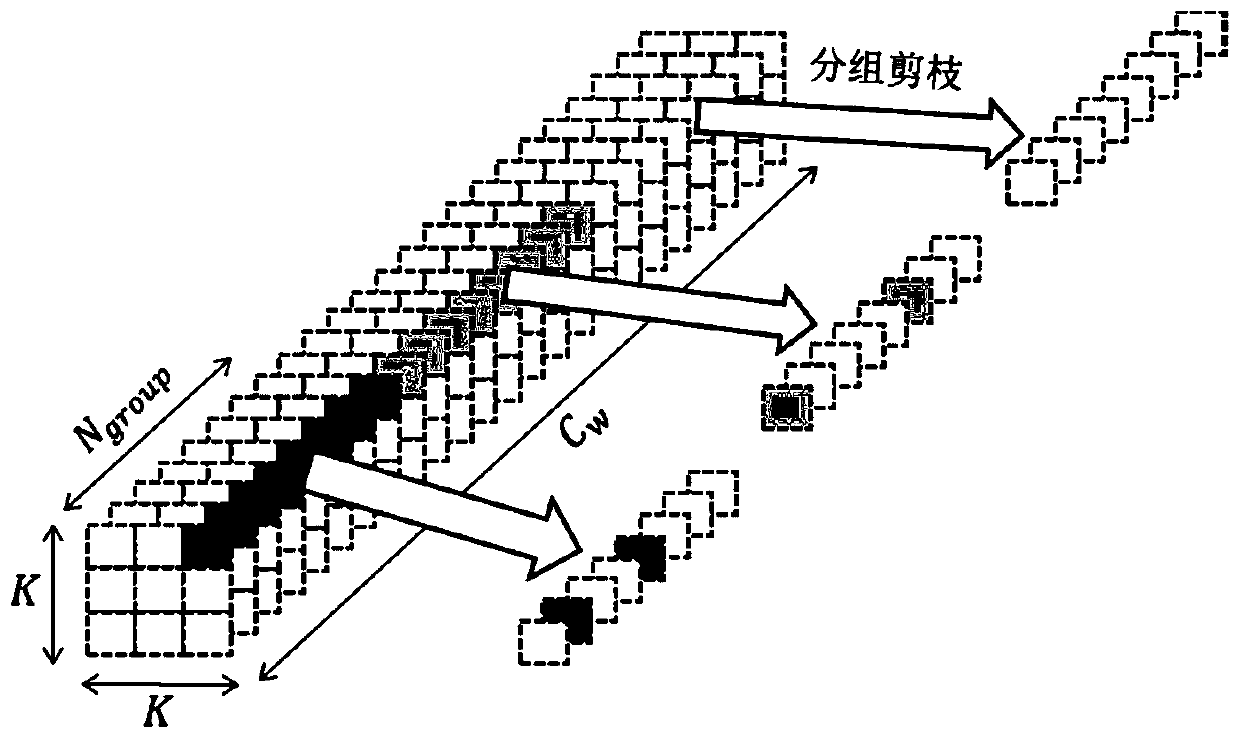

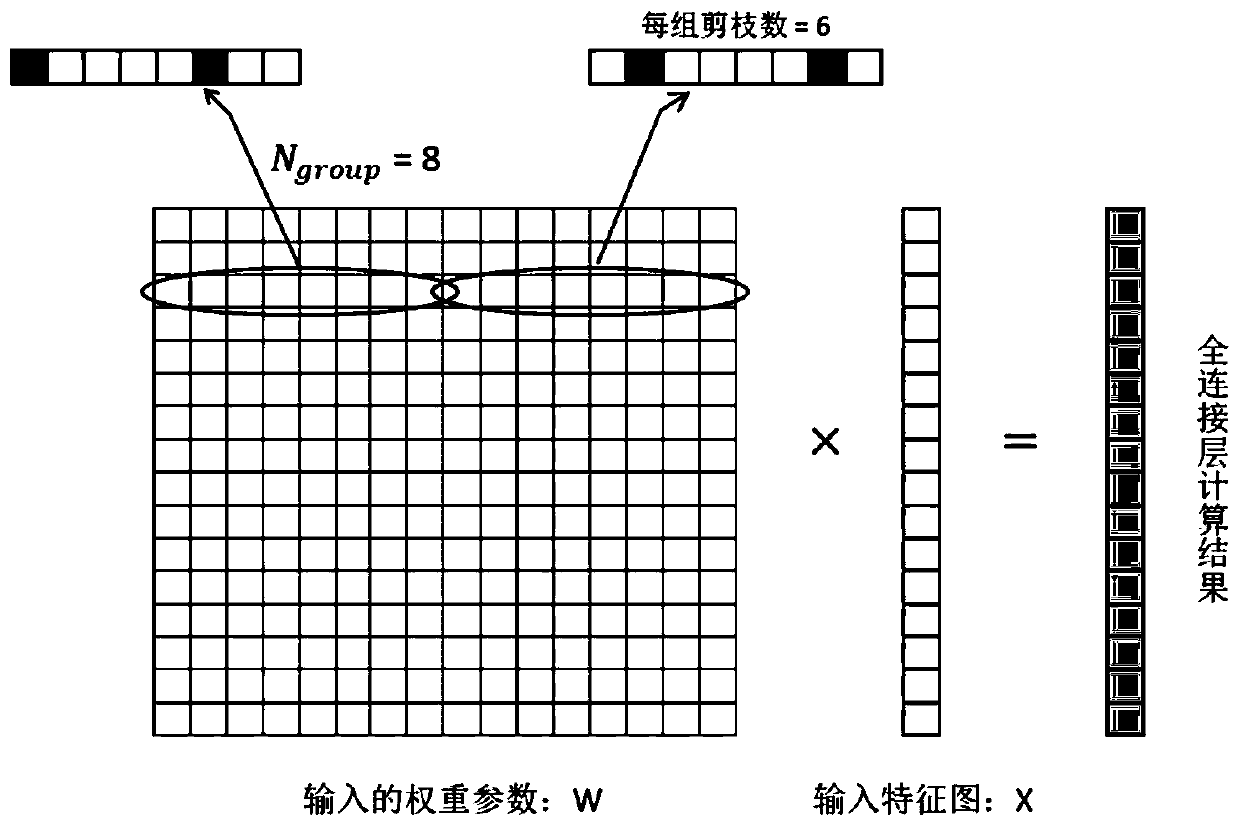

[0031] Figure 1 is a schematic diagram of how the group pruning scheme proposed by the present invention is applied along the channel direction of a convolutional layer. The working method of the present invention is illustrated here using, as an example, a number of activation values fetched per batch N_m = 16, a group length g = 8, and a compression rate Δ = 0.25.
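The example parameters above can be made concrete with a minimal sketch. It rests on assumptions not stated in the text: that the compression rate Δ is the fraction of weights retained in each group (g × Δ = 2 of every 8), and that weights are retained by largest magnitude. The function name `group_prune` and the sample weight values are hypothetical, and the 16-element vector is chosen only so that it spans two groups of length g = 8.

```python
def group_prune(weights, g=8, delta=0.25):
    """Zero all but the largest-magnitude weights in each group of g values.

    Assumption: delta is the fraction of weights kept per group, and
    magnitude is the selection criterion. Neither is confirmed by the source.
    """
    if len(weights) % g != 0:
        raise ValueError("weight count must be a multiple of the group length")
    keep = int(g * delta)  # non-zero weights retained per group (2 here)
    pruned = []
    for start in range(0, len(weights), g):
        group = weights[start:start + g]
        # Positions of the `keep` largest magnitudes within this group.
        top = set(sorted(range(g), key=lambda i: abs(group[i]), reverse=True)[:keep])
        pruned.extend(w if i in top else 0.0 for i, w in enumerate(group))
    return pruned


# Hypothetical weights along the channel direction: two groups of g = 8.
channel_weights = [0.1, -0.9, 0.3, 0.05, 0.7, -0.2, 0.0, 0.4,
                   -0.6, 0.2, 0.15, -0.8, 0.01, 0.3, -0.1, 0.5]
pruned = group_prune(channel_weights)

# Every group ends up with the same number of non-zero weights.
nonzeros_per_group = [sum(1 for w in pruned[s:s + 8] if w != 0.0)
                      for s in range(0, len(pruned), 8)]
```

Because every group holds the same number of non-zero weights under this assumption, each processing unit would receive the same amount of work, which is one plausible way a grouped scheme could address the load imbalance mentioned earlier.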

[...
