View and point cloud fused stereoscopic vision content classification method and system

A stereoscopic vision content classification method and system based on the fusion of views and point clouds, applied in the field of stereo vision. It addresses the problems of information loss, loss of stereo information, and weak feature identification, with the effect of improving reliability, enabling efficient representation and classification, and improving accuracy.

Examples

Embodiment 1

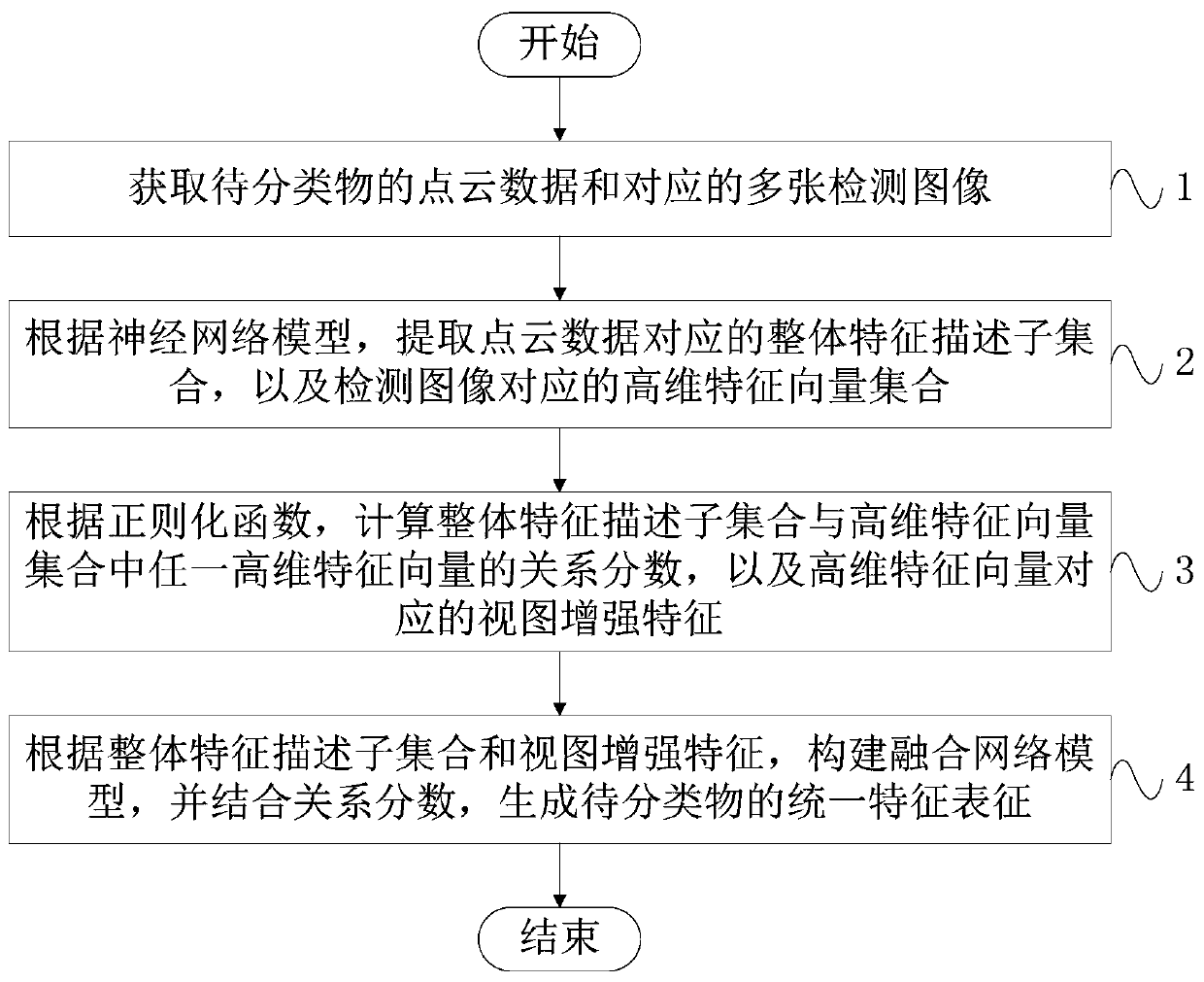

[0024] Embodiment 1 of the present application is described with reference to Figure 1 and Figure 2.

[0025] As shown in Figure 1, this embodiment provides a stereoscopic vision content classification method based on view and point cloud fusion, including:

[0026] Step 1: obtain the point cloud data of the object to be classified and the corresponding multiple detection images;

[0027] Specifically, the object to be classified is scanned by a lidar sensor to obtain a set of its three-dimensional coordinate points, which is recorded as the point cloud data of the object to be classified; the point cloud data usually contains 1024 or 2048 coordinate points. Image acquisition devices set at different angles, such as cameras, then capture multiple detection images of the object from those angles; the detection images usually comprise 8 or 12 views.
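The input described in Step 1 can be pictured as one record pairing a fixed-size point cloud with a fixed number of views. The following is a minimal sketch only, not the method of the application: the names `FusionSample`, `resample_points`, and `build_sample` are hypothetical, and random resampling of the raw scan to 1024 points is an assumption made for illustration (the text states only the usual point and view counts, not how they are reached).

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # one (x, y, z) lidar coordinate


@dataclass
class FusionSample:
    """Hypothetical container for one object to be classified."""
    points: List[Point]  # fixed-size point cloud
    views: List[object]  # one detection image per camera angle


def resample_points(points: Sequence[Point], n_points: int = 1024,
                    seed: int = 0) -> List[Point]:
    """Return exactly n_points points (assumed strategy: random resampling)."""
    if not points:
        raise ValueError("point cloud is empty")
    rng = random.Random(seed)
    pts = list(points)
    if len(pts) >= n_points:
        # Enough points: draw a subset without replacement.
        return rng.sample(pts, n_points)
    # Too few points: keep all of them and pad by drawing with replacement.
    return pts + rng.choices(pts, k=n_points - len(pts))


def build_sample(points: Sequence[Point], views: Sequence[object],
                 n_points: int = 1024,
                 allowed_view_counts: Tuple[int, ...] = (8, 12)) -> FusionSample:
    """Pair a resampled point cloud with its detection images."""
    if len(views) not in allowed_view_counts:
        raise ValueError(f"expected one of {allowed_view_counts} views, "
                         f"got {len(views)}")
    return FusionSample(resample_points(points, n_points), list(views))


# Usage with placeholder data: 3000 scanned points and 12 image file names.
cloud = [(float(i), 0.0, 0.0) for i in range(3000)]
views = [f"view_{k}.png" for k in range(12)]
sample = build_sample(cloud, views)
```

Fixing both sizes up front is a common convenience when the downstream network expects batched tensors of uniform shape; whether the application does this is not stated in the excerpt.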

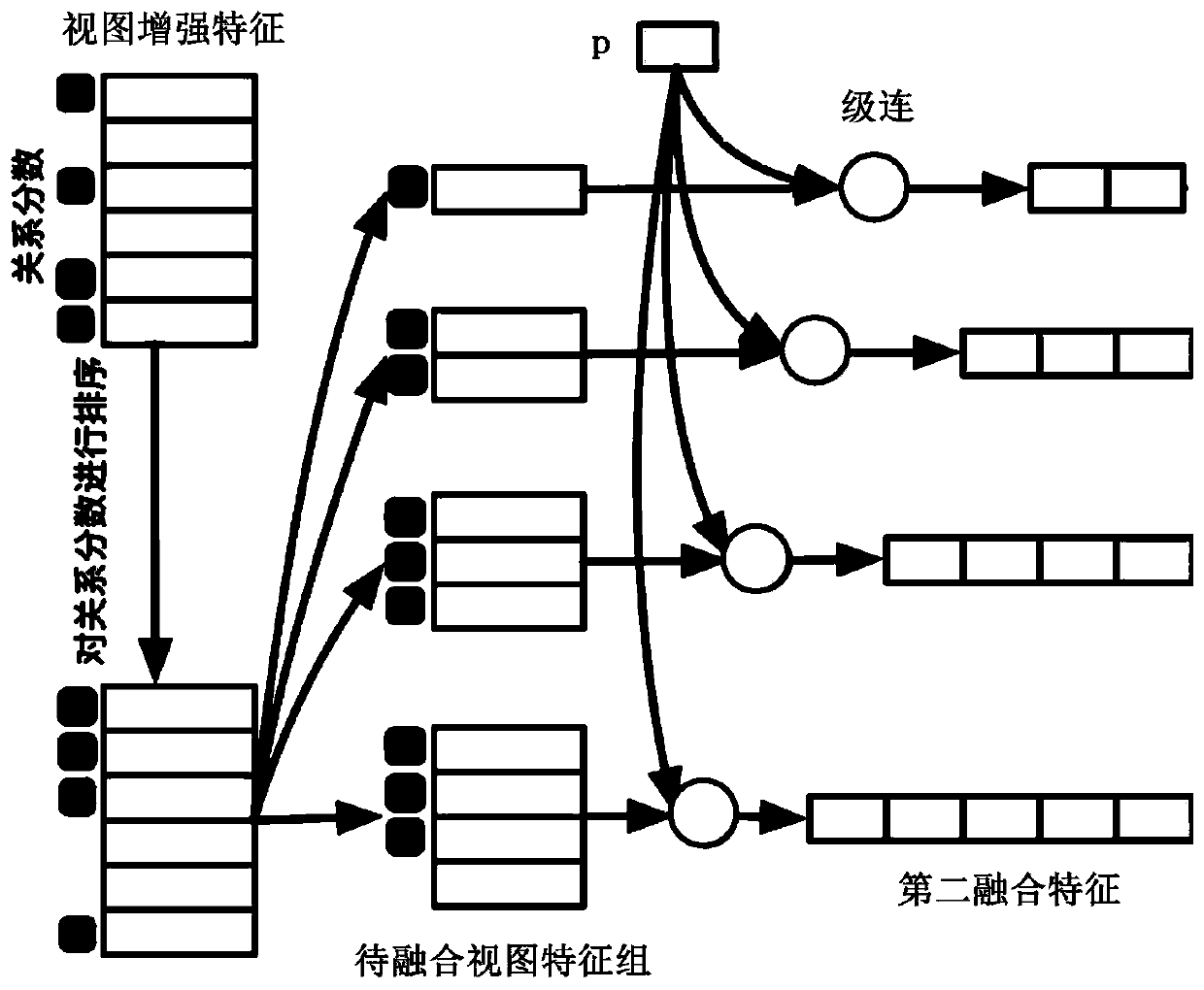

[0028] Step 2, according to the neural network model, extract the over...

Embodiment 2

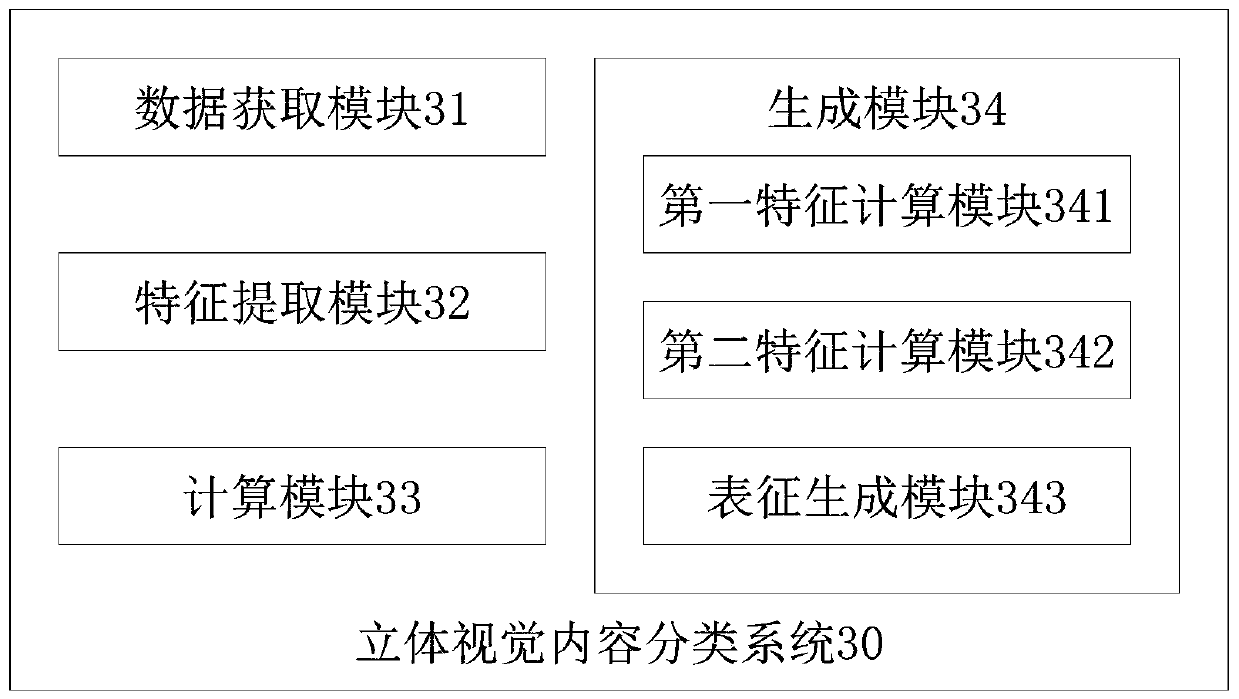

[0067] As shown in Figure 3, this embodiment provides a stereoscopic vision content classification system 30 based on view and point cloud fusion, including a data acquisition module, a feature extraction module, a calculation module, and a generation module. The data acquisition module is used to obtain the point cloud data of the object to be classified and the corresponding multiple detection images.

[0068] Specifically, the object to be classified is scanned by a lidar sensor to obtain a set of its three-dimensional coordinate points, which is recorded as the point cloud data of the object to be classified; the point cloud data usually contains 1024 or 2048 coordinate points. Image acquisition devices set at different angles, such as cameras, then capture multiple detection images of the object from those angles; the detection images usually comprise 8 or 12 views.

[0069] In this embodiment, the feature extract...
