A text classification method based on a local and global mutual attention mechanism

A text classification and attention technology, applied in the fields of text database clustering/classification, text database querying, and unstructured text data retrieval. It addresses problems such as increasing model depth, vanishing gradients, and the lack of any attempt to learn interactions.


Embodiment

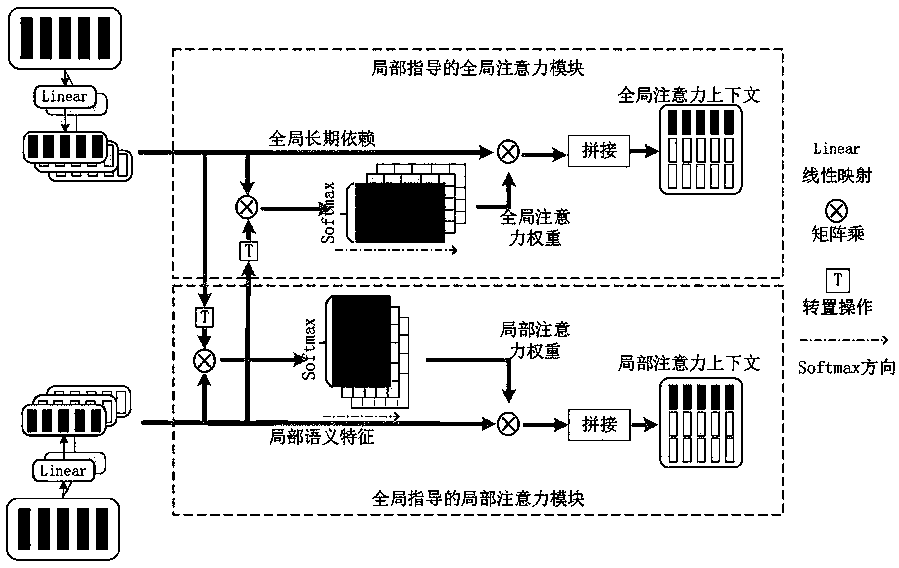

[0074] As shown in Figure 1, this embodiment discloses a text classification method based on a local and global mutual attention mechanism. The method includes the following steps:

[0075] Step S1. Obtain a text dataset, preprocess the data, and map each word in the text sequence to a word vector.

[0076] Sixteen datasets are obtained from benchmark text classification collections such as SUBJ, TREC, CR, 20Newsgroups, MovieReview and Amazon product reviews. Given a dataset $\{(W_n, y_n)\}_{n=1}^{N}$, $W_n = w_1, w_2, \ldots, w_T$ is a text sequence, $y_n$ is its corresponding label, $T$ is the length of the text sequence, and $N$ is the number of samples in the dataset. Let $x_i \in \mathbb{R}^d$ be the $d$-dimensional word vector corresponding to the $i$-th word $w_i$ of the text sequence; here, 300-dimensional pre-trained word2vec vectors are used. The input text sequence can then be expressed as an embedding matrix:

[0077]

$$x_{1:T} = x_1 \oplus x_2 \oplus \cdots \oplus x_T$$

[0078] where $\oplus$ is the concatenation operation, and $x_{1:T} \in \mathbb{R}^{T \times d}$.
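The embedding step of Step S1 can be sketched as follows. This is a minimal illustration, not the patent's preprocessing pipeline: the lowercase whitespace tokenization, the zero vector for out-of-vocabulary words, the function name `embed_sequence`, and the toy 4-dimensional vectors (standing in for the 300-dimensional word2vec vectors) are all assumptions. Each word $w_i$ is looked up to give $x_i \in \mathbb{R}^d$, and the $T$ vectors are stacked row-wise into the $T \times d$ matrix $x_{1:T}$.

```python
import numpy as np

def embed_sequence(text, word_vectors, d):
    """Map a text sequence w_1..w_T to the T x d embedding matrix x_{1:T}.

    Hypothetical helper. Assumptions (not from the patent): lowercase
    whitespace tokenization, and a zero vector for any word missing from
    the pre-trained vocabulary.
    """
    tokens = text.lower().split()  # w_1, ..., w_T
    # Look up x_i in R^d for each word; unknown words get a zero vector.
    rows = [word_vectors.get(w, np.zeros(d)) for w in tokens]
    # Stacking x_1, ..., x_T as rows yields a T x d matrix.
    return np.stack(rows) if rows else np.zeros((0, d))

# Toy vectors with d = 4; the embodiment uses 300-dimensional word2vec vectors.
d = 4
toy_vectors = {
    "great": np.array([0.1, 0.2, 0.3, 0.4]),
    "movie": np.array([0.5, 0.6, 0.7, 0.8]),
}
x = embed_sequence("Great movie overall", toy_vectors, d)
print(x.shape)  # (3, 4); "overall" is out of vocabulary, so its row is zeros
```

In practice the dictionary would be replaced by a loaded pre-trained word2vec model; that loading step is omitted here so the sketch stays self-contained.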

[0079] Step S2, usin...
