Human behavior recognition method based on multi-feature fusion

A multi-feature fusion recognition method, applied in the field of human behavior recognition. It addresses problems such as the inability to effectively capture temporal information, cumbersome operation, and poor recognition performance, thereby improving the accuracy, speed, and practicality of behavior recognition and enabling fast matching-based recognition.


Detailed Description of the Embodiments

[0025] To facilitate the understanding of those skilled in the art, the present invention will be further described below in conjunction with the accompanying drawings.

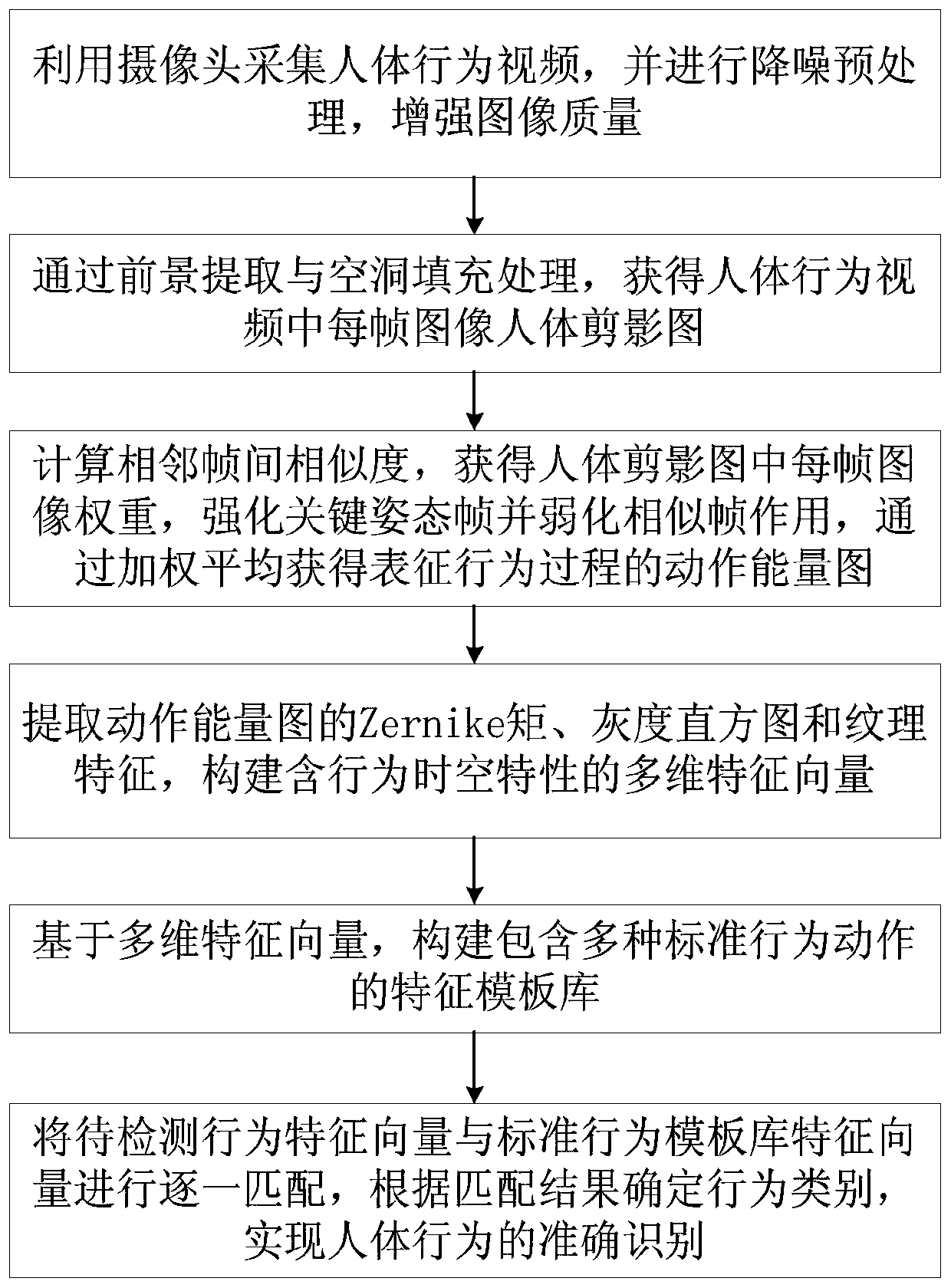

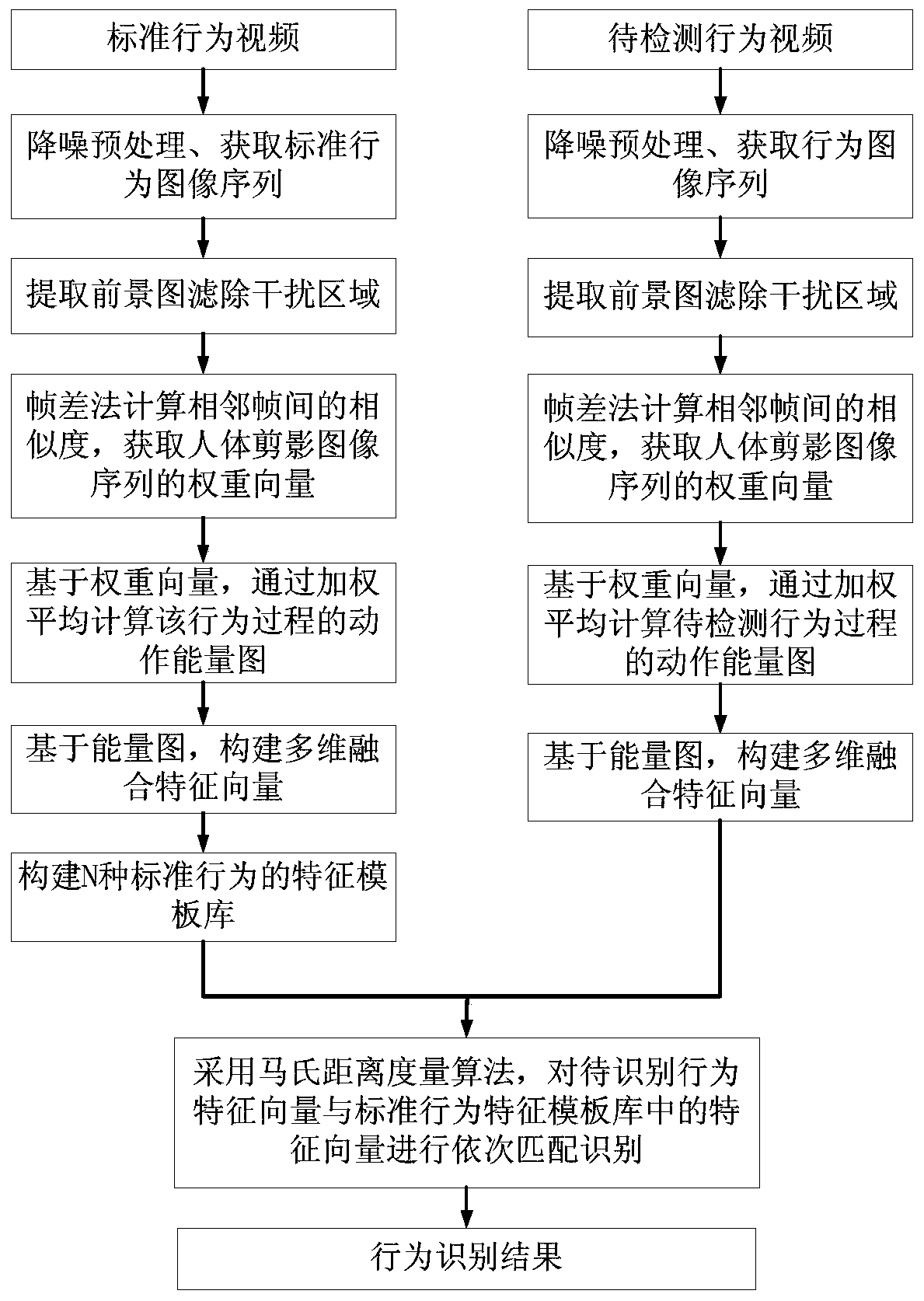

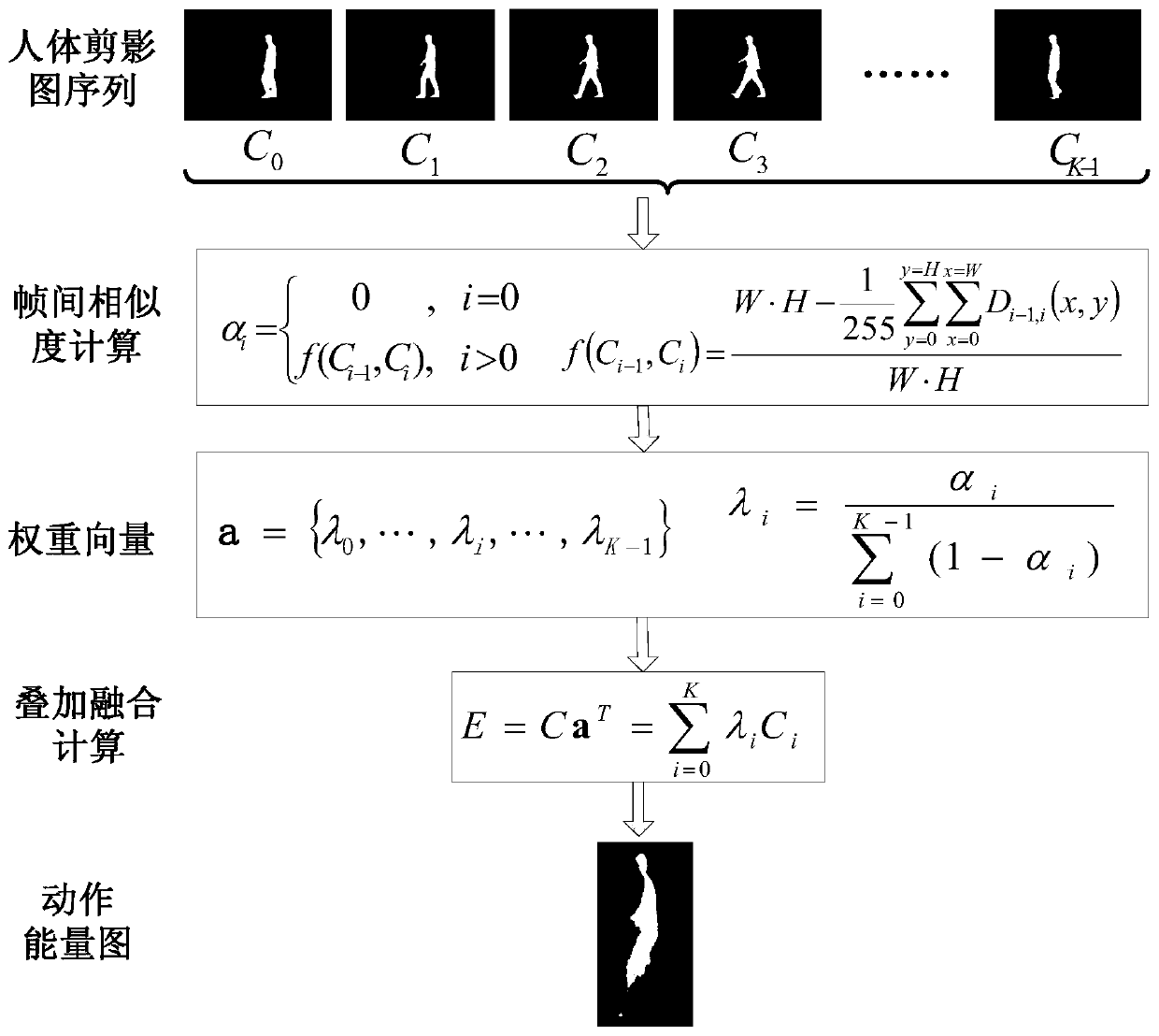

[0026] As shown in Figure 1, the present invention discloses a multi-feature fusion human behavior recognition method comprising the following steps: using a camera to collect human behavior video, extracting the foreground image of each frame, and performing hole filling and interference filtering to obtain a sequence of human silhouette images; calculating the similarity between adjacent frames in the image sequence to obtain the weight of each frame as a representation of the behavior posture; obtaining, from each frame in the human silhouette image sequence and its corresponding weight, an action energy map representing the behavior process through weighted averaging; extracting the Zernike moment, gray histogram and texture features of the action energy map to form a multi-di...
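The weighting and averaging steps described above can be illustrated with a minimal sketch. The text available here does not state the similarity measure or the weight formula, so the code below makes its own assumptions: similarity is taken as the Jaccard overlap of two binary silhouettes, and a frame's weight is its dissimilarity to the preceding frame, normalized so that all weights sum to one. The function names (`frame_similarity`, `frame_weights`, `action_energy_map`, `gray_histogram`) and the 16-bin histogram are hypothetical choices for illustration only and should not be read as the patented method.

```python
import numpy as np


def frame_similarity(a, b):
    """Jaccard overlap of two binary silhouettes (an assumed similarity measure)."""
    a = np.asarray(a) > 0
    b = np.asarray(b) > 0
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 1.0  # two empty frames are treated as identical
    return float(np.logical_and(a, b).sum()) / float(union)


def frame_weights(silhouettes):
    """Assumed weighting: a frame that differs more from its predecessor weighs more."""
    n = len(silhouettes)
    w = np.ones(n, dtype=np.float64)
    for i in range(1, n):
        w[i] = 1.0 - frame_similarity(silhouettes[i - 1], silhouettes[i])
    # The first frame has no predecessor; give it the mean of the other weights.
    w[0] = w[1:].mean() if n > 1 else 1.0
    total = w.sum()
    if total == 0:
        return np.full(n, 1.0 / n)  # static sequence: fall back to uniform weights
    return w / total


def action_energy_map(silhouettes):
    """Weighted average of the silhouette frames, with values in [0, 1]."""
    frames = np.stack([(np.asarray(s) > 0).astype(np.float64) for s in silhouettes])
    weights = frame_weights(silhouettes)
    energy = np.tensordot(weights, frames, axes=1)  # sum_i w_i * frame_i
    return np.clip(energy, 0.0, 1.0)


def gray_histogram(energy_map, bins=16):
    """Normalized gray-level histogram of the energy map (bin count is an assumption)."""
    hist, _ = np.histogram(energy_map, bins=bins, range=(0.0, 1.0))
    return hist / hist.sum()
```

Under these assumptions, a sequence of identical silhouettes yields an energy map equal to the silhouette itself, while frames where the posture changes contribute more strongly to the map. The Zernike moment and texture features named in the text are not sketched here, since their parameters are not given.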
