A Step Size Adaptive Adversarial Attack Method Based on Model Extraction

An adaptive step-size technique, applied in fields such as biological neural network models, character and pattern recognition, and instruments, that aims to achieve good attack effects and strong non-black-box attack capabilities.


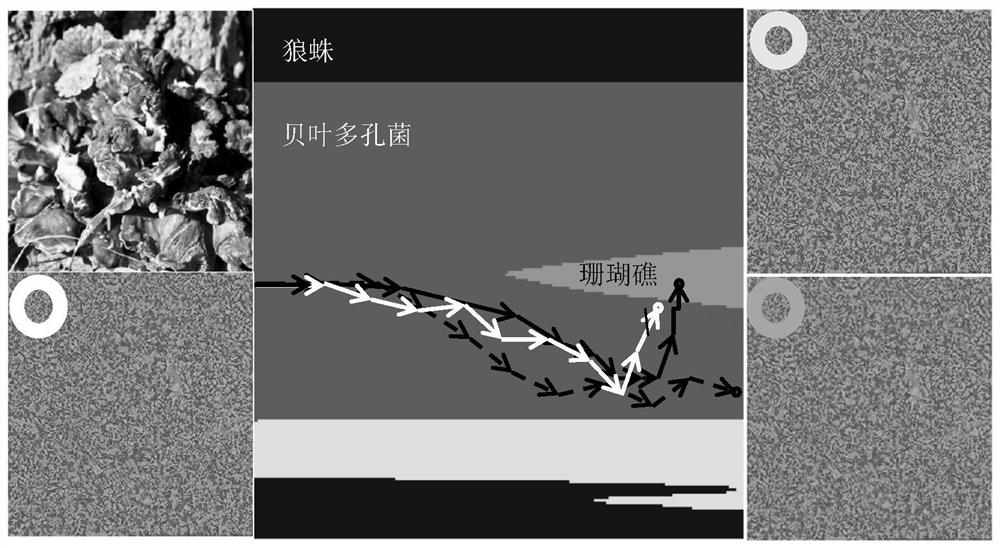

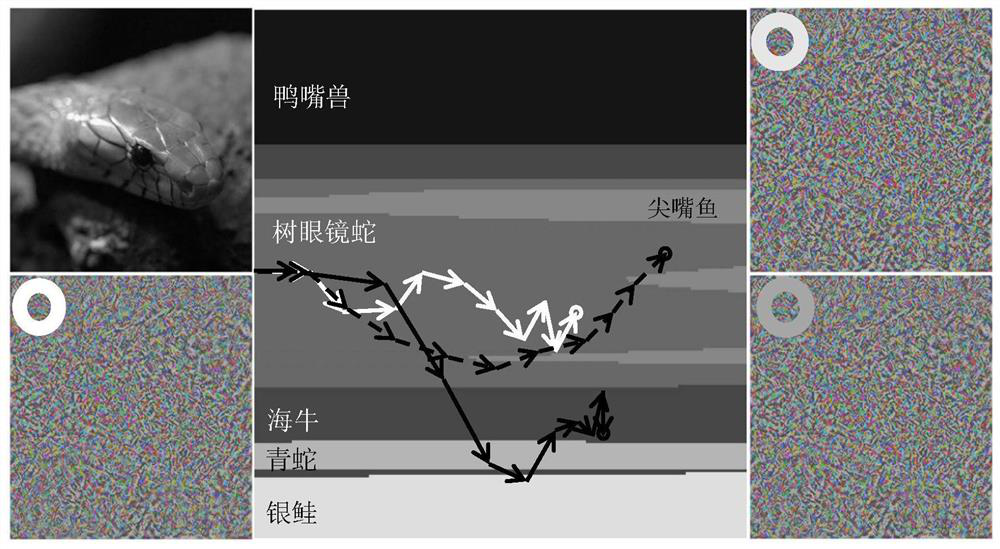

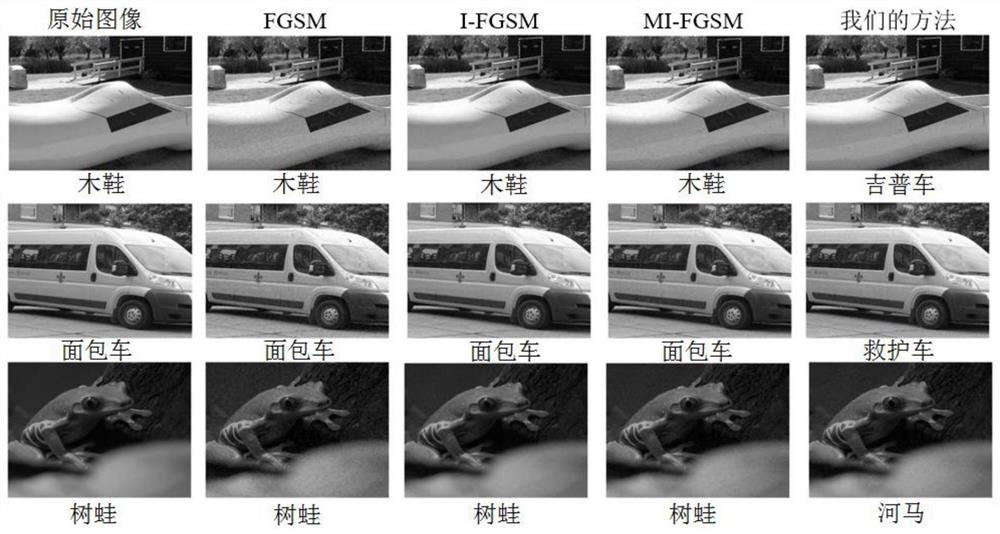


Embodiment Construction

[0037] Embodiments of the present invention will be further described in detail below in conjunction with the accompanying drawings.

[0038] Here, Inception-v3 is selected as the target model, which is attacked using an adversarial sample construction method that adaptively adjusts the noise step size.
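
The excerpt does not state how the noise step size is adapted, so the following is only a rough sketch of the general idea of an iterative attack whose step size changes during the run. Everything in it is an assumption made for illustration: a tiny linear softmax classifier (`W`) stands in for Inception-v3, the perturbation is kept inside a hypothetical L-infinity budget `eps`, and the step is simply shrunk whenever a candidate update fails to increase the loss. It should not be read as the patented rule.

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def loss_and_grad(W, x, y):
    """Cross-entropy loss of a linear softmax model and its gradient w.r.t. x."""
    logits = [sum(w * v for w, v in zip(row, x)) for row in W]
    p = softmax(logits)
    loss = -math.log(p[y])
    # d(loss)/d(logit_k) = p_k - 1[k == y]; chain through W to reach x.
    dlogits = [p_k - (1.0 if k == y else 0.0) for k, p_k in enumerate(p)]
    grad = [sum(dlogits[k] * W[k][j] for k in range(len(W)))
            for j in range(len(x))]
    return loss, grad

def sign(v):
    return (v > 0) - (v < 0)

def adaptive_step_attack(W, x, y, eps=0.5, step=0.25, iters=20, shrink=0.5):
    """Sign-gradient ascent on the loss with a shrinking step.

    ASSUMPTION: the shrink-on-no-improvement rule is illustrative only;
    the source excerpt does not specify its adaptation rule.
    """
    x_adv = list(x)
    best_loss, grad = loss_and_grad(W, x_adv, y)
    for _ in range(iters):
        cand = [xa + step * sign(g) for xa, g in zip(x_adv, grad)]
        # Project back into the L-infinity ball of radius eps around x.
        cand = [min(max(c, x0 - eps), x0 + eps) for c, x0 in zip(cand, x)]
        loss, cand_grad = loss_and_grad(W, cand, y)
        if loss > best_loss:
            x_adv, best_loss, grad = cand, loss, cand_grad  # accept the step
        else:
            step *= shrink  # no progress: try a smaller step next time
    return x_adv, best_loss

W = [[1.0, 0.0], [0.0, 1.0]]   # toy 2-class model (hypothetical)
x, y = [1.0, 0.2], 0           # toy input and its true class
clean_loss, _ = loss_and_grad(W, x, y)
x_adv, adv_loss = adaptive_step_attack(W, x, y)
```

With a real network the gradient would come from automatic differentiation rather than the closed form used here; the accept-or-shrink loop is the only part this sketch is meant to show.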

[0039] Step 1. Pair each collected image with its label information. The category labels range from 0 to n-1, that is, the images span n categories in total. This step comprises the following processing:

[0040] (1-1) Use the ImageNet large-scale image classification dataset to form the image collection IMG:

[0041] $$\mathrm{IMG} = \{x_i \mid i = 1, \dots, N_d\}$$

[0042] where $x_i$ represents an image and $N_d$ indicates the total number of images in the image collection IMG;

[0043] (1-2) Construct the image description set GroundTruth corresponding to each image in the image set IMG:

[0044] $$\mathrm{GroundTruth} = \{y_i \mid i = 1, \dots, N_d\}$$

[0045] where $y_i$ indicates the category number corresponding to each image, and $N_d$ indicates the total...
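
As a minimal sketch of the pairing described in Step 1, the snippet below zips a list of images with a list of category numbers and checks that every label lies in 0 to n-1. The function name `build_pairs`, the use of file names as stand-ins for images, and the validation behaviour are assumptions for illustration; the source does not describe how the pairs are stored.

```python
def build_pairs(images, labels, n_classes):
    """Pair each image with its category number (hypothetical helper).

    Raises ValueError if the two collections differ in length or if any
    label falls outside the range 0 .. n_classes - 1.
    """
    if len(images) != len(labels):
        raise ValueError("IMG and GroundTruth must have the same length")
    for y in labels:
        if not 0 <= y < n_classes:
            raise ValueError(f"label {y} outside 0..{n_classes - 1}")
    return list(zip(images, labels))

# Toy stand-ins for ImageNet entries (file names are made up).
pairs = build_pairs(["img_a.jpg", "img_b.jpg"], [0, 2], n_classes=3)
```

Checking the label range up front means a mislabeled entry is caught before any adversarial samples are constructed from it.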

