Directed attack anti-patch generation method and device

An adversarial patch and adversarial example technology, applied in neural learning methods, biological neural network models, instruments, etc. It addresses the weak transferability and low success rate of existing attacks, which do not exploit the regions that models commonly focus on. It thereby improves the effect and success rate of targeted attacks and makes the attack easier to implement.


Examples

Embodiment 1

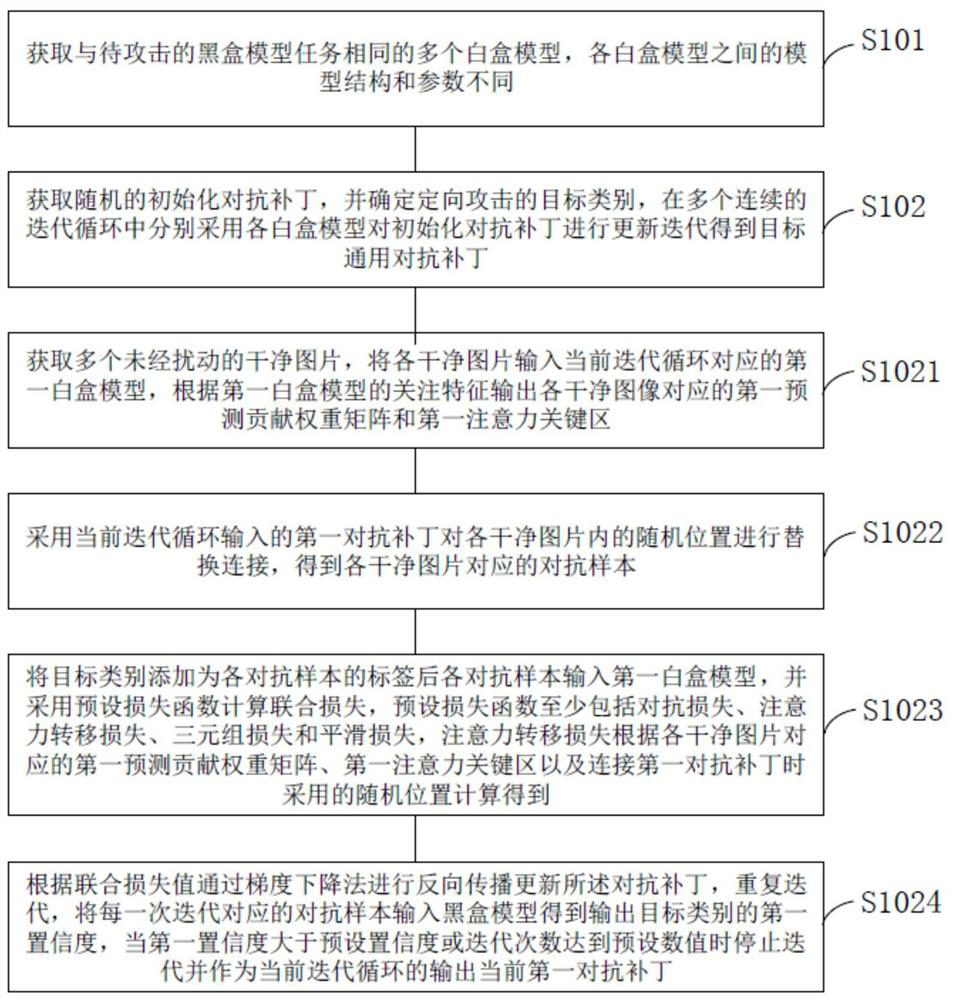

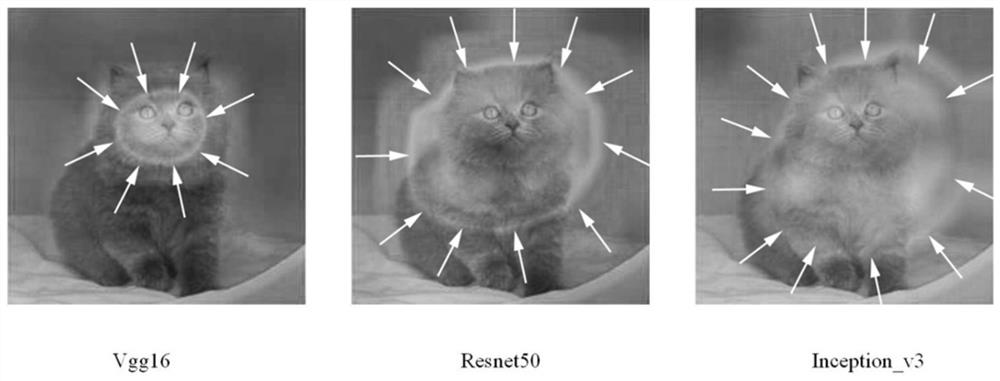

[0104] This embodiment provides a targeted-attack adversarial patch generation method for carrying out targeted attacks on black-box models that perform a specific task. As shown in Figure 3 and Figure 4, the specific steps are as follows:

[0105] 1. Obtain multiple white-box models that perform the same task as the black-box model to be attacked, where each white-box model has a different structure and different parameters.

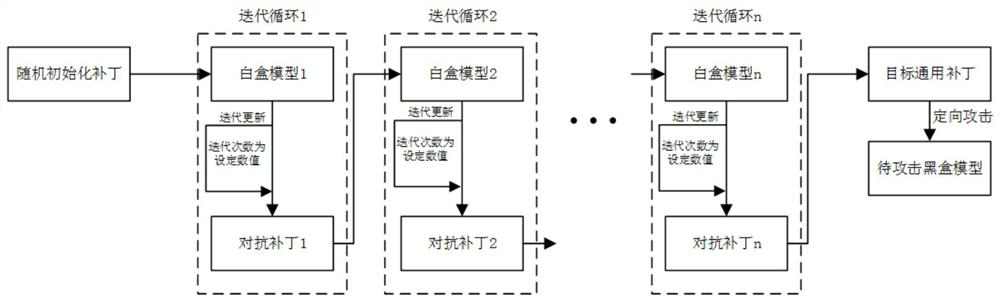

[0106] 2. Obtain a randomly initialized adversarial patch and determine the target category of the targeted attack; then, over multiple consecutive iteration cycles, use each white-box model to iteratively update the initial adversarial patch, obtaining the target universal adversarial patch. Because the patch is repeatedly updated against multiple white-box models whose structures and parameters are known, the final adversarial patch becomes universal across all the white-box models; that is, it can carry out a targeted attack on the black-box model to be attacked that performs the same task.
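Steps 1 and 2 can be illustrated with a minimal sketch. It assumes, purely for illustration, toy linear softmax classifiers on flattened 8x8 images as the white-box models (the method uses models whose structures also differ; here only the parameters differ), a fixed square patch region given by a mask, the mean cross-entropy toward the target category as the loss, and projected gradient descent with one step per model per cycle. The names `ToyWhiteBoxModel`, `apply_patch`, `generate_universal_patch`, and `ensemble_target_loss`, and all hyperparameters, are hypothetical and not taken from the patent.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


class ToyWhiteBoxModel:
    """Hypothetical white-box classifier: logits = x @ W + b on flattened images."""

    def __init__(self, n_features, n_classes, seed):
        rng = np.random.default_rng(seed)  # different seed -> different parameters
        self.W = rng.normal(size=(n_features, n_classes)) / np.sqrt(n_features)
        self.b = rng.normal(size=n_classes)

    def probs(self, x):
        return softmax(x @ self.W + self.b)

    def input_grad(self, x, target):
        """Gradient of mean(-log p_target) with respect to each input row."""
        g = self.probs(x)
        g[:, target] -= 1.0           # d loss / d logits = p - onehot(target)
        return g @ self.W.T / len(x)  # back through logits = x @ W + b


def apply_patch(images, patch, mask):
    # Pixels where mask == 1 are replaced by the patch; all others are kept.
    return images * (1.0 - mask) + patch * mask


def generate_universal_patch(models, images, mask, target,
                             n_cycles=100, steps_per_model=1, lr=0.2, seed=0):
    rng = np.random.default_rng(seed)
    patch = rng.uniform(0.0, 1.0, size=images.shape[1])  # random initial patch
    for _ in range(n_cycles):            # consecutive iteration cycles
        for model in models:             # update against each white-box model
            for _ in range(steps_per_model):
                adv = apply_patch(images, patch, mask)
                # Chain rule: only the masked pixels depend on the patch.
                grad = (model.input_grad(adv, target) * mask).sum(axis=0)
                patch = np.clip(patch - lr * grad, 0.0, 1.0)  # valid pixel range
    return patch


def ensemble_target_loss(models, images, patch, mask, target):
    """Mean over white-box models of mean(-log p_target) on the patched images."""
    adv = apply_patch(images, patch, mask)
    return float(np.mean([-np.log(m.probs(adv)[:, target]).mean() for m in models]))


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    images = rng.uniform(0.0, 1.0, size=(8, 64))  # eight flattened 8x8 "images"
    mask2d = np.zeros((8, 8))
    mask2d[2:6, 2:6] = 1.0                        # 4x4 patch region
    mask = mask2d.ravel()
    models = [ToyWhiteBoxModel(64, 5, seed=s) for s in range(3)]
    init = generate_universal_patch(models, images, mask, target=2, n_cycles=0)
    patch = generate_universal_patch(models, images, mask, target=2)
    print(ensemble_target_loss(models, images, init, mask, 2),
          ensemble_target_loss(models, images, patch, mask, 2))
```

Calling `generate_universal_patch` with `n_cycles=0` returns the untouched random initial patch, which gives a baseline for checking that the ensemble loss toward the target category goes down. A real implementation would use deep networks and automatic differentiation instead of the hand-derived gradient used here.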

[0107]...

Embodiment 2

[0114] Building on Embodiment 1, as shown in Figure 5 and Figure 6, within each iteration cycle the adversarial example produced by each update iteration is input to the black-box model to be attacked, which outputs a first confidence for the target category; this first confidence serves as the condition for stopping updates. When the first confidence reaches a preset confidence, updating within the current iteration cycle stops and the current first adversarial patch is output.
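The stop condition can be sketched as follows, assuming the black-box model is reachable only through a query that returns its confidence for the target category. `run_iteration_cycle`, its two callables, the `max_updates` cap, and the toy stand-ins in the usage are hypothetical; in the method, each update would be a white-box gradient step and each query would feed the patched image to the real black-box model.

```python
import numpy as np


def run_iteration_cycle(patch, update_step, blackbox_confidence,
                        preset_confidence=0.9, max_updates=100):
    """Run one iteration cycle, stopping early on the black-box confidence.

    update_step(patch) -> patch after one update (hypothetical placeholder for
    a white-box gradient step); blackbox_confidence(patch) -> the black-box
    model's confidence for the target category on the patched adversarial
    example (hypothetical placeholder for a real query).
    Returns (patch, first_confidence, number_of_updates).
    """
    confidence = blackbox_confidence(patch)  # confidence before any update
    for n_updates in range(1, max_updates + 1):
        patch = update_step(patch)
        confidence = blackbox_confidence(patch)  # the "first confidence"
        if confidence >= preset_confidence:
            return patch, confidence, n_updates  # stop this cycle early
    return patch, confidence, max_updates  # cap reached without stopping


if __name__ == "__main__":
    # Toy stand-ins: each "update" raises every patch value by 0.125, and the
    # "black-box confidence" is simply the mean patch value.
    def toy_update(p):
        return np.clip(p + 0.125, 0.0, 1.0)

    def toy_confidence(p):
        return float(p.mean())

    patch, conf, n = run_iteration_cycle(np.zeros(4), toy_update, toy_confidence,
                                         preset_confidence=0.75)
    print(n, conf)  # 6 0.75
```

The `max_updates` cap is an added assumption so that a cycle still ends if the preset confidence is never reached.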

[0115] The advantages of the present invention are as follows. Most existing attack methods generate small pixel-level perturbations superimposed on the original image, which are difficult to realize in the physical world, whereas the adversarial patch generated by the present invention can be printed out and applied in the physical world, which gives it practical significance. In addition, existing methods ignore the features that different models commonly focus on, and do not use the features of commo...
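The contrast between pixel-level perturbations and a patch can be illustrated with a small sketch, assuming images are float arrays in [0, 1]. `pixel_perturbation` stands in for an existing pixel-level attack (here only a random perturbation bounded by `eps`, not an optimized one), and `paste_patch` overwrites one rectangular region; both names and the `eps = 8/255` budget are illustrative assumptions.

```python
import numpy as np


def pixel_perturbation(image, eps, rng):
    # Illustrative pixel-level perturbation: every pixel of the whole image is
    # shifted by at most eps (random here; a real attack would optimize delta).
    delta = rng.uniform(-eps, eps, size=image.shape)
    return np.clip(image + delta, 0.0, 1.0)


def paste_patch(image, patch, top, left):
    # Patch-style modification: one contiguous region is overwritten, the kind
    # of change that can be printed and placed in a physical scene.
    out = image.copy()
    h, w = patch.shape[:2]
    out[top:top + h, left:left + w] = patch
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.uniform(0.0, 1.0, size=(32, 32, 3))
    perturbed = pixel_perturbation(image, 8 / 255, rng)
    patched = paste_patch(image, np.ones((8, 8, 3)), top=4, left=4)
    # Fraction of pixel values changed, and the largest single change.
    print((perturbed != image).mean(), np.abs(perturbed - image).max())
    print((patched != image).mean(), np.abs(patched - image).max())
```

The perturbation touches essentially every pixel but by at most `eps`, while the patch confines its changes to 64 of the 1024 pixel positions, possibly by a large amount.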


© 2025 PatSnap. All rights reserved.