Multi-field neural machine translation method based on self-attention mechanism

A machine translation technique built on the attention mechanism and applied in the field of neural machine translation. It addresses problems such as ambiguity, limited translation performance, and high maintenance cost, and it improves translation quality.

Embodiment Construction

[0050] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments, so that those skilled in the art can better understand the present invention and implement it, but the examples given are not intended to limit the present invention.

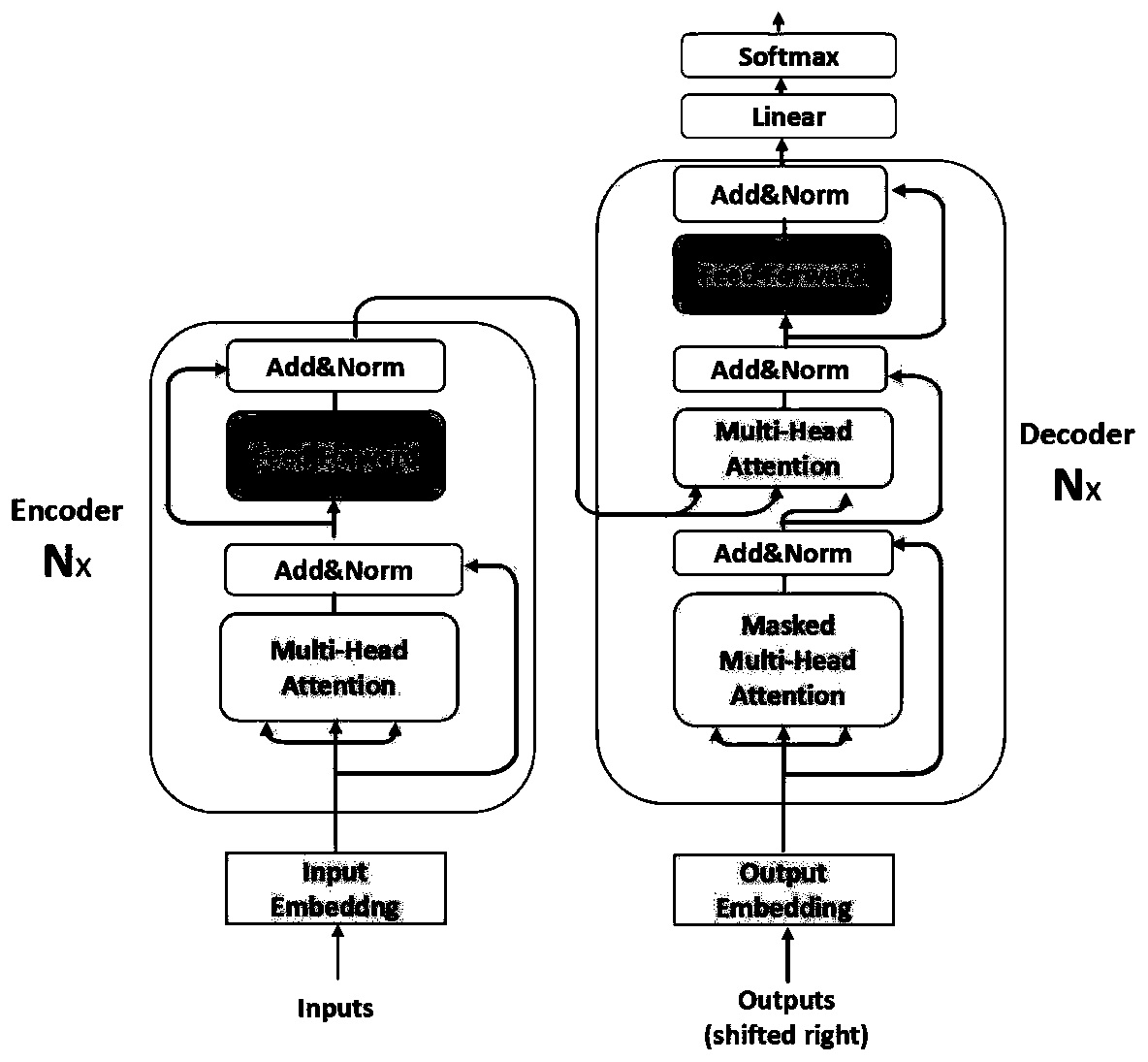

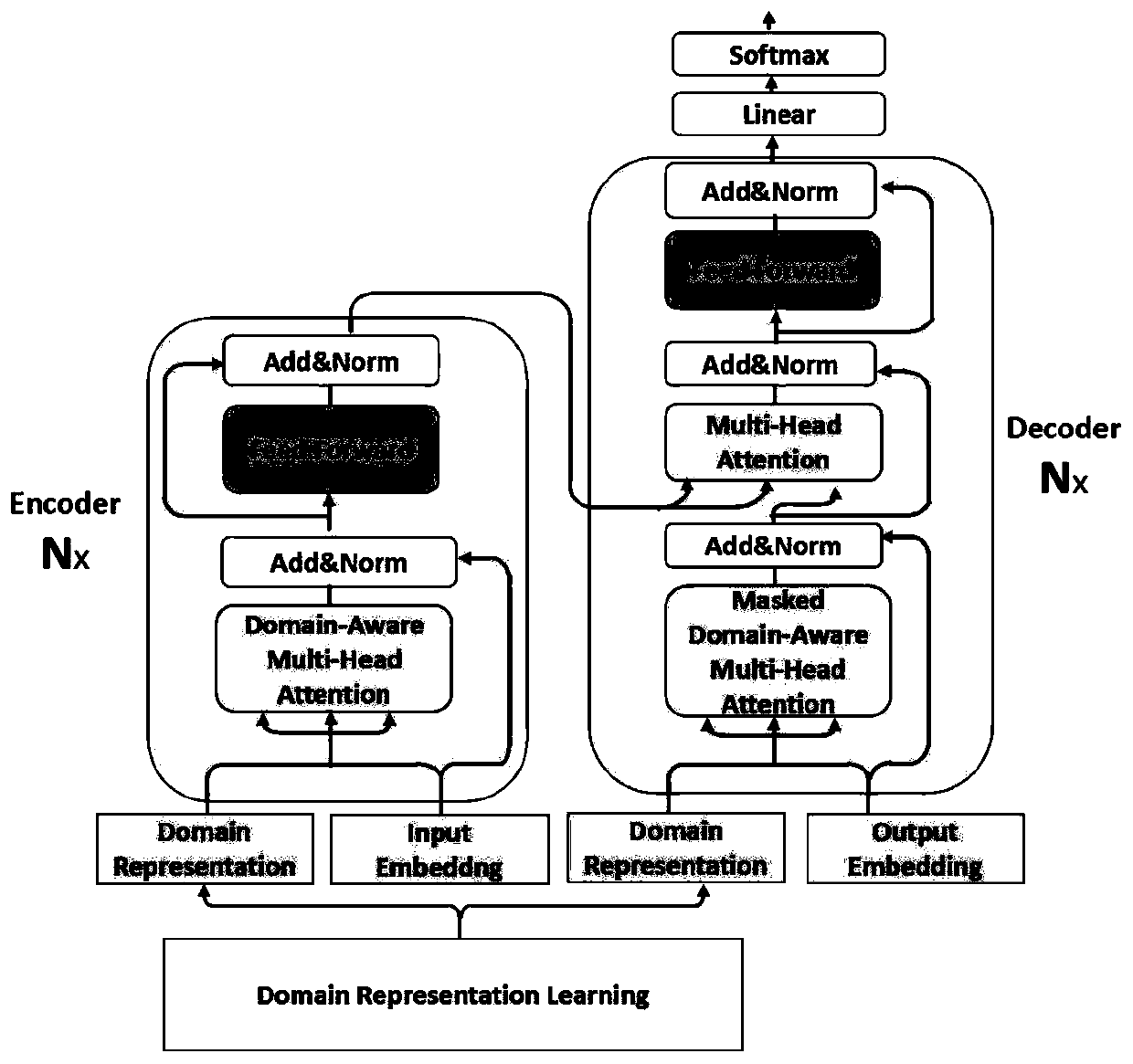

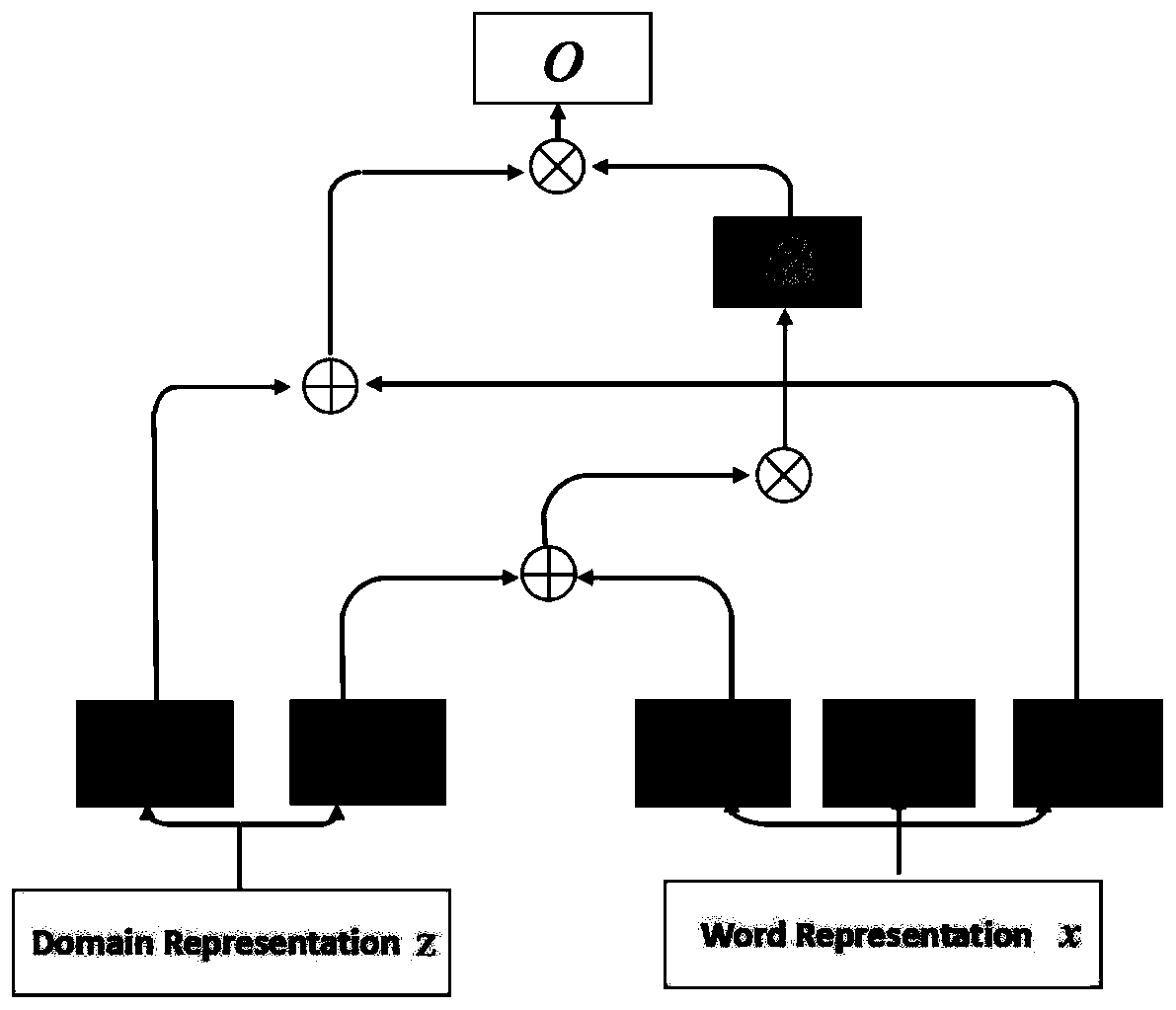

[0051] Background: Transformer model for neural machine translation based on self-attention

[0052] The Transformer is an encoder-decoder model based entirely on attention. It has two significant advantages over attention-based recurrent neural machine translation models: 1) it dispenses with recurrent networks entirely, which maximizes parallelism during training; 2) by exploiting the attention mechanism, it can capture long-distance dependencies.
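The two advantages above can be illustrated with a minimal sketch of scaled dot-product self-attention. This is not the patent's implementation: the function names `softmax` and `self_attention` are hypothetical, and the sketch assumes identity projections (queries, keys, and values are all the raw input vectors), where a real model would use learned projection matrices. Each output position is computed independently of the others, so the loop could run in parallel, and the weight between two positions depends only on their content, not on how far apart they are.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention over a sequence of vectors.

    Assumption for illustration: Q = K = V = x (no learned projections).
    Returns (outputs, attention_weights).
    """
    d = len(x[0])
    outputs, weights = [], []
    for q in x:  # each position is independent of the others
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of all value vectors, regardless of their distance from q.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, x)) for i in range(d)])
    return outputs, weights


# Toy sequence: the first and last tokens share the same vector.
sequence = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(sequence)
```

In this toy sequence the first token attends to the last token exactly as strongly as to itself, even though they sit at opposite ends of the sequence, which is the sense in which attention reaches long-distance dependencies in a single step.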

[0053] 1. Encoder

[0054] In an encoder, several identical layers are stacked on top of each other. Each layer contains two sublayers, a multi-head attention layer and a position-wise feed-forward layer, which are connected with...
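A rough sketch of one such encoder layer follows. The source sentence is cut off before it says how the two sublayers are connected, so the residual connection followed by layer normalization used here is an assumption borrowed from the standard Transformer design, not something the text above confirms. All function names are hypothetical, the heads are plain slices of the model dimension with no learned projections, and the stacked layers share one set of feed-forward weights purely to keep the example short.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention over lists of vectors."""
    d = len(q[0])
    out = []
    for qi in q:
        w = softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k])
        out.append([sum(wj * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return out


def multi_head_attention(x, num_heads):
    """Assumption: each head attends over its own slice of the model dimension."""
    d_head = len(x[0]) // num_heads
    heads = []
    for h in range(num_heads):
        part = [row[h * d_head:(h + 1) * d_head] for row in x]
        heads.append(attention(part, part, part))
    # Concatenate the head outputs position by position.
    return [sum((heads[h][t] for h in range(num_heads)), []) for t in range(len(x))]


def feed_forward(x, w1, b1, w2, b2):
    """Position-wise two-layer ReLU network; w1 and w2 hold one row per output unit."""
    out = []
    for row in x:  # the same weights are applied to every position
        hidden = [max(0.0, sum(r * w for r, w in zip(row, unit)) + b)
                  for unit, b in zip(w1, b1)]
        out.append([sum(h * w for h, w in zip(hidden, unit)) + b
                    for unit, b in zip(w2, b2)])
    return out


def layer_norm(row, eps=1e-6):
    mean = sum(row) / len(row)
    var = sum((r - mean) ** 2 for r in row) / len(row)
    return [(r - mean) / math.sqrt(var + eps) for r in row]


def add_and_norm(x, sublayer_out):
    """Assumed connection: residual addition, then layer normalization."""
    return [layer_norm([a + b for a, b in zip(xr, sr)])
            for xr, sr in zip(x, sublayer_out)]


def encoder_layer(x, num_heads, w1, b1, w2, b2):
    x = add_and_norm(x, multi_head_attention(x, num_heads))
    return add_and_norm(x, feed_forward(x, w1, b1, w2, b2))


def encoder(x, num_layers, num_heads, w1, b1, w2, b2):
    """Stack structurally identical layers (weights shared here only for brevity)."""
    for _ in range(num_layers):
        x = encoder_layer(x, num_heads, w1, b1, w2, b2)
    return x


# Toy input: 3 positions, model dimension 4, feed-forward dimension 3.
x = [[0.1, 0.2, 0.3, 0.4], [0.5, -0.1, 0.0, 0.2], [-0.3, 0.4, 0.1, 0.0]]
w1 = [[0.1, -0.2, 0.3, 0.0], [0.0, 0.1, -0.1, 0.2], [0.2, 0.2, 0.0, -0.1]]
b1 = [0.0, 0.1, -0.1]
w2 = [[0.1, 0.0, -0.2], [0.3, 0.1, 0.0], [-0.1, 0.2, 0.1], [0.0, -0.3, 0.2]]
b2 = [0.0, 0.0, 0.1, -0.1]
encoded = encoder(x, num_layers=2, num_heads=2, w1=w1, b1=b1, w2=w2, b2=b2)
```

The output keeps the input shape (one vector of the model dimension per position), which is what allows identical layers to be stacked.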
