Human-computer interaction methods, devices, controllers and interactive devices

A human-computer interaction method and interactive device, applicable to coin-operated equipment with instrument control and to coin-operated equipment and instruments for distributing discrete items. It addresses problems such as the low degree of item association and achieves the effect of enhanced interactivity.



Examples

Embodiment 1

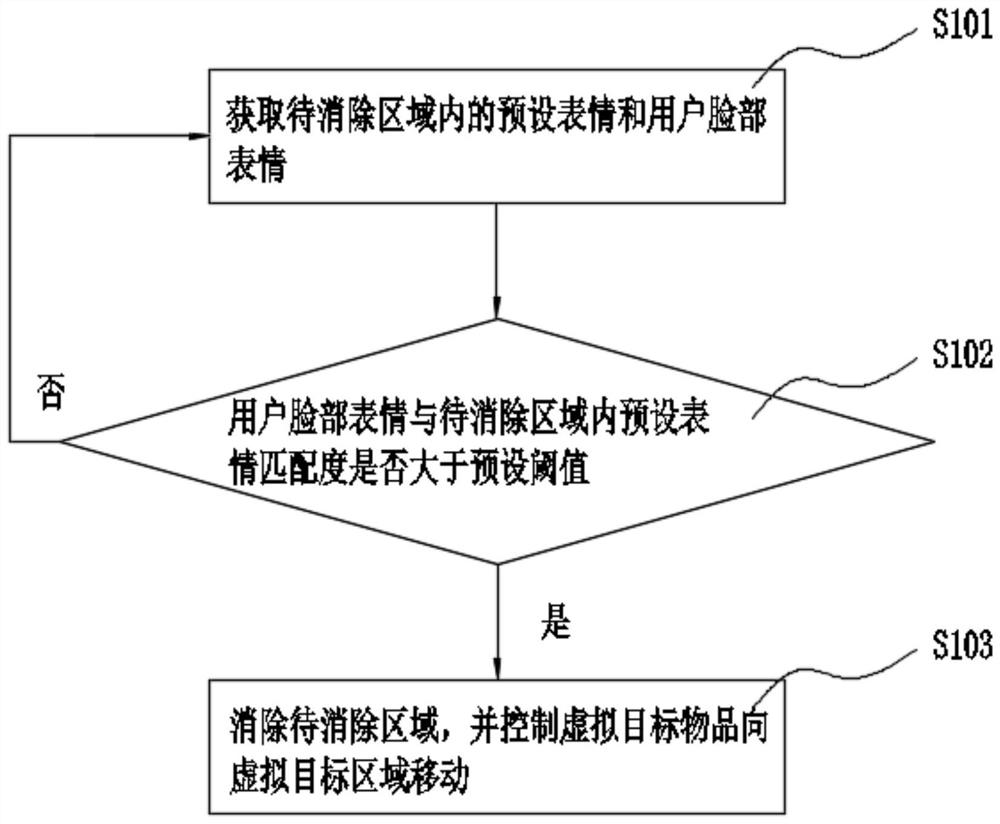

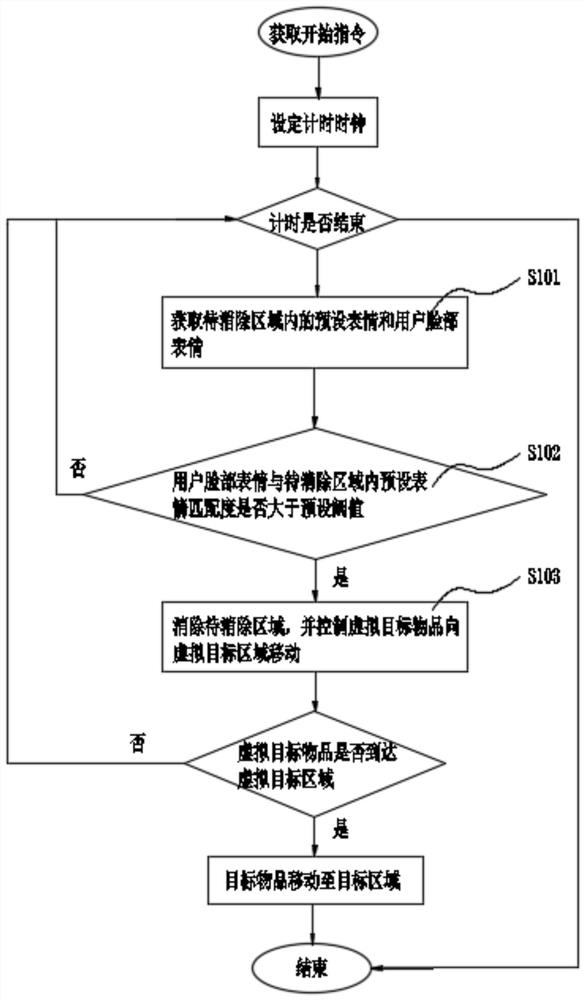

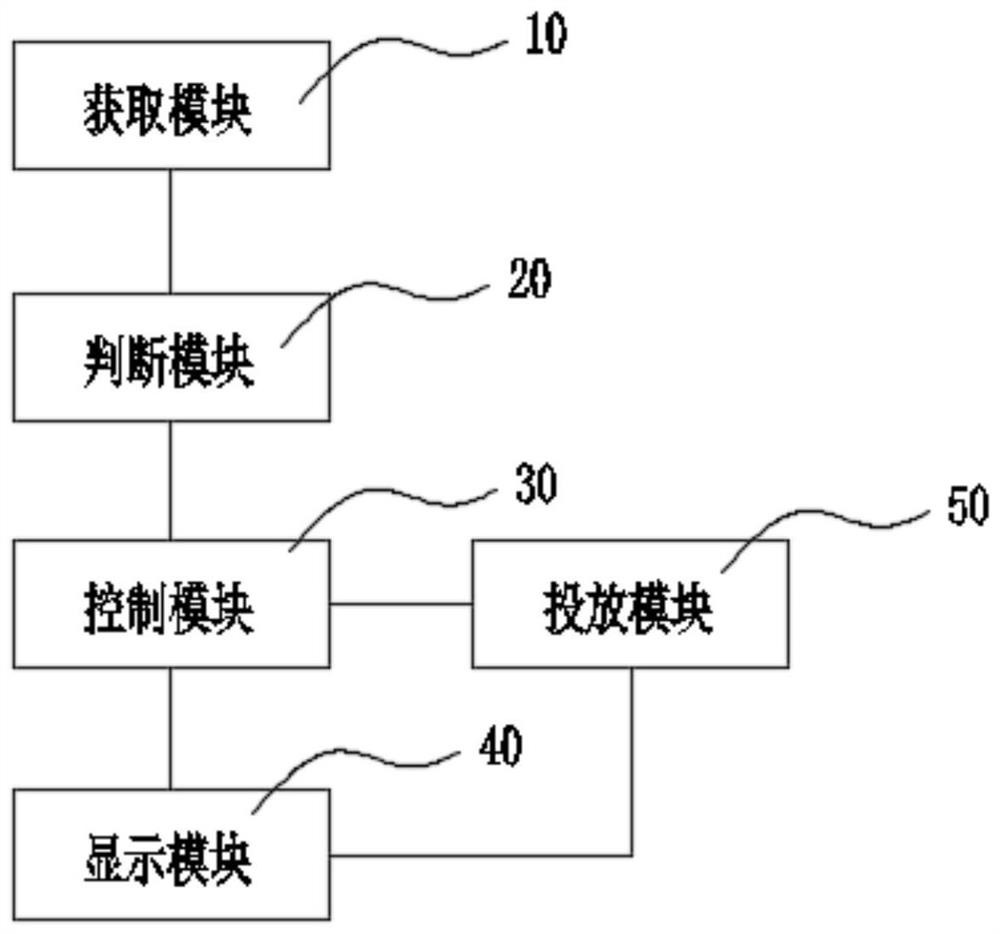

[0048] Referring to Figure 1 and Figure 2, the human-computer interaction method provided by the present invention will now be described. The method is applicable to an interactive device for controlling a target item to move to a target area. The interactive device includes a display 1 and a delivery component 2 capable of accommodating the target item. The target item and the target area are mapped on the display 1 as a virtual target item and a virtual target area, respectively, and there are multiple areas to be eliminated between the virtual target item and the virtual target area. Specifically, the virtual target item and the virtual target area are displayed directly on the display 1, the target item is stored inside the delivery component 2, and the delivery component 2 can move the target item to the target area. In this embodiment, the relationship between the target item and the target area is also mapped on the dis...
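The arrangement described above (a virtual target item, a virtual target area, and several areas to be eliminated between them) can be modeled as a small state object. The following is only a minimal sketch under stated assumptions, since the paragraph is truncated: the class names `AreaToEliminate` and `InteractionSession`, the rule that areas are cleared one at a time in order, the default threshold of 0.8, and the `delivered` flag standing in for the delivery component 2 are all hypothetical and are not taken from the patent text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AreaToEliminate:
    """One area lying between the virtual target item and the virtual target area."""
    preset_expression: str      # hypothetical label for the expression tied to this area
    eliminated: bool = False


@dataclass
class InteractionSession:
    """Tracks which areas remain; names and rules here are illustrative assumptions."""
    areas: list[AreaToEliminate]
    threshold: float = 0.8      # assumed value; the source only says "preset threshold"
    delivered: bool = False     # stand-in for the delivery component moving the item

    def current_area(self) -> Optional[AreaToEliminate]:
        # Assumption: areas are eliminated in order, nearest to the item first.
        for area in self.areas:
            if not area.eliminated:
                return area
        return None

    def submit(self, matching_degree: float) -> bool:
        """Apply one matching result; return True if the current area was eliminated."""
        area = self.current_area()
        if area is None:
            return False
        if matching_degree > self.threshold:
            area.eliminated = True
        # Assumption: once no area remains, the target item is delivered.
        if self.current_area() is None:
            self.delivered = True
        return area.eliminated
```

For example, a session with two areas stays undelivered after a matching degree of 0.5, and sets `delivered` only after two results above the threshold have been submitted.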

Embodiment approach

[0063] The method for judging whether the matching degree between the user's facial expression and the preset expression in the area to be eliminated is greater than the preset threshold is introduced below. As an optional implementation, the method mainly includes the following steps:

[0064] First, obtain the feature point set of the preset expression. Specifically, select specific features from the face of the preset expression, such as eye features, mouth features or cheek features, and combine them into a feature point set. The feature point set can be determined when the preset expression is configured, or it can be obtained after a specific preset expression is selected.

[0065] Second, extract the face feature point set from the user's facial expression. Specifically, the user's facial expression is collected by the image acquisition device 3, the collected expression is then analyzed, and th...
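The comparison of the two feature point sets against the preset threshold can be illustrated as follows. This is a hedged sketch, not the patent's method: the text above is truncated before it states how the matching degree is computed, so the similarity measure used here (mean point-to-point distance after centering and scaling, mapped into the range (0, 1]) is an assumption, as are the function names `normalize`, `matching_degree` and `expression_matches` and the default threshold of 0.8. It also assumes both sets list corresponding points (eyes, mouth, cheeks) in the same order.

```python
import math

Point = tuple[float, float]


def normalize(points: list[Point]) -> list[Point]:
    """Center the points on their centroid and scale them to unit RMS radius."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centered = [(x - cx, y - cy) for x, y in points]
    scale = math.sqrt(sum(x * x + y * y for x, y in centered) / n)
    if scale == 0:
        raise ValueError("degenerate feature point set")
    return [(x / scale, y / scale) for x, y in centered]


def matching_degree(preset: list[Point], user: list[Point]) -> float:
    """Return a similarity in (0, 1]; 1.0 means identical shape (assumed measure)."""
    if not preset or len(preset) != len(user):
        raise ValueError("feature point sets must be non-empty and equally sized")
    p, u = normalize(preset), normalize(user)
    mean_dist = sum(math.dist(a, b) for a, b in zip(p, u)) / len(p)
    return 1.0 / (1.0 + mean_dist)


def expression_matches(preset: list[Point], user: list[Point],
                       threshold: float = 0.8) -> bool:
    """True when the matching degree is greater than the preset threshold."""
    return matching_degree(preset, user) > threshold
```

Normalizing first means a face that is merely closer to or farther from the camera, or shifted in the frame, is not penalized; rotation is not compensated for in this sketch.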

Embodiment 2

[0068] As another embodiment of the present invention, please refer to Figure 1 and Figure 2. This embodiment differs from Embodiment 1 in the following parts, and the human-computer interaction method provided by this embodiment will now be described.

[0069] The human-computer interaction method is applicable to an interactive device for controlling a target item to move to a target area. The interactive device includes a display 1 and a delivery component 2 capable of accommodating the target item. The target item and the target area are mapped on the display 1 as a virtual target item and a virtual target area, respectively, and there are multiple areas to be eliminated between the virtual target item and the virtual target area. Specifically, the virtual target item and the virtual target area are displayed directly on the display 1, the target item is stored inside the delivery component 2, and the delivery component 2 can move the ta...

