A key frame extraction method of gesture images based on deep learning

A deep-learning-based key frame extraction technology, applied in fields such as neural learning methods, instruments, and biological neural network models. It addresses the limitations of existing approaches and achieves good robustness while reducing both model complexity and the number of parameters.

Embodiment Construction

[0044] The present invention is further described below with reference to the drawings and embodiments.

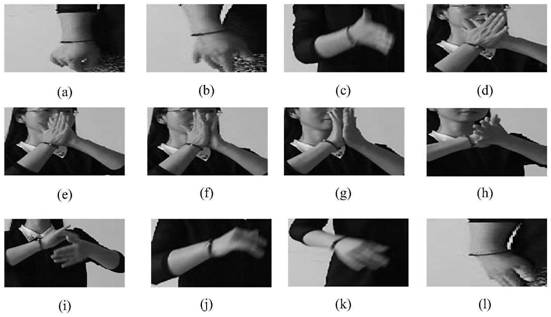

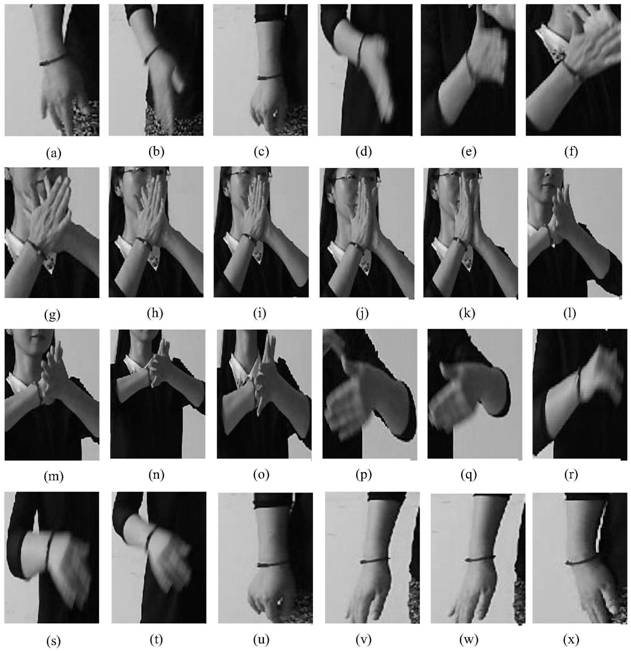

[0045] The present invention mainly addresses key frame extraction from gesture videos. Because the objects to be recognized are custom-defined gesture actions, a dynamic gesture video database was built in-house for the specific implementation. Part of the dataset used is shown in Figure 2, which presents some of the frame images converted from one of the gesture videos. The images are saved in .jpg format, and the final picture size is 1280×720.

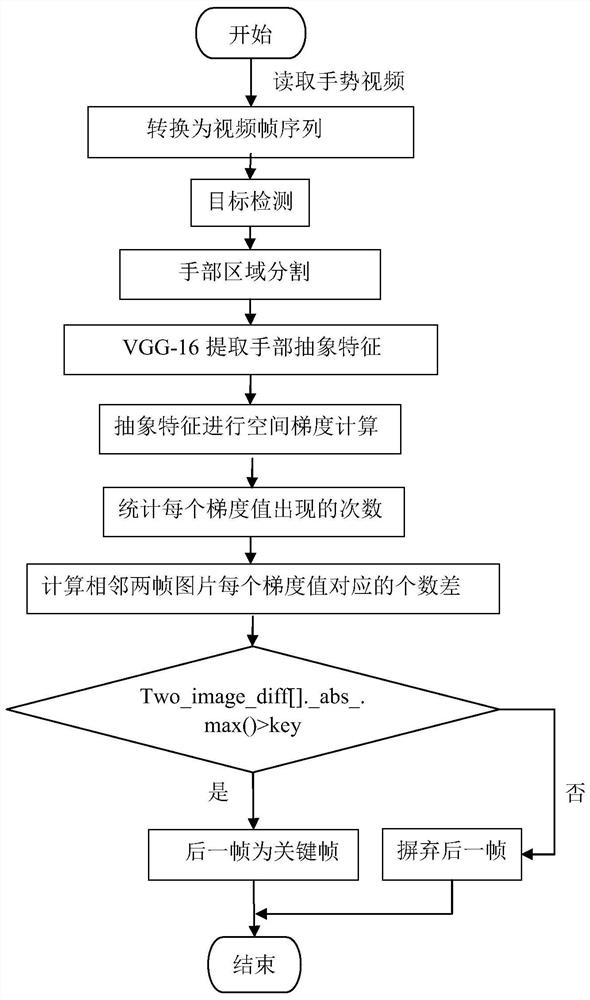

[0046] As shown in Figure 1, the method of the present invention first converts the gesture video into gesture video frame images, detects the gesture target area with the Mobilenet-SSD target detection model, and segments the marked gesture target box to obtain the hand image. Abstract features of the hand area are then extracted through ...
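The first stages described in [0046] (frame images, gesture detection, cropping of the detected box) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the patented implementation: frames are modeled as plain nested lists of grayscale values, the names `crop_hand`, `extract_hand_images`, and `nonzero_box_detector` are hypothetical, and the detector is a trivial stand-in that would be replaced by a real Mobilenet-SSD model in practice.

```python
from typing import Callable, Iterable, List, Optional, Tuple

# Assumption: a frame is a grayscale image stored as rows of pixel values.
Frame = List[List[int]]
# Assumption: a box is (x_min, y_min, x_max, y_max) with exclusive max edges.
Box = Tuple[int, int, int, int]


def crop_hand(frame: Frame, box: Box) -> Frame:
    """Cut the detected box out of the frame, clamping it to the frame bounds."""
    height = len(frame)
    width = len(frame[0]) if height else 0
    x_min, y_min, x_max, y_max = box
    x_min, x_max = max(0, x_min), min(width, x_max)
    y_min, y_max = max(0, y_min), min(height, y_max)
    return [row[x_min:x_max] for row in frame[y_min:y_max]]


def extract_hand_images(
    frames: Iterable[Frame],
    detect_hand: Callable[[Frame], Optional[Box]],
) -> List[Tuple[int, Frame]]:
    """Run the detector on every frame and keep (frame index, hand crop) pairs.

    Frames where the detector reports no hand, or where the clamped box
    is empty, are skipped.
    """
    hands = []
    for index, frame in enumerate(frames):
        box = detect_hand(frame)
        if box is None:
            continue
        hand = crop_hand(frame, box)
        if hand and hand[0]:
            hands.append((index, hand))
    return hands


def nonzero_box_detector(frame: Frame) -> Optional[Box]:
    """Stand-in detector (NOT Mobilenet-SSD): bounding box of all non-zero pixels."""
    points = [(x, y) for y, row in enumerate(frame) for x, v in enumerate(row) if v]
    if not points:
        return None
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


if __name__ == "__main__":
    demo_frames = [
        [[0, 0, 0, 0], [0, 5, 6, 0], [0, 7, 8, 0]],  # hand region in the middle
        [[0, 0], [0, 0]],                            # no hand detected
    ]
    print(extract_hand_images(demo_frames, nonzero_box_detector))
```

Passing the detector in as a callable keeps the cropping logic independent of the detection model, so the stand-in can be swapped for any detector that returns a box in the same assumed format.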
