Image processing method and device

An image processing technology, applied in the field of robotics, that addresses problems such as time-consuming data collection, large data volumes, and physical wear on robots.



Examples

Embodiment 1

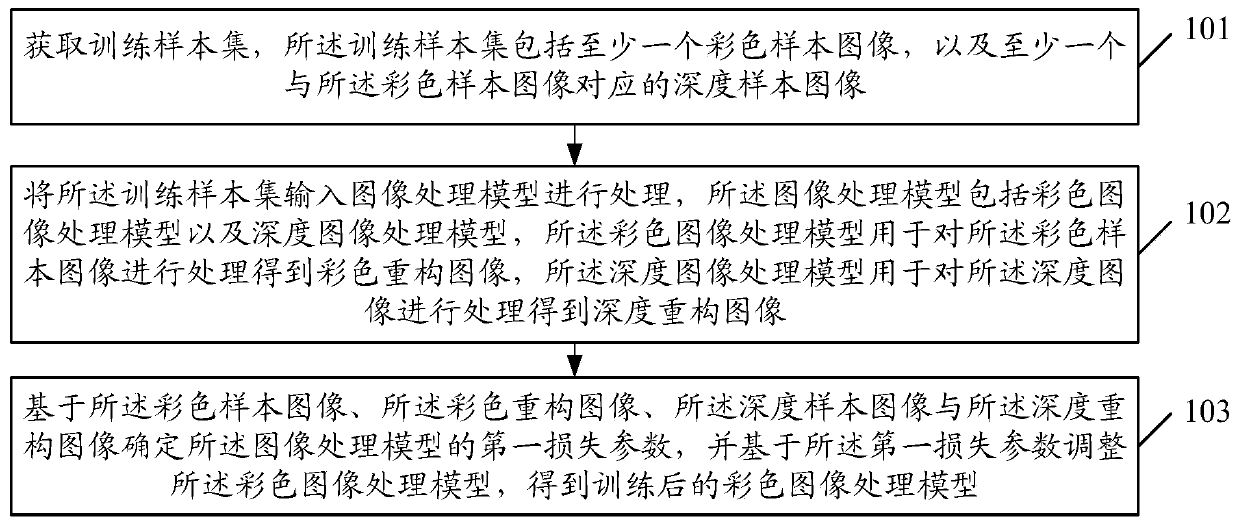

[0062] As shown in Figure 1, the image processing method may specifically include the following steps:

[0063] Step 101: Obtain a training sample set, where the training sample set includes at least one color sample image and at least one depth sample image corresponding to the color sample image;
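The training sample set of Step 101 can be pictured as a list of paired records. The sketch below is a minimal illustration, not the patented implementation: images are assumed to be nested Python lists, each color image and its depth image are assumed to be pixel-aligned (as from an RGB-D camera), and the names `TrainingSample` and `build_training_set` are hypothetical. The optional `state_label` field anticipates the robot state labels introduced in Embodiment 2.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence

# Hypothetical image representations: nested lists of floats.
ColorImage = List[List[List[float]]]  # H x W x 3 (e.g. RGB)
DepthImage = List[List[float]]        # H x W, one depth value per pixel


@dataclass
class TrainingSample:
    color: ColorImage
    depth: DepthImage                           # depth image corresponding to `color`
    state_label: Optional[List[float]] = None   # robot state (used in Embodiment 2)


def build_training_set(colors: Sequence[ColorImage],
                       depths: Sequence[DepthImage],
                       labels: Optional[Sequence[List[float]]] = None
                       ) -> List[TrainingSample]:
    """Pair each color sample image with its depth sample image (and optional label)."""
    if len(colors) == 0:
        raise ValueError("need at least one color sample image")
    if len(colors) != len(depths):
        raise ValueError("each color image needs a corresponding depth image")
    if labels is not None and len(labels) != len(colors):
        raise ValueError("each color image needs a corresponding state label")
    samples = []
    for i, (c, d) in enumerate(zip(colors, depths)):
        # Assumption: the depth image covers the same pixel grid as its color image.
        if len(c) != len(d) or any(len(cr) != len(dr) for cr, dr in zip(c, d)):
            raise ValueError(f"sample {i}: color and depth sizes differ")
        samples.append(TrainingSample(c, d, None if labels is None else labels[i]))
    return samples
```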

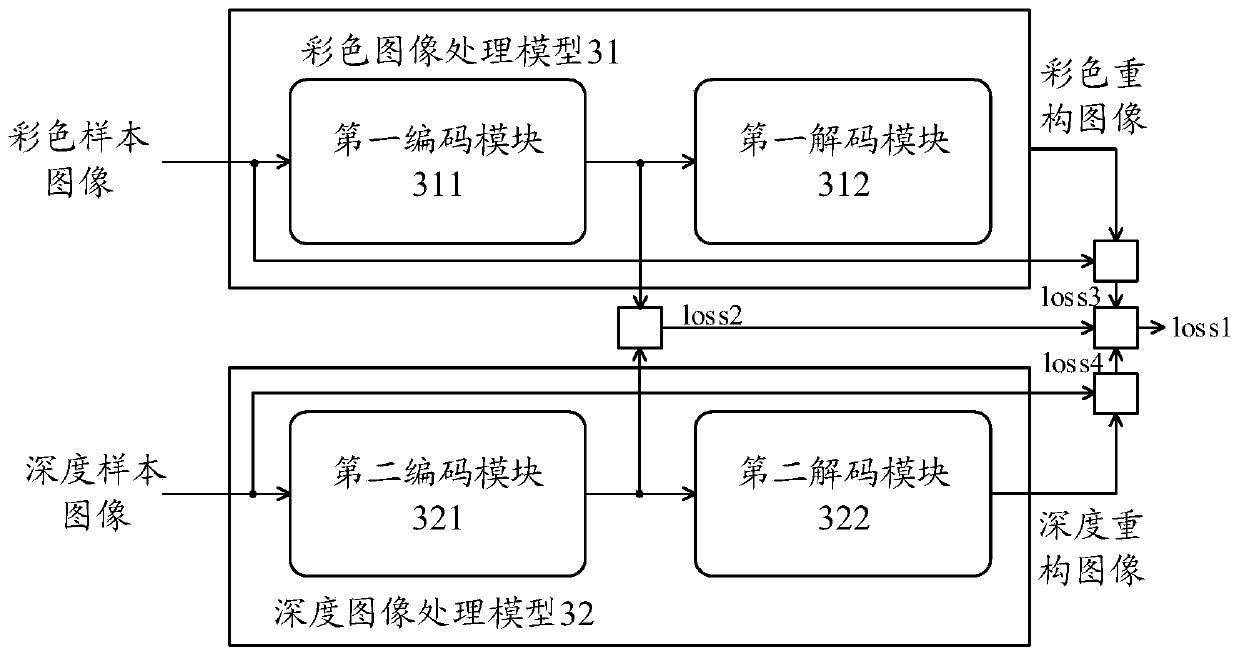

[0064] Step 102: Input the training sample set into an image processing model for processing. The image processing model includes a color image processing model and a depth image processing model; the color image processing model is used to process the color sample image to obtain a color reconstructed image, and the depth image processing model is used to process the depth sample image to obtain a depth reconstructed image;
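Step 102 routes each modality to its own model. As a hedged sketch, each model below is a tiny linear autoencoder (an encoding module followed by a decoding module, matching the module names used in Embodiments 2 and 3). The source does not specify the network architecture, so the linear maps, the flattened-vector inputs, and the names `LinearAutoencoder` and `process_sample_set` are all assumptions.

```python
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix `m` (rows x cols) by vector `v` (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LinearAutoencoder:
    """Hypothetical stand-in for one image processing model: an encoding
    module followed by a decoding module, both plain linear maps here."""

    def __init__(self, n_inputs: int, n_latent: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.enc = [[rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
                    for _ in range(n_latent)]
        self.dec = [[rng.uniform(-0.1, 0.1) for _ in range(n_latent)]
                    for _ in range(n_inputs)]

    def encode(self, x: Vector) -> Vector:
        return matvec(self.enc, x)

    def decode(self, z: Vector) -> Vector:
        return matvec(self.dec, z)

    def reconstruct(self, x: Vector) -> Vector:
        return self.decode(self.encode(x))


def process_sample_set(samples: Sequence[Tuple[Vector, Vector]],
                       color_model: LinearAutoencoder,
                       depth_model: LinearAutoencoder) -> List[Tuple[Vector, Vector]]:
    """Each model reconstructs only its own modality (flattened images)."""
    return [(color_model.reconstruct(color), depth_model.reconstruct(depth))
            for color, depth in samples]
```

Keeping the two models separate means each can be given an input size and latent size suited to its modality.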

[0065] Step 103: Determine a first loss parameter of the image processing model based on the color sample image, the color reconstructed image, the depth sample image, and the depth reconstructed image, and adjust, based on the first loss parameter, the color ...
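The exact form of the first loss parameter is elided in the source. One common choice, assumed here purely for illustration, is to add a reconstruction error for each modality; the sketch uses mean squared error and a hypothetical `depth_weight` to balance the two terms.

```python
from typing import Sequence


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equally sized, non-empty vectors."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("vectors must be non-empty and of equal length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def first_loss(color: Sequence[float], color_rec: Sequence[float],
               depth: Sequence[float], depth_rec: Sequence[float],
               depth_weight: float = 1.0) -> float:
    """Assumed form: color reconstruction error + weighted depth reconstruction error."""
    return mse(color, color_rec) + depth_weight * mse(depth, depth_rec)
```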

Embodiment 2

[0105] To better reflect the purpose of this application, Embodiment 1 is further illustrated as follows. As shown in Figure 4, after the trained color image processing model is obtained, the image processing method further includes:

[0106] Step 401: Input the color sample image into the first encoding module of the trained color image processing model, and output the encoded second color sample image;

[0107] Here, the trained color image processing model includes a first encoding module and a first decoding module.

[0108] Step 402: Determine a fifth loss parameter of the control model based on the encoded second color sample image and the state label of the robot;

[0109] In practical applications, the training sample set further includes at least one state label of the robot corresponding to the color sample image.
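Steps 401 and 402 can be sketched as follows, assuming the trained first encoding module is available as a function and the control model is a linear regressor from the encoded image to the robot state. Both the linear control model and the mean-squared-error form of the fifth loss parameter are assumptions; the names `control_predict` and `fifth_loss` are hypothetical.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def control_predict(weights: List[Vector], bias: Vector, z: Vector) -> Vector:
    """Hypothetical linear control model: predicts a robot state from a code."""
    return [sum(w * v for w, v in zip(row, z)) + b for row, b in zip(weights, bias)]


def fifth_loss(color_vectors: Sequence[Vector],
               state_labels: Sequence[Vector],
               encode: Callable[[Vector], Vector],
               weights: List[Vector],
               bias: Vector) -> float:
    """Mean squared error between predicted and labelled robot states (assumed form)."""
    if not color_vectors or len(color_vectors) != len(state_labels):
        raise ValueError("need one state label per color sample image")
    total = 0.0
    for x, label in zip(color_vectors, state_labels):
        z = encode(x)                             # step 401: trained first encoding module
        pred = control_predict(weights, bias, z)  # control model output
        total += sum((p - t) ** 2 for p, t in zip(pred, label)) / len(label)
    return total / len(color_vectors)
```

Only the encoder is needed here; the first decoding module plays no part in computing the control loss.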

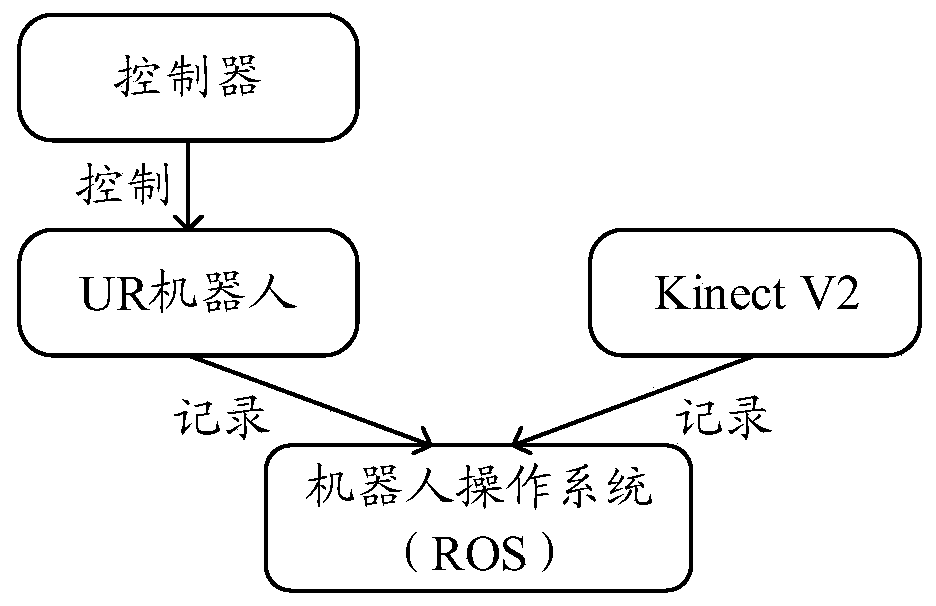

[0110] Specifically, the image acquisition device collects the color sample image and the depth samp...

Embodiment 3

[0122] To better reflect the purpose of this application, Embodiment 1 is further illustrated as follows. As shown in Figure 5, after the trained color image processing model and the trained control model are obtained, the image processing method further includes:

[0123] Step 501: Input the color sample image into the first encoding module of the trained color image processing model, and output the encoded second color sample image;

[0124] Here, the trained color image processing model includes a first encoding module and a first decoding module.

[0125] Step 502: Input the depth sample image into the second encoding module of the trained depth image processing model, and output the encoded second depth sample image;

[0126] Here, the trained depth image processing model includes a second encoding module and a second decoding module.
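Steps 501 and 502 apply the two trained encoding modules side by side. The minimal sketch below (hypothetical name `encode_both`, with the encoders passed in as plain functions) only produces the paired codes; how the source goes on to use them is truncated in this excerpt, so nothing further is assumed.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Encoder = Callable[[Vector], Vector]


def encode_both(color_vectors: Sequence[Vector],
                depth_vectors: Sequence[Vector],
                first_encoder: Encoder,
                second_encoder: Encoder) -> List[Tuple[Vector, Vector]]:
    """Encode each color/depth pair with the trained first and second encoders.

    The encoders are only called, never updated, so the trained color and
    depth image processing models are left unchanged."""
    if len(color_vectors) != len(depth_vectors):
        raise ValueError("each color sample image needs its depth sample image")
    return [(first_encoder(c), second_encoder(d))
            for c, d in zip(color_vectors, depth_vectors)]
```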

[0127] In practical applications, the first encoding module and the second encod...
