Improved target detection method based on residual network

Target detection and residual network technology, applied in the field of image recognition, that addresses the problems of weak feature extraction ability and poor detection results, while preserving real-time performance and keeping the amount of calculation small.

Embodiment Construction

[0049] Specific embodiments of the present invention are described below with reference to the accompanying drawings.

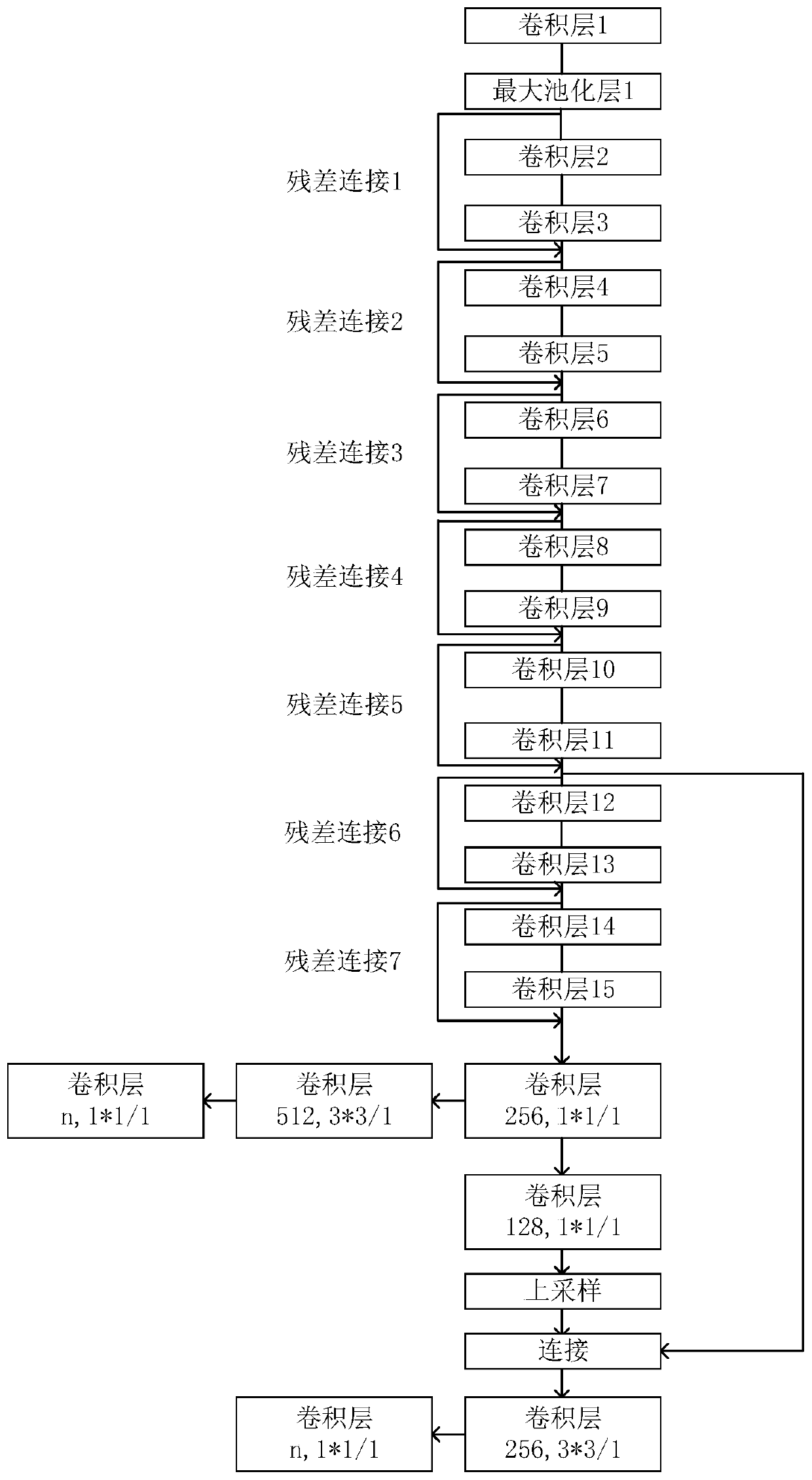

[0050] The principle of the YOLOV3-tiny algorithm is to extract features through successive convolution and other operations, and finally divide the picture into a 13*13 grid. For each grid cell, three anchor boxes are used to predict the detection box of an object whose center point falls in that grid cell.
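The grid assignment described in this paragraph can be illustrated with a minimal sketch. It assumes the object center is given in coordinates normalized to [0, 1); the names `responsible_cell` and `boxes_per_image` are hypothetical and are not taken from the patent or from any YOLO implementation. Only the 13*13 grid mentioned in the text is covered.

```python
GRID_SIZE = 13    # the picture is divided into a 13*13 grid
NUM_ANCHORS = 3   # three anchor boxes per grid cell


def responsible_cell(cx, cy, grid_size=GRID_SIZE):
    """Return (row, col) of the grid cell that contains the center point.

    Assumption: cx and cy are normalized image coordinates in [0, 1).
    """
    if not (0.0 <= cx < 1.0 and 0.0 <= cy < 1.0):
        raise ValueError("center coordinates must lie in [0, 1)")
    col = int(cx * grid_size)  # horizontal cell index
    row = int(cy * grid_size)  # vertical cell index
    return row, col


def boxes_per_image(grid_size=GRID_SIZE, num_anchors=NUM_ANCHORS):
    """Number of candidate detection boxes predicted on one grid."""
    return grid_size * grid_size * num_anchors
```

Under these assumptions, an object centered in the middle of the picture is assigned to cell (6, 6), and the 13*13 grid yields 13 * 13 * 3 = 507 candidate boxes.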

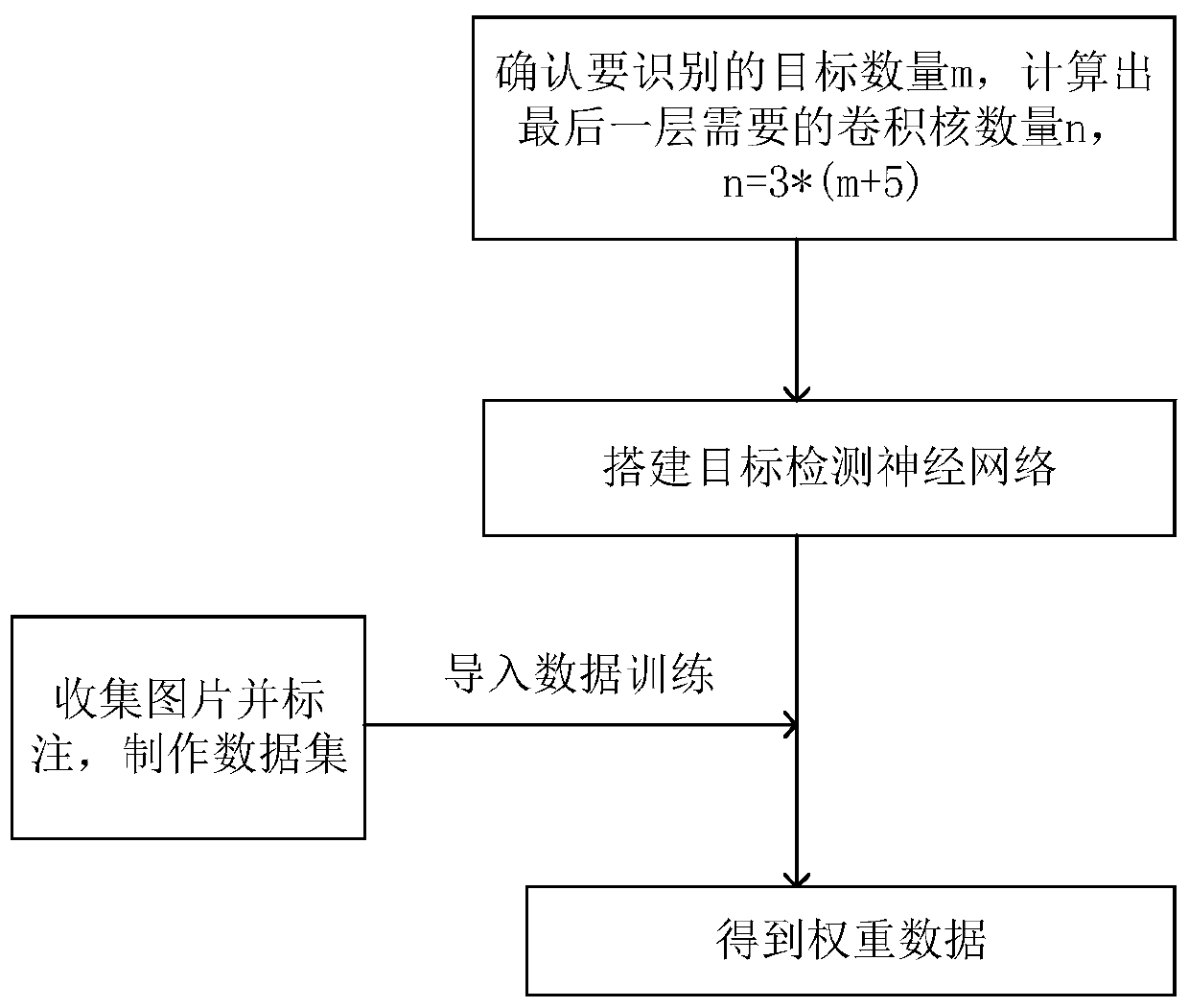

[0051] The overall flow chart of the present invention is shown in Figure 1.

[0052] The first step is to confirm the number of target classes to be recognized, m. The number of filters in the last layer is then n=3*(m+5), where "3" represents the 3 anchor boxes and "5" represents the 5 quantities of the detection box: the x and y coordinates of the center point, the width, the height, and the confidence.
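As a worked example of the formula n=3*(m+5), the following sketch computes the filter count for a given m. The function name `last_layer_filters` and its parameter names are hypothetical, chosen for illustration only.

```python
def last_layer_filters(m, num_anchors=3):
    """Number of filters in the last layer: n = num_anchors * (m + 5).

    m is the number of targets to be recognized; the 5 extra values per
    anchor box are center x, center y, width, height, and confidence.
    """
    if m < 1:
        raise ValueError("m must be at least 1")
    return num_anchors * (m + 5)
```

For instance, recognizing a single target gives n = 3 * (1 + 5) = 18 filters, and m = 20 gives n = 75.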

[0053] The second step is to collect pictures containing the target, mark the position of the target in each picture, and form a data set ...
