Image description method based on convolutional neural network, computer readable storage medium and electronic equipment

A convolutional neural network and image description technology, applied to neural learning methods, biological neural network models, computing, etc. It addresses the problem that time-consuming recurrent computation cannot process sequence signals in parallel, and achieves accurate description, improved computing efficiency, and accurate representation of image content.
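The parallelism claim can be made concrete with the standard textbook formulations of the two layer types. The notation below (input $x_t$, hidden state $h_t$, weights $W$, bias $b$, nonlinearity $f$, kernel width $k$) is generic and assumed for illustration; it is not taken from the patent. A recurrent layer computes

$$h_t = f\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right), \qquad t = 1, \dots, T,$$

so $h_t$ cannot be evaluated until $h_{t-1}$ is known, which forces $T$ sequential steps. A causal convolutional layer with kernel width $k$ computes

$$y_t = f\left(\sum_{i=0}^{k-1} W_i\, x_{t-i} + b\right), \qquad x_{t-i} = 0 \ \text{for}\ t - i < 1,$$

which depends only on inputs, never on earlier outputs, so all $T$ positions can be evaluated at once. Stacking $L$ such layers gives a receptive field of $L(k-1) + 1$ words; for example, $k = 3$ and $L = 4$ cover $4 \cdot 2 + 1 = 9$ words.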


Embodiment Construction

[0061] The content of the present invention is described in further detail below with reference to the accompanying drawings and specific embodiments:

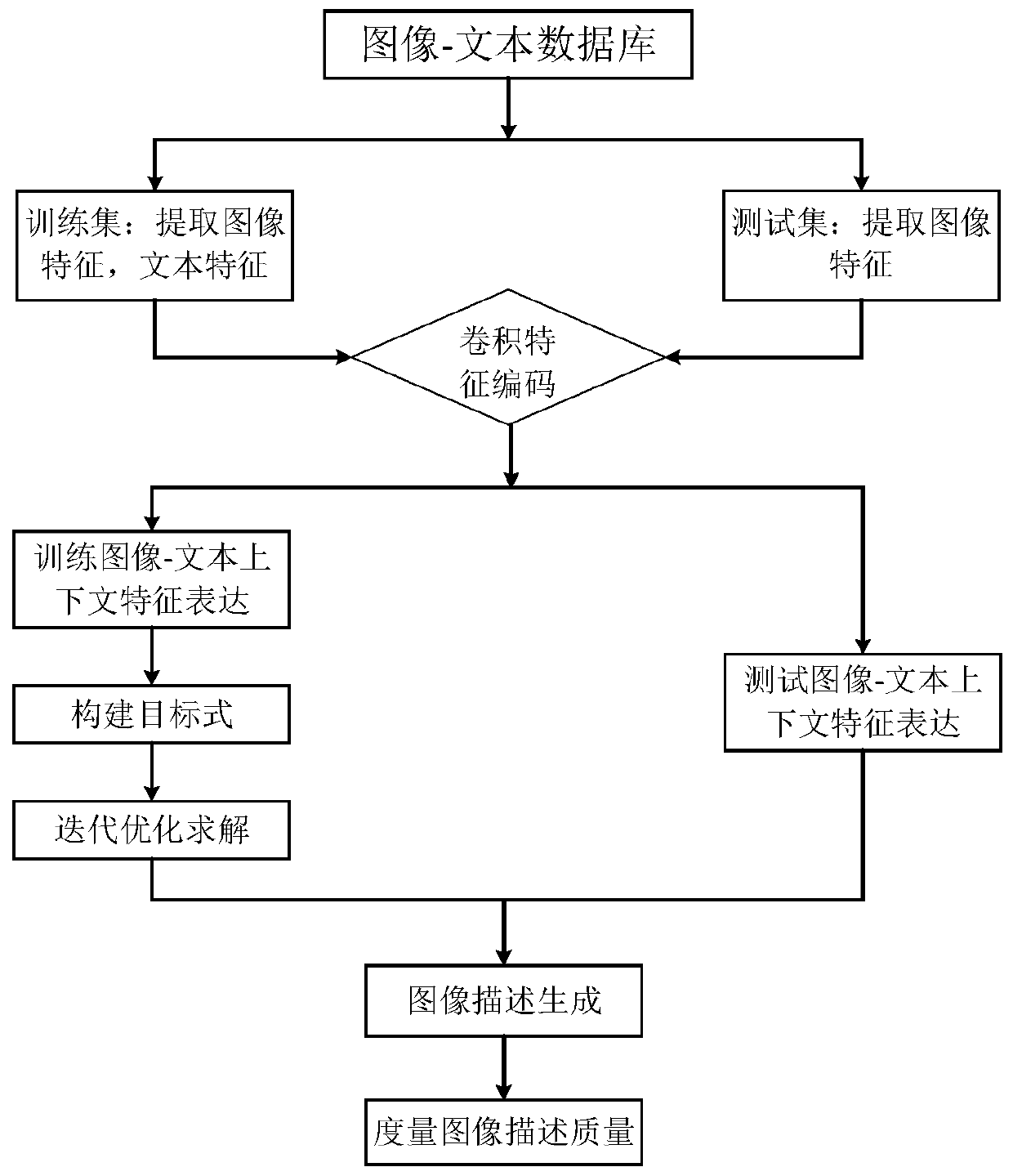

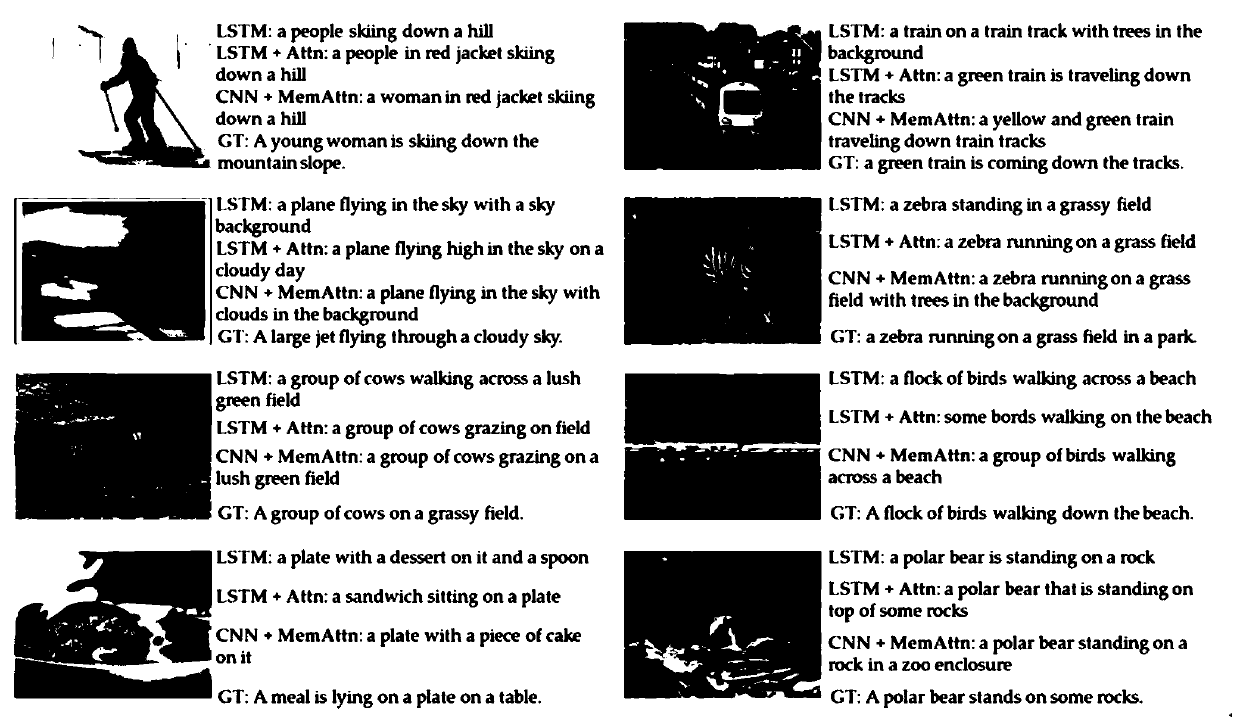

[0062] The invention discloses a method for image description (also called image captioning or sentence generation) based on a convolutional neural network, which automatically generates a passage of descriptive text from a picture. It mainly solves the problem that the existing recurrent neural network (RNN) method cannot process sequence signals in parallel. The implementation steps are: (1) pre-train the convolutional neural network on the ImageNet dataset; (2) use the pre-trained convolutional neural network to extract global features and local features from the image-text dataset; (3) input the image features and description-sentence features of the image-text training set into the multimodal recurrent neural network to learn the mapping relationship between image and text; (...
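As a rough illustration of steps (2) and (3), and of why a convolutional sentence model can be parallelised where an RNN cannot, here is a minimal Python sketch under stated assumptions. It is not the patented method: the excerpt gives no layer sizes, kernel width, or fusion rule, so the causal convolution, the mean-pool-and-concatenate fusion, and the names `causal_conv1d`, `fuse`, and `rand_matrix` are hypothetical. Random vectors stand in for the ImageNet-pretrained CNN features of step (1). The sketch uses a convolution for the sentence branch, following the title and the stated aim of avoiding sequential processing; since step (3) of the excerpt names a multimodal recurrent network, treat that layer choice as an assumption.

```python
import math
import random


def matvec(w, x):
    """Multiply matrix w (list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def rand_matrix(rows, cols, rng):
    """Hypothetical helper: small random weights standing in for trained ones."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def causal_conv1d(seq, kernels, bias):
    """Causal 1-D convolution over a sentence (assumed sentence model).

    seq: T word vectors; kernels[i]: matrix applied to x_{t-i}.
    Output t depends only on inputs t-k+1..t (zero-padded on the left),
    never on earlier outputs, so every t could be computed in parallel.
    """
    out = []
    for t in range(len(seq)):  # iterations share no state with each other
        acc = list(bias)
        for i, w in enumerate(kernels):
            if t - i < 0:
                continue  # zero padding before the first word
            contrib = matvec(w, seq[t - i])
            acc = [a + c for a, c in zip(acc, contrib)]
        out.append([math.tanh(a) for a in acc])
    return out


def fuse(global_feat, local_feats, text_feats):
    """Hypothetical fusion: mean-pool local (region) features, concatenate
    with the global feature, then append that image vector to every text step."""
    n, d = len(local_feats), len(local_feats[0])
    pooled = [sum(f[j] for f in local_feats) / n for j in range(d)]
    image_vec = list(global_feat) + pooled
    return [image_vec + h for h in text_feats]


def softmax(z):
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


if __name__ == "__main__":
    rng = random.Random(0)
    T, D_EMB, D_HID, K, VOCAB = 5, 8, 6, 3, 10
    # Random stand-ins for word embeddings and CNN image features (step 2).
    words = [[rng.uniform(-1, 1) for _ in range(D_EMB)] for _ in range(T)]
    global_feat = [rng.uniform(-1, 1) for _ in range(4)]
    local_feats = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(3)]

    # Sentence features, then image-text fusion (step 3, assumed form).
    kernels = [rand_matrix(D_HID, D_EMB, rng) for _ in range(K)]
    text_feats = causal_conv1d(words, kernels, [0.0] * D_HID)
    fused = fuse(global_feat, local_feats, text_feats)

    # Project each fused vector to next-word probabilities over a toy vocabulary.
    w_out = rand_matrix(VOCAB, len(fused[0]), rng)
    next_word_probs = [softmax(matvec(w_out, f)) for f in fused]
    print(len(next_word_probs), len(next_word_probs[0]))  # 5 10
```

Because each output position reads only input vectors, the loop over `t` in `causal_conv1d` carries no state from one iteration to the next; in a deep-learning framework that loop would typically become a single batched convolution call, which is where the efficiency gain over a recurrent layer would come from.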
