Voice synthesis model training method, and voice synthesis method and device

A speech synthesis and model training technology, applied in the computer field, that addresses unsatisfactory speech synthesis, a complicated training process, and flat sound quality, and that reduces pronunciation errors, ensures the correct rate, and improves accuracy.



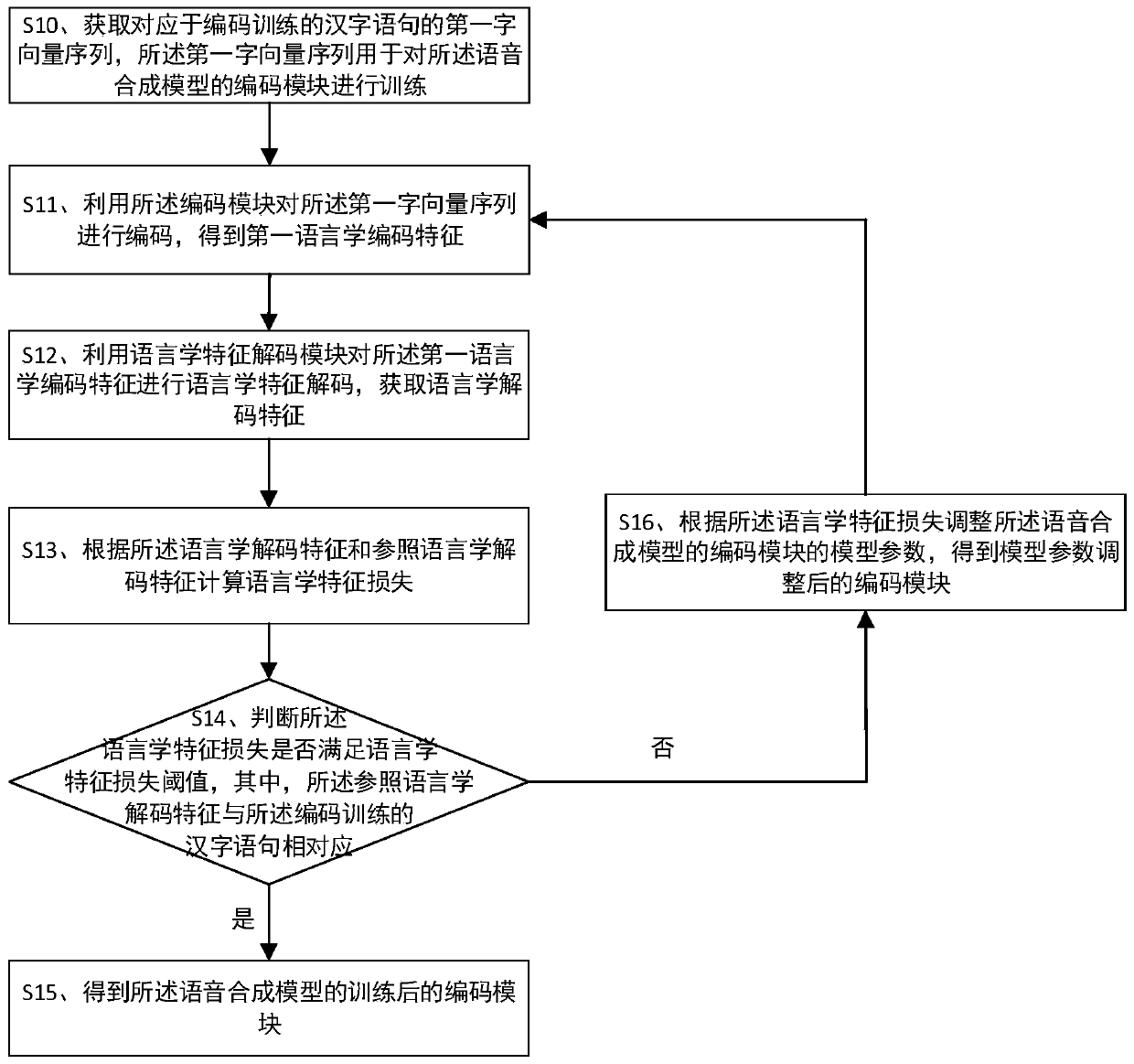

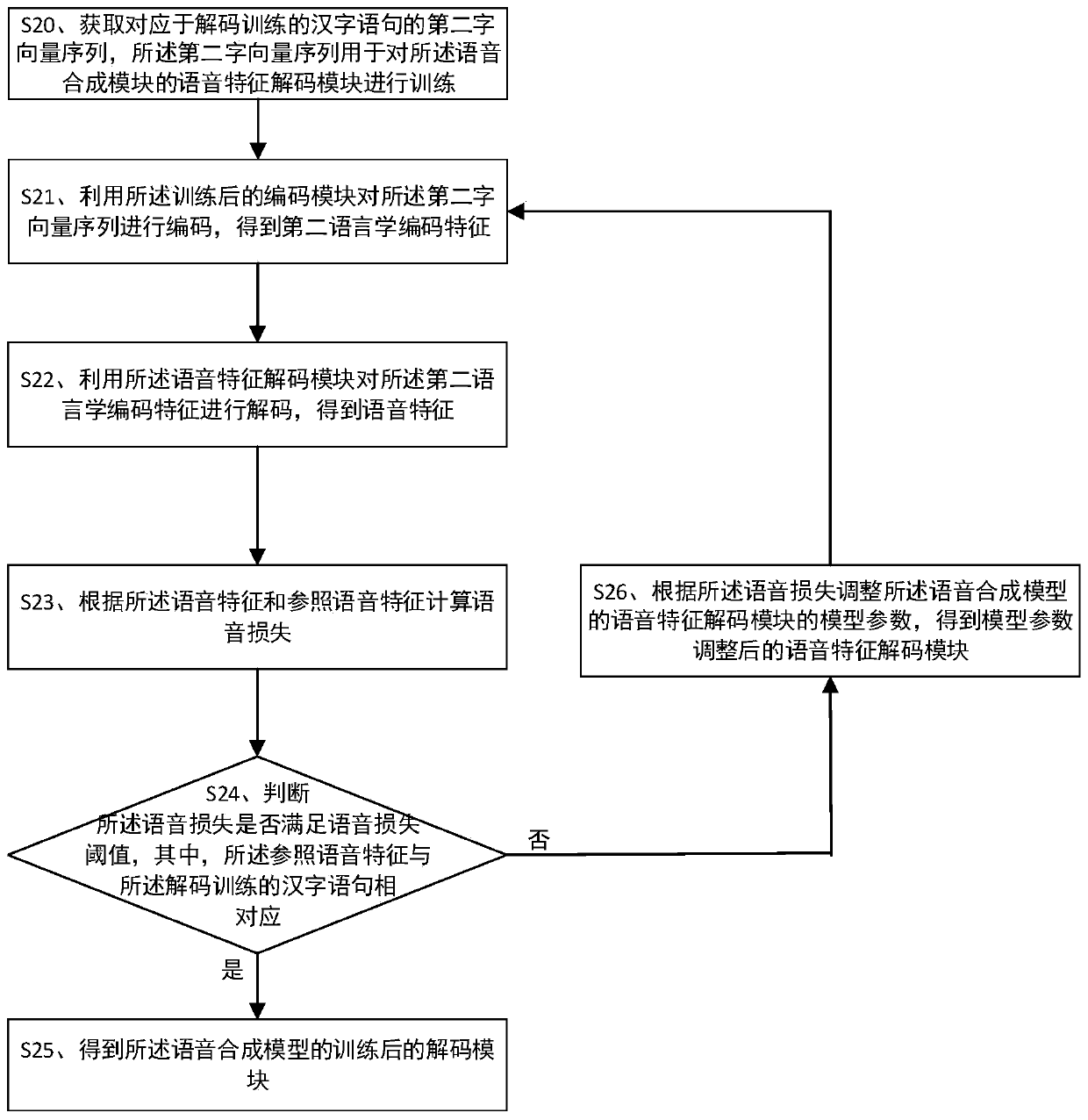

[0114] As shown in the figure, the speech synthesis method provided by the embodiment of the present invention includes:

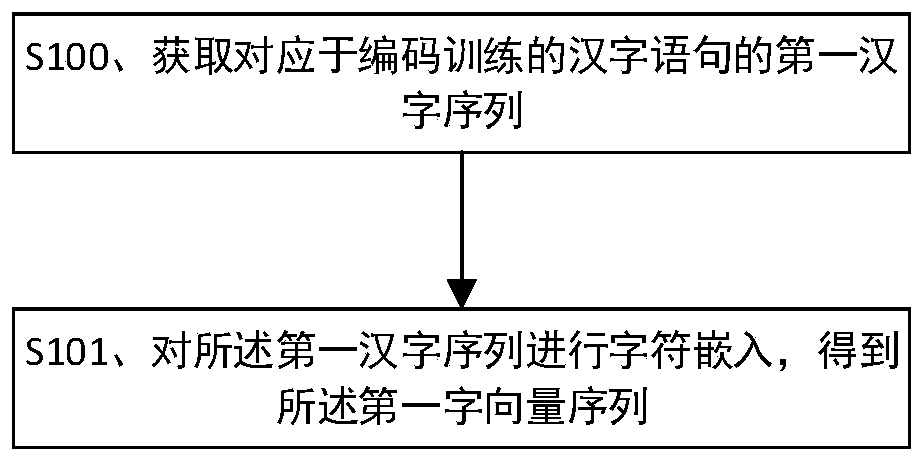

[0115] Step S30: Obtain the third character vector sequence of the Chinese sentence to be synthesized into speech.

[0116] For the details of step S30, refer to step S10 in Figure 1; they are not repeated here.
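Step S10, and therefore step S30, is not reproduced in this excerpt. As a hedged illustration only, a character vector sequence is commonly obtained by mapping each Chinese character to an index in a character vocabulary and looking up the matching row of an embedding table. In the sketch below, the vocabulary construction, the `<unk>` fallback token, the embedding dimension, and the function names `build_vocab`, `init_embeddings`, and `char_vector_sequence` are all hypothetical; in a trained model the table would be learned rather than randomly initialized.

```python
import random
from typing import Dict, List

UNK = "<unk>"  # hypothetical fallback token for characters missing from the vocabulary


def build_vocab(corpus: List[str]) -> Dict[str, int]:
    """Assign an integer index to every distinct character (index 0 is reserved for UNK)."""
    vocab = {UNK: 0}
    for sentence in corpus:
        for ch in sentence:
            if ch not in vocab:
                vocab[ch] = len(vocab)
    return vocab


def init_embeddings(vocab_size: int, dim: int, seed: int = 0) -> List[List[float]]:
    """Randomly initialized embedding table; a trained model would learn these values."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]


def char_vector_sequence(sentence: str, vocab: Dict[str, int],
                         table: List[List[float]]) -> List[List[float]]:
    """Return one embedding vector per character of the sentence, in order."""
    return [table[vocab.get(ch, vocab[UNK])] for ch in sentence]


vocab = build_vocab(["今天天气很好"])
table = init_embeddings(len(vocab), dim=4)
seq = char_vector_sequence("天气好吗", vocab, table)
print(len(seq), len(seq[0]))  # 4 characters, 4 dimensions each
```

Here "吗" is not in the toy vocabulary, so it falls back to the `<unk>` row; a real system would need a policy for such out-of-vocabulary characters.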

[0117] Step S31: Encode the third character vector sequence using the trained encoding module to obtain a third linguistic encoding feature.

[0118] After the third character vector sequence is obtained, the trained encoding module encodes it to obtain the third linguistic encoding feature.

[0119] For the details of step S31, refer to step S11 in Figure 1; they are not repeated here.
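The architecture of the trained encoding module is not described in this excerpt. The sketch below uses a deliberately simple stand-in, assumed for illustration only: each position averages its own character vector with its immediate neighbours (a context window of one character on each side) and applies a linear layer followed by `tanh`, producing one linguistic encoding feature per character. The weights are fixed toy values rather than trained parameters, and the names `encode`, `weight`, and `bias` are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def matvec(weight: List[Vector], x: Vector) -> Vector:
    """Multiply an (out_dim x in_dim) matrix by a vector of length in_dim."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight]


def encode(char_vectors: List[Vector], weight: List[Vector], bias: Vector) -> List[Vector]:
    """Toy context-window encoder: one linguistic encoding feature per character.

    For position t, average the vectors at t-1, t and t+1 (clipped at the
    sentence boundaries), then apply tanh(W @ context + b).
    """
    n = len(char_vectors)
    features = []
    for t in range(n):
        window = char_vectors[max(0, t - 1): min(n, t + 2)]
        dim = len(window[0])
        context = [sum(v[i] for v in window) / len(window) for i in range(dim)]
        projected = matvec(weight, context)
        features.append([math.tanh(p + b) for p, b in zip(projected, bias)])
    return features


# Three 2-dimensional character vectors encoded into 3-dimensional features.
chars = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[0.5, -0.5], [0.1, 0.2], [0.0, 1.0]]
b = [0.0, 0.1, -0.1]
feats = encode(chars, W, b)
print(len(feats), len(feats[0]))  # 3 3
```

A trained encoder (for example a convolutional or recurrent network) would replace the fixed averaging window with learned context modelling; the point of the sketch is only the input/output contract of step S31.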

[0120] Step S32: Decode the third linguistic encoding feature using the trained speech feature decoding module to obtain a third speech feature.

[0121] After the third linguistic encoding feature is obtained, the trained speech...
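The description of how the trained speech feature decoding module works is cut off in this excerpt. Purely as an assumed illustration, the sketch below follows a duration-based (length-regulator) approach that the source does not state: each character's linguistic encoding feature is projected linearly to an acoustic frame, such as one row of a mel-spectrogram, and that frame is repeated for a hypothetical number of time steps. The durations, the projection weights, and the function name `decode` are all hypothetical; an attention-based autoregressive decoder would be an equally plausible design.

```python
from typing import List

Vector = List[float]


def decode(features: List[Vector], durations: List[int],
           weight: List[Vector], bias: Vector) -> List[Vector]:
    """Toy duration-based decoder producing one speech feature frame per time step.

    features:  one linguistic encoding feature per character
    durations: hypothetical number of output frames for each character
    weight:    (n_acoustic x enc_dim) projection matrix
    """
    if len(features) != len(durations):
        raise ValueError("need exactly one duration per character")
    frames = []
    for feat, dur in zip(features, durations):
        # Project the character's encoding to one acoustic frame, then
        # repeat that frame `dur` times (length regulation).
        frame = [sum(w * f for w, f in zip(row, feat)) + b_i
                 for row, b_i in zip(weight, bias)]
        frames.extend([list(frame) for _ in range(dur)])
    return frames


enc = [[0.2, -0.4], [0.6, 0.1]]  # two characters, 2-dimensional encodings
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # project to 3 acoustic dimensions
b = [0.0, 0.0, 0.0]
mel = decode(enc, durations=[2, 3], weight=W, bias=b)
print(len(mel), len(mel[0]))  # 5 3
```

The output length is the sum of the durations rather than the number of characters, which is why speech features usually outnumber the input characters.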
