Method for fusing multi-channel video with a three-dimensional GIS scene

A method for fusing multi-channel video with a 3D GIS scene, applied in the field of video and 3D GIS scene fusion, with the effect of improving rapid positioning.

Examples

Embodiment 1

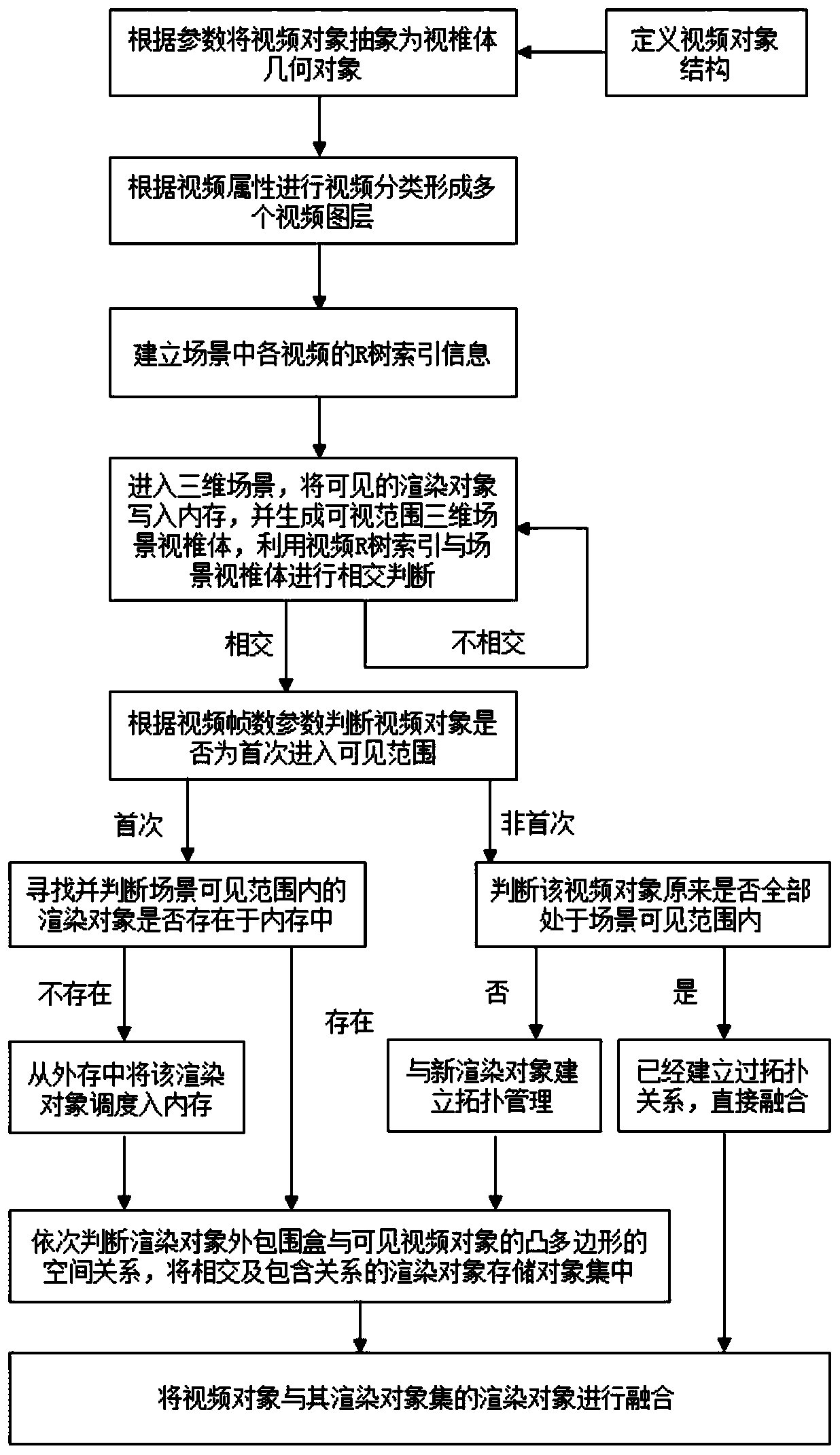

[0036] As shown in Figure 1, this embodiment provides a method for fusing multi-channel video with a 3D GIS scene, comprising the following steps:

[0037] S1. Define the data structure of the video object, and assign initial values to the parameters of each video object;

[0038] S2. Determine the spatial position, attitude, and observable area of each video object's camera in the scene, and abstract the video object into a frustum geometric object based on this information;

[0039] S3. Classify all frustum geometric objects in the scene according to the attribute information of their cameras, forming multiple video layers; one example of such attribute information is the camera's ownership attribute: public security, urban management, traffic, etc.;

[0040] S4. Establish an R-tree index of all video objects under each video layer in the scene;

[0041] S5. Enter the visible range of the 3D scene, store the ...
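Steps S1 to S4 can be illustrated with a minimal Python sketch. Everything named here is an assumption made for illustration and does not come from the patent text: the `VideoObject` fields, the z-up coordinate convention, the yaw/pitch parameterisation of the attitude, and the helper names. The per-layer R-tree of S4 is replaced by a plain linear scan over 2D bounding boxes so that the example stays self-contained; a real spatial index would take its place in practice.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class VideoObject:
    """Hypothetical video-object record for S1; every field name is an assumption."""
    camera_id: str
    owner: str                          # ownership attribute used for layering in S3
    position: tuple = (0.0, 0.0, 0.0)   # camera location in scene coordinates (z up)
    yaw_deg: float = 0.0                # heading, counter-clockwise from the +x axis
    pitch_deg: float = 0.0              # positive values tilt the view upward
    h_fov_deg: float = 60.0             # horizontal field of view
    v_fov_deg: float = 40.0             # vertical field of view
    near: float = 0.5                   # near clipping distance
    far: float = 100.0                  # far limit of the observable area


def frustum_corners(v):
    """S2: return the 8 frustum corners, near plane first, then far plane."""
    yaw, pitch = math.radians(v.yaw_deg), math.radians(v.pitch_deg)
    cy, sy, cp, sp = math.cos(yaw), math.sin(yaw), math.cos(pitch), math.sin(pitch)
    fwd = (cp * cy, cp * sy, sp)        # viewing direction
    right = (sy, -cy, 0.0)              # horizontal axis of the image plane
    up = (-cy * sp, -sy * sp, cp)       # cross product of right and fwd
    corners = []
    for d in (v.near, v.far):
        half_w = d * math.tan(math.radians(v.h_fov_deg) / 2)
        half_h = d * math.tan(math.radians(v.v_fov_deg) / 2)
        for sx, sz in ((-1, -1), (1, -1), (1, 1), (-1, 1)):
            corners.append(tuple(
                v.position[i] + d * fwd[i] + sx * half_w * right[i] + sz * half_h * up[i]
                for i in range(3)))
    return corners


def footprint(v):
    """2D bounding box (min_x, min_y, max_x, max_y) of the frustum corners."""
    xs, ys, _ = zip(*frustum_corners(v))
    return (min(xs), min(ys), max(xs), max(ys))


def build_layers(videos):
    """S3: group video objects into layers keyed by the ownership attribute."""
    layers = defaultdict(list)
    for v in videos:
        layers[v.owner].append(v)
    return dict(layers)


def query_layer(layer, box):
    """Linear-scan stand-in for the per-layer R-tree lookup of S4 (not an R-tree)."""
    qx0, qy0, qx1, qy1 = box
    hits = []
    for v in layer:
        x0, y0, x1, y1 = footprint(v)
        if x0 <= qx1 and qx0 <= x1 and y0 <= qy1 and qy0 <= y1:
            hits.append(v.camera_id)
    return hits
```

Calling `build_layers` on a list of `VideoObject` instances and then `query_layer(layers["traffic"], box)` returns the identifiers of the cameras in that layer whose frustum footprint overlaps the query box. The scan costs time linear in the number of cameras per layer, which is the cost an R-tree index is meant to avoid.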

Embodiment 2

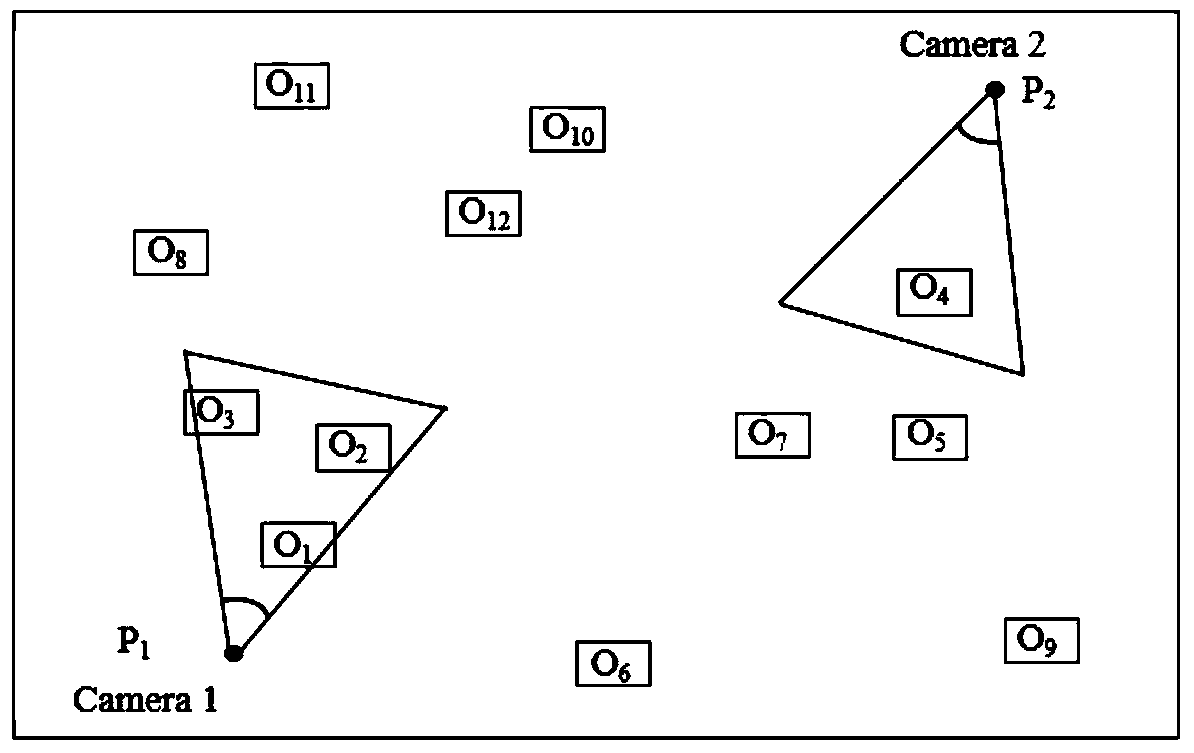

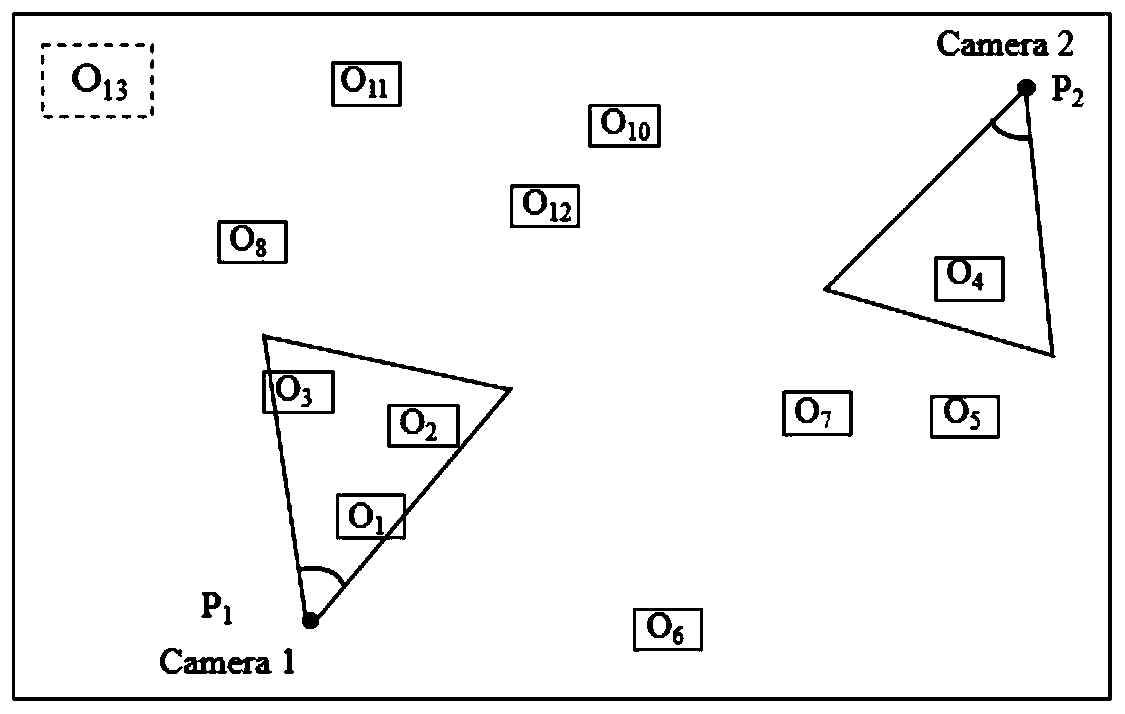

[0064] As shown in Figures 2 to 7, this embodiment compares the fusion effect of the present invention with that of the original virtual-real fusion method based on video projection.

[0065] In this embodiment, the original virtual-real fusion method based on video projection refers to projecting video frame images into a 3D scene using projective texture mapping. This is similar to adding a slide projector to the 3D GIS scene: the frame's georeferencing information is used to position and orient the projector, which then projects images onto objects in the scene. The method mainly comprises two steps:

[0066] Step 1: Determining which objects within the video range are to be fused and rendered is the most basic and critical step in correctly fusing video with the 3D GIS scene. (1) First, set a virtual depth camera at the position of the camera, and set the pose of the depth camera according to the coordinates of the camera, the ...
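The slide-projector analogy in paragraph [0065] can be made concrete with a small Python sketch that maps a scene point to texture coordinates in the video frame. This is an illustrative pinhole model under stated assumptions, not the procedure from the patent: the function names, the z-up convention, the yaw/pitch attitude, and the field-of-view parameters are all hypothetical.

```python
import math


def camera_basis(yaw_deg, pitch_deg):
    """Forward, right, and up unit vectors for a z-up scene (assumed convention)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    cy, sy, cp, sp = math.cos(yaw), math.sin(yaw), math.cos(pitch), math.sin(pitch)
    fwd = (cp * cy, cp * sy, sp)
    right = (sy, -cy, 0.0)
    up = (-cy * sp, -sy * sp, cp)
    return fwd, right, up


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project_to_frame(point, cam_pos, yaw_deg, pitch_deg, h_fov_deg, v_fov_deg):
    """Return (u, v, depth) for a scene point, or None if the projector cannot see it.

    u and v lie in [0, 1], with v = 0 at the top row of the video frame.
    depth is the distance along the viewing direction.
    """
    fwd, right, up = camera_basis(yaw_deg, pitch_deg)
    rel = [point[i] - cam_pos[i] for i in range(3)]
    depth = dot(rel, fwd)
    if depth <= 0:                      # point is behind the projector
        return None
    # Normalised image-plane coordinates in [-1, 1] when inside the field of view.
    x = dot(rel, right) / (depth * math.tan(math.radians(h_fov_deg) / 2))
    y = dot(rel, up) / (depth * math.tan(math.radians(v_fov_deg) / 2))
    if abs(x) > 1 or abs(y) > 1:        # outside the projected frame
        return None
    return ((x + 1) / 2, (1 - y) / 2, depth)
```

A point straight ahead of the projector lands at the centre of the frame, while points behind it or outside the field of view return `None`. The returned `depth` is the kind of value that could be compared against a depth image rendered from the same pose to reject occluded surfaces, as in shadow mapping; whether the patent's depth camera is used in exactly this way is not stated in the text available here.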
