Multi-model knowledge distillation method and device, electronic equipment and storage medium

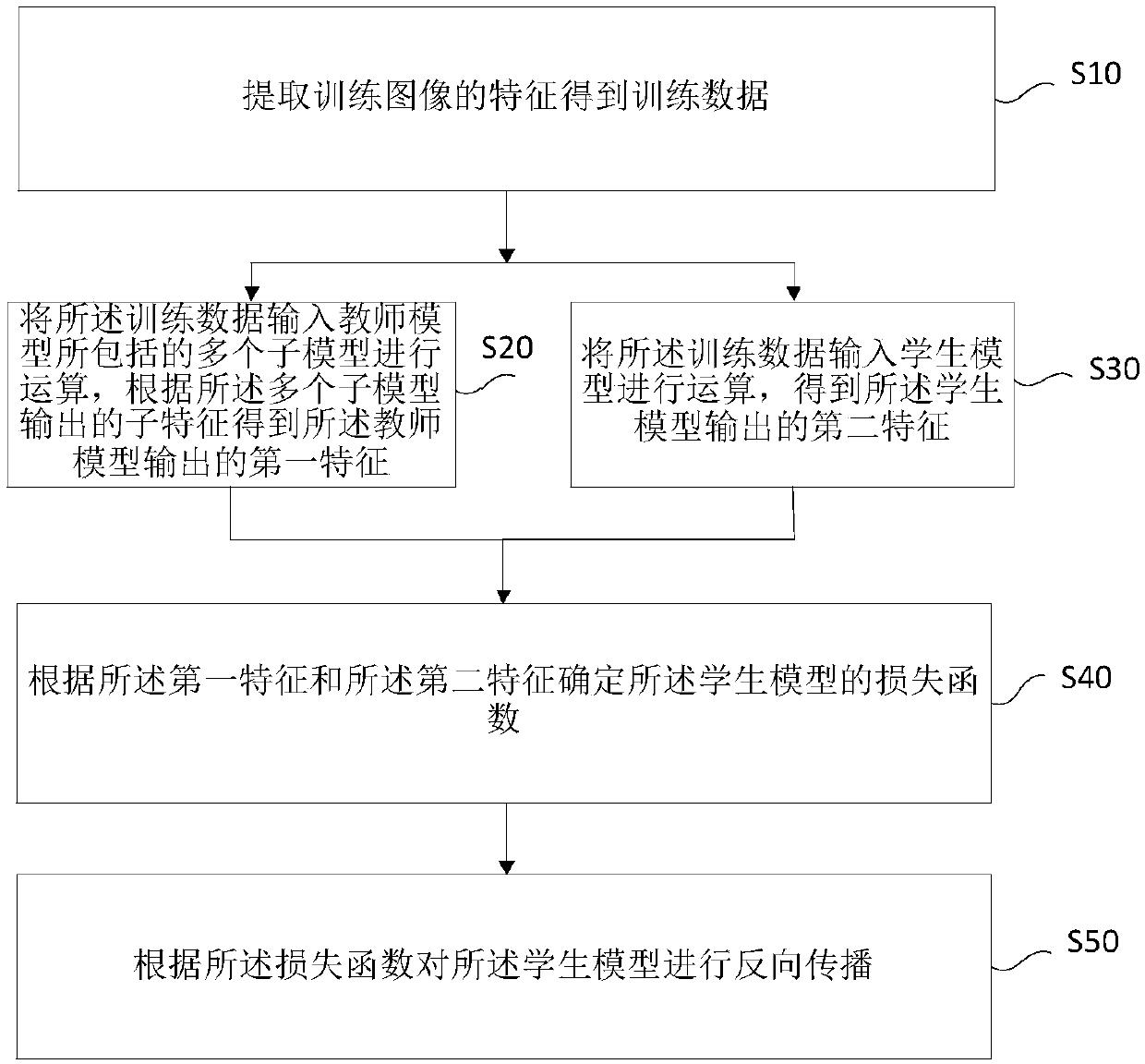

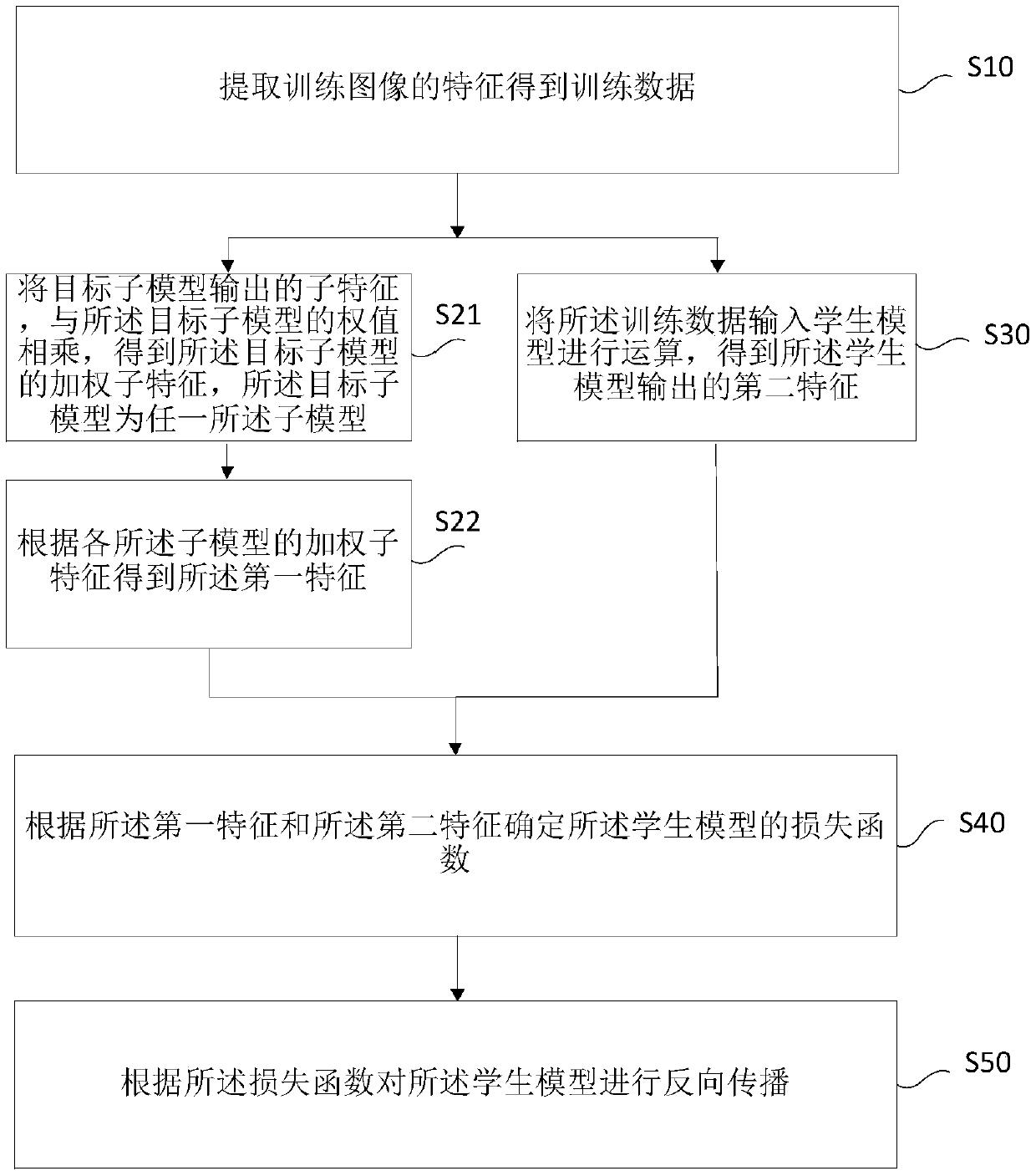

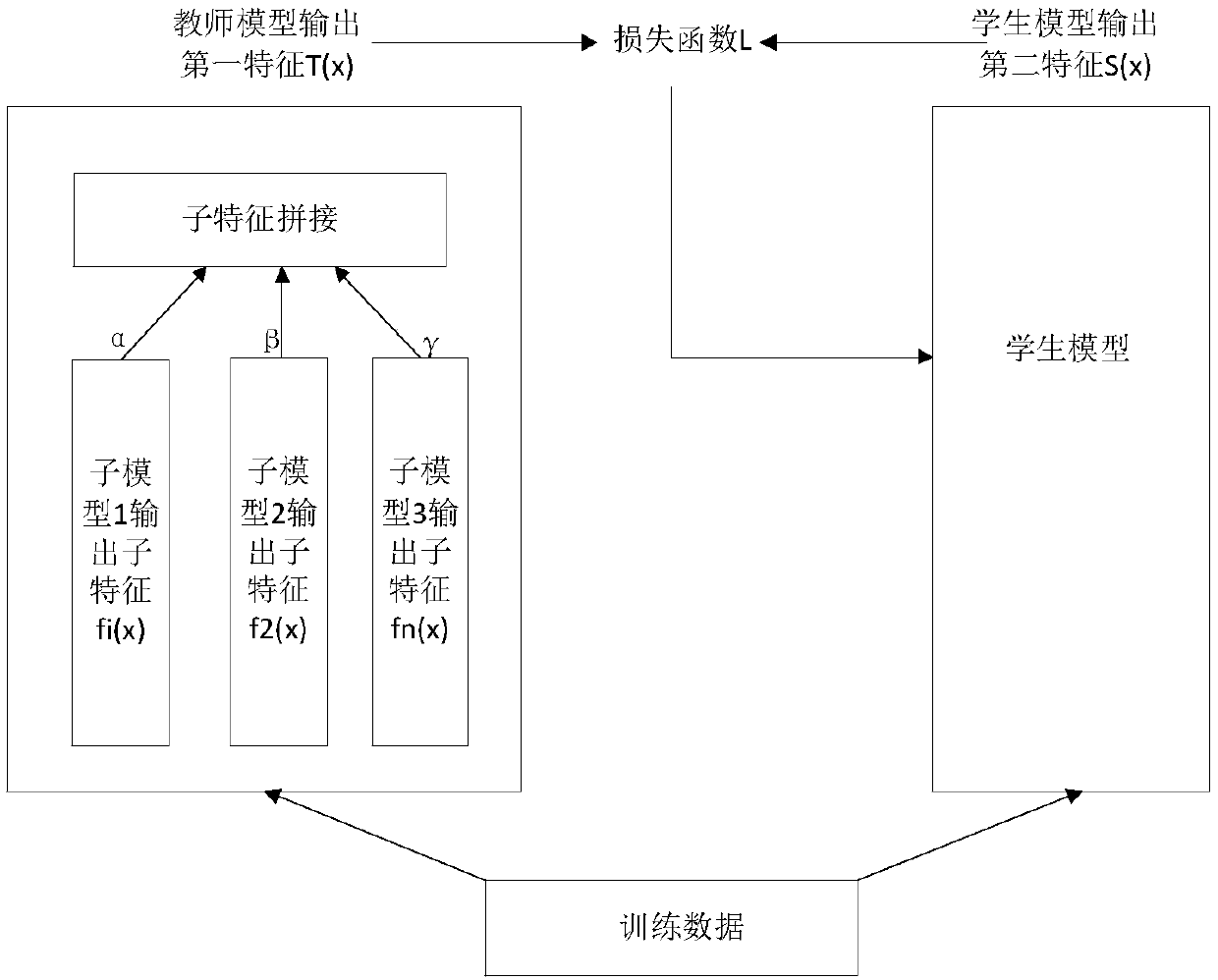

A distillation method and multi-model technology, applied in the field of machine learning, can solve the problems of long consumption, high occupation and low efficiency, and achieve the effect of overcoming limited expression ability and improving model accuracy.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

[0050] Various exemplary embodiments, features, and aspects of the present disclosure will be described in detail below with reference to the accompanying drawings. The same reference numbers in the figures indicate functionally identical or similar elements. While various aspects of the embodiments are shown in drawings, the drawings are not necessarily drawn to scale unless specifically indicated.

[0051] The word "exemplary" is used exclusively herein to mean "serving as an example, embodiment, or illustration." Any embodiment described herein as "exemplary" is not necessarily to be construed as superior or better than other embodiments.

[0052] The term "and / or" in this article is just an association relationship describing associated objects, which means that there can be three relationships, for example, A and / or B can mean: A exists alone, A and B exist simultaneously, and there exists alone B these three situations. In addition, the term "at least one" herein mean...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com