Video classification method and device

A video classification method and device, applied in the field of data processing, that address the low efficiency and low accuracy of existing video classification methods and thereby improve classification accuracy.


Examples

Embodiment 1

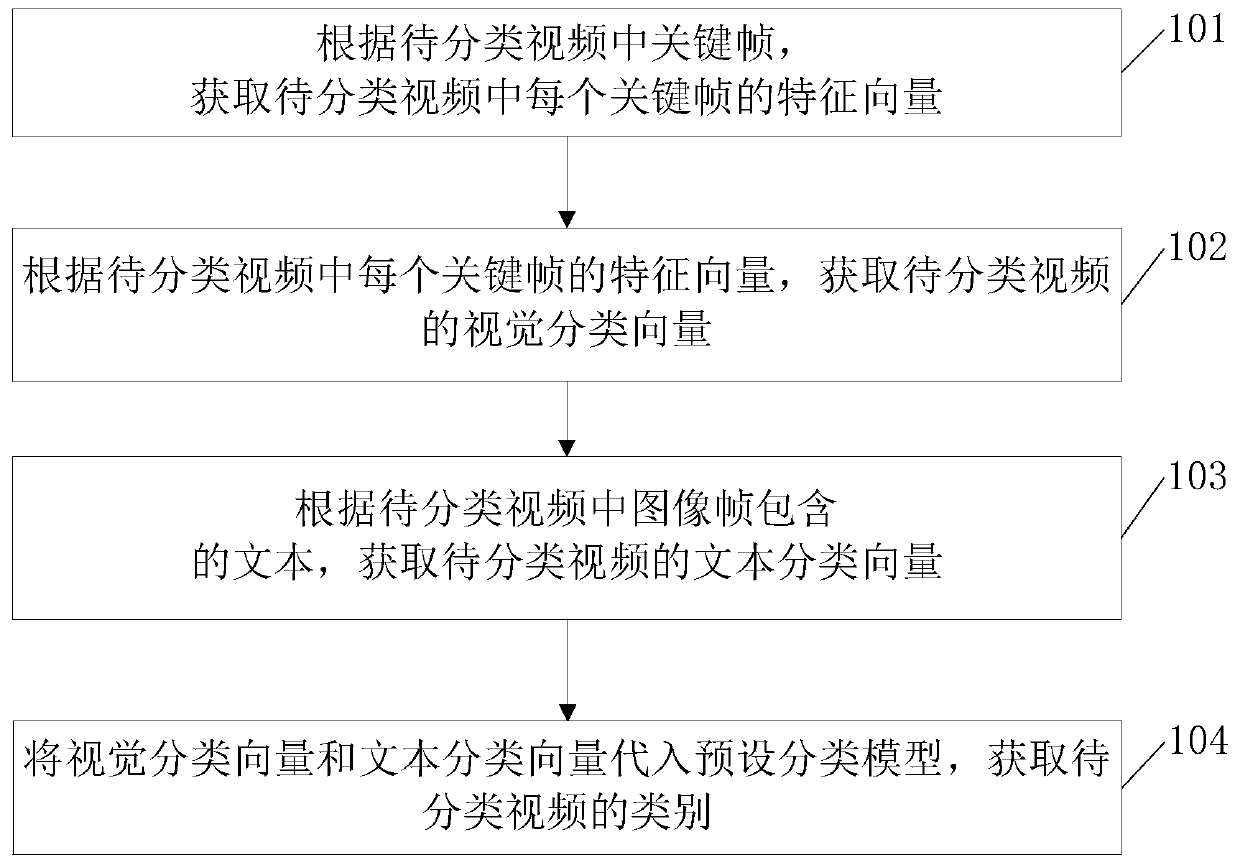

[0020] As shown in Figure 1, the present invention provides a video classification method, comprising:

[0021] Step 101: obtain the feature vector of each key frame in the video to be classified, based on the key frames in that video.

[0022] In this embodiment, the key frame in step 101 is also called an I-frame (intra-coded frame): a frame whose image data is preserved in full in the compressed video, so that it can be decoded from its own image data alone. Because the key frames of the video to be classified have little similarity to one another, multiple key frames together can fully characterize the video; extracting feature vectors from the key frames can therefore improve the accuracy of classifying the video.
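The key-frame selection described in step 101 can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration: the `Frame` record, its `pict_type` tag, and the intensity histogram used as the feature vector are hypothetical stand-ins, since the source does not specify the decoder or the feature extractor.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    """Hypothetical decoded frame: a picture-type tag plus flat 8-bit grayscale values."""
    pict_type: str     # "I", "P" or "B"
    pixels: List[int]  # intensities in 0..255


def select_key_frames(frames: List[Frame]) -> List[Frame]:
    """Keep only intra-coded (I) frames, which decode without reference to other frames."""
    return [f for f in frames if f.pict_type == "I"]


def histogram_feature(frame: Frame, bins: int = 4) -> List[float]:
    """Stand-in feature vector: a normalized intensity histogram (not the patent's extractor)."""
    counts = [0] * bins
    for value in frame.pixels:
        counts[min(value * bins // 256, bins - 1)] += 1
    total = len(frame.pixels) or 1  # avoid division by zero on an empty frame
    return [c / total for c in counts]


def key_frame_features(frames: List[Frame]) -> List[List[float]]:
    """Step 101 in miniature: one feature vector per key frame."""
    return [histogram_feature(f) for f in select_key_frames(frames)]
```

In practice the picture type would come from the video decoder, and the histogram would be replaced by whatever feature extractor the implementation actually uses.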

[0023] Specifically, the process of obtaining the feature vector through step 101 includes: extracting key frames from the video to be classified ...

Embodiment 2

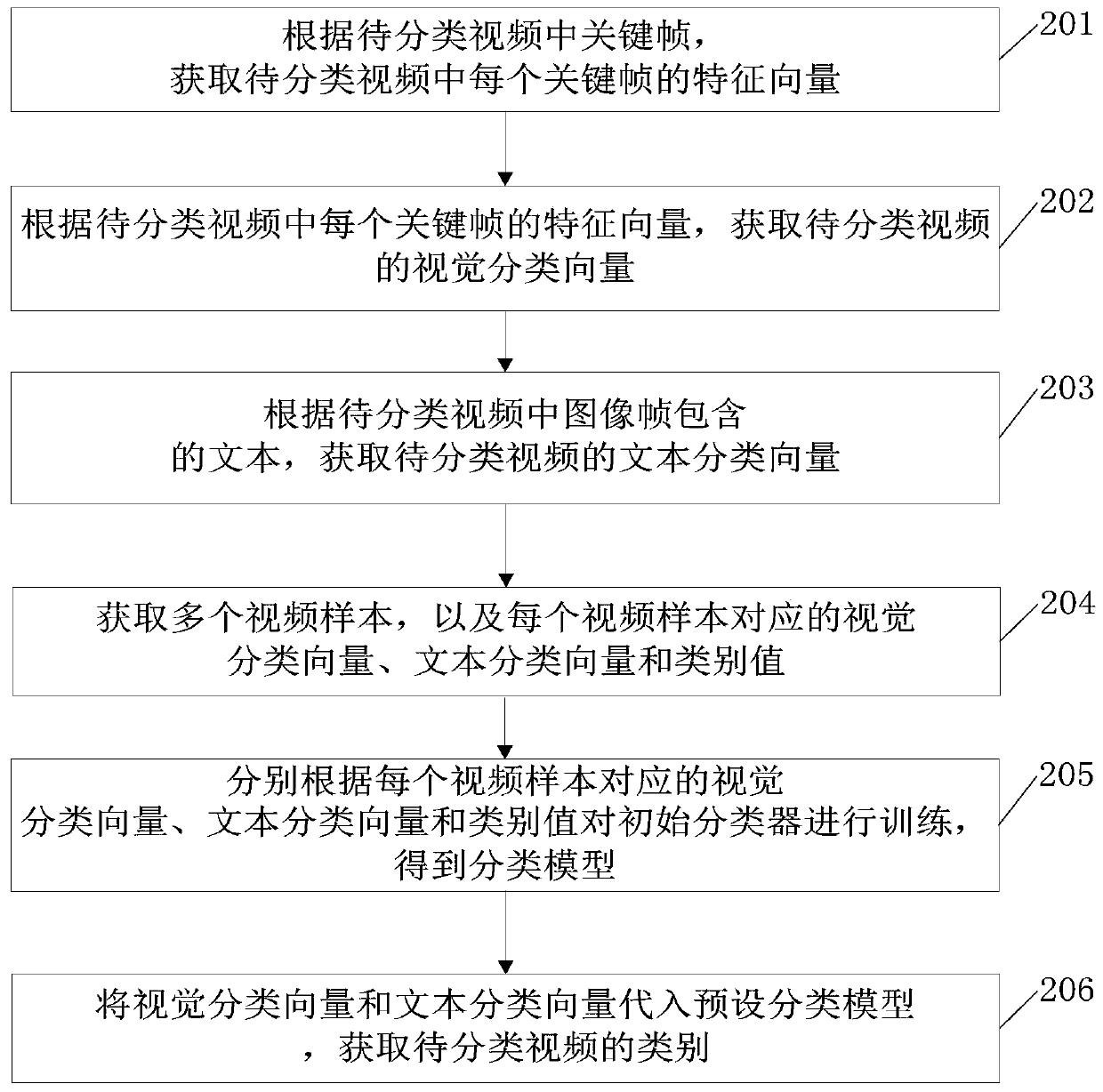

[0032] As shown in Figure 2, this embodiment of the present invention provides a video classification method, including:

[0033] Steps 201 to 203: obtain the visual classification vector and the text classification vector. This process is similar to steps 101 to 103 shown in Figure 1 and is not repeated here.

[0034] Step 204: acquire a plurality of video samples, together with the visual classification vector, text classification vector, and category value corresponding to each video sample.

[0035] Step 205: train the initial classifier on the visual classification vector, text classification vector, and category value corresponding to each video sample to obtain a classification model.

[0036] In this embodiment, the initial classifier in step 205 may use a convolutional neural network model, or other models, which are not limited here.
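As a rough illustration of steps 204 and 205, the sketch below trains a classifier from labeled samples. It deliberately swaps in a nearest-centroid classifier as one of the "other models" instead of a convolutional neural network, to keep the example short and dependency-free. Concatenating the two vectors in `fuse` is an assumed fusion rule, and all function names are hypothetical.

```python
from collections import defaultdict
from math import dist
from typing import Dict, List, Sequence, Tuple

# One labeled video sample: (visual vector, text vector, category value).
Sample = Tuple[Sequence[float], Sequence[float], int]


def fuse(visual: Sequence[float], text: Sequence[float]) -> List[float]:
    """Concatenate the two classification vectors into one input (an assumed fusion rule)."""
    return list(visual) + list(text)


def train_centroids(samples: List[Sample]) -> Dict[int, List[float]]:
    """Fit one mean vector per category value from the labeled video samples."""
    grouped: Dict[int, List[List[float]]] = defaultdict(list)
    for visual, text, category in samples:
        grouped[category].append(fuse(visual, text))
    return {
        category: [sum(column) / len(rows) for column in zip(*rows)]
        for category, rows in grouped.items()
    }


def classify(model: Dict[int, List[float]],
             visual: Sequence[float],
             text: Sequence[float]) -> int:
    """Return the category whose centroid is closest to the fused input vector."""
    point = fuse(visual, text)
    return min(model, key=lambda category: dist(model[category], point))
```

The same training interface (samples in, model out) would apply if the centroid model were replaced by a neural network; only `train_centroids` and `classify` would change.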

[0037] Step 206, substituting the visual classification vector and the text classification vector into the preset cl...

Embodiment 3

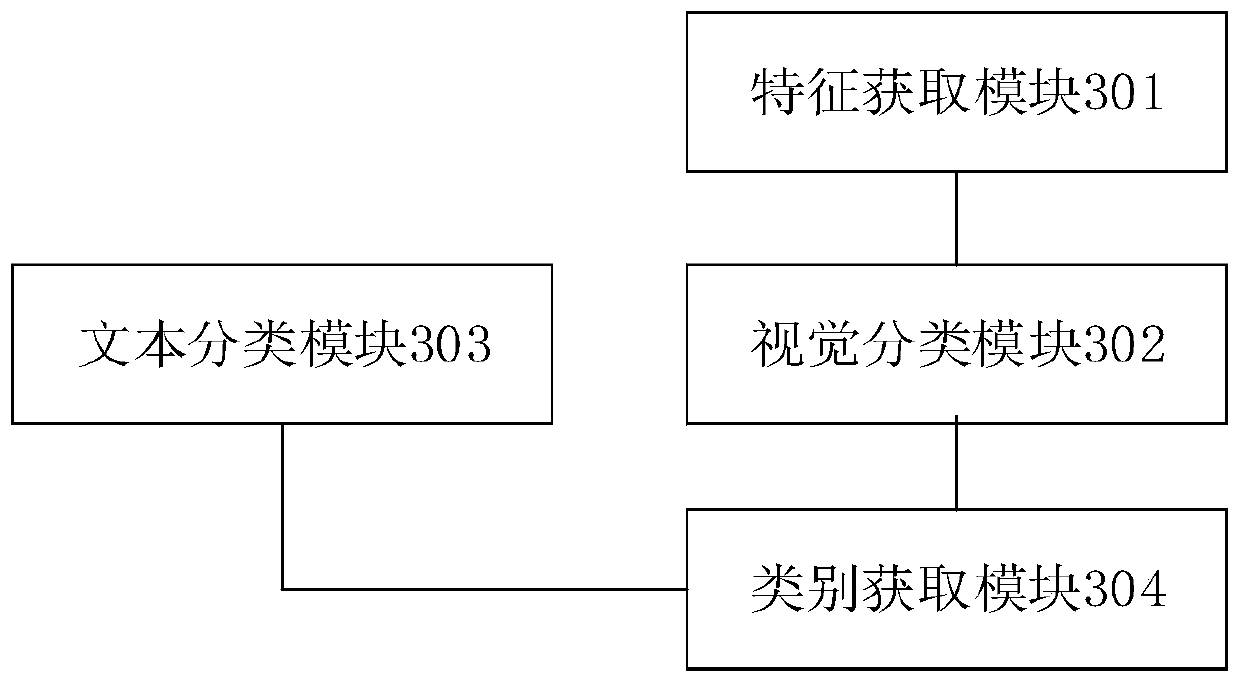

[0040] As shown in Figure 3, this embodiment of the present invention provides a video classification device, including:

[0041] The feature acquisition module 301 is used to obtain the feature vector of each key frame in the video to be classified according to the key frame in the video to be classified;

[0042] The visual classification module 302 is connected with the feature acquisition module, and is used to obtain the visual classification vector of the video to be classified according to the feature vector of each key frame in the video to be classified;

[0043] The text classification module 303 is used for obtaining the text classification vector of the video to be classified according to the text contained in the image frame in the video to be classified;

[0044] The category acquisition module 304 is connected to the visual classification module and the text classification module respectively, and is used for substituting the visual classification vector an...
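The connections between modules 301 to 304 can be sketched as a small composition. This is only an illustration under assumptions: each module is modeled as a caller-supplied callable, the class and field names are hypothetical, and module 304 is assumed to return a single category value from the two classification vectors.

```python
from dataclasses import dataclass
from typing import Callable, List

Vector = List[float]


@dataclass
class VideoClassificationDevice:
    """Wires modules 301 to 304 together; each module is a caller-supplied callable."""
    feature_acquisition: Callable[[object], List[Vector]]    # module 301
    visual_classification: Callable[[List[Vector]], Vector]  # module 302
    text_classification: Callable[[object], Vector]          # module 303
    category_acquisition: Callable[[Vector, Vector], int]    # module 304

    def classify(self, video: object) -> int:
        key_frame_features = self.feature_acquisition(video)
        # Module 302 consumes the output of module 301.
        visual_vector = self.visual_classification(key_frame_features)
        # Module 303 works on the video's image frames directly.
        text_vector = self.text_classification(video)
        # Module 304 consumes the outputs of modules 302 and 303.
        return self.category_acquisition(visual_vector, text_vector)
```

Passing the modules in as callables keeps the wiring separate from any particular feature extractor or classifier, so each module can be replaced independently.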
