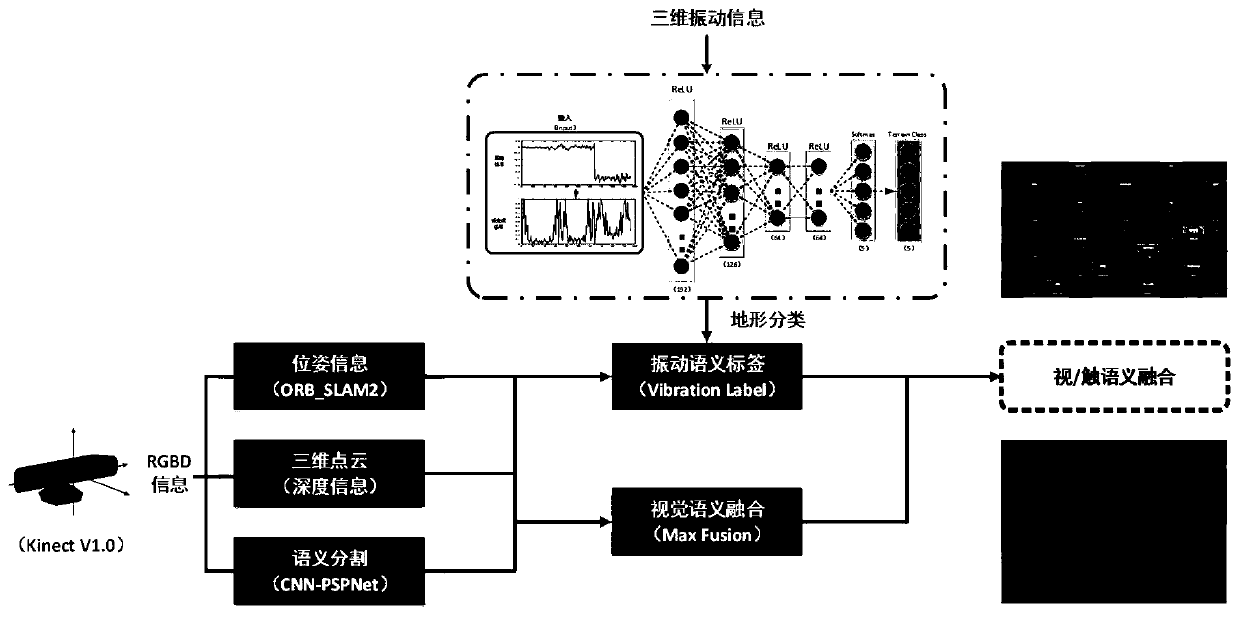

Terrain semantic perception method based on vision and vibration tactile fusion

The method, applied in the field of terrain semantic perception based on vision and vibrotactile fusion, addresses the problem of insufficient terrain semantic perception ability and achieves more reliable perception.

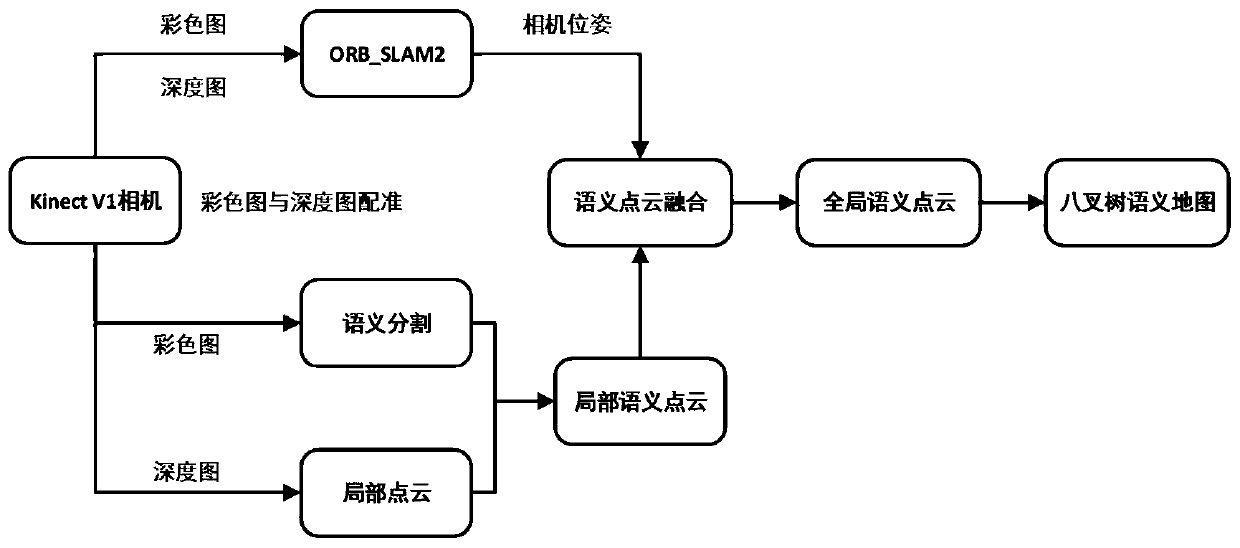

Embodiment

[0119] 1. Experimental settings

[0120] The Blue Whale XQ unmanned vehicle platform is selected as the experimental test platform. It is equipped with a Kinect V1.0 depth camera whose intrinsic parameters are f_x = 517.306408, f_y = 516.469215, c_x = 318.643040, c_y = 255.313989. The radial distortion coefficients are k_1 = 0.262383 and k_2 = -0.953104, and the tangential distortion coefficients are p_1 = -0.005358 and p_2 = 0.002628, with a further coefficient p_3 = 1.163314. The effective depth range can be calculated by the following formula:

[0121]

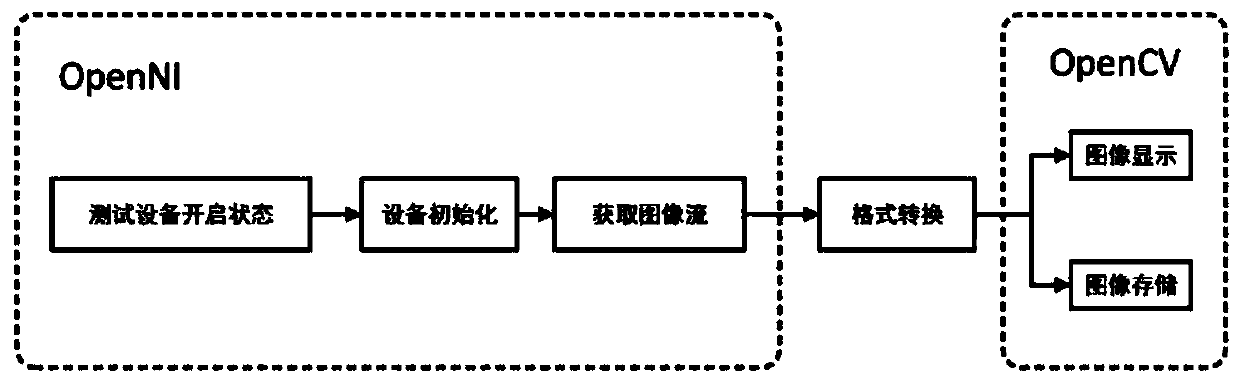
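To illustrate how the camera parameters f_x, f_y, c_x, c_y quoted in [0120] are typically used, the following is a minimal sketch of a pinhole projection. It assumes an ideal pinhole model with lens distortion ignored; the function name `project` is hypothetical and does not come from the patent, and the sketch is not the depth-range formula shown in the figure.

```python
# Intrinsic parameters quoted in paragraph [0120] (units: pixels).
FX, FY = 517.306408, 516.469215
CX, CY = 318.643040, 255.313989


def project(x, y, z):
    """Project a camera-frame point (metres) to pixel coordinates.

    Assumption: ideal pinhole model, lens distortion is ignored.
    """
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    u = FX * x / z + CX
    v = FY * y / z + CY
    return u, v


# A point on the optical axis projects onto the principal point (c_x, c_y).
u_axis, v_axis = project(0.0, 0.0, 1.0)

# A point 0.1 m to the right at 1 m depth shifts u by f_x * 0.1 pixels.
u_side, v_side = project(0.1, 0.0, 1.0)
```

Under this model, f_x and f_y scale metric offsets into pixel offsets and (c_x, c_y) is the image location of the optical axis.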

[0122] During the physical test, the Kinect V1.0 camera acquires color and depth images at 30 Hz, the vibration sensor samples at 100 Hz, feature vectors are produced at 1.6 Hz, and ORB_SLAM2 runs at 15 Hz.
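The rates in [0122] imply simple ratios between the data streams. The sketch below only does that arithmetic; the dictionary keys and the helper `per_cycle` are hypothetical names. Reading the first ratio as "vibration samples per feature vector" assumes non-overlapping windows, which the text does not state.

```python
# Rates quoted in paragraph [0122], in Hz.
RATES_HZ = {
    "kinect_rgbd": 30.0,     # color and depth image acquisition
    "vibration": 100.0,      # vibration sensor sampling
    "feature_vector": 1.6,   # feature vector output
    "orb_slam2": 15.0,       # ORB_SLAM2 operating frequency
}


def per_cycle(fast_hz, slow_hz):
    """Number of events of the faster stream per cycle of the slower one."""
    return fast_hz / slow_hz


# Vibration samples arriving between two consecutive feature vectors
# (assumption: windows do not overlap).
samples_per_feature = per_cycle(RATES_HZ["vibration"], RATES_HZ["feature_vector"])

# Camera frames arriving per ORB_SLAM2 cycle.
frames_per_slam_cycle = per_cycle(RATES_HZ["kinect_rgbd"], RATES_HZ["orb_slam2"])
```

With these figures, roughly 62.5 vibration samples arrive per feature vector, and the camera delivers two frames per ORB_SLAM2 cycle.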

[0123] In addition, this experiment sets the depth scale of the point cloud to DepthMapFactor = 1000; the number of ORB feature points extracted from a single frame image is nFeature...
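As a hedged illustration of what a depth scale of 1000 means in practice, the sketch below converts a raw depth value to metres and back-projects the pixel to a 3D point. It assumes the raw depth is divided by DepthMapFactor to obtain metres (so raw values are effectively in millimetres) and that lens distortion is ignored; the function name `back_project` is hypothetical and not taken from the patent.

```python
# Depth scale from paragraph [0123] and intrinsics from paragraph [0120].
DEPTH_MAP_FACTOR = 1000.0
FX, FY = 517.306408, 516.469215
CX, CY = 318.643040, 255.313989


def back_project(u, v, raw_depth):
    """Back-project pixel (u, v) with a raw depth reading to a camera-frame point.

    Assumptions: metric depth = raw_depth / DEPTH_MAP_FACTOR, ideal pinhole
    model, no distortion correction. Returns None for invalid (non-positive)
    depth readings.
    """
    z = raw_depth / DEPTH_MAP_FACTOR
    if z <= 0:
        return None
    x = (u - CX) * z / FX
    y = (v - CY) * z / FY
    return x, y, z


# A raw reading of 1500 at the principal point lies 1.5 m along the optical axis.
point = back_project(CX, CY, 1500)

# A zero reading is treated as a missing measurement.
missing = back_project(100, 100, 0)
```

Applying this to every valid pixel of a depth image yields a point cloud in the camera frame.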
