Construction method of semi-supervised neural machine translation model based on word-to-word translation

A method for constructing a translation model in the field of machine translation technology, applicable to neural learning methods, biological neural network models, natural language translation, etc. It addresses problems such as unsupervised translation models failing to translate properly, and achieves the effect of improving translation quality and translation performance.

Embodiment 1

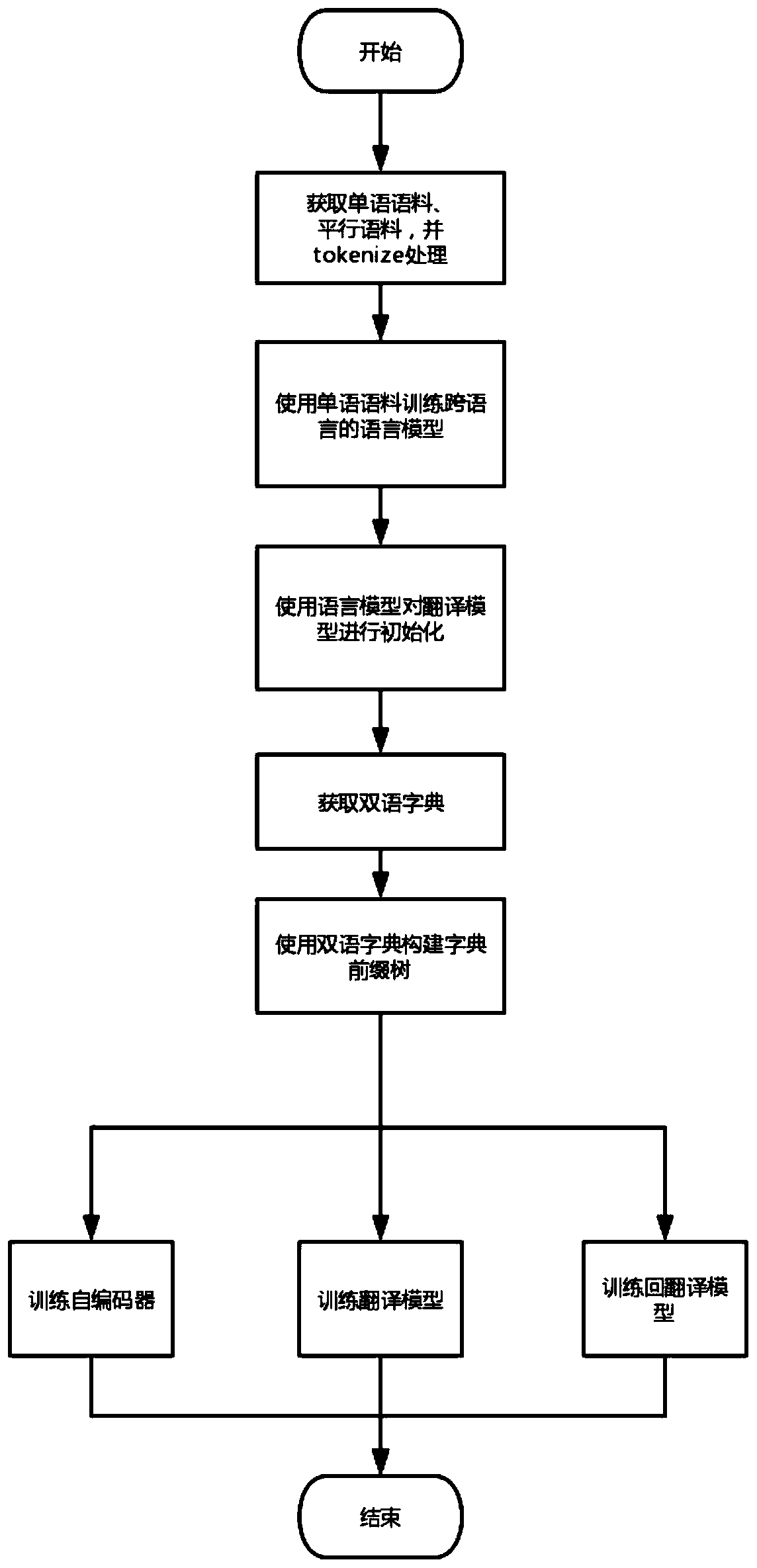

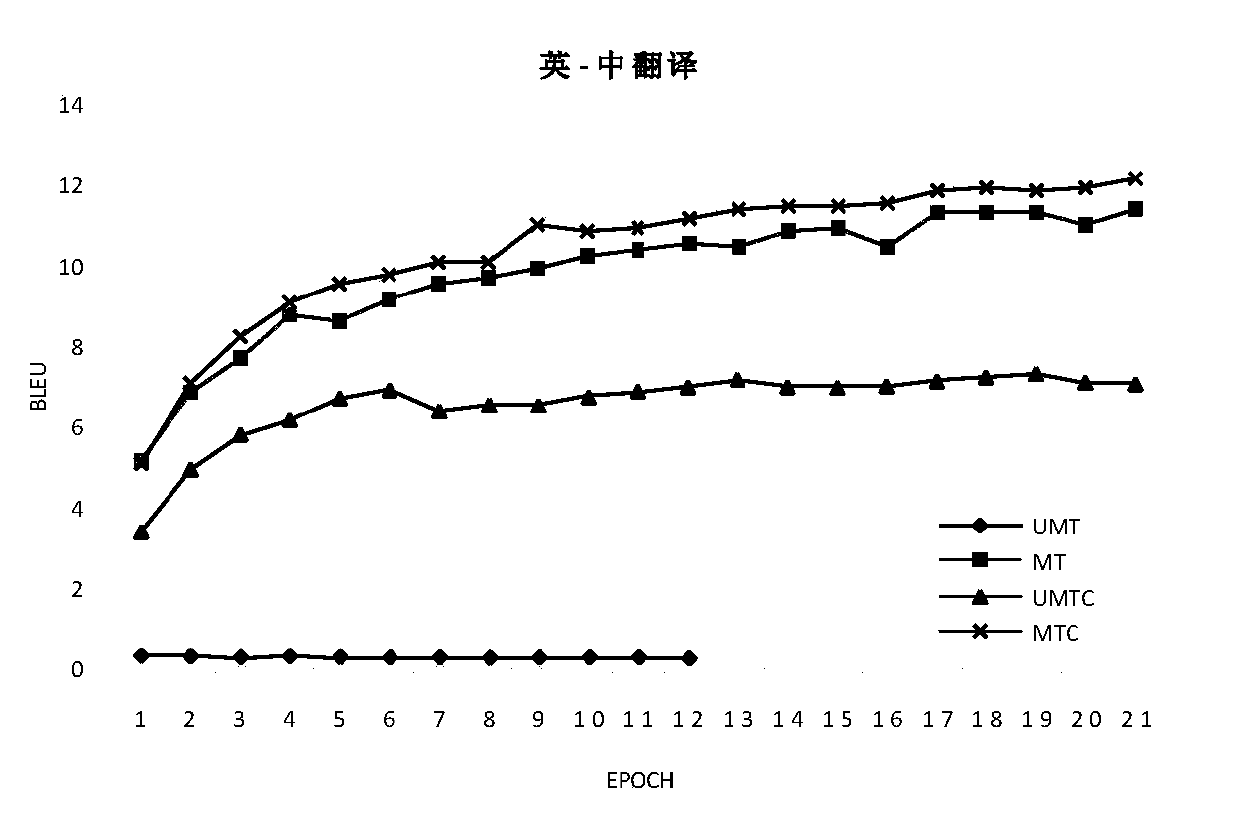

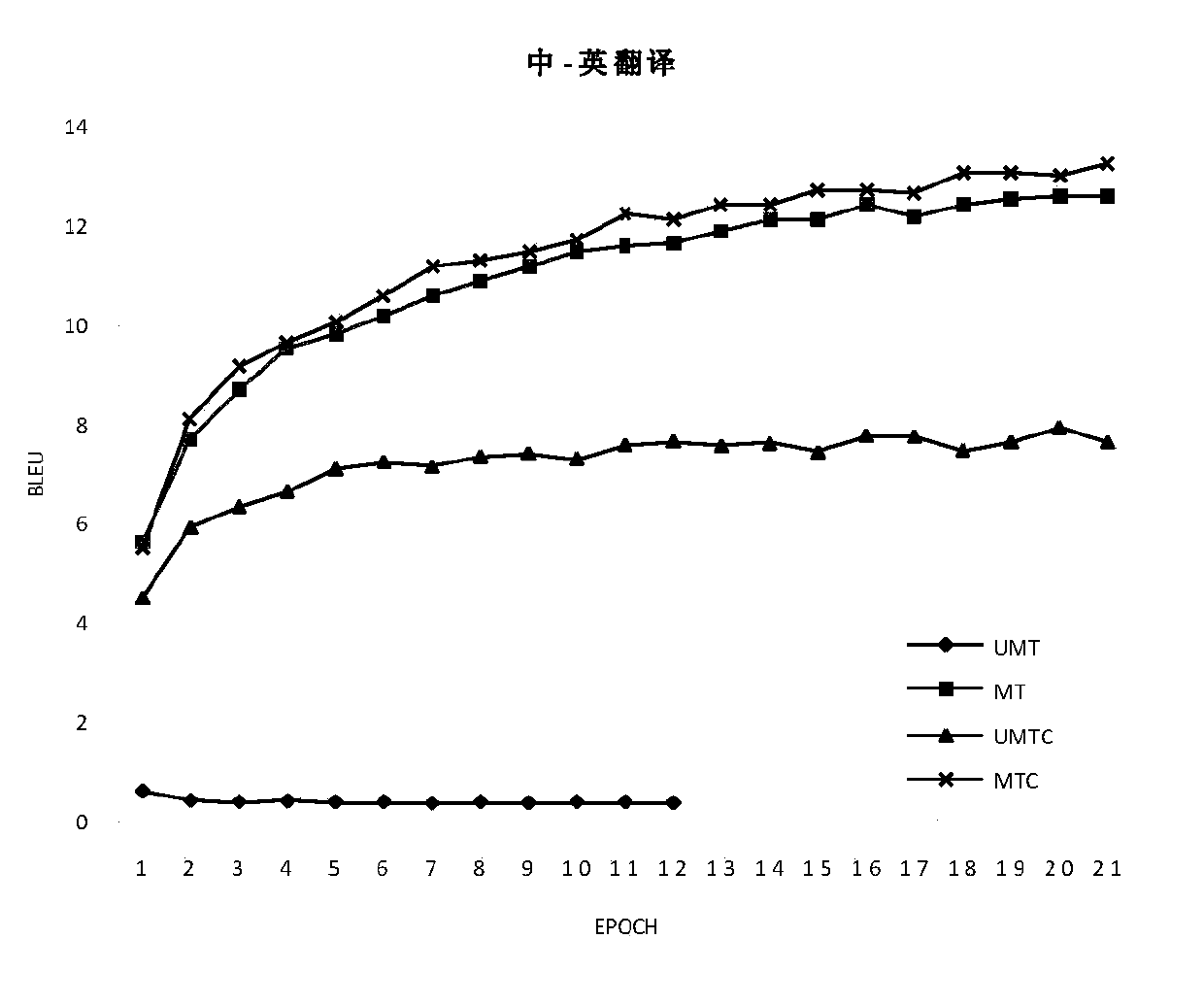

[0042] Embodiment 1: As shown in Figures 1-3, the construction method of the semi-supervised neural machine translation model based on word-to-word translation comprises the following specific steps:

[0043] Step 1. Obtain the monolingual corpora of the source language and the target language, as well as the parallel corpus of the source and target languages, and tokenize them;
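As an illustration of the tokenization in Step 1, the following is a minimal sketch. The source does not say which tokenizer is used, so the regex-based `tokenize` function and the helper `tokenize_parallel` are hypothetical stand-ins; a real system would likely use a language-specific word segmenter or a subword tokenizer, especially for languages written without spaces.

```python
import re

# Assumption: a simple regex tokenizer that splits words from punctuation.
# The patent does not specify the tokenizer; this is only a stand-in.
_TOKEN = re.compile(r"\w+|[^\w\s]")


def tokenize(sentence):
    """Split one sentence into word and punctuation tokens."""
    return _TOKEN.findall(sentence)


def tokenize_parallel(pairs):
    """Tokenize a parallel corpus given as (source, target) sentence pairs."""
    return [(tokenize(src), tokenize(tgt)) for src, tgt in pairs]


if __name__ == "__main__":
    # Monolingual sentences are tokenized one by one.
    print(tokenize("Hello, world!"))
    # Parallel sentences keep their pairing after tokenization.
    print(tokenize_parallel([("Good morning.", "Bonjour.")]))
```

The same `tokenize` function is applied to the monolingual and the parallel data so that both share one token inventory.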

[0044] Step 2. Use the monolingual corpora of the source language and the target language to train a cross-lingual language model:

[0045] $L_{lm} = \mathbb{E}_{x \sim S}\left[-\log P_{s \to s}(x \mid C(x))\right] + \mathbb{E}_{y \sim T}\left[-\log P_{t \to t}(y \mid C(y))\right]$

[0046] Here, S denotes the monolingual corpus of the source language and T denotes the monolingual corpus of the target language; x and y denote a single sentence from the source-language and target-language monolingual corpora, respectively; C(x) and C(y) denote the sentences with noise added, that is, deleting, replacing, and exchanging...
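The noise function C and the denoising objective of Step 2 can be sketched as follows. This is an illustration under stated assumptions, not the patent's implementation: the probabilities `p_drop` and `p_replace`, the `<mask>` replacement token, the local-shuffle window `max_shift`, and the callbacks `log_prob_src` / `log_prob_tgt` (hypothetical stand-ins for the model's log-probability of reconstructing a sentence from its noised version) are all names introduced here. The source text is truncated before it specifies the noise details.

```python
import random


def add_noise(tokens, p_drop=0.1, p_replace=0.1, max_shift=3,
              mask="<mask>", rng=None):
    """Hypothetical C(x): delete, replace, and locally exchange tokens."""
    rng = rng or random.Random()
    # Deletion: drop each token with probability p_drop.
    kept = [t for t in tokens if rng.random() >= p_drop]
    if not kept and tokens:
        kept = [rng.choice(tokens)]  # never return an empty sentence
    # Replacement: swap each surviving token for a mask symbol (assumed).
    replaced = [mask if rng.random() < p_replace else t for t in kept]
    # Exchange: sort by position plus a random offset, so tokens only
    # move a short distance from where they started.
    keys = [i + rng.uniform(0, max_shift) for i in range(len(replaced))]
    order = sorted(range(len(replaced)), key=lambda i: keys[i])
    return [replaced[i] for i in order]


def denoising_loss(corpus, log_prob, noise):
    """Monte Carlo estimate of E[-log P(x | C(x))] over one corpus."""
    return sum(-log_prob(x, noise(x)) for x in corpus) / len(corpus)


def lm_loss(src_corpus, tgt_corpus, log_prob_src, log_prob_tgt, noise):
    """L_lm: source-side denoising term plus target-side denoising term."""
    return (denoising_loss(src_corpus, log_prob_src, noise)
            + denoising_loss(tgt_corpus, log_prob_tgt, noise))


if __name__ == "__main__":
    rng = random.Random(0)
    sentence = ["the", "cat", "sat", "on", "the", "mat"]
    print(add_noise(sentence, rng=rng))
```

In training, `log_prob_src` and `log_prob_tgt` would be supplied by the model being trained, and the expectations would be estimated over mini-batches rather than the full corpora.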
