Audio feature extraction method and device thereof, training method and electronic equipment

An audio feature extraction technology, applied in speech analysis, instrumentation, and related fields, that addresses complex detection environments and low detection accuracy, improving accuracy and giving good recognition and classification results.

Examples

Embodiment 1

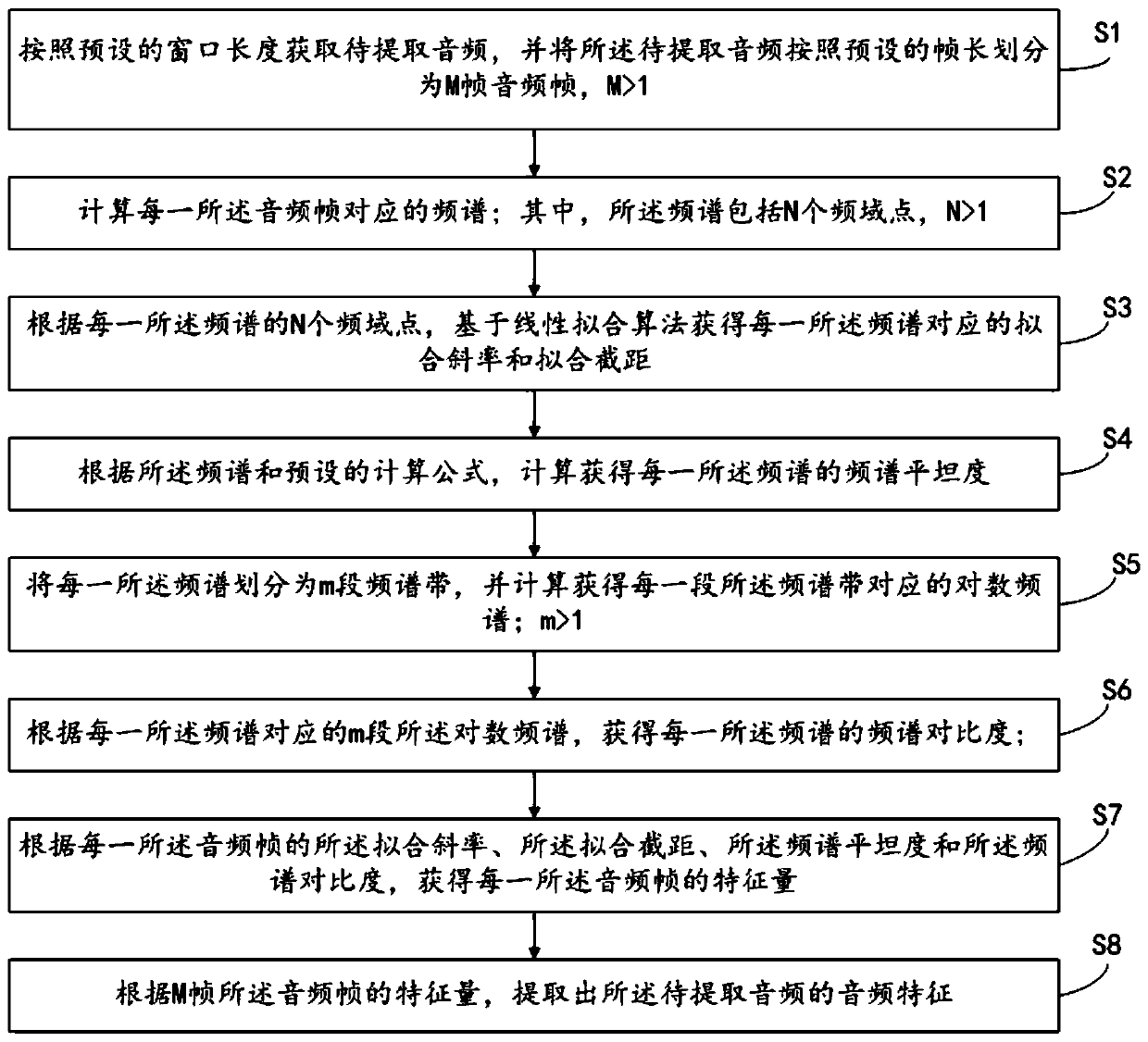

[0065] The present invention provides an audio feature extraction method. Referring to Figure 1, which is a schematic flowchart of a preferred embodiment of the audio feature extraction method provided by the present invention, the method specifically includes:

[0066] S1. Acquire the audio to be extracted according to a preset window length, and divide it into M audio frames according to a preset frame length, where M > 1;
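Step S1 can be sketched as follows. This is a minimal illustration, not the patented implementation: it assumes the window and frame lengths are given in seconds, that the audio to be extracted is the first window of the signal, and that frames do not overlap (the source does not state a hop size). The function name `split_into_frames` is hypothetical.

```python
from typing import List, Sequence


def split_into_frames(samples: Sequence[float], sample_rate: int,
                      window_s: float, frame_s: float) -> List[List[float]]:
    """Take one analysis window and split it into M non-overlapping frames.

    Assumptions (not from the source): lengths are in seconds, the window
    starts at sample 0, and there is no frame overlap.
    """
    window_len = int(round(window_s * sample_rate))
    frame_len = int(round(frame_s * sample_rate))
    if frame_len <= 0:
        raise ValueError("frame length must be positive")
    window = samples[:window_len]          # the audio to be extracted
    m = len(window) // frame_len           # a trailing partial frame is dropped
    if m < 2:
        raise ValueError("need M > 1 frames")
    return [list(window[i * frame_len:(i + 1) * frame_len]) for i in range(m)]
```

For example, at 8 samples per second a 1 s window with 0.25 s frames yields four 2-sample frames.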

[0067] S2. Calculate the frequency spectrum corresponding to each audio frame, where each frequency spectrum includes N frequency-domain points, N > 1;
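A minimal sketch of step S2, assuming the spectrum is the magnitude of the one-sided discrete Fourier transform of each frame with no window function applied (the source does not specify either). A naive O(n²) DFT is used so the example needs only the standard library; in practice an FFT would be used. With a frame of length n this gives N = n // 2 + 1 frequency-domain points. The function names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def magnitude_spectrum(frame: Sequence[float]) -> List[float]:
    """One-sided DFT magnitudes of a real frame: N = len(frame) // 2 + 1 points.

    Assumption: plain magnitude spectrum, no windowing (not stated in the source).
    """
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        # X[k] = sum_t x[t] * exp(-2*pi*i*k*t/n)
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
        spectrum.append(abs(acc))
    return spectrum


def frame_spectra(frames: Sequence[Sequence[float]]) -> List[List[float]]:
    """Compute one spectrum per audio frame."""
    return [magnitude_spectrum(f) for f in frames]
```

A 4-sample cosine frame `[1, 0, -1, 0]` puts all of its energy in bin 1, giving magnitudes close to `[0, 2, 0]`.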

[0068] S3. Apply a linear fitting algorithm to the N frequency-domain points of each frequency spectrum to obtain the fitting slope and fitting intercept corresponding to that spectrum;
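Step S3 can be illustrated with ordinary least squares, which is one common linear fitting algorithm; the source does not name the specific algorithm. The sketch assumes the x-axis is the bin index 0..N-1 and the y-axis is the spectral magnitude; a real implementation might instead use frequency in Hz or log magnitude. `linear_fit` is a hypothetical name.

```python
from typing import Sequence, Tuple


def linear_fit(values: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit y = slope * x + intercept, with x = 0..N-1 (assumed)."""
    n = len(values)
    if n < 2:
        raise ValueError("need N > 1 frequency-domain points")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    # slope = cov(x, y) / var(x); the 1/N factors cancel
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept
```

For the points `[1, 3, 5, 7]` the fit recovers slope 2 and intercept 1 exactly.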

[0069] S4. Calculate the spectral flatness of each frequency spectrum from that spectrum according to a preset calculation formula;
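The "preset calculation formula" for step S4 is not given in this excerpt. As an assumption, the sketch below uses the widely used definition of spectral flatness: the geometric mean of the power spectrum divided by its arithmetic mean, i.e. exp(mean(ln P_k)) / mean(P_k) with P_k = |X_k|². Values near 1 indicate a noise-like (flat) spectrum and values near 0 a tonal (peaked) one. The small `eps` term, added to avoid log(0), is also an assumption.

```python
import math
from typing import Sequence


def spectral_flatness(spectrum: Sequence[float], eps: float = 1e-12) -> float:
    """Geometric mean / arithmetic mean of the power spectrum (assumed formula)."""
    power = [m * m + eps for m in spectrum]          # eps guards against log(0)
    geo_mean = math.exp(sum(math.log(p) for p in power) / len(power))
    arith_mean = sum(power) / len(power)
    return geo_mean / arith_mean
```

A perfectly flat magnitude spectrum gives a flatness of about 1, while a single sharp peak gives a value close to 0.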

[0070] S5. Divide each of t...

Embodiment 2

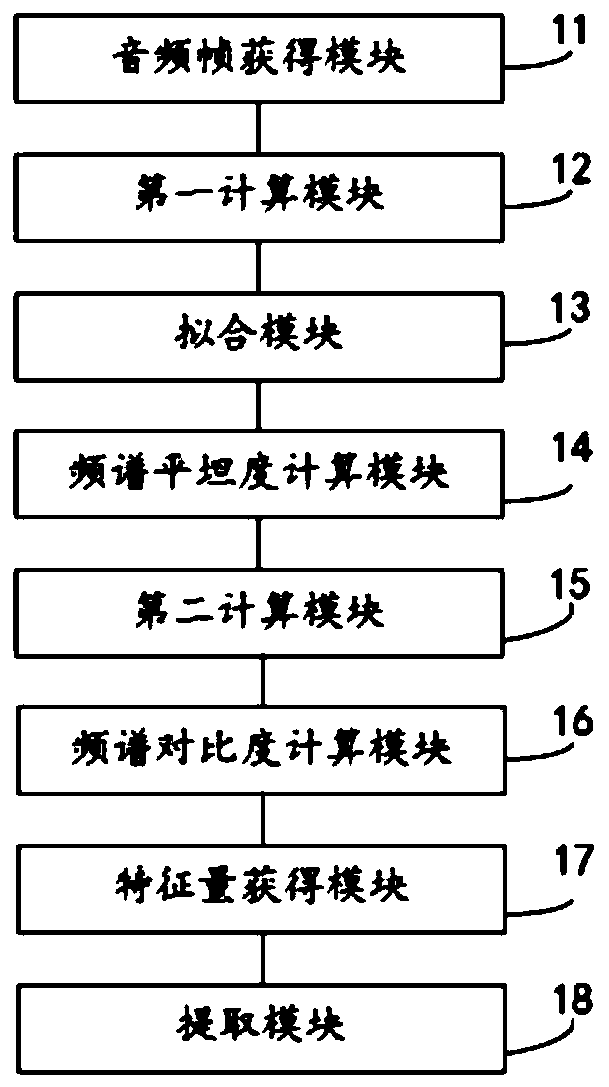

[0114] The invention also provides an audio feature extraction device. Referring to Figure 2, which is a schematic structural diagram of a preferred embodiment of the audio feature extraction device provided by the present invention, the device specifically includes:

[0115] The audio frame obtaining module 11 is configured to obtain the audio to be extracted according to a preset window length, and divide it into M audio frames according to a preset frame length, where M > 1;

[0116] The first calculation module 12 is configured to calculate the frequency spectrum corresponding to each audio frame, where each frequency spectrum includes N frequency-domain points, N > 1;

[0117] The fitting module 13 is configured to apply a linear fitting algorithm to the N frequency-domain points of each frequency spectrum to obtain the fitting slope and fitting intercept corresponding to that spectrum;
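The module structure of the device can be sketched as a small class that wires modules 11, 12 and 13 together as injected callables, so each module can be swapped independently. This is a hypothetical illustration of the architecture, not the device's actual design: the class and field names are invented, and the spectral flatness module is omitted because its description is cut off in this excerpt.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Frame = List[float]


@dataclass
class AudioFeatureExtractionDevice:
    """Hypothetical composition of the device's modules as pluggable callables."""
    frame_module: Callable[[Sequence[float]], List[Frame]]        # module 11
    spectrum_module: Callable[[Frame], List[float]]               # module 12
    fitting_module: Callable[[List[float]], Tuple[float, float]]  # module 13

    def extract(self, audio: Sequence[float]) -> List[Tuple[float, float]]:
        """Return one (fitting slope, fitting intercept) pair per audio frame."""
        frames = self.frame_module(audio)
        spectra = [self.spectrum_module(f) for f in frames]
        return [self.fitting_module(s) for s in spectra]
```

Usage with toy stand-ins for each module:

```python
device = AudioFeatureExtractionDevice(
    frame_module=lambda a: [list(a[:2]), list(a[2:4])],
    spectrum_module=lambda f: [abs(x) for x in f],
    fitting_module=lambda s: (s[1] - s[0], s[0]),
)
device.extract([1, -3, 2, 2])  # [(2, 1), (0, 2)]
```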

[0118] The spectrum flatness calculation modul...

Embodiment 3

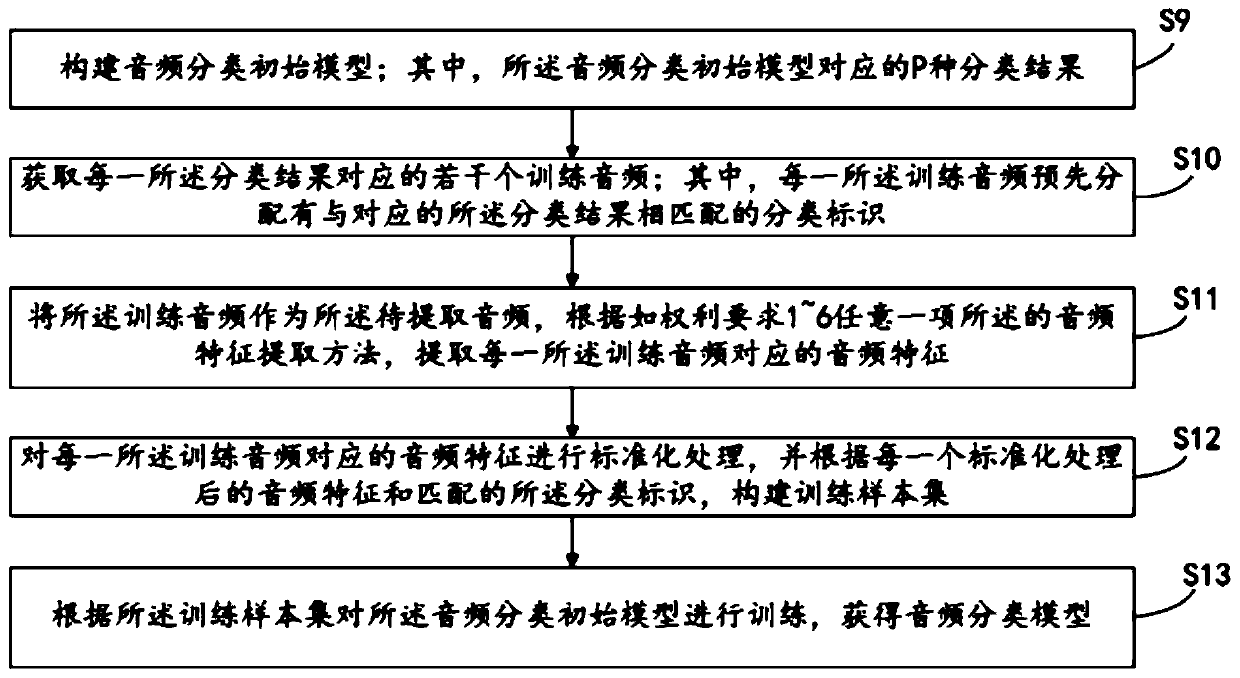

[0145] The present invention also provides a training method for an audio classification model. Referring to Figure 3, which is a schematic flowchart of a preferred embodiment of the audio classification model training method provided by the present invention, the method specifically includes:

[0146] S9. Construct an initial audio classification model, where the initial model has P types of classification results;
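The source does not say which model type step S9 constructs. Purely as a hypothetical stand-in, the sketch below builds a softmax linear classifier with P outputs and zero-initialised weights over D-dimensional feature vectors. Before training, every class is equally likely, which makes the "initial" state easy to check. The class name and parameters are invented for illustration.

```python
import math
from typing import List, Sequence


class InitialLinearClassifier:
    """Hypothetical initial model: softmax linear classifier with P classes."""

    def __init__(self, num_classes: int, num_features: int):
        if num_classes < 1:
            raise ValueError("P must be positive")
        self.num_classes = num_classes
        # Zero initialisation: all P classes start with equal scores.
        self.weights = [[0.0] * num_features for _ in range(num_classes)]
        self.biases = [0.0] * num_classes

    def scores(self, x: Sequence[float]) -> List[float]:
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(self.weights, self.biases)]

    def predict_proba(self, x: Sequence[float]) -> List[float]:
        s = self.scores(x)
        top = max(s)                      # subtract max for numerical stability
        exps = [math.exp(v - top) for v in s]
        total = sum(exps)
        return [e / total for e in exps]

    def predict(self, x: Sequence[float]) -> int:
        probs = self.predict_proba(x)
        return max(range(self.num_classes), key=probs.__getitem__)
```

With P = 3 and untrained weights, `predict_proba` returns three probabilities of 1/3 each.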

[0147] S10. Acquire several training audios for each classification result, where each training audio is pre-assigned a classification identifier that matches its classification result;
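Step S10 pairs every training audio with its classification identifier. A minimal sketch, assuming identifiers are the integers 0..P-1 and the audios arrive grouped by class, flattens them into labelled pairs and checks that every one of the P classes has at least one training audio and that no identifier falls outside the range. `build_training_set` is a hypothetical name.

```python
from typing import Dict, List, Sequence, Tuple


def build_training_set(audio_by_class: Dict[int, List[Sequence[float]]],
                       num_classes: int) -> List[Tuple[Sequence[float], int]]:
    """Flatten per-class audio lists into (audio, classification identifier) pairs.

    Assumption: identifiers are the integers 0..P-1.
    """
    unknown = set(audio_by_class) - set(range(num_classes))
    if unknown:
        raise ValueError(f"identifiers outside 0..P-1: {sorted(unknown)}")
    pairs = []
    for label in range(num_classes):
        clips = audio_by_class.get(label, [])
        if not clips:
            raise ValueError(f"no training audio for class {label}")
        pairs.extend((clip, label) for clip in clips)
    return pairs
```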

[0148] S11. Use each training audio as the audio to be extracted, and extract its audio feature according to any one of the audio feature extraction methods provided in Embodiment 1;
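Step S11 applies the Embodiment 1 extractor to each labelled training audio. Because the full feature definition is cut off in this excerpt, the sketch takes a hypothetical `frame_features` callable that returns one feature tuple per frame (for example slope, intercept and flatness), and, as an assumption, summarises each clip by the per-dimension mean over its frames so every clip yields a fixed-length vector.

```python
from statistics import fmean
from typing import Callable, List, Sequence, Tuple


def features_for_training_set(
    pairs: Sequence[Tuple[Sequence[float], int]],
    frame_features: Callable[[Sequence[float]], List[Tuple[float, ...]]],
) -> List[Tuple[List[float], int]]:
    """Map (audio, label) pairs to (feature vector, label) rows.

    `frame_features` is a hypothetical per-frame extractor; averaging over
    frames is an assumed aggregation, not taken from the source.
    """
    rows = []
    for audio, label in pairs:
        per_frame = frame_features(audio)
        if not per_frame:
            raise ValueError("extractor returned no frames")
        summary = [fmean(dim) for dim in zip(*per_frame)]  # mean of each feature
        rows.append((summary, label))
    return rows
```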

[0149] S12: Perform standardized processing on the audio featur...
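Step S12 is truncated in this excerpt, so its exact standardization scheme is unknown. As an assumption only, a common choice is per-dimension z-score standardization: subtract the training-set mean of each feature and divide by its population standard deviation, reusing the same statistics for any later audio. Constant features are left unscaled to avoid division by zero. The function names are hypothetical.

```python
from statistics import fmean, pstdev
from typing import List, Sequence, Tuple


def fit_standardizer(rows: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-dimension mean and population std over the training features."""
    columns = list(zip(*rows))
    means = [fmean(c) for c in columns]
    stds = [pstdev(c) or 1.0 for c in columns]  # constant column: keep scale 1
    return means, stds


def standardize(row: Sequence[float], means: Sequence[float],
                stds: Sequence[float]) -> List[float]:
    """Apply the z-score transform (x - mean) / std to one feature vector."""
    return [(x - m) / s for x, m, s in zip(row, means, stds)]
```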
