Video processing method, video searching method and terminal equipment

A video processing method and a video search method in the field of image processing. They address the weak correlation between keywords and video clips and the resulting inaccurate descriptions, yielding strongly correlated keywords, highly accurate descriptions, and improved search accuracy.



Examples

Example 1

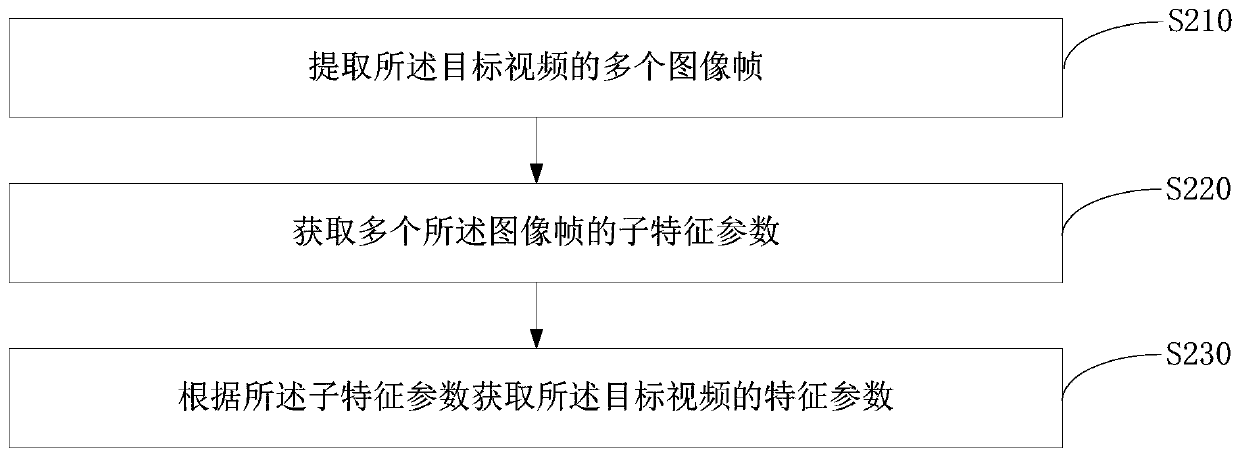

[0111] Referring to FIG. 3, FIG. 3 is a schematic flowchart of the second embodiment of the video processing method of the present invention and is also a detailed flowchart of step S200. Based on the first embodiment above, step S200 includes:

[0112] Step S210, extracting multiple image frames of the target video;

[0113] Step S220, acquiring multiple sub-feature parameters of the image frames;

[0114] Step S230, acquiring feature parameters of the target video according to the sub-feature parameters.

[0115] In this embodiment, multiple image frames are extracted from the target video at a predetermined frame rate, which can reduce the number of video frames processed by the terminal device, thereby improving the efficiency of acquiring the content of the target video.
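The text states only that frames are extracted "at a predetermined frame rate". The following is a minimal sketch of one way to choose which source frames to keep. It assumes the source frame rate is known, and the function name `sample_frame_indices` and the sampling rule (take every `source_fps / sample_fps`-th frame) are illustrative assumptions, not the patent's stated method.

```python
def sample_frame_indices(total_frames: int, source_fps: float, sample_fps: float) -> list[int]:
    """Hypothetical helper: indices of frames to keep when sampling at sample_fps."""
    if source_fps <= 0 or sample_fps <= 0:
        raise ValueError("frame rates must be positive")
    if sample_fps >= source_fps:
        # Sampling at or above the source rate keeps every frame.
        return list(range(total_frames))
    step = source_fps / sample_fps  # source frames between consecutive samples
    indices = []
    k = 0
    while True:
        idx = int(k * step)  # floor to a valid integer frame index
        if idx >= total_frames:
            break
        indices.append(idx)
        k += 1
    return indices


# A 10-second clip at 30 fps sampled at 1 frame per second:
print(sample_frame_indices(300, 30.0, 1.0))
# -> [0, 30, 60, 90, 120, 150, 180, 210, 240, 270]
```

Only 10 of the 300 frames reach the later feature-recognition steps, which is the reduction in processed frames the paragraph describes.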

[0116] The sub-feature parameters of each image frame can be identified one by one. Because an image frame carries no sound information, the sub-feature parameters further include at le...
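Step S230 derives the video's feature parameters from the per-frame sub-feature parameters, but the excerpt does not say how. As an illustration only, the sketch below assumes that each frame's sub-feature parameters are a set of recognized labels, and that a label becomes a video-level feature parameter when it appears in at least a fraction `min_ratio` of the sampled frames. The function name, the label representation, and the threshold rule are all hypothetical.

```python
from collections import Counter
from typing import Iterable


def aggregate_feature_parameters(frame_labels: Iterable[Iterable[str]],
                                 min_ratio: float = 0.3) -> list[str]:
    """Hypothetical S230: keep labels seen in at least min_ratio of the frames."""
    frames = [set(labels) for labels in frame_labels]  # count each label once per frame
    if not frames:
        return []
    counts = Counter(label for labels in frames for label in labels)
    threshold = min_ratio * len(frames)
    kept = [(label, n) for label, n in counts.items() if n >= threshold]
    # Most frequent first; ties broken alphabetically for a stable result.
    kept.sort(key=lambda item: (-item[1], item[0]))
    return [label for label, _ in kept]


frames = [{"beach", "person"}, {"beach", "dog"}, {"beach", "person"}, {"sunset"}]
print(aggregate_feature_parameters(frames, min_ratio=0.5))  # -> ['beach', 'person']
```

A frequency threshold is only one plausible choice. It filters out labels that appear in a single frame, such as a passing dog, so that they do not end up describing the whole video.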

Example 4

[0141] Referring to FIG. 6, FIG. 6 is a schematic flowchart of the fifth embodiment of the video processing method of the present invention and is also a detailed flowchart of step S320 in FIG. 5. Based on the fourth embodiment above, step S320 includes:

[0142] Step S321, comparing the human body features with preset human body features to obtain a comparison result;

[0143] Step S322, acquiring, according to the comparison result, the preset human body features corresponding to the human body features;

[0144] Step S323, acquiring the identity information according to the preset human body features corresponding to the human body features.

[0145] After the human body features of a person in the target video are acquired, those features may include one or more of facial features, iris features, and body shape features. Each preset human body feature is of the same type as the human body feature it is compared against. If the human body f...
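Steps S321 to S323 describe a compare, select, and look-up sequence. The sketch below illustrates it under stated assumptions: human body features are fixed-length numeric vectors, the comparison result is cosine similarity, and a match is accepted only above a threshold. The gallery layout (identity mapped to preset vector), the function names, and the 0.8 threshold are hypothetical and are not taken from the patent.

```python
import math
from typing import Mapping, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(feature: Sequence[float],
             presets: Mapping[str, Sequence[float]],
             threshold: float = 0.8) -> Optional[str]:
    """Hypothetical S321-S323: return the identity whose preset feature matches best."""
    # S321: compare the extracted feature with every preset feature.
    scores = {identity: cosine_similarity(feature, preset)
              for identity, preset in presets.items()}
    if not scores:
        return None
    # S322: the preset feature with the best comparison result.
    best = max(scores, key=scores.get)
    # S323: its identity information, only if the match is close enough.
    return best if scores[best] >= threshold else None


presets = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
print(identify([0.9, 0.1, 0.0], presets))  # -> alice
print(identify([0.0, 0.0, 1.0], presets))  # -> None
```

The threshold keeps an unknown person from being assigned the nearest preset identity simply because some preset must score highest.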

Example 6

[0163] Referring to FIG. 8, FIG. 8 is a schematic flowchart of the seventh embodiment of the video processing method of the present invention and is also a detailed flowchart of step S130 in FIG. 7. Based on the sixth embodiment above, step S130 includes:

[0164] Step S131, extracting an image block from each of the grayscale images corresponding to adjacent image frames, where the image blocks extracted from the adjacent image frames have the same position and size;

[0165] In this embodiment, an image block is extracted from each of the grayscale images corresponding to adjacent image frames. Both the upper-left corner coordinates and the size of the image block are randomly generated. The blocks extracted from adjacent image frames share the same position and size, so that the subsequent comparison is made between corresponding regions.
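The following is a minimal sketch of step S131. It assumes that grayscale frames are given as equal-sized 2-D lists of intensity values. The function name and return layout are hypothetical. One randomly drawn block size and upper-left corner are applied to both adjacent frames, so the two crops cover the same region.

```python
import random
from typing import List, Optional, Tuple

Gray = List[List[int]]  # rows of grayscale intensities


def extract_paired_blocks(prev: Gray, curr: Gray,
                          rng: Optional[random.Random] = None
                          ) -> Tuple[Gray, Gray, Tuple[int, int, int, int]]:
    """Hypothetical S131: crop the same random block from two adjacent frames."""
    height, width = len(prev), len(prev[0])
    if len(curr) != height or len(curr[0]) != width:
        raise ValueError("adjacent frames must have the same resolution")
    rng = rng or random.Random()
    # Draw the size first so that the random corner always leaves room for it.
    block_h = rng.randint(1, height)
    block_w = rng.randint(1, width)
    top = rng.randint(0, height - block_h)
    left = rng.randint(0, width - block_w)

    def crop(img: Gray) -> Gray:
        return [row[left:left + block_w] for row in img[top:top + block_h]]

    # Identical (top, left, block_h, block_w) for both frames.
    return crop(prev), crop(curr), (top, left, block_h, block_w)


prev = [[r * 10 + c for c in range(5)] for r in range(4)]
curr = [[v + 1 for v in row] for row in prev]
block_a, block_b, box = extract_paired_blocks(prev, curr, random.Random(0))
```

Drawing the size before the corner is a design choice of this sketch. It guarantees that the block stays inside the frame without clipping, a detail the excerpt does not specify.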

[0166] Step S132, obtaining the number of pixels in e...
