Modified real-time emotion recognition method and system based on eye movement data

An emotion recognition technology based on eye movement data, applied in the field of emotion recognition, that addresses problems such as the inability to recognize emotions accurately.

Embodiment

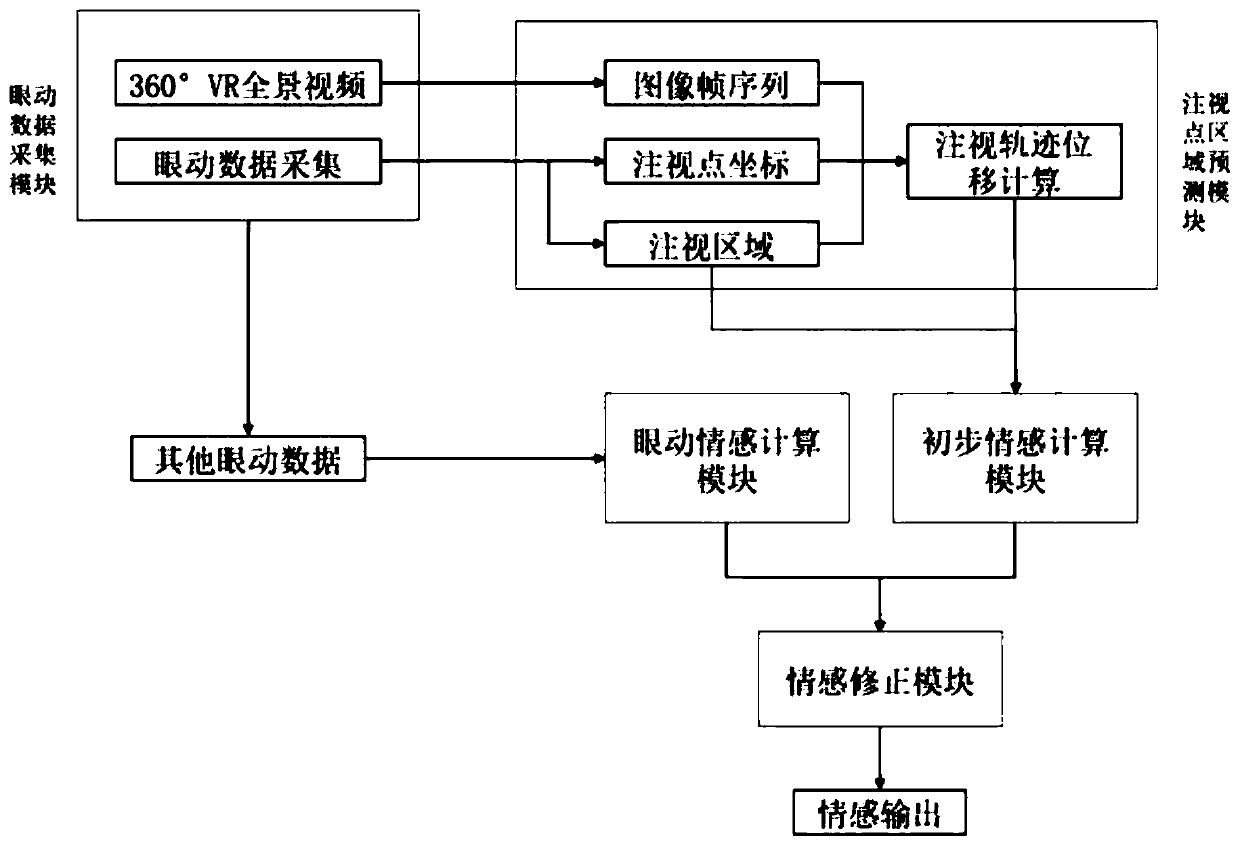

[0045] As shown in Figure 1, a modified real-time emotion recognition method based on eye movement data includes the following steps:

[0046] S1: An eye movement data collection module is integrated into a head-mounted VR device. The user wears the VR device and freely explores the content of a 360° panoramic video; during this process, the user's eye movement data is collected in real time and the video frame sequence is obtained at the same time;

[0047] The eye movement data collection module in this embodiment is specifically an eye movement film, and the eye movement data includes eye patterns, pupil radius, pupil position in the image, the distance between the upper and lower eyelids, fixation points (smoothed and non-smoothed), etc.
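As an illustration of the data described in S1, the following minimal sketch shows one way a single eye movement sample could be represented and paired with the video frame sequence by timestamp. It is not taken from the patent: the names `EyeSample` and `group_samples_by_frame`, the field names, the units, and the timestamp-based alignment rule are all assumptions made for illustration, and the eye pattern (image) data is omitted for brevity.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class EyeSample:
    """One eye movement sample. Field names and units are hypothetical."""
    timestamp_s: float                    # capture time in seconds
    pupil_radius: float                   # pupil radius (unit depends on the tracker)
    pupil_position: Tuple[float, float]   # pupil position in the eye image (x, y)
    eyelid_distance: float                # distance between upper and lower eyelids
    gaze_smoothed: Tuple[float, float]    # smoothed fixation point
    gaze_raw: Tuple[float, float]         # non-smoothed fixation point


def group_samples_by_frame(
    samples: Sequence[EyeSample],
    frame_times_s: Sequence[float],
) -> Dict[int, List[EyeSample]]:
    """Assign each sample to the latest frame that starts at or before it.

    Assumes frame_times_s is sorted in ascending order. Samples captured
    before the first frame are dropped.
    """
    grouped: Dict[int, List[EyeSample]] = {i: [] for i in range(len(frame_times_s))}
    for sample in samples:
        # bisect_right returns the count of frame times <= the sample time.
        frame_index = bisect_right(frame_times_s, sample.timestamp_s) - 1
        if frame_index >= 0:
            grouped[frame_index].append(sample)
    return grouped
```

Grouping by frame start time is only one possible alignment rule; a real system might instead rely on hardware synchronization between the eye tracker and the video player.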

[0048] The beneficial effects of the above solution are as follows: the immersive VR experience engages users more deeply and makes them less susceptible to interference from the external environment; with the eye tracking module integrated in the VR headset, the collected data is real-ti...
