Three-dimensional modeling method and system based on 3D visual sensor

A three-dimensional modeling technology based on a 3D visual sensor, applied in the field of robotics, which addresses problems such as inaccurate positioning.

Embodiment 1

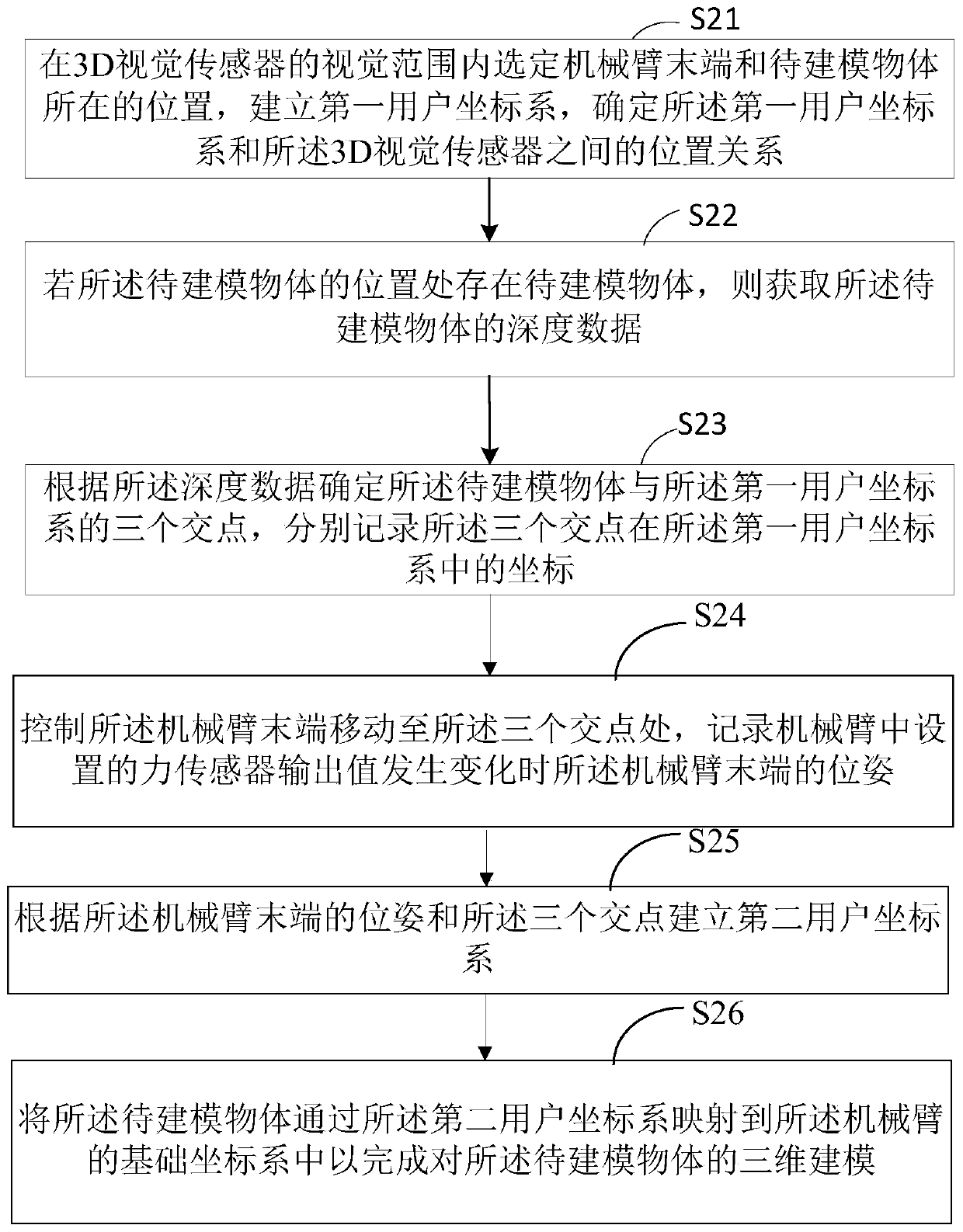

[0057] Figure 2 shows a schematic flowchart of a three-dimensional modeling method based on a 3D vision sensor, provided by an embodiment of the present application. The method includes steps S21 to S24, wherein:

[0058] Step S21: select the positions of the end of the robotic arm and of the object to be modeled within the visual range of the 3D vision sensor, establish a first user coordinate system, and determine the positional relationship between the first user coordinate system and the 3D vision sensor.

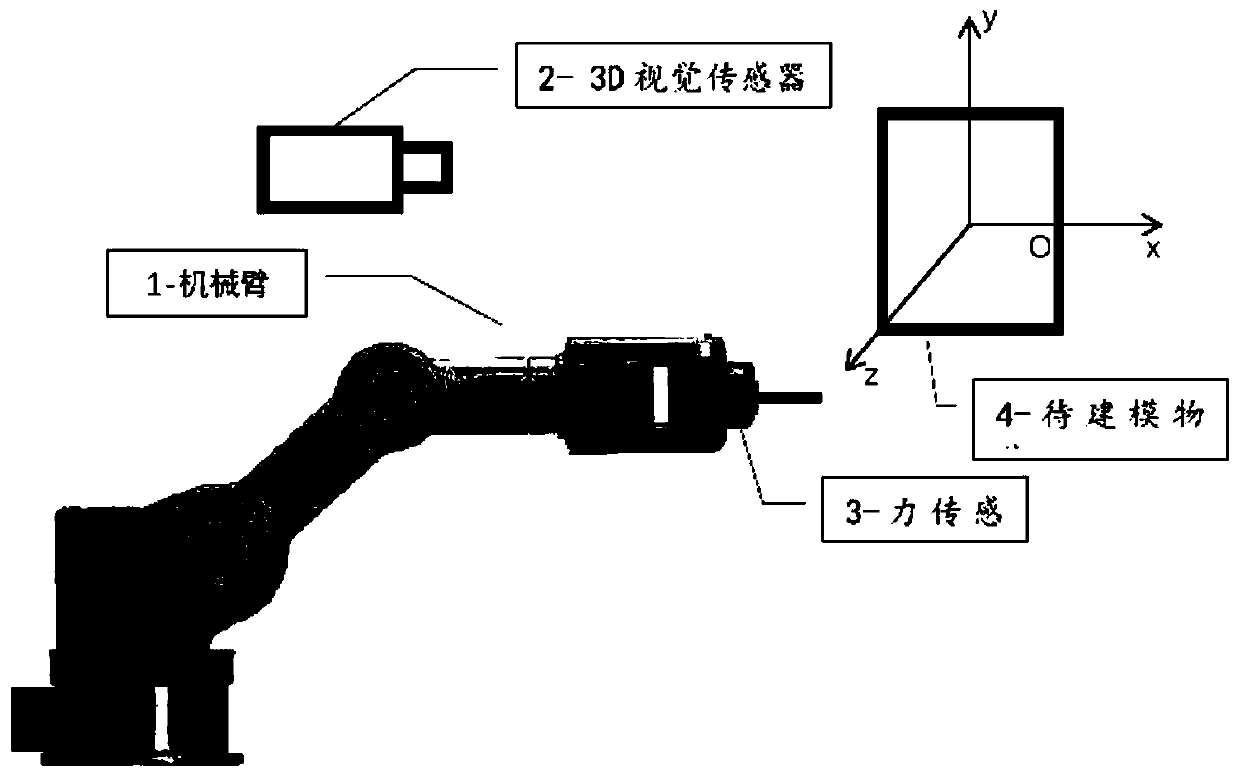

[0059] In the modeling method provided by this application, the modeling scene is set up first. Specifically, the positions of the end of the robotic arm (or of the end of a robotic arm equipped with a three-dimensional force sensor) and of the object to be modeled are selected within the modeling range. They are each fixed at a point within the field of view of the 3D vision sensor, and th...
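Step S21 calls for a positional relationship between the first user coordinate system and the 3D vision sensor, but the excerpt does not say how that relationship is represented. As a minimal sketch, assuming the user coordinate system is defined by three points measured in the sensor frame (an origin, a point on the X axis, and a point in the XY plane, a common convention for robot user frames), the relationship can be held as an origin plus three orthonormal axes. The function names `frame_from_three_points` and `sensor_to_user` are hypothetical and do not come from the patent.

```python
import math

def sub(a, b):
    """Component-wise difference a - b of two 3D vectors."""
    return [a[i] - b[i] for i in range(3)]

def dot(a, b):
    """Dot product of two 3D vectors."""
    return sum(a[i] * b[i] for i in range(3))

def cross(a, b):
    """Cross product a x b of two 3D vectors."""
    return [
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    ]

def normalize(v):
    """Scale v to unit length; reject zero-length vectors."""
    n = math.sqrt(dot(v, v))
    if n == 0.0:
        raise ValueError("zero-length vector: the defining points are degenerate")
    return [c / n for c in v]

def frame_from_three_points(origin, x_point, xy_point):
    """Build a right-handed user frame from three points given in the sensor frame.

    Assumption (not from the patent): the frame is defined by its origin,
    a point on its X axis, and a point lying in its XY plane.
    """
    x_axis = normalize(sub(x_point, origin))
    # Z is perpendicular to the XY plane spanned by the two measured directions.
    z_axis = normalize(cross(x_axis, sub(xy_point, origin)))
    # Y completes the right-handed triad and is already unit length.
    y_axis = cross(z_axis, x_axis)
    return origin, (x_axis, y_axis, z_axis)

def sensor_to_user(point, frame):
    """Express a sensor-frame point in the user coordinate system."""
    origin, axes = frame
    d = sub(point, origin)
    # Projecting onto each axis applies the inverse rotation.
    return [dot(d, axis) for axis in axes]

frame = frame_from_three_points([1.0, 2.0, 3.0], [2.0, 2.0, 3.0], [1.0, 5.0, 3.0])
p_user = sensor_to_user([2.0, 4.0, 6.0], frame)
```

With a frame of this kind, any point the sensor measures can be re-expressed in the user coordinate system. Collinear defining points raise a `ValueError` because they do not determine a plane.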

Embodiment 2

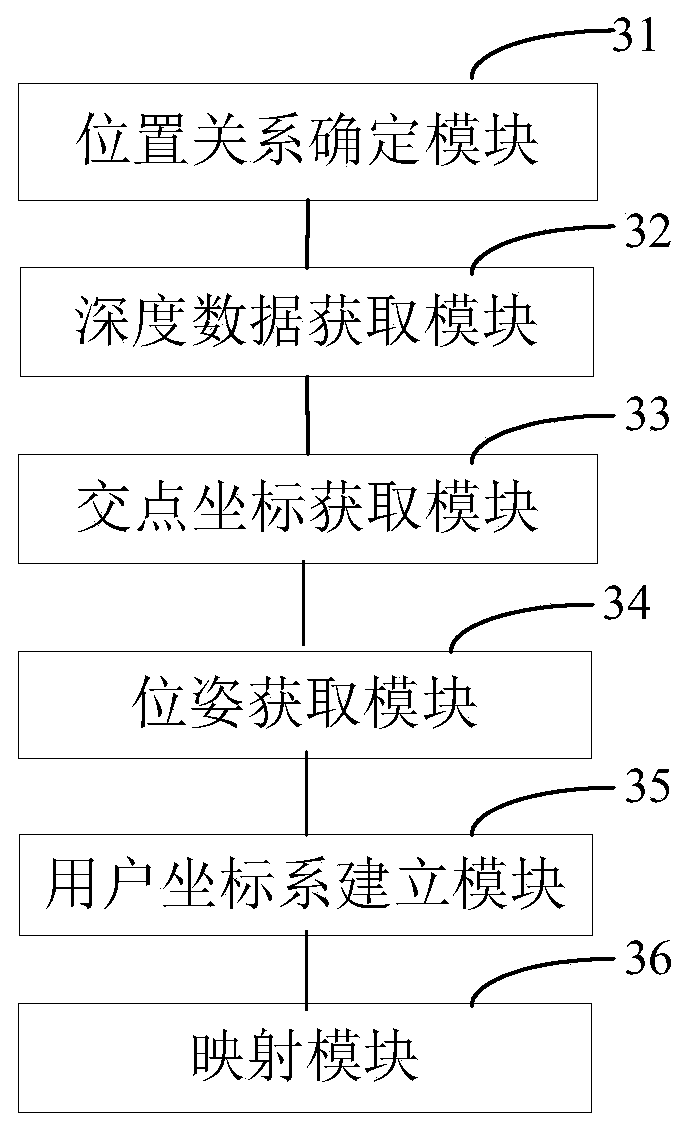

[0089] Figure 3 shows a schematic structural diagram of a 3D modeling system based on a 3D vision sensor, provided in another embodiment of the present application. The system includes:

[0090] A positional relationship determination module 31, configured to select the positions of the end of the robotic arm and of the object to be modeled within the visual range of the 3D vision sensor, establish a first user coordinate system, and determine the positional relationship between the first user coordinate system and the 3D vision sensor;

[0091] A depth data acquisition module 32, configured to acquire depth data of the object to be modeled when an object is present at the position selected for the object to be modeled;

[0092] An intersection coordinate acquisition module 33, configured to determine three intersection points between the object to be modeled and the first user coordinate system according to the depth data, and respectively record the three intersecti...
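Modules 32 and 33 both operate on depth data, but the excerpt does not describe the sensor model. As a rough illustration only, assuming the 3D vision sensor behaves like a pinhole depth camera with intrinsics `fx`, `fy`, `cx`, `cy` (these parameters and the function names below are assumptions, not taken from the patent), a depth reading can be turned into a 3D point in the sensor frame as follows.

```python
def backproject(u, v, depth, fx, fy, cx, cy):
    """Convert pixel (u, v) with a depth value into a 3D point in the sensor frame.

    Assumes a pinhole camera model: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. Depth is measured along the optical axis.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)

def depth_map_to_points(depth_map, fx, fy, cx, cy):
    """Back-project every valid pixel of a row-major depth map.

    Pixels with depth <= 0 are treated as missing readings and skipped
    (an assumed convention; real sensors may flag invalid pixels differently).
    """
    points = []
    for v, row in enumerate(depth_map):
        for u, depth in enumerate(row):
            if depth > 0:
                points.append(backproject(u, v, depth, fx, fy, cx, cy))
    return points

# Tiny 2x2 depth map in meters with one missing reading.
demo_points = depth_map_to_points(
    [[1.0, 0.0],
     [2.0, 2.0]],
    fx=2.0, fy=2.0, cx=0.0, cy=0.0,
)
```

Points produced this way are expressed in the sensor frame. They would still have to be mapped into the first user coordinate system using the positional relationship determined by module 31.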
