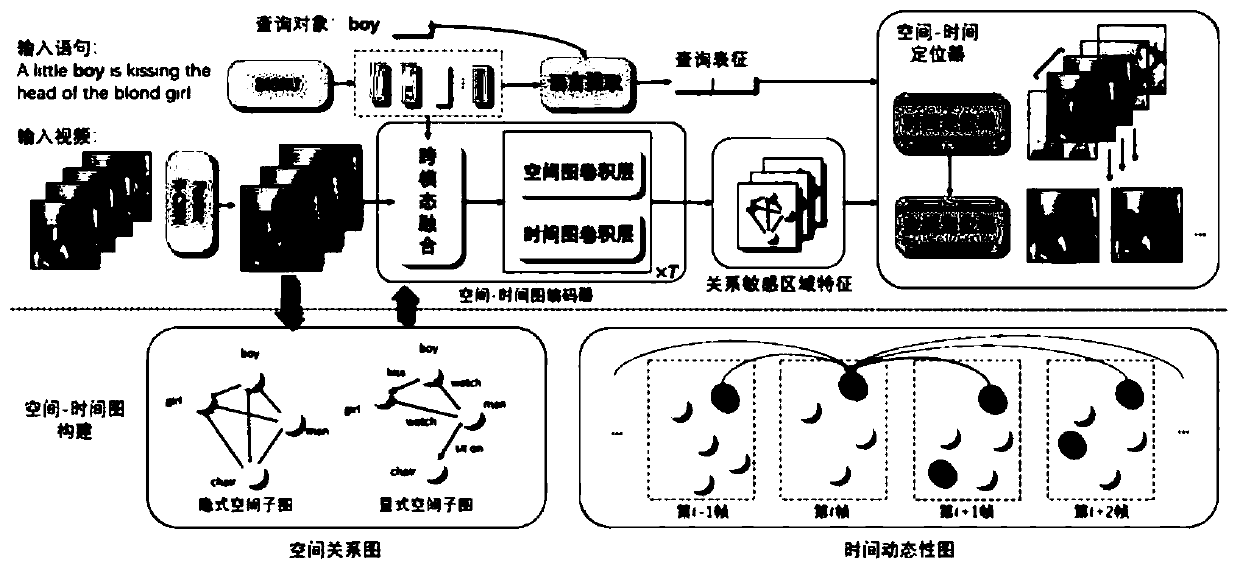

Method for solving the multi-form sentence video localization task using a spatio-temporal graph reasoning network

A technology involving video localization and spatio-temporal graphs, applied in the field of natural-language visual localization, that addresses problems such as the inability to handle the multi-form sentence video localization task.

AI Technical Summary

Problems solved by technology

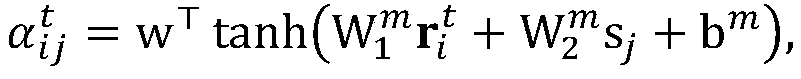

Method used

Examples

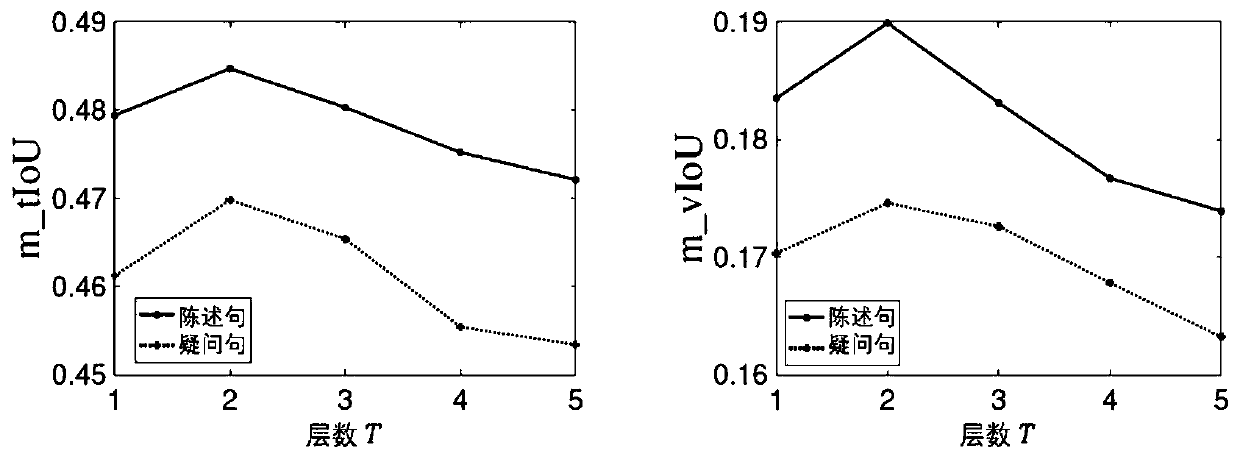

Embodiment

[0114] The present invention adds sentence annotations to VidOR to build a large-scale spatio-temporal video localization dataset, VidSTG, on which the method is validated. VidOR is the largest existing video dataset with object-relation annotations: it contains 10,000 videos with fine-grained annotations of objects and the relations among them. VidOR annotates 80 object categories with dense bounding boxes and 50 relation predicate categories (8 spatial relations and 42 action relations) between objects, expressing each relation as a subject-predicate-object triplet. Each triplet is associated with a temporal boundary and with the spatio-temporal tubes of its subject and object. Appropriate triplets are selected from VidOR, and the subject or object of each is described with sentences of multiple forms. Using VidOR as the base dataset has many advantages. On the one hand, laborious bounding-box annotation can be avoided. On the other hand, the relations in the triplets can simply be incorporated into the annotated ...
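The annotation scheme described above can be pictured as a small data structure. The following Python sketch is illustrative only: the names `RelationTriplet`, `GroundingSample`, and `make_sample`, the field names, the end-exclusive frame range, and the (x1, y1, x2, y2) box format are hypothetical assumptions, not the patent's code or the VidOR release format. It shows how a sentence describing either the subject or the object of a triplet could be turned into a localization target by reusing that entity's existing tube within the triplet's temporal boundary, which is why no new bounding boxes need to be drawn.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Assumed box format (hypothetical): (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


@dataclass
class RelationTriplet:
    """One VidOR-style relation instance; field names are illustrative."""
    subject: str      # subject category, e.g. "adult"
    predicate: str    # relation predicate, e.g. "ride"
    obj: str          # object category, e.g. "bicycle"
    start_frame: int  # temporal boundary start (inclusive, assumed)
    end_frame: int    # temporal boundary end (exclusive, assumed)
    subject_tube: Dict[int, Box] = field(default_factory=dict)  # frame -> box
    object_tube: Dict[int, Box] = field(default_factory=dict)   # frame -> box


@dataclass
class GroundingSample:
    """A sentence paired with the tube it refers to (hypothetical format)."""
    sentence: str
    start_frame: int
    end_frame: int
    target_tube: Dict[int, Box]


def make_sample(t: RelationTriplet, sentence: str, describe: str) -> GroundingSample:
    """Attach a sentence that describes the subject or the object of a triplet.

    The target is the described entity's existing tube, restricted to the
    triplet's temporal boundary, so no new boxes are annotated.
    """
    if describe not in ("subject", "object"):
        raise ValueError("describe must be 'subject' or 'object'")
    tube = t.subject_tube if describe == "subject" else t.object_tube
    # Keep only the frames inside [start_frame, end_frame).
    clipped = {f: b for f, b in tube.items() if t.start_frame <= f < t.end_frame}
    return GroundingSample(sentence, t.start_frame, t.end_frame, clipped)


# Usage: a made-up triplet whose tubes extend beyond the relation's boundary.
trip = RelationTriplet(
    "adult", "ride", "bicycle", 10, 13,
    subject_tube={f: (0, 0, 50, 100) for f in range(8, 15)},
    object_tube={f: (10, 40, 60, 110) for f in range(8, 15)},
)
sample = make_sample(trip, "Who is riding the bicycle?", "subject")
print(sorted(sample.target_tube))  # [10, 11, 12]
```

Storing tubes as frame-indexed dictionaries keeps the clipping step a simple filter; a real pipeline would more likely use arrays aligned to the video's frame rate.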
