Pedestrian generation method based on pedestrian mask and multi-scale discrimination

A pedestrian generation method based on a pedestrian mask and multi-scale discrimination, applied in neural learning methods, character and pattern recognition, and biological neural network models. It addresses blurred boundaries between the pedestrian outline and the background and unclear pedestrian images, and achieves a clear boundary between the pedestrian outline and the background.

Examples

Embodiment 1

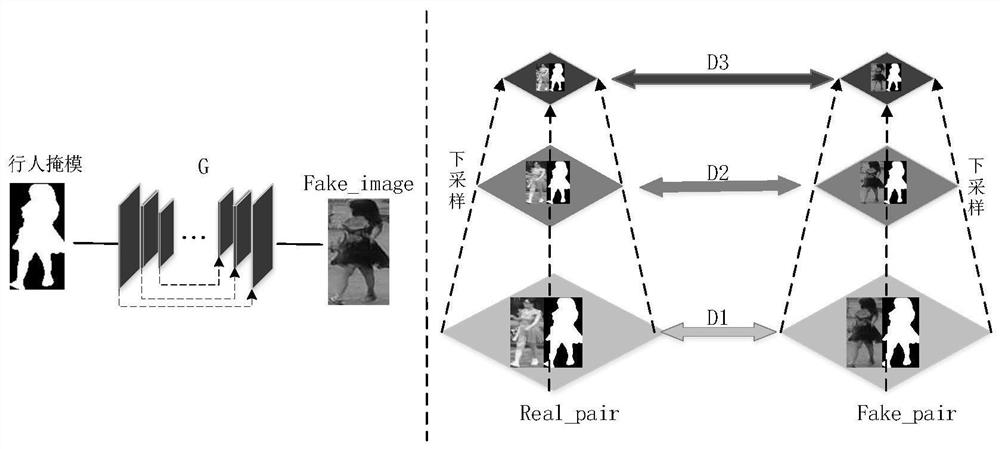

[0043] This embodiment provides a pedestrian generation method based on a pedestrian mask and multi-scale discrimination. As shown in Figure 1, the method constructs a network consisting of one generator and three discriminators. The pedestrian mask and noise are fed into the generator, which generates pedestrian images. Each discriminator receives the pedestrian mask and a pedestrian image, judges whether the image pair is real or generated, and passes this discriminative information back to the generator through the loss function to guide pedestrian generation. Through the continued adversarial game between the generator and the discriminators, the network finally generates pedestrians with the modeled pose features.
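The visible text does not state how the three discriminators differ. A minimal sketch, assuming (as in common multi-scale discriminator designs) that each discriminator sees the mask and image pair at a different resolution obtained by repeated 2x average pooling. The helper names `avg_pool2x` and `build_pyramid`, the 8x8 toy inputs, and the pooling choice are all illustrative assumptions, and plain Python lists stand in for tensors.

```python
def avg_pool2x(img):
    """Downsample a 2D grid (list of rows) by averaging each 2x2 block."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [
        [
            (img[2 * i][2 * j] + img[2 * i][2 * j + 1]
             + img[2 * i + 1][2 * j] + img[2 * i + 1][2 * j + 1]) / 4.0
            for j in range(w)
        ]
        for i in range(h)
    ]


def build_pyramid(img, num_scales=3):
    """Return copies of img at full, half, quarter, ... resolution."""
    pyramid = [img]
    for _ in range(num_scales - 1):
        pyramid.append(avg_pool2x(pyramid[-1]))
    return pyramid


# Toy 8x8 mask (assumption): 1.0 inside the pedestrian region, 0.0 for background.
mask = [[1.0 if 2 <= c <= 5 else 0.0 for c in range(8)] for _ in range(8)]
# Toy 8x8 grayscale image (assumption): constant mid-gray.
image = [[0.5 for _ in range(8)] for _ in range(8)]

mask_scales = build_pyramid(mask)
image_scales = build_pyramid(image)

# One (mask, image) pair per discriminator, from finest to coarsest scale.
pairs = list(zip(mask_scales, image_scales))
shapes = [(len(m), len(m[0])) for m, _ in pairs]
```

Under this assumption, the finest-scale discriminator can focus on local detail such as the outline, while the coarser ones judge overall body shape and pose.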

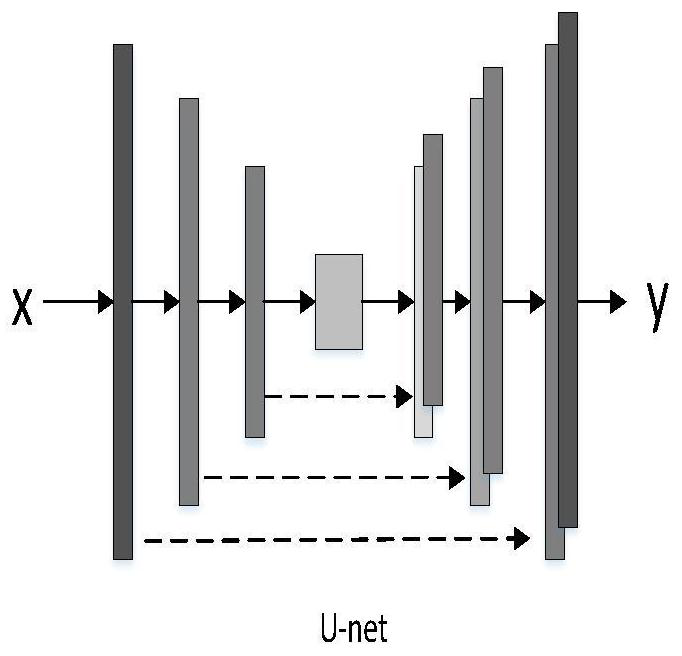

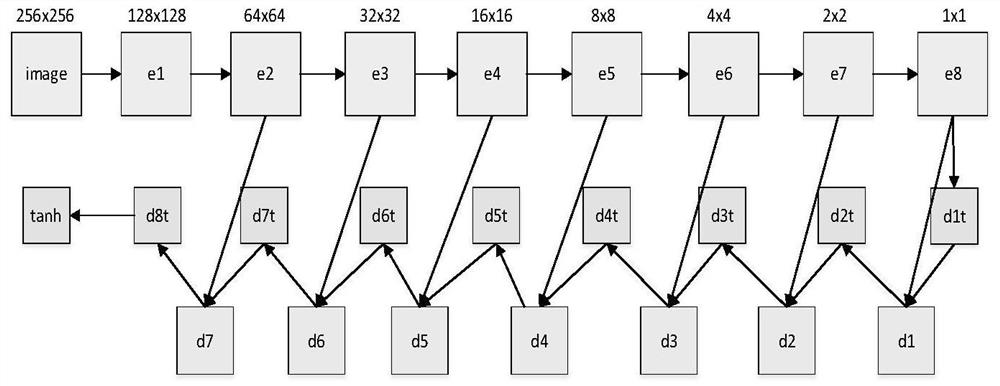

[0044] The generator uses a U-Net structure consisting of an encoder and a decoder. As shown in Figure 3, the encoder downsamples with convolutions to encode the input image; the decoder upsamples and restores with deconvolutions to generate an im...
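To illustrate the downsampling and upsampling described above, the following sketch traces the spatial size of a feature map through a stride-2 encoder and a mirrored transposed-convolution decoder, using the standard output-size formulas. The kernel size 4, stride 2, padding 1, the 256-pixel input, and the depth of 4 are assumptions chosen for illustration; the source does not give these values.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Output size of a strided convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel=4, stride=2, padding=1):
    """Output size of a transposed convolution (no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def unet_sizes(input_size, depth):
    """Return the spatial sizes seen by the encoder and by the decoder."""
    enc = [input_size]
    for _ in range(depth):
        enc.append(conv_out(enc[-1]))
    dec = [enc[-1]]
    for _ in range(depth):
        dec.append(deconv_out(dec[-1]))
    return enc, dec


# Hypothetical configuration: 256-pixel input, 4 down/up stages.
enc, dec = unet_sizes(256, 4)
```

With these settings each encoder stage halves the resolution and each decoder stage doubles it, so every decoder stage has an encoder stage of equal size. That matching is what lets a U-Net concatenate encoder features into the decoder through skip connections.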

Embodiment 2

[0050] As a further improvement on Embodiment 1, the loss function includes a generative adversarial loss, an L1 loss, a feature matching loss, and a total loss:

[0051] (1) The generative adversarial loss guides image generation. Here G denotes the generator, D the discriminator, s the pedestrian mask, x the real pedestrian image, and z the noise vector. G(s,z) is the generated image, and $D_k$ denotes the k-th discriminator.

[0052] $L_{GAN}(G,D)=\mathbb{E}_{s,x}[\log D(s,x)]+\mathbb{E}_{s,z}[\log(1-D(s,G(s,z)))]$;
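As a rough numerical illustration of the adversarial loss, the sketch below estimates both expectations by averaging over a batch of discriminator outputs. It assumes the discriminator returns a probability in [0, 1] for each (mask, image) pair; the function name `gan_loss` and the small `eps` guard against log(0) are illustrative additions, not part of the source.

```python
import math


def gan_loss(d_real, d_fake, eps=1e-12):
    """Batch estimate of E[log D(s, x)] + E[log(1 - D(s, G(s, z)))].

    d_real: discriminator probabilities for (mask, real image) pairs.
    d_fake: discriminator probabilities for (mask, generated image) pairs.
    """
    real_term = sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# An undecided discriminator (0.5 everywhere) versus a confident, correct one.
undecided = gan_loss([0.5, 0.5], [0.5, 0.5])
confident = gan_loss([0.9, 0.9], [0.1, 0.1])
```

The discriminator tries to raise this value and the generator tries to lower it, so `confident` is larger than `undecided`.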

[0053]

[0054] (2) This method uses the L1 loss to keep the mapping between the input image and the generated image consistent.

[0055] $L_{L1}(G)=\mathbb{E}_{s,x,z}[\lVert x-G(s,z)\rVert_1]$;
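The L1 term can be illustrated with a small example: for each sample, sum the absolute pixel differences between the real pedestrian image and G(s,z), then average over the batch as an estimate of the expectation. The nested-list image representation and the names `l1_norm` and `l1_loss` are assumptions made for this sketch.

```python
def l1_norm(a, b):
    """Sum of absolute pixel differences between two 2D images."""
    return sum(
        abs(p - q)
        for row_a, row_b in zip(a, b)
        for p, q in zip(row_a, row_b)
    )


def l1_loss(real_batch, gen_batch):
    """Batch average of the per-image L1 distance."""
    norms = [l1_norm(x, g) for x, g in zip(real_batch, gen_batch)]
    return sum(norms) / len(norms)


# Two toy 2x2 samples (assumption): the first differs in two pixels,
# the second is reproduced exactly by the generator.
real_batch = [[[1.0, 0.0], [0.5, 0.5]], [[0.25, 0.75], [0.0, 1.0]]]
gen_batch = [[[0.5, 0.0], [0.5, 1.0]], [[0.25, 0.75], [0.0, 1.0]]]

loss = l1_loss(real_batch, gen_batch)
```

Many implementations divide by the number of pixels as well; that only rescales the loss and can be absorbed into its weight.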

[0056] $L_{GAN}(G,D)=\mathbb{E}_{s,x}[\log D(s,x)]+\mathbb{E}_{s}[\log(1-D(s,G(s)))]$;

[0057] (3) This method uses the feature matching loss $L_{FM}(G,D_k)$ to improve the stability of network training; the main goal of this loss function is to extract features...
