Multi-modal semantic fusion human-computer interaction system and method for virtual experiments

The invention relates to virtual experiment and human-computer interaction technology, specifically multi-modal semantic fusion human-computer interaction. It addresses the problems of descent, single touch-based manipulation and high load on the user's visual channel, and has the effect of stimulating learning interest.

Embodiment Construction

[0043] To clearly illustrate the technical features of the present invention, the solution is further elaborated below with reference to the accompanying drawings and specific embodiments.

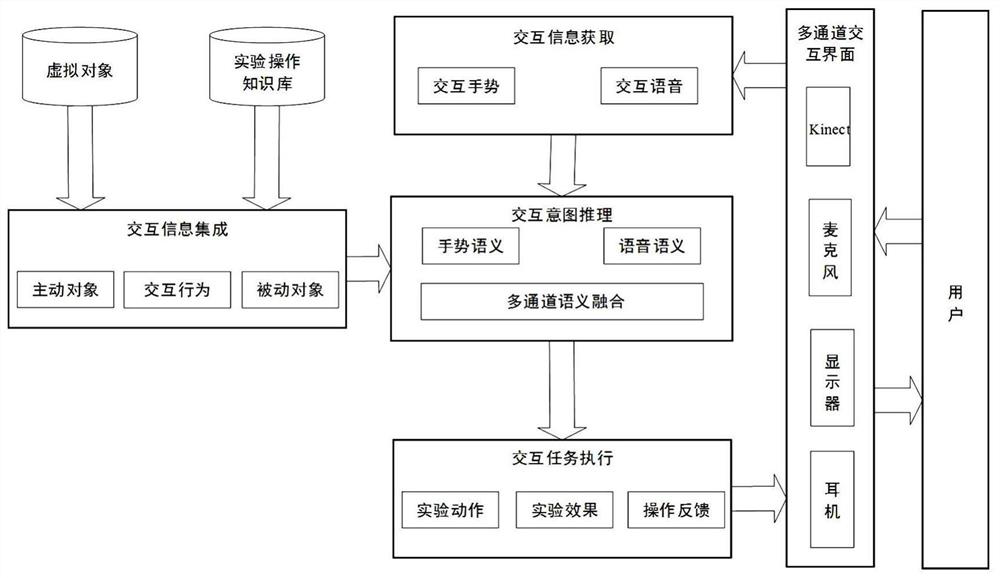

[0044] As shown in Figure 1, a virtual-experiment-oriented multi-modal semantic fusion human-computer interaction system includes an interactive information integration module, an interactive information acquisition module, an interactive intention reasoning module and an interactive task direct module. The interactive information integration module integrates virtual objects and experimental operation knowledge information into the virtual environment and provides a data basis for the interactive intention reasoning module; this information comprises active objects, interactive behavior knowledge rules and passive objects. The interactive information module uses a multi-modal fusion model to accurately identify the real ...
