Graph neural network application-oriented task scheduling execution system and method

The technology relates to neural networks and task scheduling and is applied to graph neural network applications. It addresses the low utilization of computing resources and the resulting inability to run graph neural networks efficiently, enabling fast, efficient execution and improved utilization of computing resources.


Examples

example 1

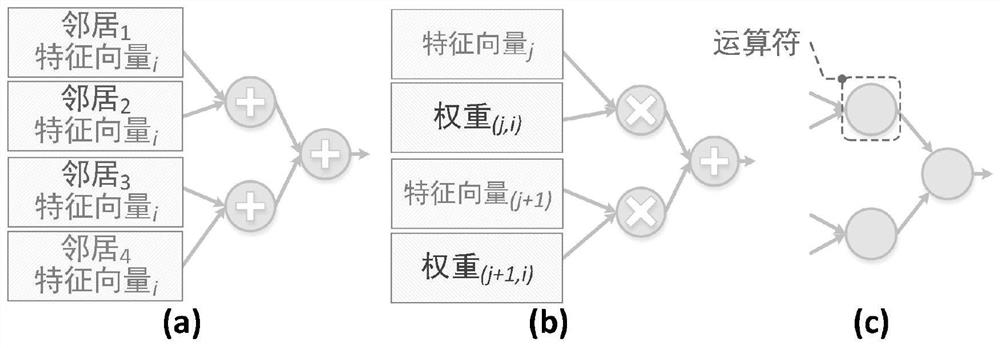

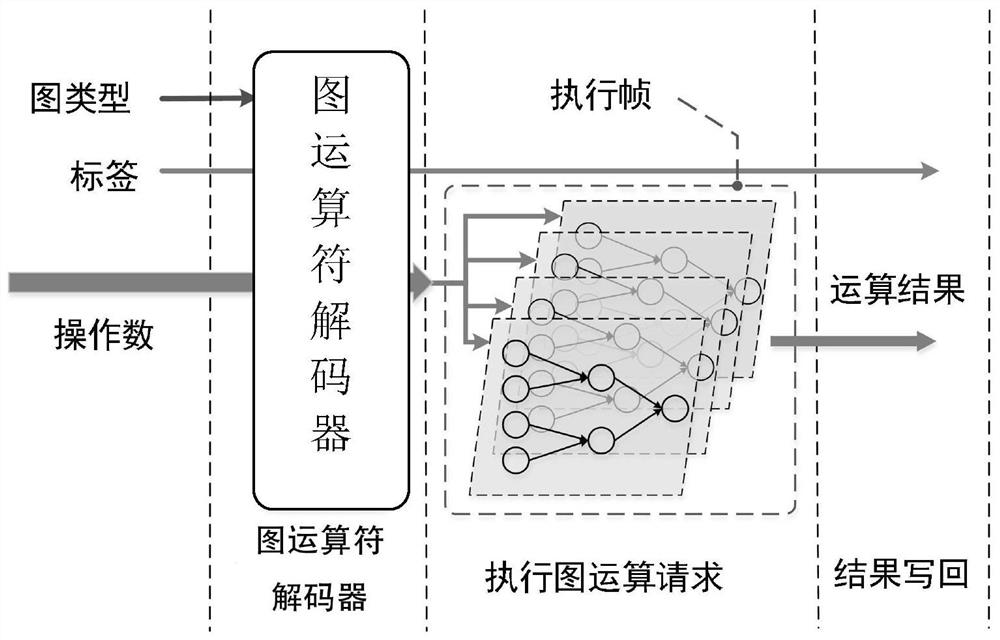

[0053] Example 1. Encodings of the different graph operator types in the PE and their corresponding binary operation trees

[0054] The decoder in the PE applies an input configuration and an execution configuration to the execution frame of a graph operator according to the operator's encoding. Specifically, it sets the execution frame to an 8-input binary operation tree of one of four kinds: accumulation, maximum, minimum, or multiply-add. Graph operators are encoded in 2 bits: 00, 01, 10, and 11 denote the accumulation, maximum, minimum, and multiply-add (multiply-accumulate) operations, respectively.

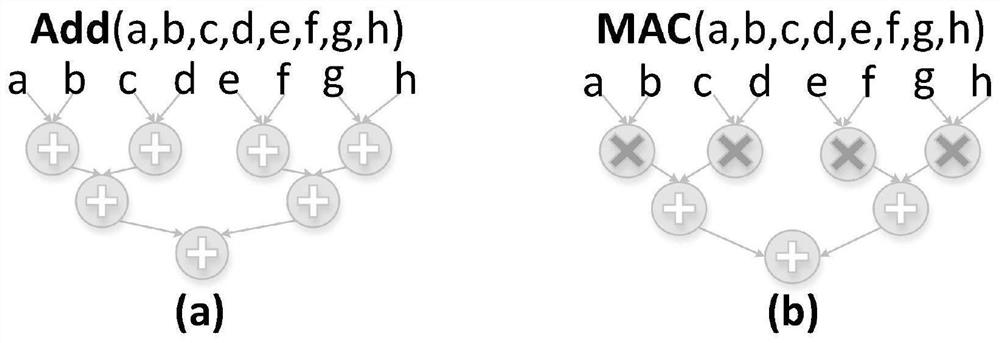

[0055] Codes 00 and 11 correspond to the 8-input accumulation binary operation tree and the 8-input multiply-add binary operation tree, respectively, as shown in Figure 3.
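The decoding described in Example 1 can be illustrated with a small software sketch. It is not the patented hardware: the names `GraphOp`, `tree_reduce`, and `execute` are hypothetical, and the structure of the multiply-add tree (pairwise products at the first level, sums at the remaining levels) is an assumption, since the text here does not spell it out.

```python
from enum import IntEnum
from operator import add, mul


class GraphOp(IntEnum):
    """2-bit graph operator encodings from paragraph [0054]."""
    ACCUMULATE = 0b00
    MAXIMUM = 0b01
    MINIMUM = 0b10
    MULTIPLY_ADD = 0b11


def tree_reduce(values, first_level, other_levels):
    """Reduce exactly 8 values through a 3-level binary tree."""
    if len(values) != 8:
        raise ValueError("the execution frame takes exactly 8 inputs")
    # Level 1: combine adjacent pairs (8 values -> 4 values).
    level = [first_level(values[i], values[i + 1]) for i in range(0, 8, 2)]
    # Levels 2 and 3: keep combining adjacent pairs until one value remains.
    while len(level) > 1:
        level = [other_levels(level[i], level[i + 1])
                 for i in range(0, len(level), 2)]
    return level[0]


def execute(opcode, operands):
    """Decode the 2-bit opcode and run the matching binary operation tree."""
    op = GraphOp(opcode)
    if op is GraphOp.ACCUMULATE:
        return tree_reduce(operands, add, add)
    if op is GraphOp.MAXIMUM:
        return tree_reduce(operands, max, max)
    if op is GraphOp.MINIMUM:
        return tree_reduce(operands, min, min)
    # Assumed layout: products of adjacent pairs, then an adder tree.
    return tree_reduce(operands, mul, add)
```

For instance, `execute(0b00, [1, 2, 3, 4, 5, 6, 7, 8])` sums the eight operands, while `execute(0b11, ...)` on the same list computes `1*2 + 3*4 + 5*6 + 7*8` under the assumed layout.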

example 2

[0056] Example 2. Mapping process for a 32-input accumulation operation

[0057] Figure 4 shows the mapping of a 32-input accumulation computation graph. In the initialization phase, the scheduler partitions the accumulation graph according to the number of input operands of the original computation graph (32 here) and maps it onto four graph operators with 8 valid inputs each, plus one additional graph operator with 4 valid inputs, which accumulates the four partial sums. Each graph operator carries a dedicated label produced by the label generator TgGen, which records its node ID (VID), round ID (RID), graph operation ID within the current round (GID), number of outputs in the current round (ONum), and remaining repetitions (RRT).
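The partitioning in Example 2 can be sketched in a few lines. This is a rough illustration under stated assumptions, not the scheduler's actual logic: `Tag` and `map_accumulation` are hypothetical names, ONum is interpreted as the number of graph operators (and hence outputs) in a round, and RRT is interpreted as the number of rounds still to run afterwards. The text does not define either field precisely.

```python
from dataclasses import dataclass
from math import ceil

PE_INPUTS = 8  # each graph operator accepts up to 8 inputs


@dataclass(frozen=True)
class Tag:
    vid: int   # node ID
    rid: int   # round ID
    gid: int   # graph operation ID within the round
    onum: int  # outputs produced in this round (assumed meaning)
    rrt: int   # rounds remaining after this one (assumed meaning)


def map_accumulation(vid, num_inputs, width=PE_INPUTS):
    """Return (Tag, valid_input_count) for every graph operator, by round."""
    if num_inputs < 1:
        raise ValueError("need at least one input operand")
    # Work out how many operators each round needs and how full each one is.
    rounds = []
    remaining = num_inputs
    while True:
        n_ops = ceil(remaining / width)
        rounds.append([min(width, remaining - g * width) for g in range(n_ops)])
        if n_ops == 1:
            break
        remaining = n_ops  # each operator feeds one partial result forward
    # Attach a tag to every operator.
    plan = []
    for rid, counts in enumerate(rounds):
        for gid, valid in enumerate(counts):
            tag = Tag(vid, rid, gid, onum=len(counts), rrt=len(rounds) - 1 - rid)
            plan.append((tag, valid))
    return plan
```

Calling `map_accumulation(vid=7, num_inputs=32)` yields five operators: four with 8 valid inputs in round 0 and one with 4 valid inputs in round 1, matching the mapping described above.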

example 3

[0058] Example 3. Task scheduling and execution process

[0059] In this device, the scheduler performs task scheduling by scheduling the execution of graph operators, and the processing unit (PE) executes them. The cache module for graph operators awaiting emission stores the pending graph operator processing requests together with their labels and operands. The emission unit pads the input operands of each graph operator: if a graph operator has fewer than 8 inputs, the unit fills the remaining slots up to 8, using positive infinity for minimum operations, negative infinity for maximum operations, and 0 for multiply-add operations. The emission unit then emits the graph operator processing request to the PE. Figure 5 shows the task scheduling and execution process of the present invention; the specific steps are as follows:

[0060] Step 501: The graph op...
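The operand padding performed by the emission unit in paragraph [0059] can be sketched as follows. This is an illustrative sketch only: `PAD_VALUE` and `pad_operands` are hypothetical names, the padding for the maximum operation is read as negative infinity, and the 0 padding for plain accumulation is an assumption (0 is the additive identity, but the text states padding values only for minimum, maximum, and multiply-add).

```python
import math

PE_INPUTS = 8  # the PE execution frame takes 8 inputs

# Padding values chosen so that padded slots never change the result.
PAD_VALUE = {
    "min": math.inf,        # +inf never wins a minimum comparison
    "max": -math.inf,       # -inf never wins a maximum comparison
    "multiply_add": 0.0,    # zero operands contribute nothing to the sum
    "accumulate": 0.0,      # assumption: not stated in the text
}


def pad_operands(op, operands, width=PE_INPUTS):
    """Fill a graph operator's operand list up to `width` entries."""
    if len(operands) > width:
        raise ValueError(f"a graph operator takes at most {width} inputs")
    return list(operands) + [PAD_VALUE[op]] * (width - len(operands))
```

Because each padding value is neutral for its operation, a graph operator with fewer than 8 valid inputs produces the same result as it would on its valid inputs alone.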
