Recovery and positioning method for an underwater robot based on binocular vision

The invention relates to underwater robots and binocular vision technology and is applied in the fields of instruments, line-of-sight measurement, and image analysis. It addresses problems that hinder underwater robot recovery work, such as image noise and color distortion. The stated effects are speed, a high system frequency band, and smooth output.

Embodiment Construction

[0024] The present invention is further described below with reference to the accompanying drawings.

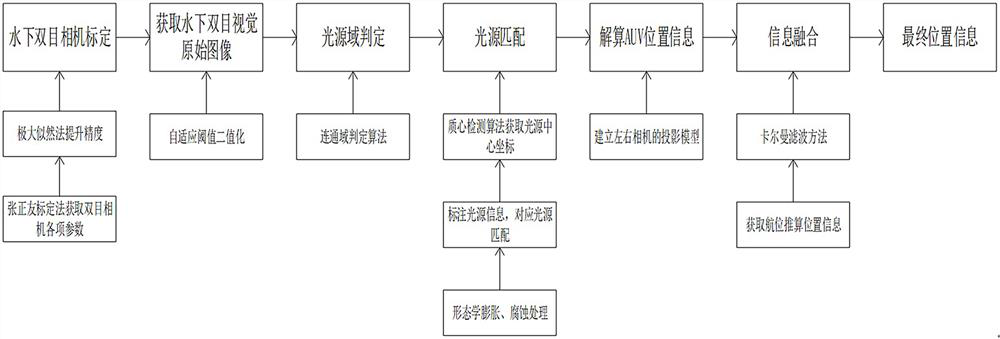

[0025] As shown in Figures 1 to 6, the binocular-vision-based underwater robot recovery and positioning method of the present invention comprises the following steps:

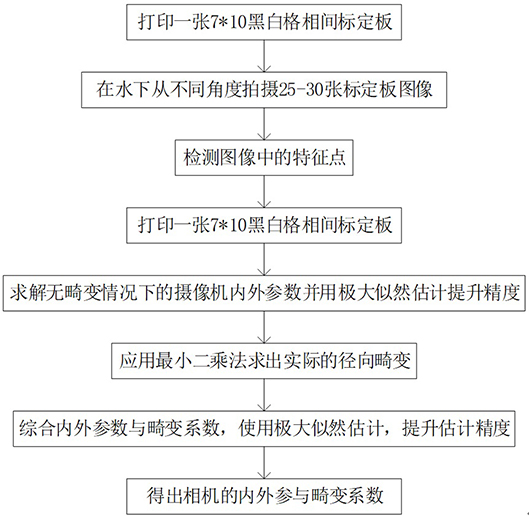

[0026] Step 1: Use two underwater CCD cameras to photograph the calibration board and obtain the parameters of the binocular camera, including the intrinsic and extrinsic parameter matrices, the distortion coefficients, and the rotation and translation matrices between the two cameras;
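To make the role of each Step 1 parameter concrete, the following is a minimal sketch of how an intrinsic matrix, an extrinsic rotation and translation, and distortion coefficients combine to map a 3-D point to a pixel. It assumes a standard pinhole model with a two-term radial distortion (`k1`, `k2`); the patent text shown here does not specify the distortion model, and the function names `project_point` and `left_to_right` are hypothetical.

```python
def project_point(point_world, K, R, t, dist):
    """Project a 3-D world point to pixel coordinates.

    K    : 3x3 intrinsic matrix (nested lists)
    R, t : extrinsic rotation (3x3) and translation (3,), world -> camera
    dist : (k1, k2) radial distortion coefficients (assumed two-term model)
    """
    # Extrinsic step: X_cam = R * X_world + t
    xc = [sum(R[i][j] * point_world[j] for j in range(3)) + t[i]
          for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")

    # Perspective division to normalized image coordinates
    x, y = xc[0] / xc[2], xc[1] / xc[2]

    # Radial distortion applied in normalized coordinates
    k1, k2 = dist
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    xd, yd = x * scale, y * scale

    # Intrinsic step: focal lengths, skew, and principal point
    u = K[0][0] * xd + K[0][1] * yd + K[0][2]
    v = K[1][1] * yd + K[1][2]
    return u, v


def left_to_right(point_left, R_lr, T_lr):
    """Express a point given in the left camera frame in the right camera frame,
    using the inter-camera rotation R_lr and translation T_lr."""
    return [sum(R_lr[i][j] * point_left[j] for j in range(3)) + T_lr[i]
            for i in range(3)]
```

Calibration is the inverse problem: given many observed pixel positions of known board points, it estimates `K`, `R`, `t`, `dist`, and the inter-camera `R_lr`, `T_lr` so that this forward model reproduces the observations.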

[0027] Zhang Zhengyou's planar calibration method is applied to calibrate the basic parameters of the camera. First, print a 7×10 black-and-white grid calibration board and capture several images of it underwater from different angles; detect the feature points in the images to solve for the internal and external parameters of the camera in the ideal distortion-free case and use the maximum lik...
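A planar calibration method of this kind places the world frame on the board, so every reference point has Z = 0. The sketch below generates those reference coordinates. It assumes that "7*10" counts squares (giving 6×9 inner corners) and uses a hypothetical square size of 0.03 m; neither is confirmed by the text, and `board_corner_points` is an illustrative name.

```python
def board_corner_points(rows_squares=7, cols_squares=10, square_size=0.03):
    """Return the inner-corner coordinates of a grid board in the board frame.

    The board lies in the plane Z = 0, so each point is (X, Y, 0.0).
    rows_squares, cols_squares : number of squares per side (assumed meaning of 7*10)
    square_size                : edge length of one square in metres (hypothetical)
    """
    points = []
    # A grid of R x C squares has (R - 1) x (C - 1) inner corners
    for r in range(rows_squares - 1):
        for c in range(cols_squares - 1):
            points.append((c * square_size, r * square_size, 0.0))
    return points
```

The same list is reused for every captured view; only the detected pixel positions of the corners change from image to image.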
