Few-shot cross-modal hash retrieval common representation learning method

A common representation learning method for few-shot cross-modal hash retrieval, applicable to neural learning methods, unstructured text data retrieval, and multimedia data retrieval. It addresses problems such as the inability to capture data correlation.

Embodiment Construction

[0055] The accompanying drawings are for illustrative purposes only and shall not be construed as limiting the patent.

[0056] To better illustrate this embodiment, some parts in the drawings may be omitted, enlarged, or reduced, and do not represent the dimensions of the actual product.

[0057] Those skilled in the art will understand that certain well-known structures and their descriptions may be omitted from the drawings.

[0058] The technical solutions of the present invention will be further described below in conjunction with the accompanying drawings and embodiments.

[0059] As shown in Figure 1, a common representation learning method for few-shot cross-modal hash retrieval includes the following steps:

[0060] S1: Divide the dataset and preprocess the original image and text data;

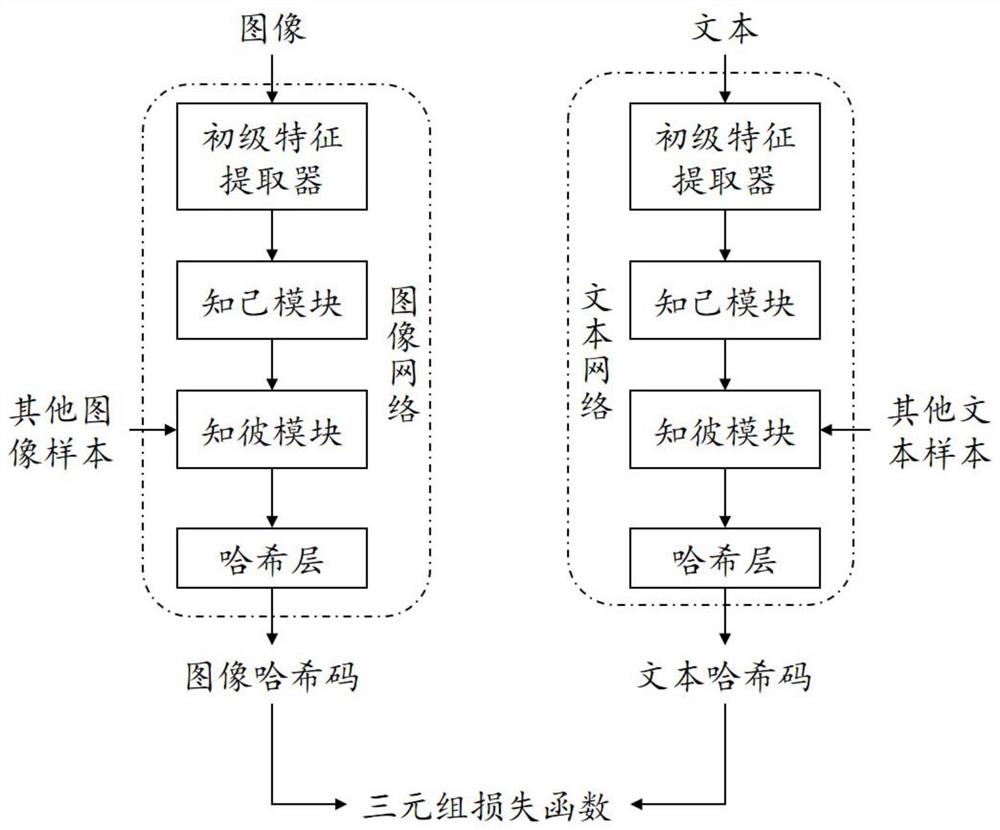

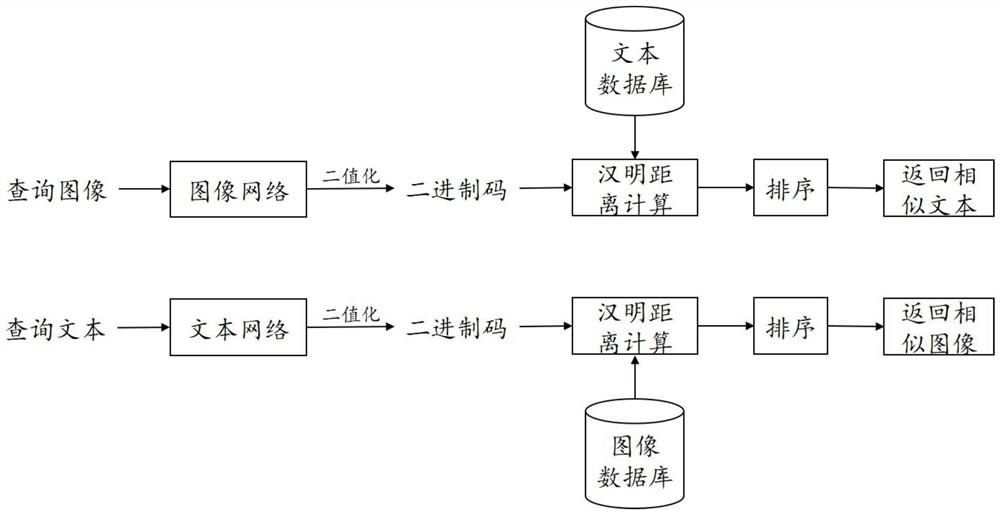

[0061] S2: Establish two parallel deep network structures that extract feature representations from the preprocessed images and texts, respectively;

[0062] S3: Establish a hash layer...
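Steps S1 and S2 can be illustrated with a minimal sketch in plain Python. Everything named here is an assumption made for illustration and does not come from the patent: the query/retrieval/training split convention, the pixel scaling, the bag-of-words text encoding, and the single-layer `LinearBranch` that stands in for each deep network. Step S3 is truncated in the text above, so the hash layer is not sketched.

```python
import random


def split_dataset(pairs, n_query, n_train, seed=0):
    """S1 (assumed convention): shuffle image-text pairs, then split them
    into a query set, a retrieval set, and a training subset of the
    retrieval set."""
    rng = random.Random(seed)
    shuffled = list(pairs)
    rng.shuffle(shuffled)
    query = shuffled[:n_query]
    retrieval = shuffled[n_query:]
    train = retrieval[:n_train]
    return query, retrieval, train


def normalize_pixels(image):
    """S1 (assumed preprocessing): scale 8-bit pixel values to [0, 1]."""
    return [p / 255.0 for p in image]


def bag_of_words(text, vocab):
    """S1 (assumed preprocessing): binary bag-of-words over a fixed vocabulary."""
    tokens = set(text.lower().split())
    return [1.0 if word in tokens else 0.0 for word in vocab]


class LinearBranch:
    """S2 stand-in (hypothetical): one dense layer with ReLU in place of a
    real deep network for a single modality."""

    def __init__(self, in_dim, out_dim, rng):
        self.weights = [
            [rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
            for _ in range(out_dim)
        ]

    def __call__(self, x):
        return [
            max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in self.weights
        ]


# Toy data: each pair is (flattened 4-pixel image, caption).
vocab = ["dog", "cat", "beach", "sky"]
pairs = [([i, 2 * i, 255 - i, 128], "a dog on the beach") for i in range(10)]

query, retrieval, train = split_dataset(pairs, n_query=2, n_train=5)

# Two parallel branches, one per modality, mapping into the same feature size.
rng = random.Random(0)
image_branch = LinearBranch(in_dim=4, out_dim=8, rng=rng)
text_branch = LinearBranch(in_dim=len(vocab), out_dim=8, rng=rng)

image, caption = train[0]
image_features = image_branch(normalize_pixels(image))
text_features = text_branch(bag_of_words(caption, vocab))
```

Both branches emit vectors of the same length, so a later layer could compare or combine them. In practice each branch would be a trained deep model rather than a random dense layer.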
