Point cloud feature space representation method for laser SLAM

A feature space representation and point cloud technology, applied in the fields of instruments, character and pattern recognition, and computer components. It addresses problems in complex scene recognition: existing methods do not consider local geometric features and semantic context features, and the static-scene assumption cannot be satisfied.


Embodiment Construction

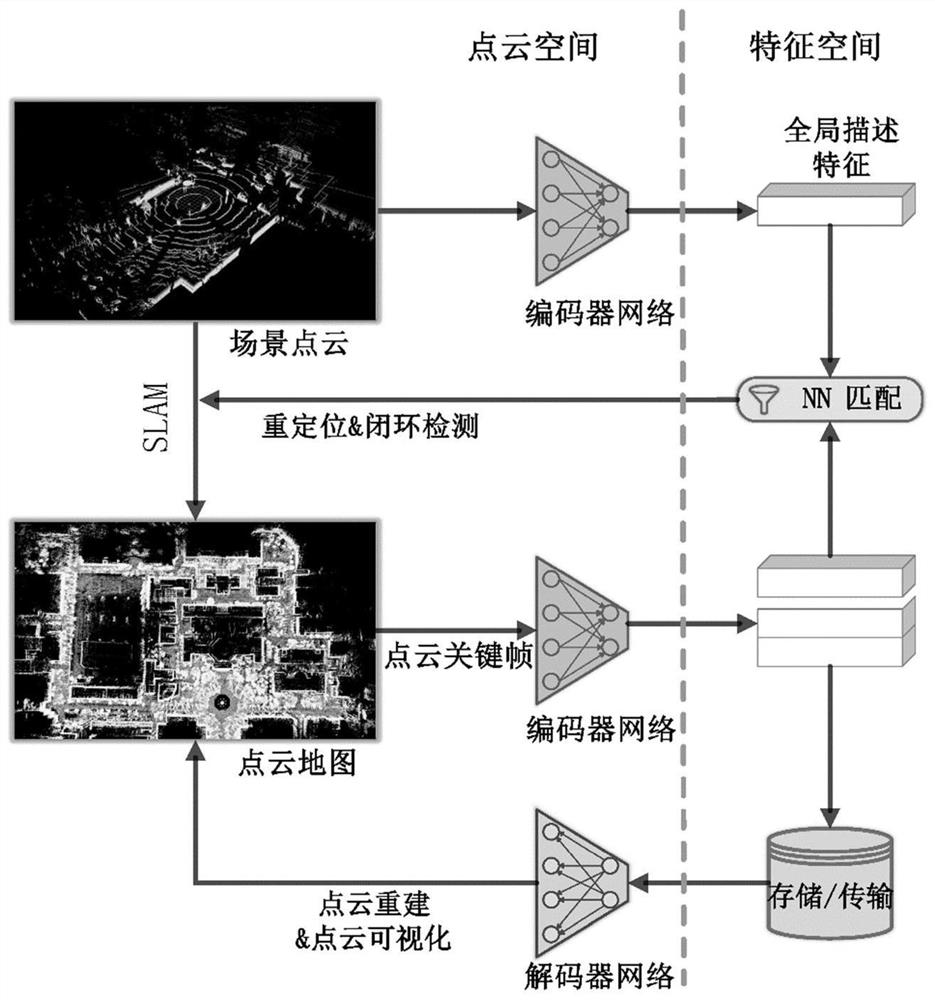

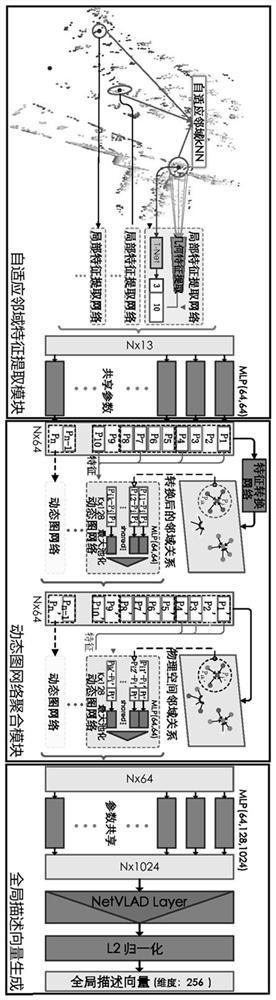

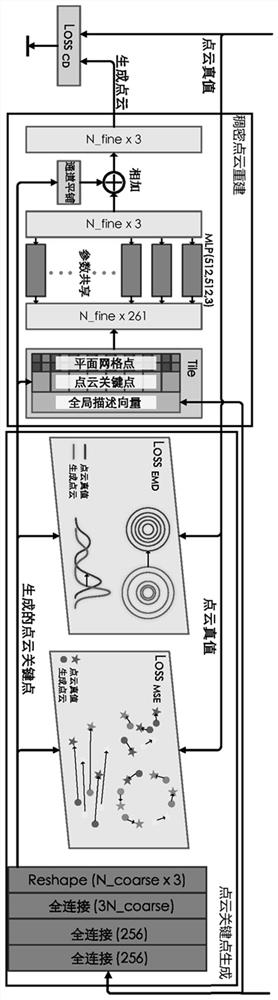

[0041] As shown in Figure 1, a point cloud feature space representation method for laser SLAM includes the following steps:

[0042] Step 1: Preprocess the point cloud samples in the point cloud dataset (for example, by filtering and downsampling), and construct training point cloud sample pairs according to the similarity of the point cloud scenes, including positive samples p_pos and negative samples p_neg.
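The downsampling part of Step 1 can be illustrated with a minimal sketch. The source does not say which filter or downsampling scheme is used, so the voxel-grid centroid approach below, the function name `voxel_downsample`, and the `voxel_size` parameter are all assumptions made for illustration only.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Hypothetical helper: replace all points in one voxel by their centroid.

    points: iterable of (x, y, z) tuples, in metres.
    voxel_size: voxel edge length in metres (an assumed parameter).
    """
    buckets = defaultdict(list)
    for p in points:
        # Integer voxel index of the point along each axis.
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    # One centroid per occupied voxel, in order of first occurrence.
    return [
        tuple(sum(axis) / len(pts) for axis in zip(*pts))
        for pts in buckets.values()
    ]


cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.5, 1.5, 1.5)]
reduced = voxel_downsample(cloud, voxel_size=1.0)
```

Here the first two points share a voxel and collapse into one centroid, so `reduced` holds two points. A larger `voxel_size` gives a sparser cloud.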

[0043] The similarity of a training sample pair is judged with the help of the samples' position coordinates in the map. Samples whose positions are within 10 m of each other are regarded as positive samples with similar structure. Distances between 10 m and 50 m define the negative sample selection range, from which negative samples are randomly selected. Randomly selected samples beyond 50 m are regarded as extremely dissimilar, extremely negative samples.
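The distance rule in [0043] can be sketched as follows. This is only an illustration under stated assumptions: the function names, the use of 2D map coordinates, the handling of the exact 10 m and 50 m boundaries, and returning every positive candidate (rather than one) are not specified in the source.

```python
import math
import random

POS_MAX_M = 10.0   # within 10 m: positive (structurally similar)
NEG_MAX_M = 50.0   # between 10 m and 50 m: negative selection range


def pair_label(anchor_xy, other_xy):
    """Classify a sample relative to an anchor by map distance in metres."""
    d = math.dist(anchor_xy, other_xy)
    if d <= POS_MAX_M:       # boundary handling is an assumption
        return "positive"
    if d <= NEG_MAX_M:
        return "negative"
    return "extreme_negative"


def build_training_tuple(anchor_id, positions, rng):
    """Hypothetical helper: collect positives and draw random negatives.

    positions: dict mapping sample id -> (x, y) map coordinates.
    rng: a random.Random instance, passed in for reproducibility.
    """
    groups = {"positive": [], "negative": [], "extreme_negative": []}
    for sid, xy in positions.items():
        if sid != anchor_id:
            groups[pair_label(positions[anchor_id], xy)].append(sid)
    neg = groups["negative"]
    ext = groups["extreme_negative"]
    return {
        "positives": groups["positive"],
        # Negatives and extreme negatives are randomly selected, as in [0043].
        "negative": rng.choice(neg) if neg else None,
        "extreme_negative": rng.choice(ext) if ext else None,
    }


positions = {"a": (0.0, 0.0), "b": (5.0, 0.0), "c": (30.0, 0.0), "d": (100.0, 0.0)}
sample = build_training_tuple("a", positions, random.Random(0))
```

With these toy coordinates, sample `b` is a positive for anchor `a`, `c` falls in the negative range, and `d` is an extreme negative.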

[0044] Specifically: select the Oxford RobotCar dataset, use the p...
