Professional stereoscopic video visual comfort classification method based on attention and recurrent neural network

The invention relates to recurrent neural networks and stereoscopic video, and applies to the fields of biological neural network models, stereoscopic systems, and neural architectures. It addresses problems such as accounting for child audiences.

Embodiment Construction

[0068] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

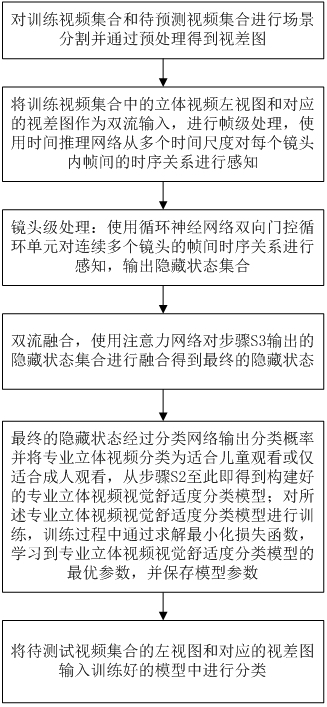

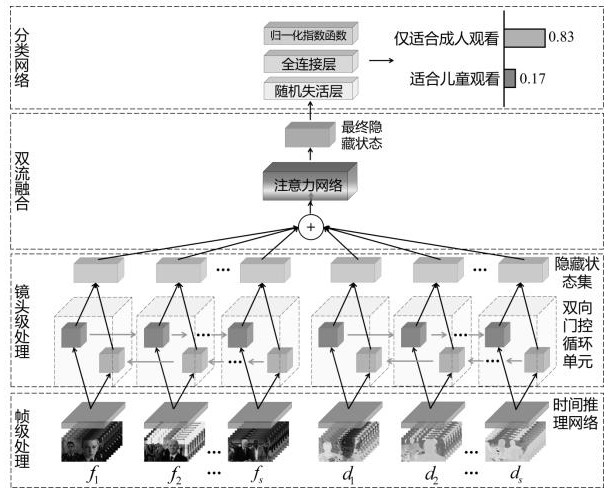

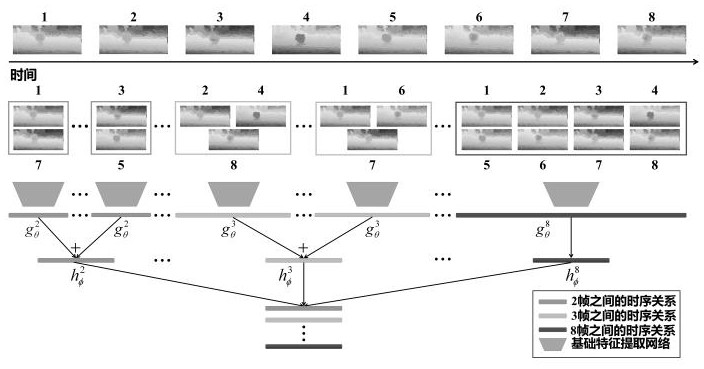

[0069] As shown in Figure 1 and Figure 2, this embodiment provides a professional stereoscopic video visual comfort classification method based on attention and a recurrent neural network, comprising the following steps:

[0070] Step S1: Perform scene segmentation on the training video set and the video set to be predicted, and obtain disparity maps through preprocessing. This specifically includes the following steps:

[0071] Step S11: Use a multimedia video processing tool to split the video into individual frames;

[0072] Step S12: Use a shot division algorithm to divide the stereoscopic video into non-overlapping video segments, each of which is called a shot;

[0073] Step S13: Divide each frame into left and right views, and use the SiftFlow algorithm to calculate the horizontal displacement of corresponding pixels in th...
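The preprocessing in steps S12 and S13 can be illustrated with a minimal sketch. The text does not name the shot division algorithm or the stereo frame layout, so this example makes two assumptions: a cut is declared when the L1 distance between consecutive intensity histograms exceeds a threshold, and each frame stores the left and right views side by side. The names `histogram`, `split_into_shots`, `split_views`, and the `threshold` value are hypothetical. Frames are plain nested lists of grayscale values so the sketch has no dependencies. The SiftFlow disparity computation is not shown.

```python
from typing import List, Sequence, Tuple

# Assumption: a frame is a non-empty grid of grayscale intensities in 0..255.
Frame = List[List[int]]


def histogram(frame: Frame, bins: int = 16) -> List[float]:
    """Normalized intensity histogram of one grayscale frame."""
    counts = [0] * bins
    total = 0
    for row in frame:
        for value in row:
            counts[min(value * bins // 256, bins - 1)] += 1
            total += 1
    return [c / total for c in counts]


def split_into_shots(
    frames: Sequence[Frame], threshold: float = 0.5
) -> List[Tuple[int, int]]:
    """Return non-overlapping (start, end) frame index ranges, end exclusive.

    Hypothetical criterion: a new shot starts where the L1 distance between
    the histograms of consecutive frames exceeds `threshold`.
    """
    if not frames:
        return []
    shots: List[Tuple[int, int]] = []
    start = 0
    prev = histogram(frames[0])
    for i in range(1, len(frames)):
        cur = histogram(frames[i])
        if sum(abs(a - b) for a, b in zip(prev, cur)) > threshold:
            shots.append((start, i))
            start = i
        prev = cur
    shots.append((start, len(frames)))
    return shots


def split_views(frame: Frame) -> Tuple[Frame, Frame]:
    """Split a side-by-side stereo frame into (left, right) views."""
    half = len(frame[0]) // 2
    left = [row[:half] for row in frame]
    right = [row[half:2 * half] for row in frame]
    return left, right
```

Each resulting shot is a contiguous index range, so every frame belongs to exactly one shot, which matches the non-overlapping requirement of step S12. The left and right views returned by `split_views` would then be the inputs to the horizontal displacement computation described in step S13.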
