Recommended object determination method and device, electronic equipment and storage medium

A recommended object determination technology and electronic equipment, applied in the fields of electrical digital data processing, special data processing applications, instruments, etc. It addresses the problems of low recommendation accuracy and insufficient consideration of cross-session information, and has the effect of improving accuracy and recommendation performance.



Examples

Embodiment 1

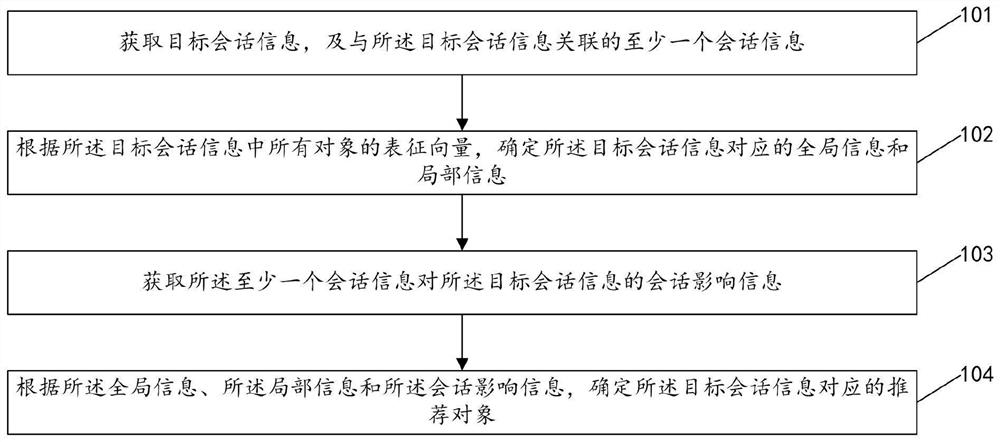

[0073] Refer to Figure 1, which shows a flowchart of the steps of a method for determining a recommended object provided by an embodiment of the present disclosure. As shown in Figure 1, the method for determining a recommended object may specifically include the following steps:

[0074] Step 101: Obtain target session information and at least one piece of session information associated with the target session information.

[0075] The embodiments of the present disclosure can be applied to a scenario in which the object that the user is likely to click next is determined and then recommended to the user.

[0076] The target session information refers to the session information formed by the target user clicking on an object (such as a business, an item, etc.) within a current period of time.

[0077] The at least one piece of session information refers to session information of a historical period associated with the target session information.
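The inputs of step 101 can be sketched as follows. This is a minimal illustration, not the disclosed implementation: the `Session` type, its fields, and the `associated_sessions` helper are hypothetical, and the rule used for "associated" (same user, earlier start time, most recent first) is an assumption, since the visible text does not state the association criterion.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Session:
    """One session: the objects a user clicked within one period of time."""
    user_id: str
    start_ts: int               # session start time in seconds (assumed unit)
    clicked_objects: List[str]  # object IDs in click order


def associated_sessions(target: Session, history: List[Session],
                        max_sessions: int = 3) -> List[Session]:
    """Return up to `max_sessions` historical sessions for the target session.

    Assumption: "associated" means same user and an earlier start time.
    """
    earlier = [s for s in history
               if s.user_id == target.user_id and s.start_ts < target.start_ts]
    # Most recent historical sessions first.
    earlier.sort(key=lambda s: s.start_ts, reverse=True)
    return earlier[:max_sessions]


target = Session("u1", 1000, ["shop_a", "item_7"])
history = [
    Session("u1", 100, ["item_1"]),
    Session("u2", 500, ["item_9"]),
    Session("u1", 600, ["item_2", "item_3"]),
    Session("u1", 2000, ["item_4"]),
]
related = associated_sessions(target, history)
```

Here `related` holds the two earlier sessions of user `u1`; the session of `u2` and the later session are excluded under the assumed rule.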

[0078] Understandably, at...

Embodiment 2

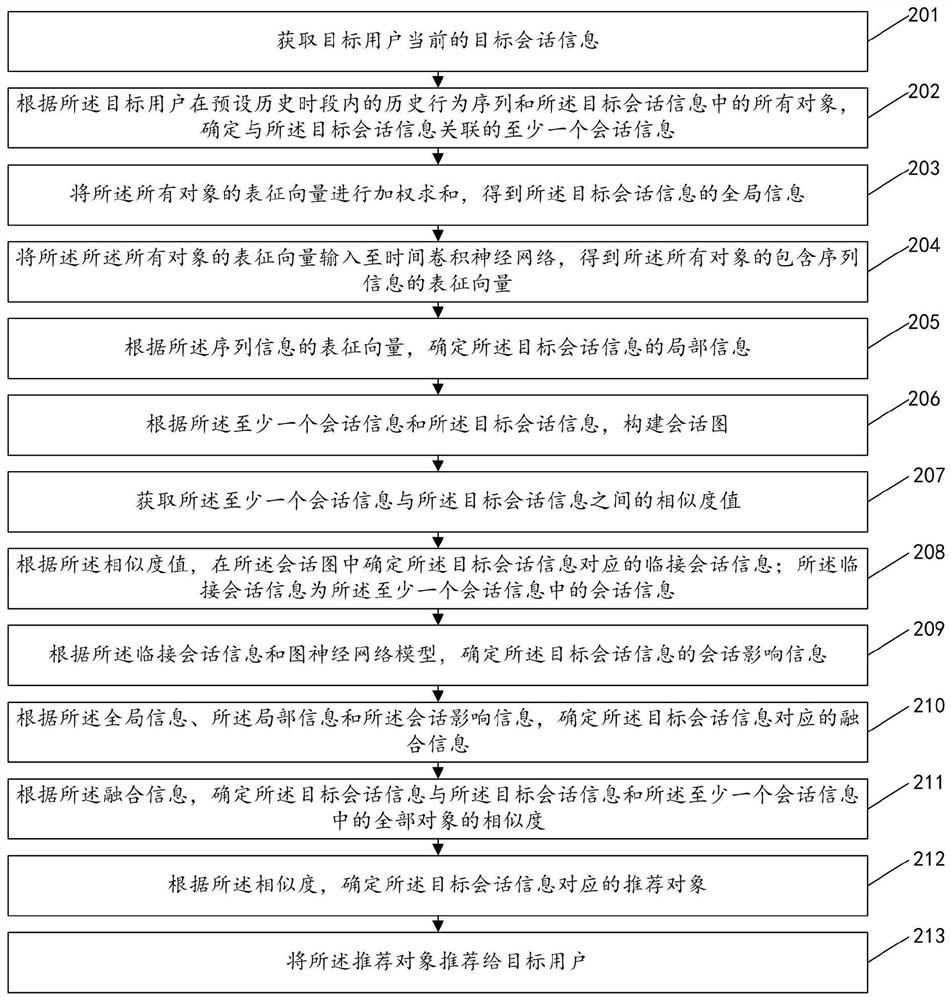

[0105] Refer to Figure 2, which shows a flowchart of the steps of another method for determining a recommended object provided by an embodiment of the present disclosure. As shown in Figure 2, the method for determining a recommended object may specifically include the following steps:

[0106] Step 201: Obtain the current target session information of the target user.

[0107] The embodiments of the present disclosure can be applied to a scenario in which the object that the user is likely to click next is determined and then recommended to the user.

[0108] The target session information refers to the session information formed by the target user clicking on an object (such as a business, an item, etc.) within a current period of time.

[0109] When the next click behavior of the target user needs to be predicted, the current target session information of the target user may be acquired, and then step 202 is performed.
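Step 201 can be illustrated with a short sketch that assembles the current target session from a click log. The `Click` tuple layout, the `current_session` helper, and the 30-minute window are assumptions made for illustration; the text only says that the session covers clicks "within a current period of time".

```python
from typing import List, Tuple

# Hypothetical click record: (user_id, timestamp in seconds, object_id).
Click = Tuple[str, int, str]


def current_session(clicks: List[Click], user_id: str, now: int,
                    window_s: int = 1800) -> List[str]:
    """Return the objects the user clicked in the last `window_s` seconds,
    in click order. The window length is an assumed parameter."""
    recent = [(ts, obj) for uid, ts, obj in clicks
              if uid == user_id and now - window_s <= ts <= now]
    recent.sort(key=lambda pair: pair[0])  # order by timestamp
    return [obj for _, obj in recent]


clicks = [
    ("u1", 100, "item_1"),
    ("u1", 5000, "shop_a"),
    ("u2", 5100, "item_9"),
    ("u1", 5200, "item_7"),
]
target_session = current_session(clicks, "u1", now=5400)
```

With these sample clicks, only the two clicks of `u1` inside the window form the target session; the click at timestamp 100 belongs to a historical period.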

[0110] Step 202: Determine at least one piece...

Embodiment 3

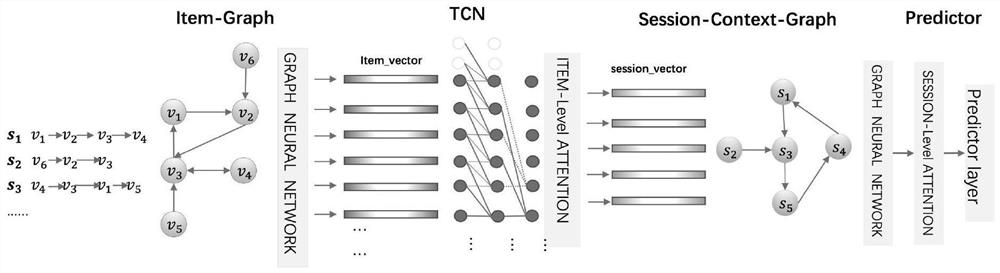

[0167] Refer to Figure 3, which shows a schematic structural diagram of an apparatus for determining a recommended object provided by an embodiment of the present disclosure. As shown in Figure 3, the recommended object determination apparatus may specifically include the following modules:

[0168] A session information acquisition module 310, configured to acquire target session information and at least one piece of session information associated with the target session information;

[0169] A global information determining module 320, configured to determine global information and local information corresponding to the target session information according to the characterization vectors of all objects in the target session information;

[0170] A session impact acquiring module 330, configured to acquire session impact information of the at least one piece of session information on the target session information;

[0171] The recommended object determining module 340 is configured t...
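As a rough illustration of what module 320 computes, the sketch below derives global and local information from the characterization vectors of the objects in a session. The aggregation is an assumption, since the visible text does not specify it: here the global information is the mean of all object vectors and the local information is the vector of the last clicked object, a simple choice often used in session-based recommenders. The `global_and_local` function and the sample embeddings are hypothetical.

```python
from typing import Dict, List, Tuple

Vector = List[float]


def global_and_local(session: List[str],
                     embeddings: Dict[str, Vector]) -> Tuple[Vector, Vector]:
    """Return (global_info, local_info) for one session.

    Assumed aggregation: global = mean of all object vectors,
    local = vector of the most recently clicked object.
    """
    vectors = [embeddings[obj] for obj in session]
    if not vectors:
        raise ValueError("session must contain at least one object")
    dim = len(vectors[0])
    global_info = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    local_info = list(vectors[-1])
    return global_info, local_info


# Hypothetical 2-dimensional characterization vectors.
embeddings = {
    "shop_a": [1.0, 0.0],
    "item_7": [0.0, 1.0],
    "item_2": [2.0, 2.0],
}
global_info, local_info = global_and_local(["shop_a", "item_7", "item_2"], embeddings)
```

A learned attention weighting over the object vectors could replace the plain mean without changing the interface.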
