Ping-pong storage method and device for sparse neural network

A ping-pong storage technology for neural networks, applied in fields such as biological neural network models, neural architectures, and data-processing input/output, to provide ping-pong storage of network data.
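Ping-pong storage is the double-buffering pattern: two storage banks alternate roles, so one bank can be filled while the other is being read. The minimal Python sketch below shows only that generic pattern. The class `PingPongBuffer`, the function `run_pipeline`, and the `compute` callback are hypothetical names and do not come from the patent.

```python
from typing import Callable, List, Sequence


class PingPongBuffer:
    """Two banks used alternately: one is read while the other is written.

    Hypothetical illustration of generic double buffering, not the patented design.
    """

    def __init__(self) -> None:
        self.banks: List[list] = [[], []]  # bank 0 and bank 1
        self.active = 0  # index of the bank the consumer currently reads

    def write_inactive(self, data: Sequence) -> None:
        # Load the next data into the bank that is NOT being read.
        self.banks[1 - self.active] = list(data)

    def read_active(self) -> list:
        return self.banks[self.active]

    def swap(self) -> None:
        # Toggle roles: the freshly loaded bank becomes the one being read.
        self.active = 1 - self.active


def run_pipeline(batches: Sequence[Sequence[float]],
                 compute: Callable[[list], float]) -> List[float]:
    buf = PingPongBuffer()
    results: List[float] = []
    if not batches:
        return results
    buf.write_inactive(batches[0])  # preload the first batch
    buf.swap()
    for i in range(len(batches)):
        # In hardware this load would overlap with compute(); here it runs sequentially.
        if i + 1 < len(batches):
            buf.write_inactive(batches[i + 1])
        results.append(compute(buf.read_active()))
        buf.swap()
    return results
```

For example, `run_pipeline([[1, 2], [3, 4], [5]], sum)` processes each batch from the bank that was loaded during the previous step and returns `[3, 7, 5]`.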

Embodiment Construction

[0044] To help those skilled in the art better understand the technical solutions in the embodiments of the present invention, and to make the above objectives, features, and advantages of these embodiments clearer, the technical solutions are described in further detail below with reference to the accompanying drawings.

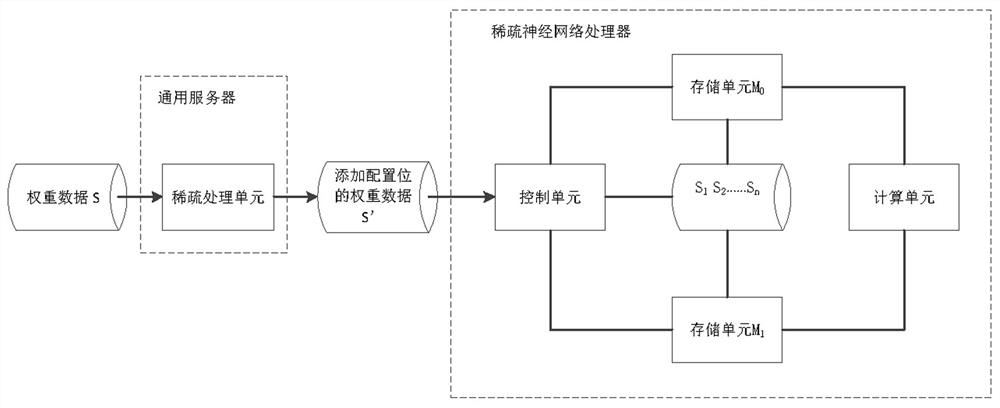

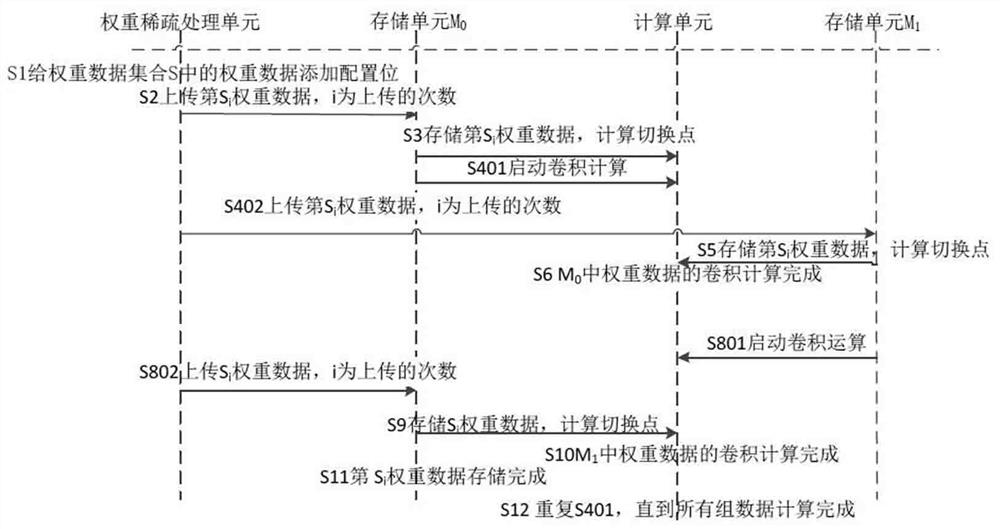

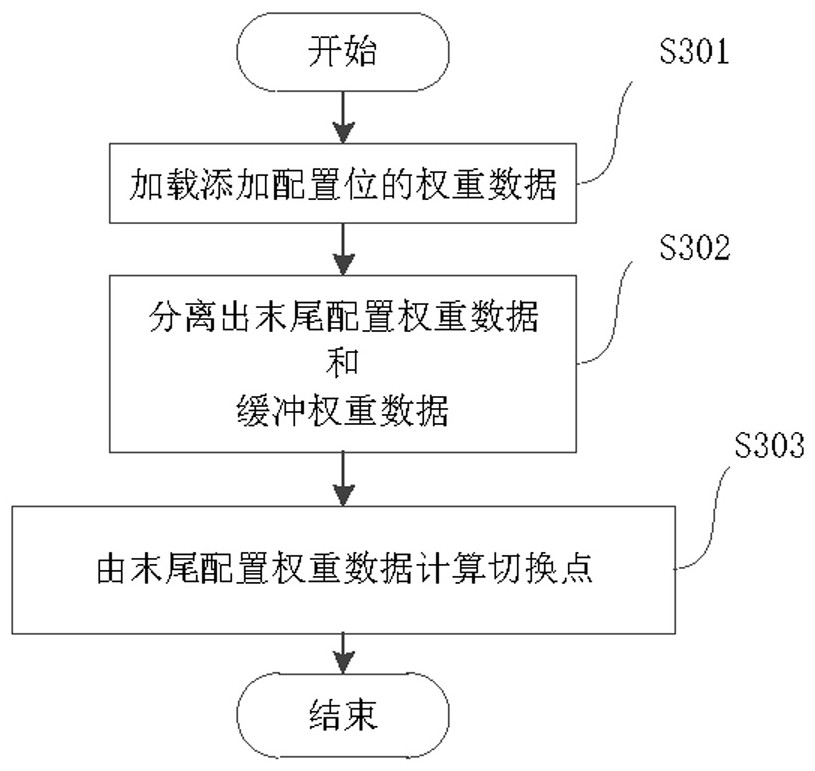

[0045] Figure 1 is a schematic diagram of a ping-pong storage system for sparse neural networks. As shown in Figure 1, the system includes a sparse processing unit located in a general-purpose server and, in the sparse neural network processor, two weight storage units M0 and M1 and a control unit. The sparse processing unit processes the weight data: in addition to performing sparse processing on the weight data, it adds configuration bits to the weight data during processing. ...
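The paragraph above does not specify the sparse processing method or the format of the configuration bits (the text is truncated). The Python sketch below assumes, purely for illustration, that sparse processing is magnitude pruning against a threshold and that the configuration bits are a per-weight keep/drop bitmask plus one bit selecting the destination weight storage unit (M0 or M1). `PackedWeights`, `sparsify`, `unpack`, `ControlUnit`, and `prepare_layers` are hypothetical names; this is not the method described or claimed in the patent.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class PackedWeights:
    target_unit: int      # hypothetical config bit: 0 -> M0, 1 -> M1
    mask: int             # hypothetical config bits: bit i set if weight i is kept
    values: List[float]   # kept (nonzero) weights only, in original order


def sparsify(weights: Sequence[float], threshold: float, target_unit: int) -> PackedWeights:
    """Assumed sparse processing: drop weights with |w| <= threshold, record a bitmask."""
    mask = 0
    values: List[float] = []
    for i, w in enumerate(weights):
        if abs(w) > threshold:
            mask |= 1 << i
            values.append(w)
    return PackedWeights(target_unit, mask, values)


def unpack(p: PackedWeights, length: int) -> List[float]:
    """Rebuild the dense weight vector from the bitmask and the compacted values."""
    it = iter(p.values)
    return [next(it) if (p.mask >> i) & 1 else 0.0 for i in range(length)]


class ControlUnit:
    """Hypothetical router: stores each packed layer in M0 or M1 per its target bit."""

    def __init__(self) -> None:
        self.units: Dict[int, PackedWeights] = {}

    def store(self, p: PackedWeights) -> None:
        self.units[p.target_unit] = p


def prepare_layers(layers: Sequence[Sequence[float]], threshold: float) -> List[PackedWeights]:
    # Alternate destination units so consecutive layers ping-pong between M0 and M1.
    return [sparsify(w, threshold, i % 2) for i, w in enumerate(layers)]
```

With this encoding, `sparsify([0.5, 0.01, -0.3, 0.0], 0.1, 0)` keeps two weights, yields mask `0b101`, and `unpack` restores `[0.5, 0.0, -0.3, 0.0]`. Alternating the target bit lets one unit be refilled while the processor reads the other.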
