Two-way exposure fusion network model and method for low-illumination image enhancement

A two-way exposure fusion network for low-illumination image enhancement, with applications in image enhancement, biological neural network models, and image analysis. It addresses the problems of unnatural enhancement results, inappropriate colors, and difficulty of implementation, and provides good noise suppression.

Examples

Example 1

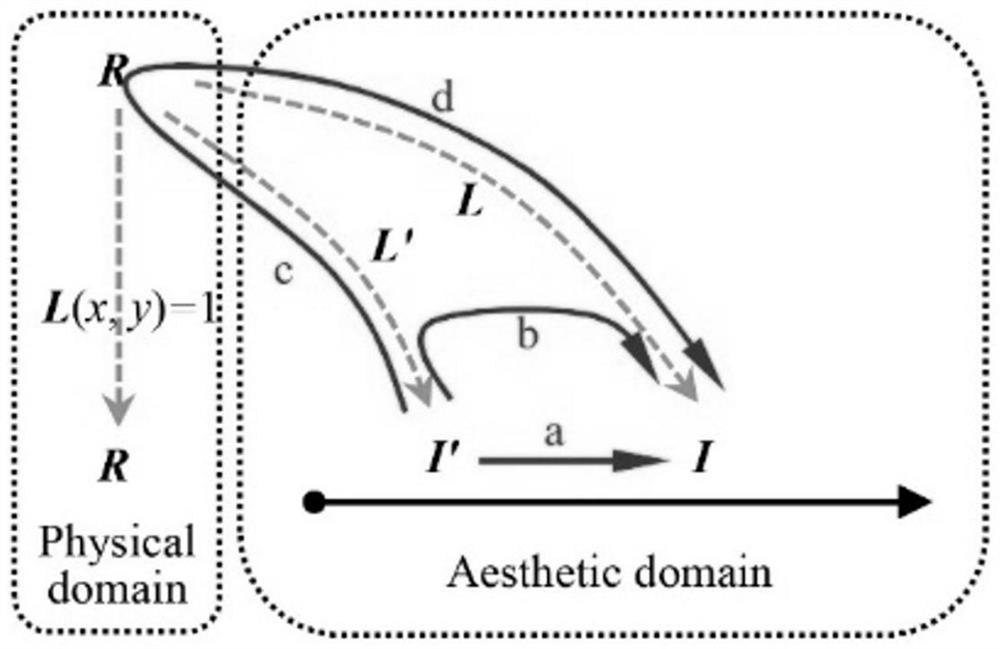

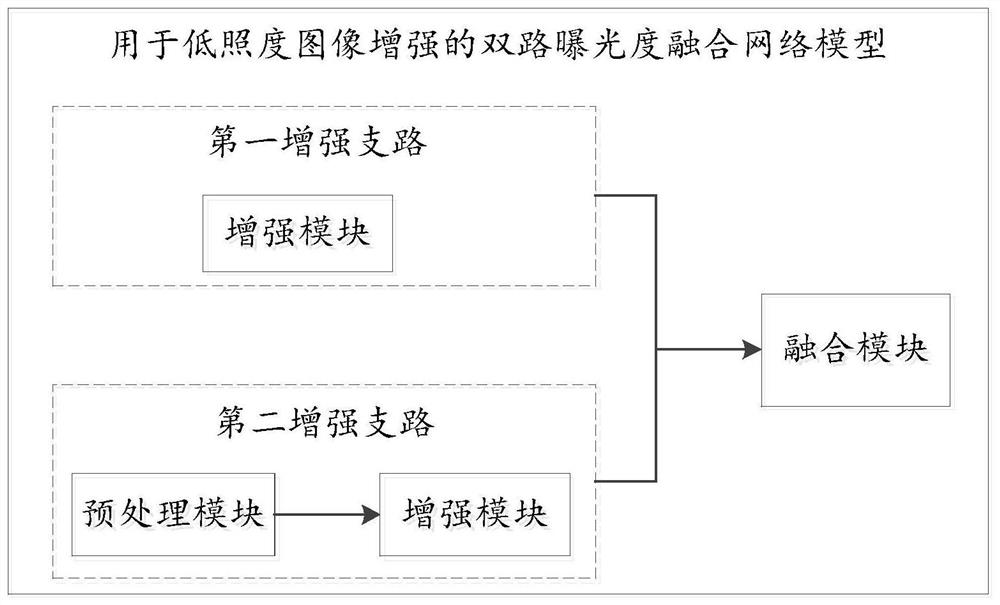

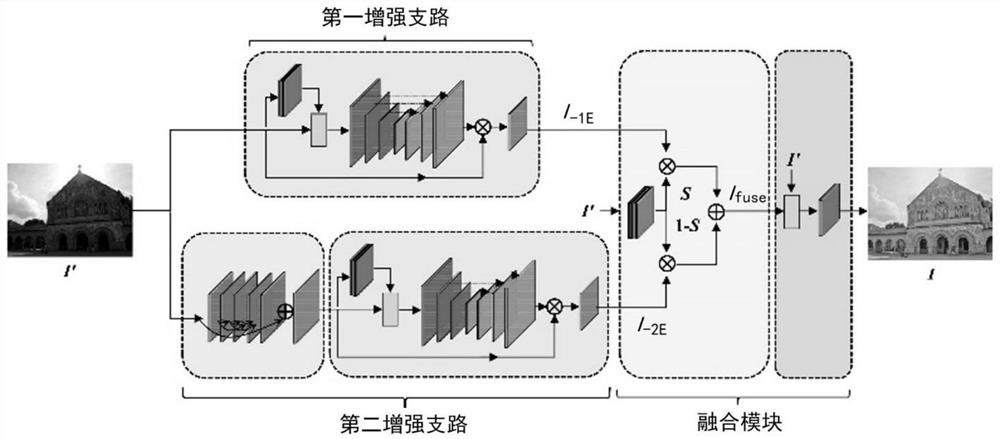

[0046] To address the problems of existing methods, this embodiment proposes a two-way exposure fusion model for low-light image enhancement based on a convolutional neural network. The model is inspired by the human creative process, in which a good work draws empirical guidance (fusion) from multiple attempts (generation). This embodiment holds that low-illuminance image enhancement can adopt the same generation-and-fusion strategy. Because brightness is one of the most important and most variable factors in the imaging process, the enhancement strategy of this embodiment focuses on producing the desired enhancement for images of various unknown illuminance levels.

[0047] For this reason, in the image generation stage, the method proposed in this embodiment uses a two-branch structure, and uses different enhancement strategies to deal with the two situations of extremely low and low i...

Example 2

[0177] This embodiment provides a two-way exposure fusion method for low-illuminance image enhancement, which can be implemented by an electronic device. The execution flow of the method is shown in Figure 4 and includes the following steps:

[0178] S101. Process the low-illuminance image to be enhanced with different preset enhancement strategies to obtain the two-way enhancement results for that image;

[0179] S102. Perform weighted fusion of the two-way enhancement results obtained with the different enhancement strategies to obtain the enhanced image corresponding to the low-illuminance image to be enhanced;
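Steps S101 and S102 can be illustrated with a minimal NumPy sketch. It is not the network described in this embodiment: the two convolutional enhancement branches are replaced by two gamma curves, and the fusion weights come from a simple "well-exposedness" score. The function names, gamma values, and weighting rule are all assumptions chosen for illustration only.

```python
import numpy as np


def enhance_gamma(img, gamma):
    """Stand-in enhancement branch (assumption): gamma curve on an image in [0, 1]."""
    return np.clip(img, 0.0, 1.0) ** gamma


def well_exposedness(img, sigma=0.2):
    """Hypothetical per-pixel weight: larger where intensity is close to mid-gray."""
    return np.exp(-((img - 0.5) ** 2) / (2.0 * sigma ** 2))


def two_way_fusion(low):
    # S101: apply two different enhancement strategies to the same input.
    strong = enhance_gamma(low, 0.3)  # aimed at extremely dark input
    mild = enhance_gamma(low, 0.6)    # aimed at moderately dark input

    # S102: weighted fusion, with per-pixel weights normalized by their sum.
    w_strong = well_exposedness(strong)
    w_mild = well_exposedness(mild)
    total = w_strong + w_mild + 1e-8  # small constant avoids division by zero
    return (w_strong * strong + w_mild * mild) / total


# Synthetic dark image (values in [0, 0.2]) used only as a demonstration input.
rng = np.random.default_rng(0)
low = rng.uniform(0.0, 0.2, size=(4, 4, 3))
fused = two_way_fusion(low)
```

Because the weights are normalized per pixel, the fused value always lies between the two branch outputs, which keeps the result within the valid intensity range.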

[0180] In S101 above, the image to be enhanced is enhanced using the following formula:

[0181]

[0182] where the terms of the formula denote, respectively, the enhanced image, the input image to be enhanced, the element-wise product, ...

Example 3

[0191] This embodiment provides an electronic device, which includes a processor and a memory; at least one instruction is stored in the memory, and the instruction is loaded and executed by the processor, so as to implement the method of the second embodiment.

[0192] The electronic device may vary considerably with configuration or performance, and may include one or more processors (central processing units, CPU) and one or more memories. At least one instruction is stored in the memory, and the instruction is loaded by the processor to perform the following steps:

[0193] S101. Process the low-illuminance image to be enhanced with different preset enhancement strategies to obtain the two-way enhancement results for that image;

[0194] S102. Perform weighted fusion of two-way enhancement results corresponding to the low-illuminance image to be enhanced obtained by using different enhan...
