Infrared and visible light image fusion method and system based on generative adversarial network

An infrared and visible light image fusion technique based on a generative adversarial network. It addresses three problems: existing fusion methods are poorly suited to infrared and visible light images, manually designed fusion rules limit the development of fusion methods, and such designs are complex. The method avoids manual and complex design, improves fusion performance, and produces fused images with good visual effects.

Examples

Embodiment 1

[0048] This embodiment provides an infrared and visible light image fusion method based on a generative adversarial network.

[0049] The infrared and visible light image fusion method based on a generative adversarial network includes:

[0050] S101: Acquire an infrared image and a visible light image to be fused;

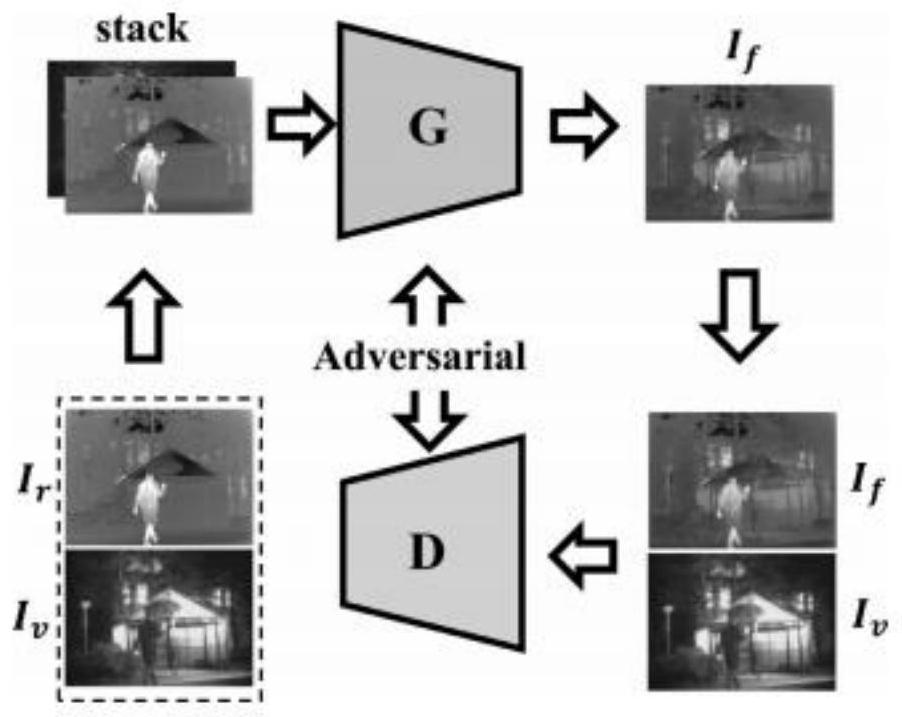

[0051] S102: Simultaneously input the infrared image and the visible light image to be fused into the pre-trained generative adversarial network, and output the fused image. As one or more embodiments, the generative adversarial network includes an overall loss function, which comprises a content loss function, a detail loss function, a target edge enhancement loss function and an adversarial loss function.
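The overall loss is described here only by its four components. The sketch below shows how such terms could be combined into one generator objective, assuming forms commonly used in GAN-based infrared/visible fusion: content loss as pixel MSE to the infrared image, detail loss as gradient MSE to the visible image, target edge enhancement as gradient error weighted by an infrared edge map, and a least-squares adversarial term. All function names and the `lam_*` weights are hypothetical and are not taken from the patent.

```python
import numpy as np

EPS = 1e-8


def gradient_magnitude(img: np.ndarray) -> np.ndarray:
    """Forward-difference gradient magnitude; the last row/column gets a zero difference."""
    gx = np.diff(img, axis=1, append=img[:, -1:])
    gy = np.diff(img, axis=0, append=img[-1:, :])
    return np.sqrt(gx ** 2 + gy ** 2)


def content_loss(fused: np.ndarray, ir: np.ndarray) -> float:
    # Assumed form: preserve the thermal intensity of the infrared image.
    return float(np.mean((fused - ir) ** 2))


def detail_loss(fused: np.ndarray, vis: np.ndarray) -> float:
    # Assumed form: preserve the texture (gradients) of the visible image.
    return float(np.mean((gradient_magnitude(fused) - gradient_magnitude(vis)) ** 2))


def edge_enhancement_loss(fused: np.ndarray, ir: np.ndarray) -> float:
    # Assumed form: weight gradient errors by the normalized infrared edge map,
    # so strong target edges in the infrared image are penalized more heavily.
    g_ir = gradient_magnitude(ir)
    weight = g_ir / (g_ir.max() + EPS)
    return float(np.mean(weight * (gradient_magnitude(fused) - g_ir) ** 2))


def adversarial_loss(d_fake: np.ndarray) -> float:
    # Assumed least-squares GAN generator term: push D(fused) toward the "real" label 1.
    return float(np.mean((d_fake - 1.0) ** 2))


def total_generator_loss(fused, ir, vis, d_fake,
                         lam_content=1.0, lam_detail=5.0,
                         lam_edge=1.0, lam_adv=0.01):
    """Weighted sum of the four terms; every weight is a hypothetical placeholder."""
    terms = {
        "content": content_loss(fused, ir),
        "detail": detail_loss(fused, vis),
        "edge": edge_enhancement_loss(fused, ir),
        "adversarial": adversarial_loss(d_fake),
    }
    total = (lam_content * terms["content"] + lam_detail * terms["detail"]
             + lam_edge * terms["edge"] + lam_adv * terms["adversarial"])
    return total, terms


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ir, vis = rng.random((64, 64)), rng.random((64, 64))
    fused = 0.5 * (ir + vis)  # naive average, used only as an example input
    total, terms = total_generator_loss(fused, ir, vis, d_fake=np.array([0.3, 0.6]))
    print(total, terms)
```

Returning the individual terms alongside the total makes it easy to see which component dominates while tuning the (assumed) weights.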

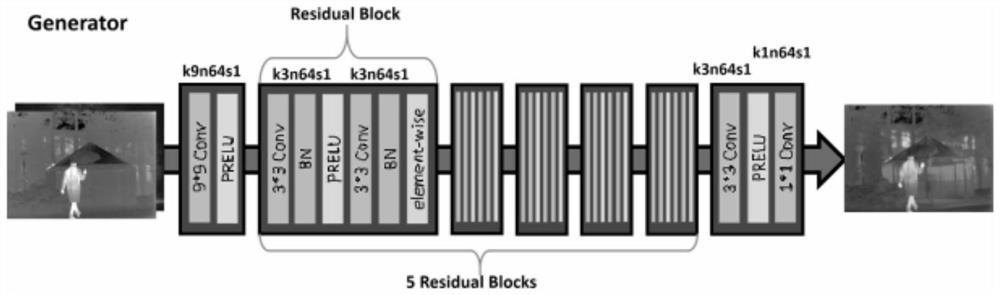

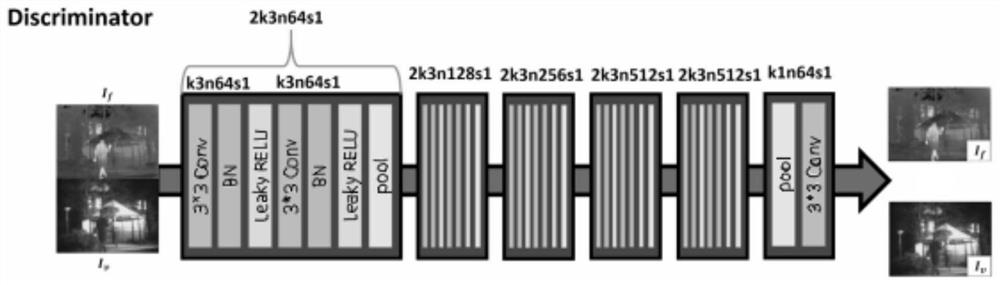

[0052] As one or more embodiments, the generative adversarial network includes a generator and a discriminator; the generator is a ResNet classification network, and the discriminator is a VGG-Net neural network.
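Only the network families are named here. The heavily simplified, single-channel NumPy sketch below illustrates the building block that characterizes each family: the skip connection of a ResNet residual block (output = x + F(x)), and the VGG pattern of stacked 3x3 convolutions with 2x2 max-pooling, reduced to a scalar "real/fake" score. Depth, kernel values, the absence of multiple channels and batch normalization, and the sigmoid scoring head are all assumptions for illustration. This is not the patent's architecture.

```python
import numpy as np


def conv3x3(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Single-channel 3x3 cross-correlation with zero 'same' padding."""
    h, w = x.shape
    padded = np.pad(x.astype(float), 1)  # zero-pad one pixel on every side
    out = np.zeros((h, w))
    for i in range(3):
        for j in range(3):
            out += k[i, j] * padded[i:i + h, j:j + w]
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def residual_block(x: np.ndarray, k1: np.ndarray, k2: np.ndarray) -> np.ndarray:
    """ResNet-style basic block without batch norm: ReLU(x + conv(ReLU(conv(x))))."""
    return relu(x + conv3x3(relu(conv3x3(x, k1)), k2))


def max_pool2x2(x: np.ndarray) -> np.ndarray:
    """2x2 max-pooling with stride 2 (an odd trailing row/column is dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


def vgg_style_score(img: np.ndarray, kernels: list) -> float:
    """VGG-style discriminator: repeated (3x3 conv -> ReLU -> 2x2 max-pool) stages,
    then a global average passed through a sigmoid to give a score in (0, 1)."""
    x = img
    for k in kernels:
        x = max_pool2x2(relu(conv3x3(x, k)))
    return float(1.0 / (1.0 + np.exp(-x.mean())))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fused = rng.random((16, 16))
    feat = residual_block(fused, rng.normal(size=(3, 3)), rng.normal(size=(3, 3)))
    score = vgg_style_score(fused, [rng.normal(size=(3, 3)) for _ in range(2)])
    print(feat.shape, score)
```

The skip connection lets the generator pass the input through unchanged when the learned residual is zero, which is why residual blocks are a common choice for image-to-image generators.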

[0053] Further, the content ...

Embodiment 2

[0143] This embodiment provides an infrared and visible light image fusion system based on a generative adversarial network, including:

[0144] An acquisition module configured to: acquire an infrared image and a visible light image to be fused;

[0145] A fusion module configured to: simultaneously input the infrared image and the visible light image to be fused into a pre-trained generative adversarial network, and output the fused image. As one or more embodiments, the generative adversarial network includes a total loss function, which comprises a content loss function, a detail loss function, a target edge enhancement loss function and an adversarial loss function.

[0146] It should be noted here that the acquisition module and the fusion module above correspond to steps S101 to S102 in the first embodiment, and the examples and a...
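The two modules of this embodiment map onto steps S101 and S102. A minimal Python sketch of that structure follows. The class names, the assumption of registered 8-bit grayscale inputs, and the choice to concatenate the two images along a channel axis are all hypothetical. `generator` is any callable standing in for the pre-trained GAN generator, and the demo uses a channel mean as a placeholder, not a trained model.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

import numpy as np


class AcquisitionModule:
    """Step S101: obtain the infrared and visible light images to be fused."""

    def acquire(self, ir: np.ndarray, vis: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
        if ir.shape != vis.shape:
            raise ValueError(f"images must be registered to the same size, got {ir.shape} vs {vis.shape}")
        # Assumption: 8-bit grayscale inputs, rescaled to floats in [0, 1].
        return ir.astype(np.float64) / 255.0, vis.astype(np.float64) / 255.0


@dataclass
class FusionModule:
    """Step S102: feed both images to the pre-trained generator at the same time."""
    generator: Callable[[np.ndarray], np.ndarray]  # hypothetical pre-trained generator

    def fuse(self, ir: np.ndarray, vis: np.ndarray) -> np.ndarray:
        # Assumption: the two images are stacked along a channel axis -> shape (H, W, 2).
        stacked = np.stack([ir, vis], axis=-1)
        return np.clip(self.generator(stacked), 0.0, 1.0)


class FusionSystem:
    """Wires the acquisition module to the fusion module."""

    def __init__(self, generator: Callable[[np.ndarray], np.ndarray]):
        self.acquisition = AcquisitionModule()
        self.fusion = FusionModule(generator)

    def run(self, ir: np.ndarray, vis: np.ndarray) -> np.ndarray:
        ir_n, vis_n = self.acquisition.acquire(ir, vis)
        return self.fusion.fuse(ir_n, vis_n)


if __name__ == "__main__":
    # Placeholder generator: averages the two channels (NOT a trained network).
    system = FusionSystem(lambda x: x.mean(axis=-1))
    rng = np.random.default_rng(0)
    ir = rng.integers(0, 256, size=(8, 8), dtype=np.uint8)
    vis = rng.integers(0, 256, size=(8, 8), dtype=np.uint8)
    print(system.run(ir, vis).shape)
```

Injecting the generator as a callable keeps the module wiring independent of any particular deep-learning framework.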

Embodiment 3

[0147] This embodiment also provides an electronic device, including one or more processors, one or more memories, and one or more computer programs. The processor is connected to the memory, and the one or more computer programs are stored in the memory. When the electronic device runs, the processor executes the one or more computer programs stored in the memory, so that the electronic device performs the method described in Embodiment 1.

Embodiment 4

This embodiment also provides a computer-readable storage medium for storing computer instructions. When the computer instructions are executed by a processor, the method described in Embodiment 1 is performed.
