Symmetric environment relocation method, device, and robot

Robot relocation technology, applied in the field of sweeping robots, that solves problems such as relocation errors and achieves the effect of partial relocation.


Examples

Embodiment 1

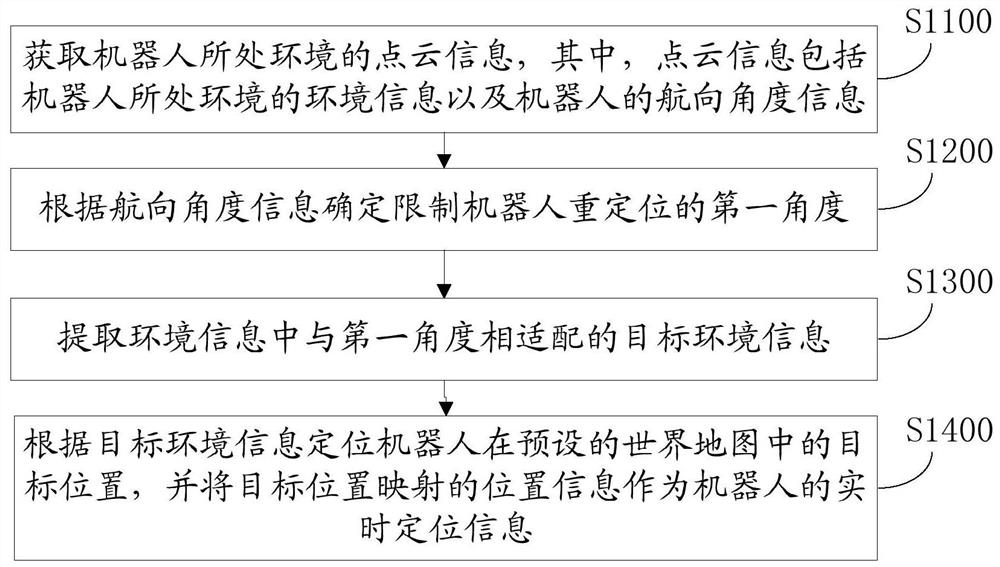

[0048] In some alternative embodiments, refer to Figure 1, which is a schematic flowchart of a symmetric environment relocation method according to an embodiment of the present application.

[0049] As shown in Figure 1, the present application provides a symmetric environment relocation method, including:

[0050] S1100. Obtain point cloud information about the environment in which the robot is located, where the point cloud information includes environmental information about that environment and heading angle information of the robot;

[0051] The point cloud information can be environmental information collected by a lidar. A lidar is a measurement device that integrates laser scanning with positioning and attitude-determination systems. The lidar system includes a laser and a receiving system. Its working principle is that the laser generates and emits a pulse of light, which hits an object, bounces back, and is eventually picked ...
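The time-of-flight principle described above can be sketched as follows. This is a minimal illustration, not the application's implementation: the function names, the scan format (a list of `(beam_angle, round_trip_time)` pairs) and the use of the robot's heading angle to rotate the points into a map-aligned frame are assumptions made for the example. Because the pulse travels to the object and back, the distance is half the round-trip time multiplied by the speed of light.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_range(round_trip_s: float) -> float:
    """Distance to the reflecting object from a pulse's round-trip time.

    The pulse covers the distance twice (out and back), hence the factor 1/2.
    """
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def scan_to_points(scan, heading_rad: float):
    """Convert a hypothetical scan of (beam_angle_rad, round_trip_s) pairs
    into 2-D points.

    Assumption: the robot sits at the origin, and each beam angle is measured
    relative to the robot's heading, so adding the heading angle expresses
    the beam direction in a map-aligned frame.
    """
    points = []
    for beam_angle, round_trip in scan:
        r = tof_range(round_trip)
        theta = heading_rad + beam_angle  # beam direction in the map frame
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    # A pulse returning after 20 ns corresponds to roughly 3 m.
    print(tof_range(20e-9))
    print(scan_to_points([(0.0, 20e-9), (math.pi / 2, 10e-9)], heading_rad=0.0))
```

Including the heading angle in the conversion means the same physical wall produces the same map-frame points regardless of which way the robot is facing, which is one plausible reason the point cloud information in S1100 carries heading angle information alongside the environmental information.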

Embodiment 2

[0062] In some alternative embodiments, refer to Figure 4, which is a schematic flowchart of loading a map in an embodiment of the symmetric environment relocation method of the present application.

[0063] As shown in Figure 4, before the step of obtaining the point cloud information of the robot's environment, the method further includes:

[0064] S1010. Obtain storage address information of the world map, wherein the world map includes a target area composed of multiple cleaning areas;

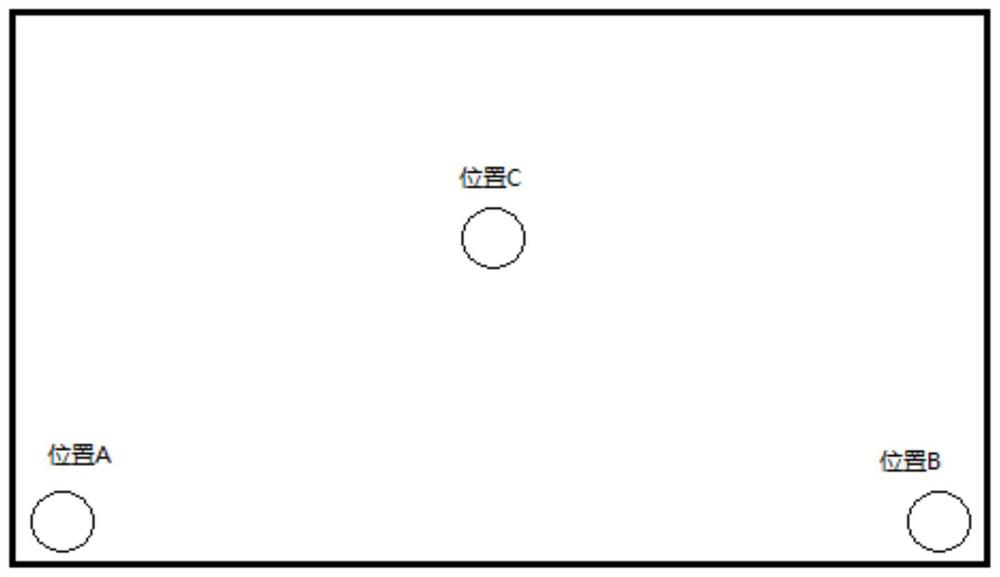

[0065] While the robot is working, it scans the cleaning environment and plans its cleaning trajectory to build a world map, dividing the cleaning environment into multiple cleaning areas. For example, a household is divided into multiple cleaning areas on the household cleaning map (such as network ...). The target area is composed of these cleaning areas. Take a home environment that includes a living room and a bedroom as an example. The liv...
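Step S1010 and the map structure described above can be illustrated with a small data model. This is a hedged sketch only: the application does not specify a storage format, so the assumptions here are that the "storage address" is a file path and that the world map is stored as JSON with a `target_area` list of cleaning areas, each with a hypothetical `name` and boundary `polygon`. The class and function names are likewise illustrative.

```python
import json
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class CleaningArea:
    name: str      # e.g. "living room" (illustrative)
    polygon: list  # hypothetical boundary as [[x, y], ...] in map coordinates


@dataclass
class WorldMap:
    # The target area is composed of multiple cleaning areas.
    target_area: list = field(default_factory=list)


def load_world_map(storage_address: str) -> WorldMap:
    """Load a world map from its storage address.

    Assumption: the address is a file path, and the file holds JSON of the form
    {"target_area": [{"name": ..., "polygon": [[x, y], ...]}, ...]}.
    """
    data = json.loads(Path(storage_address).read_text(encoding="utf-8"))
    areas = [CleaningArea(a["name"], a["polygon"]) for a in data["target_area"]]
    return WorldMap(target_area=areas)


if __name__ == "__main__":
    import os
    import tempfile

    doc = {"target_area": [
        {"name": "living room", "polygon": [[0, 0], [5, 0], [5, 4], [0, 4]]},
        {"name": "bedroom", "polygon": [[5, 0], [8, 0], [8, 4], [5, 4]]},
    ]}
    with tempfile.NamedTemporaryFile("w", suffix=".json", delete=False) as f:
        json.dump(doc, f)
    world = load_world_map(f.name)
    os.remove(f.name)
    print([area.name for area in world.target_area])
```

Keeping the map behind a storage address lets the robot reload a previously built map at start-up instead of re-scanning the whole home before it relocates.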

Embodiment 3

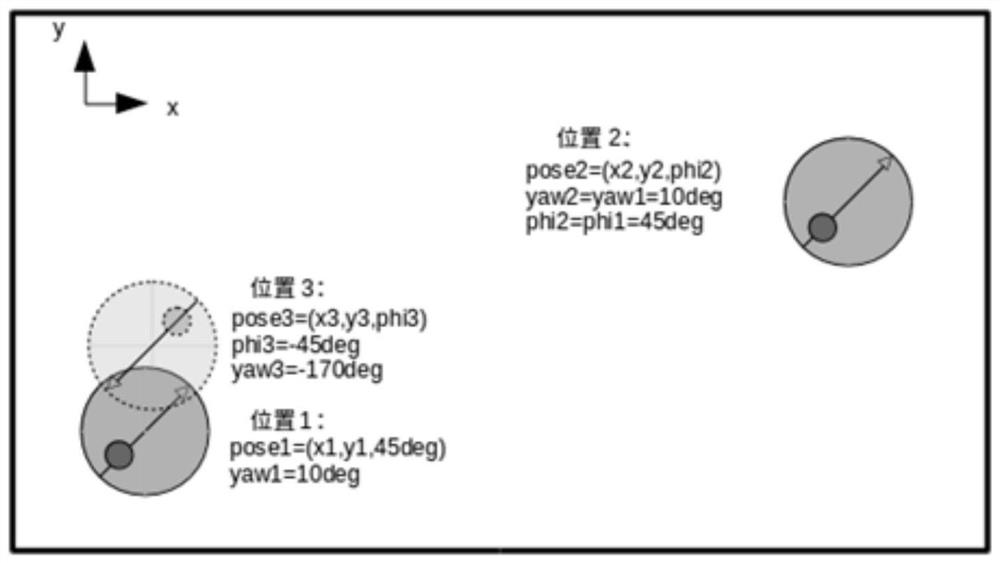

[0069] In some alternative embodiments, refer to Figure 5, which is a schematic flowchart of locating the target position in an embodiment of the symmetric environment relocation method of the present application.

[0070] As shown in Figure 5, the step of locating the robot's target position in the preset world map according to the target environment information includes:

[0071] S1410. Extract any cleaning area in the target area as the target position, and compare the target position with the environment represented by the target environment information;

[0072] S1420. When the target position does not match the environment represented by the target environment information, extract the next cleaning area as the target position until the target position matches the environment represented by the target environment information.
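Steps S1410 and S1420 amount to a linear search over the cleaning areas. The sketch below shows that loop under stated assumptions: the matching test is passed in as a function because the source does not say how a cleaning area is compared with the target environment information, and the example matcher (`signature_match`, comparing hypothetical range signatures by mean absolute difference with a hypothetical tolerance) is purely illustrative. Returning `None` when no area matches is also an assumption; the excerpt does not describe that case.

```python
from typing import Callable, Optional, Sequence


def locate_target_position(
    cleaning_areas: Sequence[dict],
    observed: Sequence[float],
    matches: Callable[[dict, Sequence[float]], bool],
) -> Optional[dict]:
    """S1410/S1420 as a loop: take a cleaning area as the candidate target
    position, compare it with the observed environment, and move on to the
    next area until one matches. Returns None if no area matches (assumed)."""
    for area in cleaning_areas:
        if matches(area, observed):
            return area
    return None


def signature_match(area: dict, observed: Sequence[float], tol: float = 0.2) -> bool:
    """Hypothetical matcher: compare the area's stored range signature with
    the observed one by mean absolute difference."""
    ref = area["signature"]
    if len(ref) != len(observed):
        return False
    diff = sum(abs(a - b) for a, b in zip(ref, observed)) / len(ref)
    return diff <= tol


if __name__ == "__main__":
    areas = [
        {"name": "living room", "signature": [4.0, 5.0, 4.0, 5.0]},
        {"name": "bedroom", "signature": [3.0, 3.0, 3.0, 3.0]},
    ]
    found = locate_target_position(areas, [3.1, 2.9, 3.0, 3.05], signature_match)
    print(found["name"] if found else "no match")
```

The loop returns the first area that passes the test, mirroring S1420 literally. In a symmetric environment more than one area might pass a purely geometric comparison, which is presumably where additional cues such as the heading angle from S1100 come in; how the application resolves this is not shown in this excerpt.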

[0073] The target area includes multiple cleaning areas, and the system compares the cleaning area in the target area with the t...
