Robot RGB-D SLAM method based on geometric and motion constraints in dynamic environment

The technology applies geometric and motion constraints in dynamic environments and belongs to the fields of instruments, surveying and navigation, and image analysis. It addresses problems such as camera pose deviation, increased visual SLAM positioning error, and map point calculation errors.

Embodiment Construction

[0070] The present invention will be further described below in conjunction with the accompanying drawings.

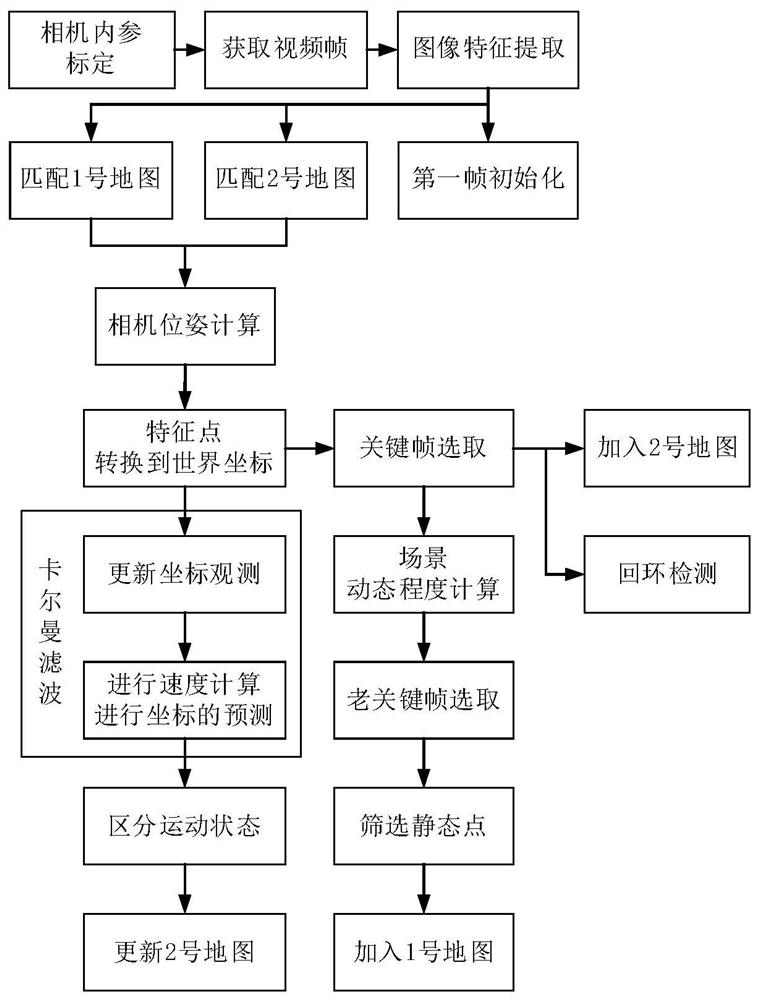

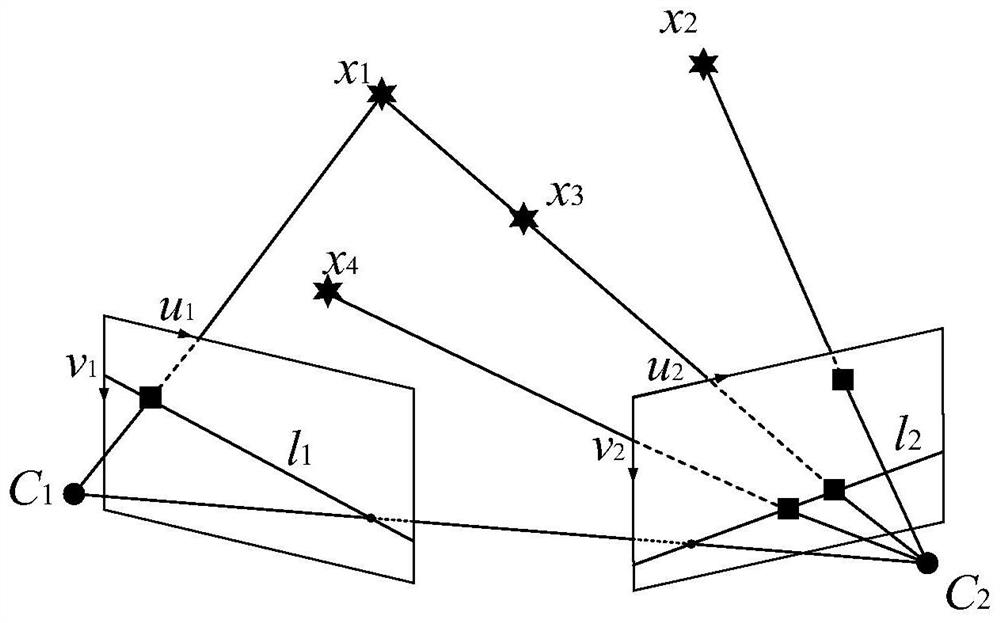

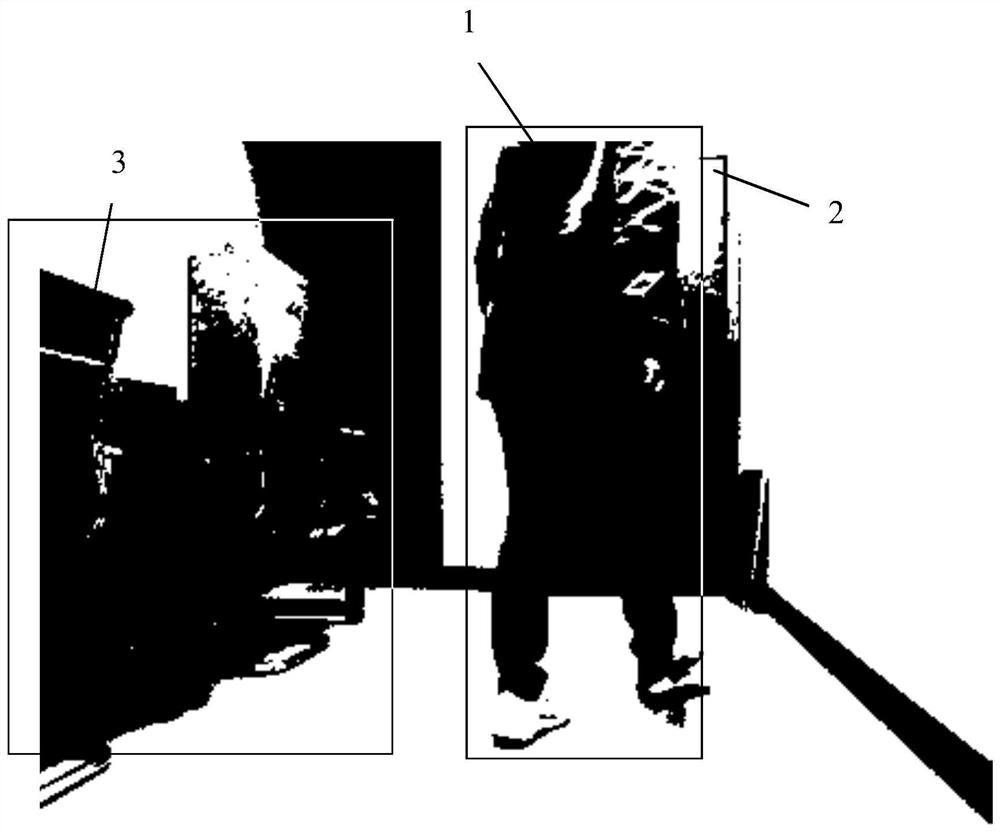

[0071] Referring to Figures 1 to 5, a robot RGB-D SLAM method based on geometric and motion constraints in a dynamic environment is provided. In an indoor dynamic environment, the method includes the following steps:

[0072] Step 1: Calibrate the camera intrinsic parameters (principal point, focal length, and distortion coefficients). The process is as follows:

[0073] Step 1.1: Use the camera to capture multiple images of a fixed-size checkerboard from different viewing angles;

[0074] Step 1.2: Apply Zhang Zhengyou's camera calibration method to the captured checkerboard images to compute the camera intrinsic parameters; the calibration result is denoted K;
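To illustrate how the calibration result K and the distortion coefficients from Step 1 are typically used, the following is a minimal sketch of the standard pinhole projection model. It is not taken from the patent text: the function names, the two-coefficient radial distortion model (k1, k2), and the numeric values in the usage note are assumptions chosen for illustration only.

```python
def make_intrinsics(fx, fy, cx, cy):
    """Build the 3x3 intrinsic matrix K from focal lengths and principal point."""
    return [[fx, 0.0, cx],
            [0.0, fy, cy],
            [0.0, 0.0, 1.0]]


def project(K, point_cam, k1=0.0, k2=0.0):
    """Project a 3D point in the camera frame to pixel coordinates.

    k1 and k2 are radial distortion coefficients (assumed model; the
    source does not say which distortion model is used).
    """
    X, Y, Z = point_cam
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    x, y = X / Z, Y / Z                      # normalized image coordinates
    r2 = x * x + y * y
    d = 1.0 + k1 * r2 + k2 * r2 * r2         # radial distortion factor
    u = K[0][0] * (x * d) + K[0][2]
    v = K[1][1] * (y * d) + K[1][2]
    return u, v


def back_project(K, u, v, depth):
    """Lift an undistorted pixel with a measured depth to a 3D camera-frame point."""
    X = (u - K[0][2]) / K[0][0] * depth
    Y = (v - K[1][2]) / K[1][1] * depth
    return X, Y, depth
```

With hypothetical intrinsics `K = make_intrinsics(525.0, 525.0, 319.5, 239.5)`, a point on the optical axis such as `(0, 0, 1)` projects to the principal point, and `back_project` inverts `project` when the distortion coefficients are zero. For an RGB-D camera the depth argument would come from the depth image.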

[0075] Step 2: Acquire the image frames in the video stream sequentially. First, build an image pyramid for the acquired image frames, and then divide the image into b...
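The image pyramid mentioned in Step 2 can be sketched as follows. This is only an illustration under stated assumptions: the source text is truncated and does not give the number of levels, the scale factor, or the filter, so the sketch assumes a factor of 2 between levels and simple 2x2 averaging. The names `downsample_half` and `build_pyramid` are hypothetical.

```python
def downsample_half(img):
    """Halve a grayscale image (list of rows) by averaging each 2x2 block."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * r][2 * c] + img[2 * r][2 * c + 1] +
              img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4.0
             for c in range(w)]
            for r in range(h)]


def build_pyramid(img, levels):
    """Return a list of images, level 0 being the input frame.

    Stops early if the image becomes too small to halve again.
    """
    pyramid = [img]
    for _ in range(levels - 1):
        prev = pyramid[-1]
        if len(prev) < 2 or len(prev[0]) < 2:
            break
        pyramid.append(downsample_half(prev))
    return pyramid
```

Feature-based SLAM systems often use a smaller scale factor with smoothing between levels, so the factor and filter here should be treated as placeholders rather than the method's actual parameters.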
