Distributed parallel deep neural network performance evaluation method for super computer

A method in the fields of deep neural networks and supercomputing, applicable to neural learning methods, biological neural network models, computing, etc. It addresses three problems: the computing power of the Tianhe-3 supercomputer cannot be fully evaluated or effectively utilized, no evaluation method is provided, and there is no prototype support. Its effects are shorter training time, full utilization of the machine, and faster convergence.



Problems solved by technology

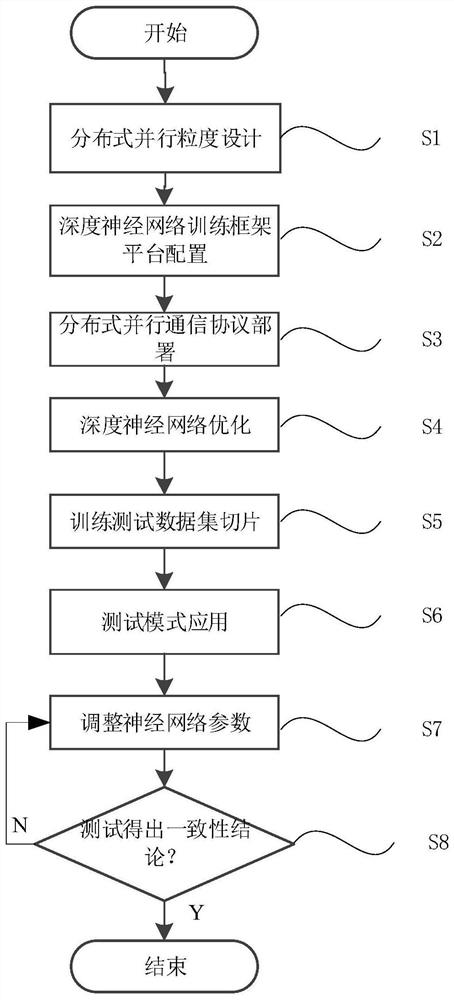


Embodiment

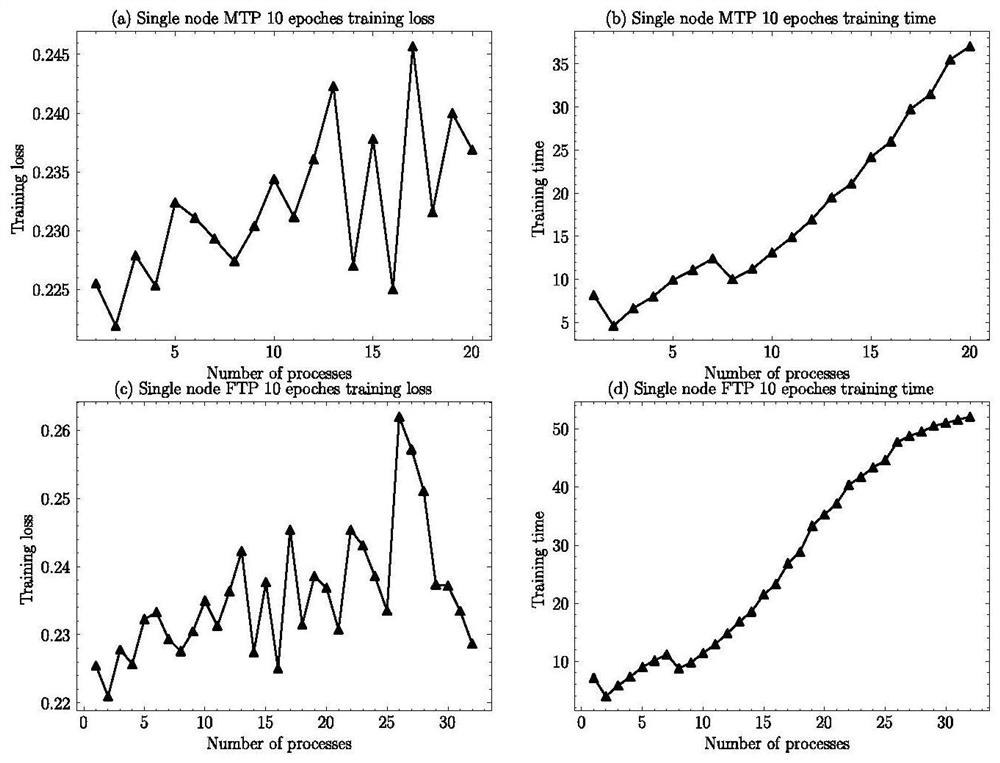

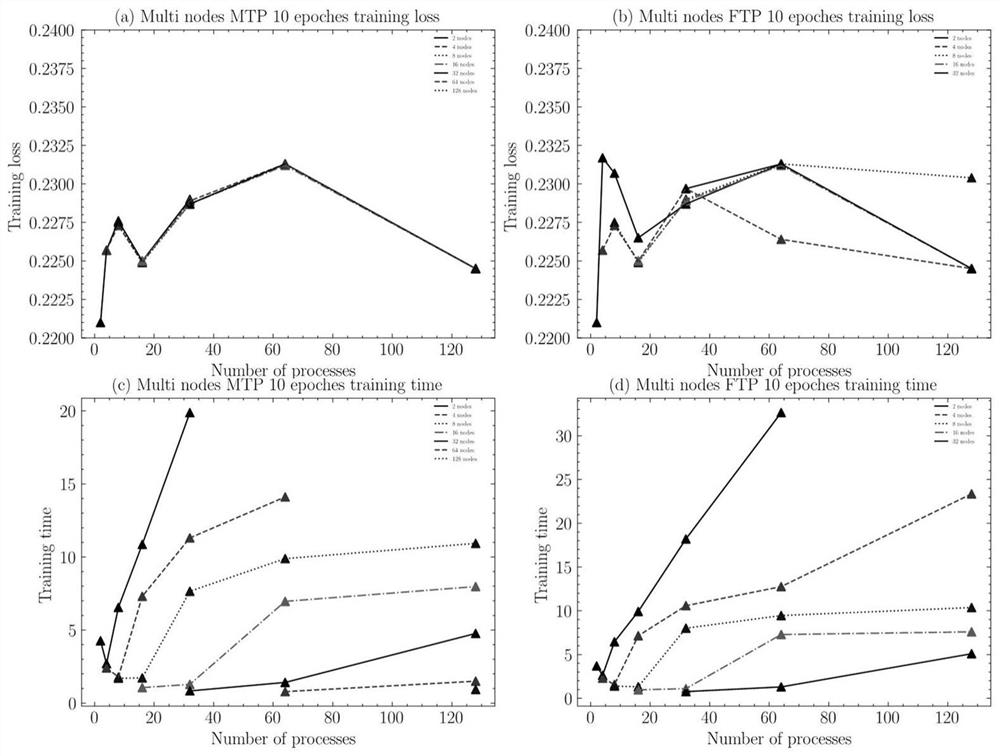

[0064] Taking the Tianhe-3 prototype as an example, the supercomputer-based distributed parallel deep neural network performance evaluation of the present invention is carried out. The Tianhe-3 prototype has two different types of processor nodes, MT-2000+ and FT-2000+. In this embodiment, four kinds of training tasks are designed: single-node multi-process parallel training on MT-2000+, single-node multi-process parallel training on FT-2000+, multi-node multi-process distributed training on MT-2000+, and multi-node multi-process distributed training on FT-2000+. Together they comprehensively evaluate the parallel training performance of a single node on the Tianhe-3 prototype and its scalability in multi-node distributed training. To ensure the robustness of the data, every experimental result in this embodiment is the arithmetic mean of five tests. The evaluation results are as follows:
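The evaluation protocol in [0064] (four task configurations, each result reported as the arithmetic mean of five tests, with multi-node runs judged on scalability) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the patent does not say how scalability is quantified, so the speedup and parallel-efficiency formulas below are the conventional definitions and are assumed here, and all function and variable names are hypothetical.

```python
from statistics import mean

# The four task configurations described in the embodiment.
EXPERIMENTS = [
    ("MT-2000+", "single-node multi-process parallel training"),
    ("FT-2000+", "single-node multi-process parallel training"),
    ("MT-2000+", "multi-node multi-process distributed training"),
    ("FT-2000+", "multi-node multi-process distributed training"),
]

# Each reported result is the arithmetic mean of five tests.
NUM_TRIALS = 5


def mean_of_trials(measurements):
    """Return the arithmetic mean of exactly NUM_TRIALS repeated measurements."""
    if len(measurements) != NUM_TRIALS:
        raise ValueError(f"expected {NUM_TRIALS} trials, got {len(measurements)}")
    return mean(measurements)


def speedup(baseline_time, scaled_time):
    """Assumed metric: baseline training time divided by scaled-out training time."""
    return baseline_time / scaled_time


def parallel_efficiency(baseline_time, scaled_time, baseline_units, scaled_units):
    """Assumed metric: measured speedup divided by the ideal (linear) speedup.

    'units' can be processes or nodes, whichever the experiment scales.
    A value of 1.0 means perfectly linear scaling.
    """
    ideal = scaled_units / baseline_units
    return speedup(baseline_time, scaled_time) / ideal
```

In this sketch, each averaged training time for a larger node or process count is compared against the averaged single-node (or single-process) baseline; efficiency values that fall as nodes are added would indicate communication overhead limiting scalability.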

[0065] 1. The performance of a single node is as follows:

[0066] In a single MT-2000+ node, the loss value of tra...
