An in-memory computing bit unit and an in-memory computing device

The bit unit and storage unit technology, applied in the field of in-memory computing, addresses the problems of wasted computing time and power, leakage power consumption, and a lack of relative advantage in computing throughput.



Examples

Embodiment 1

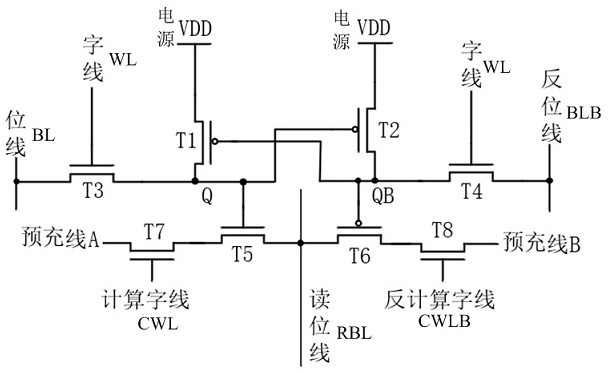

[0039] As shown in Figure 1, the present invention discloses an in-memory computing bit unit, which includes:

[0040] A four-transistor storage unit and a four-transistor calculation unit, with the calculation unit connected to the storage unit. The bit line input terminal of the four-transistor storage unit is connected to the bit line BL, its inverted bit line input terminal is connected to the inverted bit line BLB, and its word line input terminal is connected to the word line WL. The four-transistor storage unit is used for reading, writing, and storing weight values. The four-transistor calculation unit is used for multiplying the input data by the weight value; the input data is determined by the calculation word line CWL and the inverted calculation word line CWLB.
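The division of labor described in [0040] can be illustrated with a minimal behavioral sketch in Python. It is not a circuit model and is not taken from the patent. The class `BitUnit`, the helper `decode_input`, the single-bit weight, and in particular the mapping from (CWL, CWLB) to an input value of +1, -1, or 0 are all assumptions made for illustration; the text only states that the input data is determined by CWL and CWLB.

```python
from dataclasses import dataclass
from typing import Optional


def decode_input(cwl: int, cwlb: int) -> int:
    """Map the two calculation word lines to an input value.

    ASSUMPTION: this ternary encoding is hypothetical. The source only
    says the input is determined by CWL and CWLB, not how.
    """
    if (cwl, cwlb) == (1, 0):
        return 1
    if (cwl, cwlb) == (0, 1):
        return -1
    if (cwl, cwlb) == (0, 0):
        return 0
    raise ValueError("CWL and CWLB asserted together is undefined in this sketch")


@dataclass
class BitUnit:
    """Behavioral stand-in for one storage unit plus one calculation unit."""

    weight: int = 0  # stored weight bit (assumed to be 0 or 1)

    def write(self, wl: int, bl: int, blb: int) -> None:
        # The storage unit is only written when WL is asserted and
        # BL/BLB carry complementary values.
        if wl == 1 and bl != blb:
            self.weight = bl

    def read(self, wl: int) -> Optional[int]:
        # The stored weight is only visible when WL is asserted.
        return self.weight if wl == 1 else None

    def multiply(self, cwl: int, cwlb: int) -> int:
        # The calculation unit multiplies the decoded input by the weight.
        return decode_input(cwl, cwlb) * self.weight


unit = BitUnit()
unit.write(wl=1, bl=1, blb=0)            # store weight 1
product = unit.multiply(cwl=0, cwlb=1)   # assumed input -1 times weight 1
```

Keeping `write`/`read` on WL and `multiply` on CWL/CWLB mirrors the separation between the storage path and the calculation path in the paragraph above.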

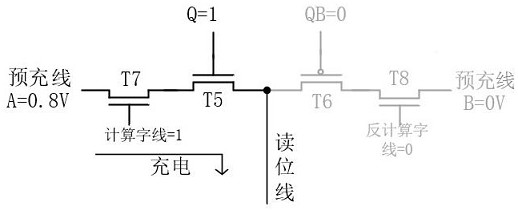

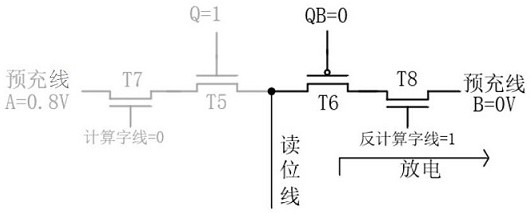

[0041] The four-transistor calculation unit includes transistor T5, transistor T6, transistor...

Embodiment 2

[0059] As shown in Figure 4, the present invention also provides an in-memory computing device, which includes: a bit line / pre-storage decoding driver ①, a word line decoding driver ③, a calculation word line decoding driver ②, an in-memory computing array, and n analog-to-digital converters ⑤. The in-memory computing array includes m×n of the above-mentioned in-memory computing bit units ④ arranged in an array.

[0060] The n bit line output terminals of the bit line / pre-storage decoding driver ① are respectively connected to the n bit lines BL; its 2n precharge line output terminals are respectively connected to the n precharge lines A and the n precharge lines B; and its n inverted bit line output terminals are respectively connected to the n inverted bit lines BLB. The m word line output terminals of the word line decoding driver ③ are r...
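As a rough illustration of how an m×n array with n analog-to-digital converters could produce one result per column, the following Python sketch sums the per-unit products down each column and quantizes each sum. The function names `column_sums` and `adc`, the column-wise accumulation, and the truncating quantizer with its range and bit width are assumptions for illustration only; the excerpt does not specify how the outputs are accumulated or converted.

```python
from typing import List


def column_sums(weights: List[List[int]], inputs: List[int]) -> List[int]:
    """Sum input * weight down each of the n columns.

    weights: m rows by n columns of stored weight bits.
    inputs:  one input value per row (m values).
    ASSUMPTION: products in a column accumulate onto a shared line.
    """
    m, n = len(weights), len(weights[0])
    if len(inputs) != m:
        raise ValueError("need exactly one input per row")
    return [sum(inputs[r] * weights[r][c] for r in range(m)) for c in range(n)]


def adc(value: int, lo: int, hi: int, bits: int) -> int:
    """Truncating quantizer mapping [lo, hi] onto codes 0 .. 2**bits - 1.

    ASSUMPTION: hypothetical converter model, not the patent's circuit.
    """
    levels = 2 ** bits - 1
    clamped = min(max(value, lo), hi)
    return (clamped - lo) * levels // (hi - lo)


# 3 rows (m = 3) by 2 columns (n = 2) of weight bits.
weights = [
    [1, 0],
    [1, 1],
    [0, 1],
]
inputs = [1, 1, -1]  # one assumed input value per row

sums = column_sums(weights, inputs)
codes = [adc(s, lo=-3, hi=3, bits=3) for s in sums]  # one code per column
```

With m rows and inputs limited to -1, 0, or +1, each column sum lies in [-m, m], which is why the example sets the converter range to [-3, 3].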
