Acceleration method for exploring optimization space in deep learning compiler

A technique for accelerating optimization-space exploration in deep learning compilers, applicable to neural network learning methods, compiler construction, parser generation, and related areas. It addresses the problem that operator optimization spaces are large and that exploring them is extremely time-consuming; its effects are reduced exploration time and lower compilation overhead.
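To give a rough sense of why operator optimization spaces get large, the sketch below counts tiling configurations for a single operator. It assumes (as an illustration, not as this patent's definition of the space) that each loop of extent N is split into a fixed number of nested factors whose product is N, a common style of schedule knob in tensor compilers. The function names are hypothetical.

```python
from math import comb


def num_ordered_factorizations(n: int, parts: int) -> int:
    """Count ordered tuples (f1, ..., f_parts) of positive integers whose product is n."""
    count = 1
    remaining = n
    d = 2
    # Trial-division prime factorization of n.
    while d * d <= remaining:
        exp = 0
        while remaining % d == 0:
            remaining //= d
            exp += 1
        if exp:
            # Distribute `exp` identical prime factors over `parts` slots (stars and bars).
            count *= comb(exp + parts - 1, parts - 1)
        d += 1
    if remaining > 1:
        # One leftover prime factor can go into any of the `parts` slots.
        count *= parts
    return count


def tiling_space_size(loop_extents, parts: int) -> int:
    """Size of the (hypothetical) tiling space: product of per-loop split choices."""
    size = 1
    for extent in loop_extents:
        size *= num_ordered_factorizations(extent, parts)
    return size


if __name__ == "__main__":
    # Loops i, j, k of a 1024 x 1024 x 1024 matrix multiplication.
    print(tiling_space_size([1024, 1024, 1024], parts=2))  # 1331
    print(tiling_space_size([1024, 1024, 1024], parts=4))  # 23393656
```

Under these assumptions, two-level splitting already gives 11³ = 1,331 candidates, and four-level splitting gives 286³ = 23,393,656 candidates for one operator, before loop ordering, unrolling, or vectorization choices are considered. Exhaustive or search-based exploration cost grows with this count, which is the cost the method aims to cut.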

Embodiment Construction

[0045] Specific embodiments of the present invention will be described in detail below in conjunction with the accompanying drawings. It should be understood that the specific embodiments described here are only used to illustrate and explain the present invention, and are not intended to limit the present invention.

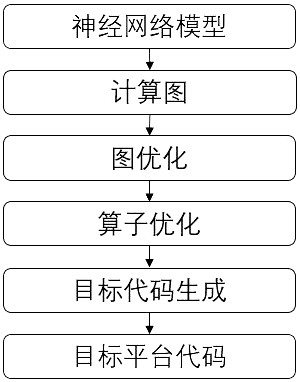

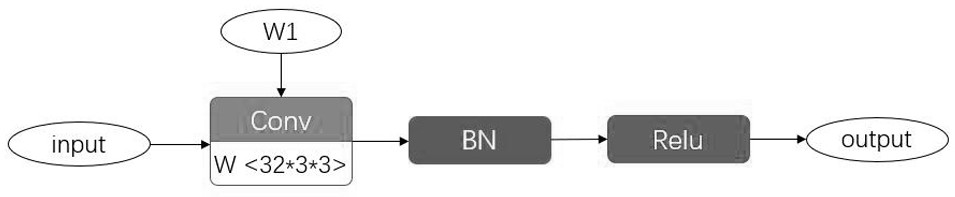

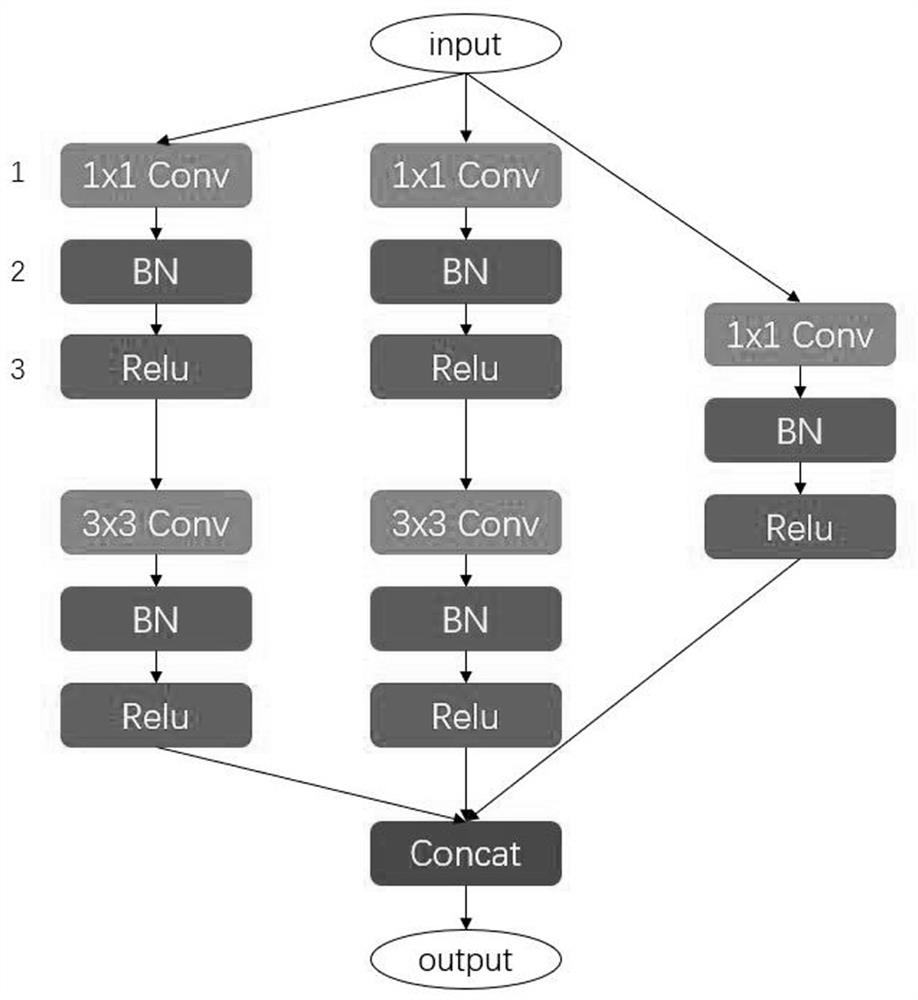

[0046] As shown in Figure 1, this acceleration method for exploring the optimization space in deep learning compilers aims to greatly reduce the time the compiler spends exploring the optimization space of operators, at the cost of an acceptable increase in the inference time of the deep learning network. The method first abstracts the neural network into a computational graph. Next, graph optimization is performed on the computational graph, and an optimization space is defined for each operator in the optimized graph. Then, based on the operators carrying this optimization-space information, a calculation method f...
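The first two steps described above (abstracting the network into a computational graph, then optimizing the graph and attaching an optimization space to each remaining operator) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented method: the sequential layer list, the `Op` class, the choice of element-wise operator fusion as the graph optimization, and the use of per-loop tile sizes as the optimization space are all hypothetical. The truncated later step of the method is not reproduced.

```python
from dataclasses import dataclass, field
from itertools import product

ELEMENTWISE = {"relu", "add", "bias_add"}  # hypothetical set of fusible element-wise ops


@dataclass
class Op:
    name: str
    kind: str
    inputs: list                      # names of producer ops
    loop_extents: tuple = ()          # loop bounds used to build the optimization space
    fused: list = field(default_factory=list)


def build_graph(layers):
    """Step 1 (sketch): abstract a sequential layer list into a computational graph."""
    graph, prev = {}, None
    for name, kind, extents in layers:
        graph[name] = Op(name, kind, [prev] if prev else [], tuple(extents))
        prev = name
    return graph


def fuse_elementwise(graph):
    """Step 2a (sketch): fold a single-input element-wise op into its sole-consumer producer."""
    consumers = {name: [] for name in graph}
    for op in graph.values():
        for src in op.inputs:
            consumers[src].append(op.name)
    for name in list(graph):
        op = graph[name]
        if op.kind not in ELEMENTWISE or len(op.inputs) != 1:
            continue
        producer = graph[op.inputs[0]]
        if producer.kind in ELEMENTWISE or len(consumers[producer.name]) != 1:
            continue
        producer.fused.append(name)
        # Rewire ops that read from the fused op so they read from the producer instead.
        for c in consumers[name]:
            graph[c].inputs = [producer.name if s == name else s for s in graph[c].inputs]
            consumers[producer.name].append(c)
        consumers[producer.name].remove(name)
        del graph[name]
    return graph


def divisors(n):
    return [d for d in range(1, n + 1) if n % d == 0]


def define_space(op):
    """Step 2b (sketch): the space is every combination of one inner tile size per loop."""
    return list(product(*(divisors(e) for e in op.loop_extents)))


LAYERS = [
    ("conv1", "conv2d", (64, 56, 56)),
    ("relu1", "relu", ()),
    ("conv2", "conv2d", (128, 28, 28)),
    ("relu2", "relu", ()),
]

if __name__ == "__main__":
    graph = fuse_elementwise(build_graph(LAYERS))
    for op in graph.values():
        print(op.name, op.fused, len(define_space(op)))
```

Running it prints `conv1 ['relu1'] 448` and `conv2 ['relu2'] 288`: after fusion only the two convolutions remain, and each carries its own optimization space that a later step would explore.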
