Dynamic vision sensor-oriented brain-like gesture sequence identification method

A visual sensor and sequence recognition technology, applied to neural learning methods, character and pattern recognition, instruments, and related fields. It addresses problems such as the lack of biological interpretability and the inability to find extreme points.

Examples

Embodiment 1

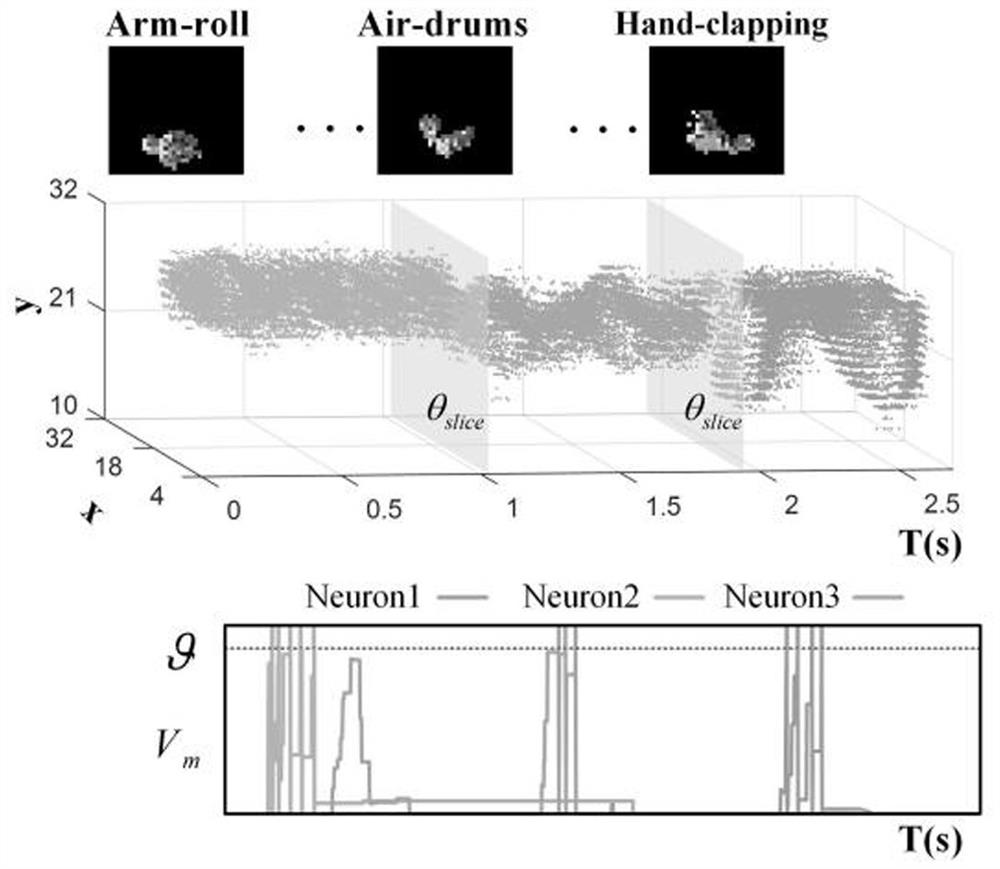

[0145] The three most similar gestures in the GESTURE-DVS data set are selected and randomly combined into gesture sequences, which are then recognized using the recognition method described above. The recognition results are shown in Figure 3. As can be seen from the figure, the present invention successfully recognizes each gesture in the gesture sequence.
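The evaluation setup in [0145] can be illustrated with a minimal sketch. It assumes the three similar gesture classes are available as lists of sample identifiers; the class names, sample identifiers, and both helper functions below are hypothetical and are not taken from the patent or from the GESTURE-DVS data set.

```python
import random


def make_gesture_sequence(samples_by_class, length, seed=None):
    """Randomly draw `length` (label, sample) pairs from the given classes.

    `samples_by_class` maps a class label to a list of sample identifiers.
    Both the labels and the identifiers are placeholders for illustration.
    """
    rng = random.Random(seed)
    labels = sorted(samples_by_class)
    sequence = []
    for _ in range(length):
        label = rng.choice(labels)                     # pick one of the gesture classes
        sample = rng.choice(samples_by_class[label])   # pick one recording of that class
        sequence.append((label, sample))
    return sequence


def sequence_accuracy(predicted, expected):
    """Fraction of positions at which the predicted label matches the expected one."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    if not expected:
        raise ValueError("sequences must not be empty")
    hits = sum(1 for p, e in zip(predicted, expected) if p == e)
    return hits / len(expected)


if __name__ == "__main__":
    # Hypothetical class names and sample identifiers.
    samples = {
        "gesture_a": ["a0", "a1"],
        "gesture_b": ["b0", "b1"],
        "gesture_c": ["c0", "c1"],
    }
    seq = make_gesture_sequence(samples, length=5, seed=0)
    print(seq)
```

In a real experiment each sample identifier would stand for an event stream, and the streams would be joined in time before being passed to the recognizer; that step is omitted here because the source does not describe it.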

[0146] Further analysis of the model's performance shows that the present invention has the following advantages:

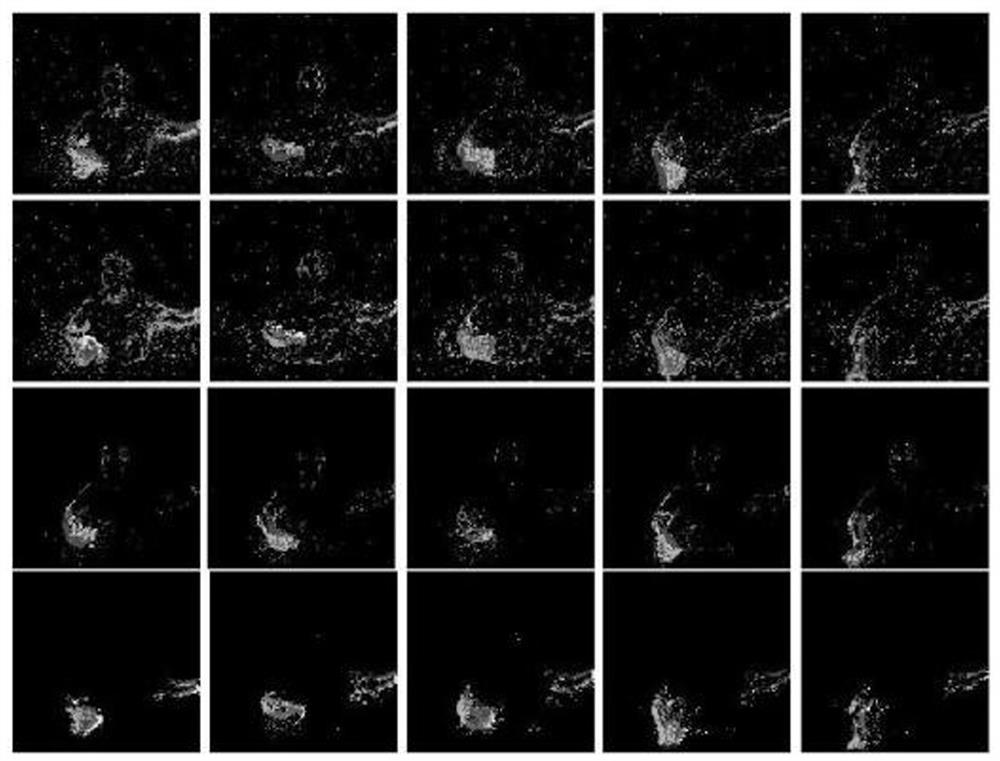

[0147] (1) Noise robustness: In Figure 4, the first row shows the frames reconstructed from the original event stream, the second row shows the method based on the time plane, the third row shows the method based on the denoising time plane, and the fourth row shows the pulse space-time plane-based method proposed in the present invention. As shown in the fourth row of Figure 4, the feature extraction method proposed in the present invention can not only effectively filter the noise events in the event stream, b...
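For orientation, the time-plane baseline in the second row of Figure 4 can be sketched as follows. This assumes that "time plane" refers to what event-camera literature commonly calls a time surface, in which each pixel holds an exponentially decayed value of its most recent event timestamp. The function name, the event tuple layout `(x, y, t)`, and the decay constant `tau` are assumptions made for illustration. This is not the pulse space-time plane method of the present invention, which the visible text does not describe in detail.

```python
import math


def time_surface(events, height, width, t_ref, tau):
    """Build a simple exponentially decayed time surface at reference time t_ref.

    events: iterable of (x, y, t) tuples (assumed layout, polarity ignored).
    Pixels with no event at or before t_ref get the value 0.0.
    """
    # Most recent event timestamp per pixel; None means "no event seen yet".
    last_t = [[None] * width for _ in range(height)]
    for x, y, t in events:
        if t <= t_ref and (last_t[y][x] is None or t > last_t[y][x]):
            last_t[y][x] = t

    # Recent events map to values near 1, old events decay towards 0.
    return [
        [0.0 if t is None else math.exp((t - t_ref) / tau) for t in row]
        for row in last_t
    ]


if __name__ == "__main__":
    events = [(1, 0, 100.0), (2, 1, 50.0), (1, 0, 150.0), (0, 0, 400.0)]
    surface = time_surface(events, height=2, width=3, t_ref=200.0, tau=50.0)
    for row in surface:
        print(row)
```

Because every event, including an isolated noise event, writes a timestamp into this surface, a plain time surface carries noise through to the features, which is consistent with the contrast Figure 4 draws between the baseline rows and the proposed method.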
