Tibetan-Chinese neural machine translation method integrating syntactic structure

A machine translation technology based on syntactic structure, applied in natural language translation, neural architectures, biological neural network models, and related fields. It addresses the problems of syntactic structure differences and training difficulty.

Examples

Embodiment 1

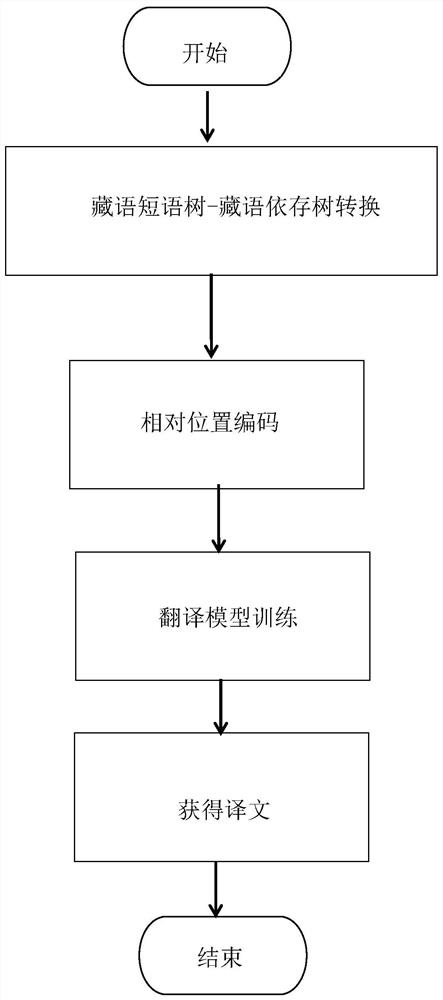

[0152] As shown in Figure 1, a Tibetan-Chinese neural machine translation method that integrates syntactic structure includes the following steps:

[0153] Step 1: Convert Tibetan phrase trees into Tibetan dependency trees.

[0154] Specifically, this step includes annotating the Tibetan phrase trees, designing a Tibetan phrase table and a dependency table, setting the priority of each dependency relation, and then converting the phrase trees into dependency trees automatically with rules. The conversion process is the same as in Step 1.1.
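The rule-based conversion in Step 1 can be illustrated with a classic head-table approach. This is a minimal sketch under stated assumptions, not the patent's actual tables: the phrase labels (`S`, `VP`, `NP`, and `KP` for a case-marked phrase), the contents of the hypothetical `HEAD_TABLE`, `DEP_TABLE` and `RELATION_PRIORITY`, and the relation names are all illustrative. Each phrase selects a head child from the head table (searching from the right for clauses and verb phrases, since Tibetan is verb-final); the head word of every non-head child then depends on the phrase's head word, and when several dependency rules match the same arc, the relation with the highest priority wins.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    label: str                                   # phrase label or POS tag
    children: list[Node] = field(default_factory=list)
    index: Optional[int] = None                  # token position (leaves only)


# Hypothetical head table: phrase label -> (search direction, head candidates).
HEAD_TABLE = {
    "S": ("right", ["VP", "S"]),
    "VP": ("right", ["V", "VP"]),
    "NP": ("right", ["N", "NP"]),
    "KP": ("left", ["NP", "N"]),   # case phrase: noun (phrase) + case particle
}

# Hypothetical dependency table: (parent label, dependent label, relation).
# "*" is a wildcard, so several rules can match the same arc.
DEP_TABLE = [
    ("*", "PU", "punct"),
    ("KP", "CASE", "case"),
    ("S", "KP", "nsubj"),
    ("VP", "NP", "obj"),
    ("VP", "KP", "obl"),
    ("*", "*", "dep"),
]

# Hypothetical priorities: among matching rules, the highest value wins.
RELATION_PRIORITY = {"punct": 5, "case": 4, "nsubj": 3, "obj": 2, "obl": 1, "dep": 0}


def relation_for(parent: str, dependent: str) -> str:
    """Pick the highest-priority relation whose rule matches this arc."""
    matches = [rel for p, d, rel in DEP_TABLE
               if p in (parent, "*") and d in (dependent, "*")]
    return max(matches, key=RELATION_PRIORITY.__getitem__)


def find_head(node: Node) -> int:
    """Return the position of the head child according to HEAD_TABLE."""
    direction, candidates = HEAD_TABLE.get(node.label, ("right", []))
    order = list(range(len(node.children)))
    if direction == "right":
        order.reverse()
    for cand in candidates:
        for i in order:
            if node.children[i].label == cand:
                return i
    return order[0]  # fallback: first child in the search direction


def convert(node: Node, arcs: dict[int, tuple[int, str]]) -> int:
    """Post-order walk: return the head token of `node`, recording arcs."""
    if not node.children:
        return node.index
    child_heads = [convert(child, arcs) for child in node.children]
    h = find_head(node)
    for i, child in enumerate(node.children):
        if i != h:
            arcs[child_heads[i]] = (child_heads[h],
                                    relation_for(node.label, child.label))
    return child_heads[h]


def phrase_to_dependency(root: Node, n_tokens: int) -> list[tuple[int, str]]:
    """Return (head index, relation) per token; the root's head is -1."""
    arcs: dict[int, tuple[int, str]] = {}
    root_token = convert(root, arcs)
    arcs[root_token] = (-1, "root")
    return [arcs[i] for i in range(n_tokens)]


# Hypothetical toy tree (not the tree in Figure 2): N CASE N V PU
tree = Node("S", [
    Node("KP", [Node("N", index=0), Node("CASE", index=1)]),
    Node("VP", [Node("NP", [Node("N", index=2)]), Node("V", index=3)]),
    Node("PU", index=4),
])
print(phrase_to_dependency(tree, 5))
# [(3, 'nsubj'), (0, 'case'), (3, 'obj'), (-1, 'root'), (3, 'punct')]
```

Keeping the wildcard fallback rule `("*", "*", "dep")` at the lowest priority guarantees that every arc receives some relation, while more specific rules override it; this is one simple way to realize the "priority of the dependency relationship" described above.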

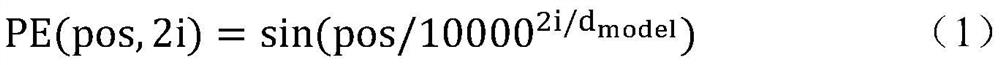

[0155] Step 2: Relative position encoding.

[0156] Specifically, this step includes training a dependency parsing model and generating the Tibetan dependency tree for the Tibetan corpus, so as to obtain position representations of the Tibetan corpus together with its dependency relations, wherein generating the Tibetan dependency tree corresponding to the Tibetan corpus is the ...
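The description of Step 2 is truncated here, so the exact encoding cannot be recovered from it. The following is a minimal sketch of one common way to derive relative positions from a dependency tree, assuming that the distance between two tokens is their path length in the tree (clipped to `max_dist` and signed by word order) and that the resulting indices select learned vectors in relative self-attention in the style of Shaw et al. (2018). The function names, the sign convention, and `max_dist` are hypothetical.

```python
import math
from collections import deque


def tree_distances(heads: list[int]) -> list[list[int]]:
    """All-pairs path length in a dependency tree, treating arcs as undirected.

    heads[i] is the index of token i's head, or -1 for the root.
    """
    n = len(heads)
    adj: list[list[int]] = [[] for _ in range(n)]
    for child, head in enumerate(heads):
        if head >= 0:
            adj[child].append(head)
            adj[head].append(child)
    dist = []
    for src in range(n):          # one breadth-first search per token
        d = [-1] * n
        d[src] = 0
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if d[v] < 0:
                    d[v] = d[u] + 1
                    queue.append(v)
        dist.append(d)
    return dist


def relative_index(dist: list[list[int]], max_dist: int) -> list[list[int]]:
    """Clip tree distances to max_dist, sign them by word order
    (negative if token j precedes token i), and shift into [0, 2*max_dist]."""
    n = len(dist)
    return [[(-1 if j < i else 1) * min(dist[i][j], max_dist) + max_dist
             for j in range(n)] for i in range(n)]


def relative_attention_logits(q, k, rel_emb, rel_idx):
    """Shaw-style logits: e_ij = q_i . (k_j + a_ij) / sqrt(d),
    where a_ij = rel_emb[rel_idx[i][j]] is a learned relative-position vector."""
    n, d = len(q), len(q[0])
    return [[sum(qv * (kv + av)
                 for qv, kv, av in zip(q[i], k[j], rel_emb[rel_idx[i][j]]))
             / math.sqrt(d)
             for j in range(n)] for i in range(n)]


heads = [3, 0, 3, -1, 3]            # heads for a hypothetical 5-token sentence
dist = tree_distances(heads)
print(dist[1])                      # [1, 0, 3, 2, 3]
print(relative_index(dist, 2)[1])   # [1, 2, 4, 4, 4]
```

Unlike linear offsets, tree distances place a word next to its syntactic head even when many tokens separate them on the surface, which is the kind of structural signal the method aims to inject. In a real model, `rel_emb` would be a trainable table of `2 * max_dist + 1` vectors.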

Embodiment 2

[0161] This embodiment uses a concrete example to describe in detail the operating steps of the Tibetan-Chinese neural machine translation method that integrates syntactic structures according to the present invention.

[0162] The processing flow of the Tibetan-Chinese neural machine translation method that integrates syntactic structures is shown in Figure 1. As Figure 1 shows, the method includes the following steps:

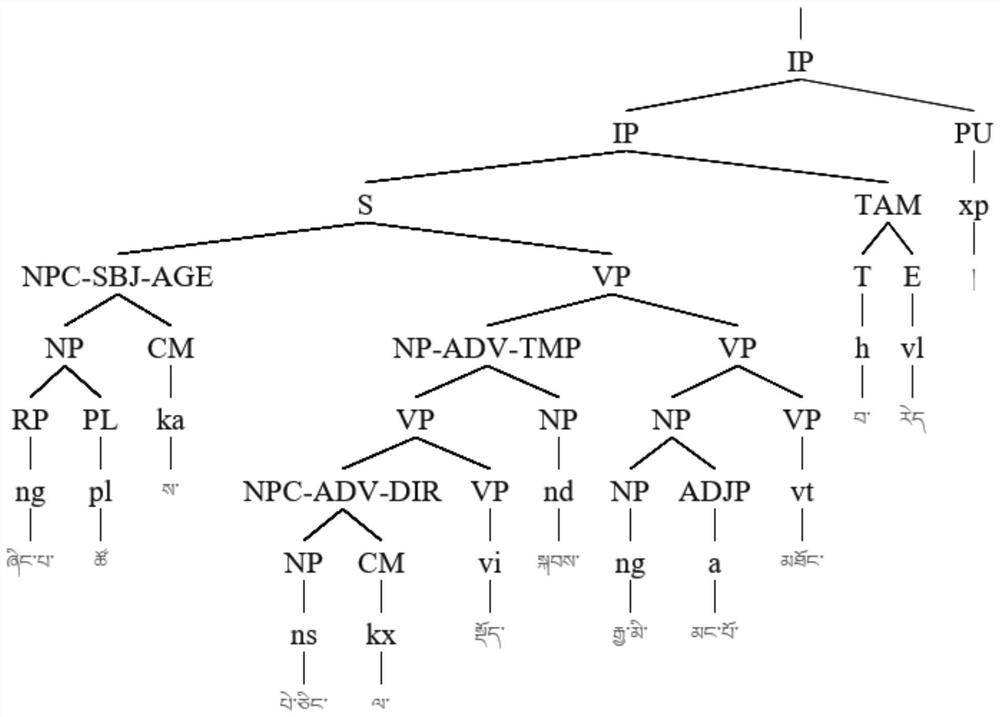

[0163] Step 1: Convert the Tibetan phrase tree into a dependency tree, as shown in Figure 2. The example sentence translates into Chinese as "the peasants saw many Han people when they were in Beijing". From left to right, the Chinese glosses and labels of its tokens are: farmers, [agentive case], Beijing, [dative case], when staying, Han people, see, many, [tense-aspect], [tense-aspect], [punctuation mark].

[0164] In the process of traversing the binary phrase tr...
