Cross-modal retrieval method based on modal specificity and shared feature learning

A cross-modal retrieval technique based on feature learning, applicable to neural network learning methods, database retrieval, and digital information retrieval. It addresses problems in existing methods, such as rarely combining modality-specific information with modality-shared information and losing effective information, and achieves good semantic discrimination while reducing the distribution differences between modalities.

Examples

Embodiment 1

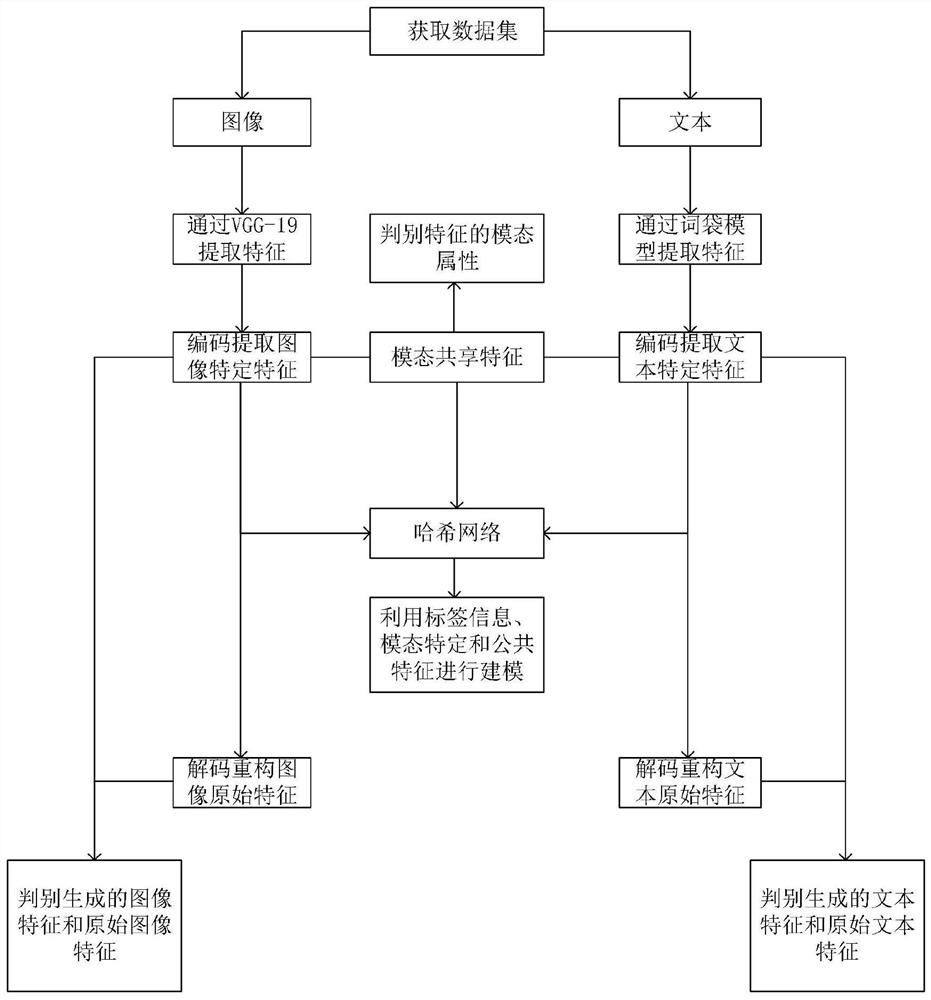

[0054] Referring to Figure 1, this embodiment provides a cross-modal retrieval method based on modality-specific and shared feature learning, comprising the following steps:

[0055] Step S1: obtaining a cross-modal retrieval dataset and dividing it into a training set and a test set;

[0056] Specifically, in this embodiment, the datasets, obtained through conventional channels such as the Internet, are Wikipedia and NUS-WIDE; both consist of labeled image-text pairs.
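Step S1 can be sketched as follows. This is a minimal illustration, not the embodiment's code: the `ImageTextPair` record, the `split_dataset` helper, the 0.8 train ratio, and the fixed seed are all assumptions, since the text does not state how the split is made. The point it shows is that each image stays with its paired text and label, so both modalities are split identically.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ImageTextPair:
    image_path: str  # hypothetical field: path to the image file
    text: str        # the text document paired with the image
    label: int       # semantic category label shared by both modalities


def split_dataset(
    pairs: List[ImageTextPair], train_ratio: float = 0.8, seed: int = 0
) -> Tuple[List[ImageTextPair], List[ImageTextPair]]:
    """Shuffle labeled pairs and split them into train and test sets.

    Splitting whole pairs (not images and texts separately) keeps every
    image aligned with its text. The ratio and seed are assumptions.
    """
    if not 0.0 < train_ratio < 1.0:
        raise ValueError("train_ratio must be in (0, 1)")
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


pairs = [ImageTextPair(f"img_{i}.jpg", f"text {i}", i % 10) for i in range(100)]
train, test = split_dataset(pairs)
print(len(train), len(test))  # → 80 20
```

A fixed seed keeps the split reproducible across runs; where a benchmark already provides an official train/test split, that split would typically be used instead.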

[0057] Step S2: performing feature extraction on the text and images in the training set;

[0058] Specifically, in this embodiment, image features are extracted from the fully connected layer in the seventh part of the VGG-19 model, and text features are extracted with a bag-of-words model.
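The bag-of-words text features of Step S2 can be illustrated with a small, dependency-free sketch. The tokenizer, the frequency-based vocabulary cut-off (`max_size`), and the use of raw term counts rather than weighted values are assumptions; the embodiment names only the bag-of-words model. `tokenize`, `build_vocabulary`, and `bag_of_words` are hypothetical helper names.

```python
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    # Deliberately simple tokenizer (assumption): lowercase, keep alphabetic runs.
    return re.findall(r"[a-z]+", text.lower())


def build_vocabulary(docs: List[str], max_size: int = 1000) -> Dict[str, int]:
    # Keep the max_size most frequent tokens; ties are broken alphabetically
    # so the word-to-index mapping is deterministic.
    counts = Counter(tok for doc in docs for tok in tokenize(doc))
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:max_size]
    return {word: idx for idx, (word, _) in enumerate(ranked)}


def bag_of_words(text: str, vocab: Dict[str, int]) -> List[int]:
    # Term-count vector of length len(vocab); out-of-vocabulary tokens are dropped.
    vec = [0] * len(vocab)
    for tok in tokenize(text):
        idx = vocab.get(tok)
        if idx is not None:
            vec[idx] += 1
    return vec


vocab = build_vocabulary(["The cat sat on the mat", "A dog and a cat"], max_size=5)
print(vocab)                                      # → {'a': 0, 'cat': 1, 'the': 2, 'and': 3, 'dog': 4}
print(bag_of_words("the cat and the hat", vocab))  # → [0, 1, 2, 1, 0]
```

Building the vocabulary from the training documents only and reusing it for test texts keeps the text feature dimension fixed across both sets.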

[0059] In this embodiment, the VGG-19 model used includes 16 convolutional layers and 3 fully connected layers. The network structure is: the first part cons...
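The layer counts in [0059] match the standard VGG-19 configuration, which the sketch below encodes and checks. The description of the network's "parts" is truncated in this text, so the grouping used here (each of the five pooling-terminated convolutional blocks as one part, then each fully connected layer as its own part) is an assumption; under it, the seventh part is the second fully connected layer, commonly called fc7, with a 4096-dimensional output. `split_into_parts` is a hypothetical helper, not code from the embodiment.

```python
from typing import List, Tuple, Union

# Standard VGG-19 convolutional configuration: integers are the output channels
# of 3x3 convolutional layers, "M" marks a 2x2 max-pooling layer.
VGG19_CONV_CFG: List[Union[int, str]] = [
    64, 64, "M",
    128, 128, "M",
    256, 256, 256, 256, "M",
    512, 512, 512, 512, "M",
    512, 512, 512, 512, "M",
]
# Three fully connected layers: fc6, fc7, and the 1000-way ImageNet classifier fc8.
VGG19_FC_SIZES: List[int] = [4096, 4096, 1000]


def split_into_parts(
    conv_cfg: List[Union[int, str]], fc_sizes: List[int]
) -> List[Tuple[str, List[int]]]:
    """Group layers into numbered parts (hypothetical grouping, see lead-in):
    each conv block ending in a pooling layer is one part, then each
    fully connected layer is its own part."""
    parts: List[Tuple[str, List[int]]] = []
    block: List[int] = []
    for item in conv_cfg:
        if item == "M":
            parts.append(("conv", block))  # pooling closes the current block
            block = []
        else:
            block.append(int(item))
    for size in fc_sizes:
        parts.append(("fc", [size]))
    return parts


parts = split_into_parts(VGG19_CONV_CFG, VGG19_FC_SIZES)
num_conv = sum(len(layers) for kind, layers in parts if kind == "conv")
num_fc = sum(len(layers) for kind, layers in parts if kind == "fc")
print(num_conv, num_fc, parts[6])  # → 16 3 ('fc', [4096])
```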
