Pedestrian re-identification method based on spatial reverse attention network

A pedestrian re-identification and spatial attention technology, applied in neural learning methods, character and pattern recognition, biological neural network models, etc., with the effect of improving effectiveness and reliability

Embodiment 1

[0039] Person re-identification aims to find the same pedestrian across different cameras. Although deep learning has greatly improved person re-identification, it remains a challenging task. In recent years, attention mechanisms have been widely shown to work well on person re-identification tasks, but the combined effect of different types of attention mechanisms (such as spatial attention and self-attention) remains underexplored.

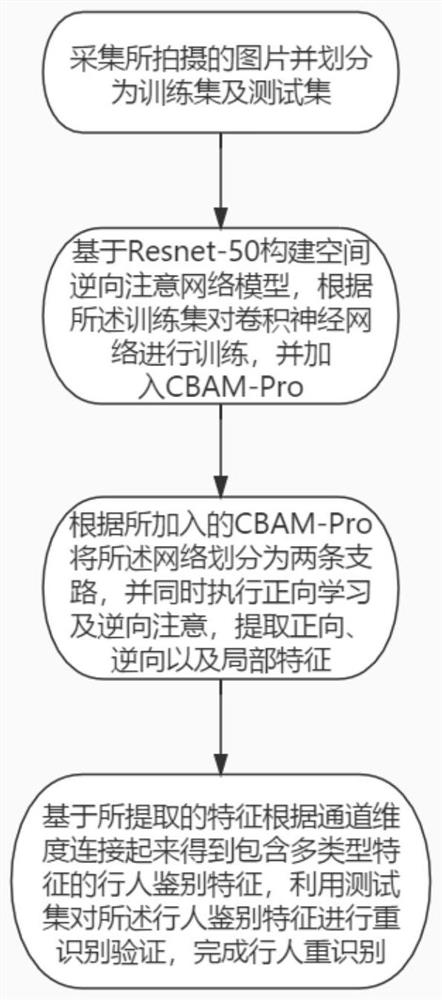

[0040] Referring to Figures 1 to 3, this embodiment of the present invention provides a pedestrian re-identification method based on a spatial reverse attention network, including:

[0041] S1: Collect the captured pictures and divide them into training set and test set;

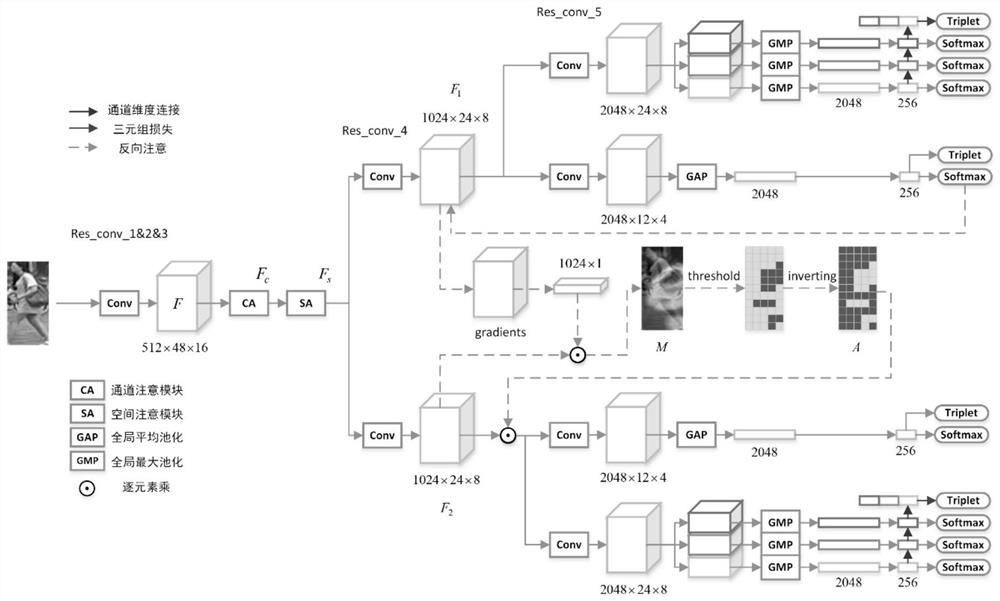

[0042] S2: Construct a spatial reverse attention network model based on ResNet-50, train the convolutional neural network on the training set, and add CBAM-Pro;
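The split in step S1 can be sketched as follows. This is a minimal illustration, not the procedure of the present invention: it assumes each picture is labelled with a pedestrian identity and that the two sets share no identity, as is usual for re-identification benchmarks. The function name `split_by_identity`, the `train_fraction` parameter, and the file names are hypothetical.

```python
import random


def split_by_identity(samples, train_fraction=0.5, seed=0):
    """Split (image_path, person_id) pairs so that no identity
    appears in both the training set and the test set."""
    # Collect the distinct identities and shuffle them reproducibly.
    ids = sorted({pid for _, pid in samples})
    random.Random(seed).shuffle(ids)

    # The first part of the shuffled identities goes to training.
    n_train = int(len(ids) * train_fraction)
    train_ids = set(ids[:n_train])

    train = [(path, pid) for path, pid in samples if pid in train_ids]
    test = [(path, pid) for path, pid in samples if pid not in train_ids]
    return train, test


# Hypothetical data: 12 pictures of 4 pedestrians.
samples = [(f"img_{i}.jpg", i % 4) for i in range(12)]
train, test = split_by_identity(samples)
```

Splitting on identities rather than on individual pictures keeps the test pedestrians unseen during training.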

[0043] It should be noted that th...

Embodiment 2

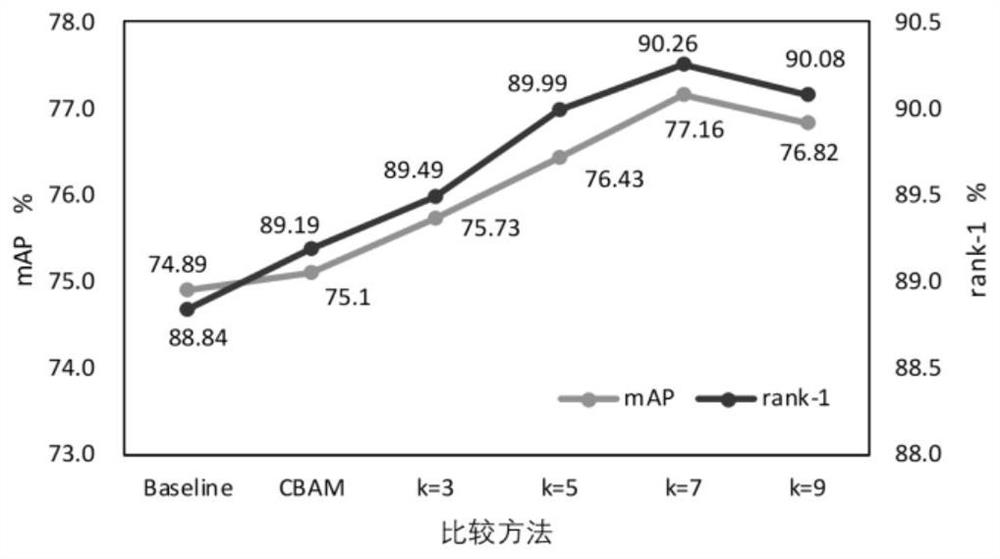

[0083] To verify the technical effect of this method, this embodiment runs a comparative test between the traditional technical scheme and the method of the present invention, and compares the test results to demonstrate the actual effect of this method.

[0084] In this embodiment, experiments are carried out on the three most commonly used datasets for pedestrian re-identification: Market-1501, DukeMTMC-reID, and CUHK03. Results are evaluated using the rank-1 matching rate (the probability that the first returned match is correct) and mean average precision (mAP).
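The two metrics can be illustrated with a short sketch. It assumes a query-by-gallery distance matrix and identity labels as plain Python lists; the function name `evaluate` and the toy values are hypothetical. The standard benchmark protocols also discard gallery images taken by the same camera as the query, which this simplified version omits.

```python
def evaluate(dist, query_ids, gallery_ids):
    """Return (rank1, mAP) for a query-by-gallery distance matrix."""
    rank1_hits = 0
    ap_sum = 0.0
    valid = 0
    for q, row in enumerate(dist):
        # Rank gallery items from the smallest distance to the largest.
        order = sorted(range(len(row)), key=lambda j: row[j])
        matches = [gallery_ids[j] == query_ids[q] for j in order]
        n_pos = sum(matches)
        if n_pos == 0:
            continue  # a query with no true match is skipped
        valid += 1
        rank1_hits += int(matches[0])

        # Average precision: mean of the precision at each correct match.
        hits = 0
        precision_sum = 0.0
        for rank, is_match in enumerate(matches, start=1):
            if is_match:
                hits += 1
                precision_sum += hits / rank
        ap_sum += precision_sum / n_pos
    if valid == 0:
        raise ValueError("no query has a matching gallery item")
    return rank1_hits / valid, ap_sum / valid


# Toy example: 2 queries against a gallery of 3 images.
dist = [[0.1, 0.5, 0.3],
        [0.2, 0.9, 0.4]]
rank1, mean_ap = evaluate(dist, query_ids=[1, 2], gallery_ids=[1, 2, 1])
```

In the toy example the first query ranks both of its true matches at the top, while the second finds its only true match in third place, so rank-1 is 0.5 and mAP is 2/3.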

[0085] Among them, Market-1501 includes 1501 pedestrians with different identities captured by 6 cameras. The dataset contains 32,668 pictures of individual pedestrians generated by the DPM detector, divided into non-overlapping training and testing sets. The training set contains 12,936 images of 751 pedestrians with different iden...
